1、[LG] Variance Covariance Regularization Enforces Pairwise Independence in Self-Supervised Representations

2、[LG] Is margin all you need? An extensive empirical study of active learning on tabular data

3、[LG] Images as Weight Matrices: Sequential Image Generation Through Synaptic Learning Rules

4、[LG] TEMPERA: Test-Time Prompt Editing via Reinforcement Learning

5、[LG] Canary in a Coalmine: Better Membership Inference with Ensembled Adversarial Queries

[CV] VQ3D: Learning a 3D-Aware Generative Model on ImageNet

[CV] DiffusioNeRF: Regularizing Neural Radiance Fields with Denoising Diffusion Models

[CV] Vid2Avatar: 3D Avatar Reconstruction from Videos in the Wild via Self-supervised Scene Decomposition

[CV] Text-driven Visual Synthesis with Latent Diffusion Prior

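Summary: enforcing pairwise independence in self-supervised representations with variance-covariance regularization; an empirical study of active learning with margin sampling on tabular data; sequential image generation through synaptic learning rules; test-time prompt editing via reinforcement learning; better membership inference with ensembled adversarial queries; learning a 3D-aware generative model on ImageNet; regularizing neural radiance fields with denoising diffusion models; 3D avatar reconstruction from in-the-wild videos via self-supervised scene decomposition; text-driven visual synthesis with a latent diffusion prior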

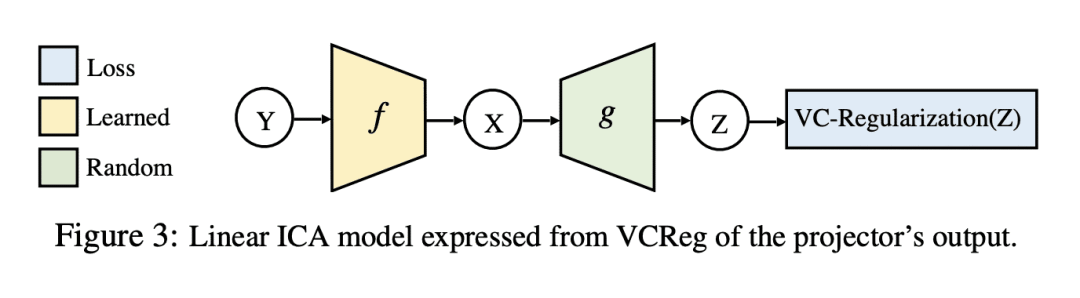

1、[LG] Variance Covariance Regularization Enforces Pairwise Independence in Self-Supervised Representations

G Mialon, R Balestriero, Y LeCun

[Meta AI]

Enforcing pairwise independence in self-supervised representations with variance-covariance regularization

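Key points:

- VCReg is a self-supervised learning strategy that regularizes the covariance matrix of the projector's output
- This regularization enforces pairwise independence between the features of the learned representation
- Pairwise independence improves performance on downstream tasks evaluated with linear probing
- VCReg can also be used for independent component analysis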

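One-sentence summary:

Variance-covariance regularization (VCReg) enforces pairwise independence between features in self-supervised learning, which in turn improves downstream task performance.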

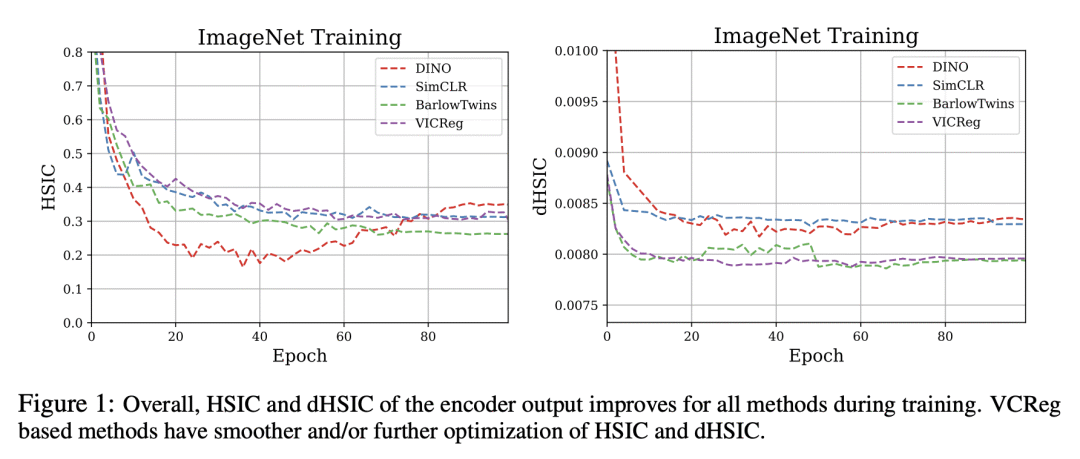

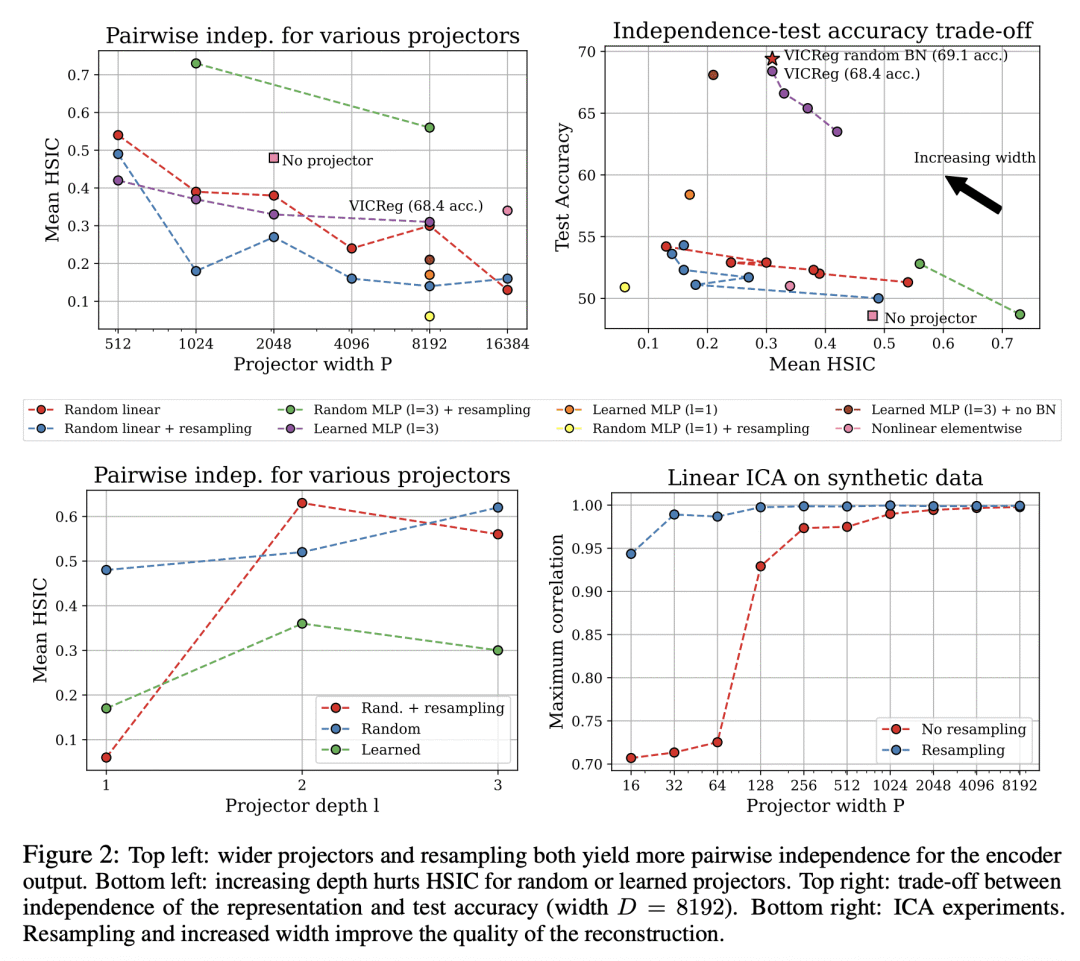

Self-Supervised Learning (SSL) methods such as VICReg, Barlow Twins or W-MSE avoid collapse of their joint embedding architectures by constraining or regularizing the covariance matrix of their projector’s output. This study highlights important properties of such a strategy, which we coin Variance-Covariance regularization (VCReg). More precisely, we show that VCReg enforces pairwise independence between the features of the learned representation. This result emerges by bridging VCReg applied on the projector’s output to kernel independence criteria applied on the projector’s input. This provides the first theoretical motivations and explanations of VCReg. We empirically validate our findings: (i) we highlight which projector characteristics favor pairwise independence, (ii) we use these findings to obtain nontrivial performance gains for VICReg, and (iii) we demonstrate that the scope of VCReg goes beyond SSL by using it to solve Independent Component Analysis. We hope that our findings will support the adoption of VCReg in SSL and beyond.
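A minimal sketch of a variance-covariance penalty in plain Python, assuming the VICReg-style form: a hinge on each feature's standard deviation (against collapse) plus the sum of squared off-diagonal covariances (against redundancy). The threshold `gamma`, `eps`, and the unit weights are illustrative assumptions, not values from the paper, and `vcreg_loss` is a hypothetical helper name.

```python
import math


def vcreg_loss(z, gamma=1.0, eps=1e-4, var_weight=1.0, cov_weight=1.0):
    """VICReg-style variance-covariance penalty (illustrative sketch).

    z: list of n samples, each a list of d projector-output features.
    """
    n, d = len(z), len(z[0])
    means = [sum(row[j] for row in z) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in z]
    # Unbiased d x d covariance matrix of the batch.
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Variance term: hinge pushing each feature's std above gamma (prevents collapse).
    var_term = sum(max(0.0, gamma - math.sqrt(cov[j][j] + eps)) for j in range(d)) / d
    # Covariance term: squared off-diagonal entries (decorrelates features).
    cov_term = sum(cov[i][j] ** 2 for i in range(d) for j in range(d) if i != j) / d
    return var_weight * var_term + cov_weight * cov_term


spread = [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]]
collapsed = [[1.0, 1.0]] * 4
correlated = [[1.0, 1.0], [-1.0, -1.0], [2.0, 2.0], [-2.0, -2.0]]
print(vcreg_loss(spread), vcreg_loss(collapsed), vcreg_loss(correlated))
```

Decorrelated features with enough spread incur no penalty, while a collapsed batch is caught by the variance term and perfectly correlated features by the covariance term.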

https://openreview.net/forum?id=Nn-7OXvqmSW

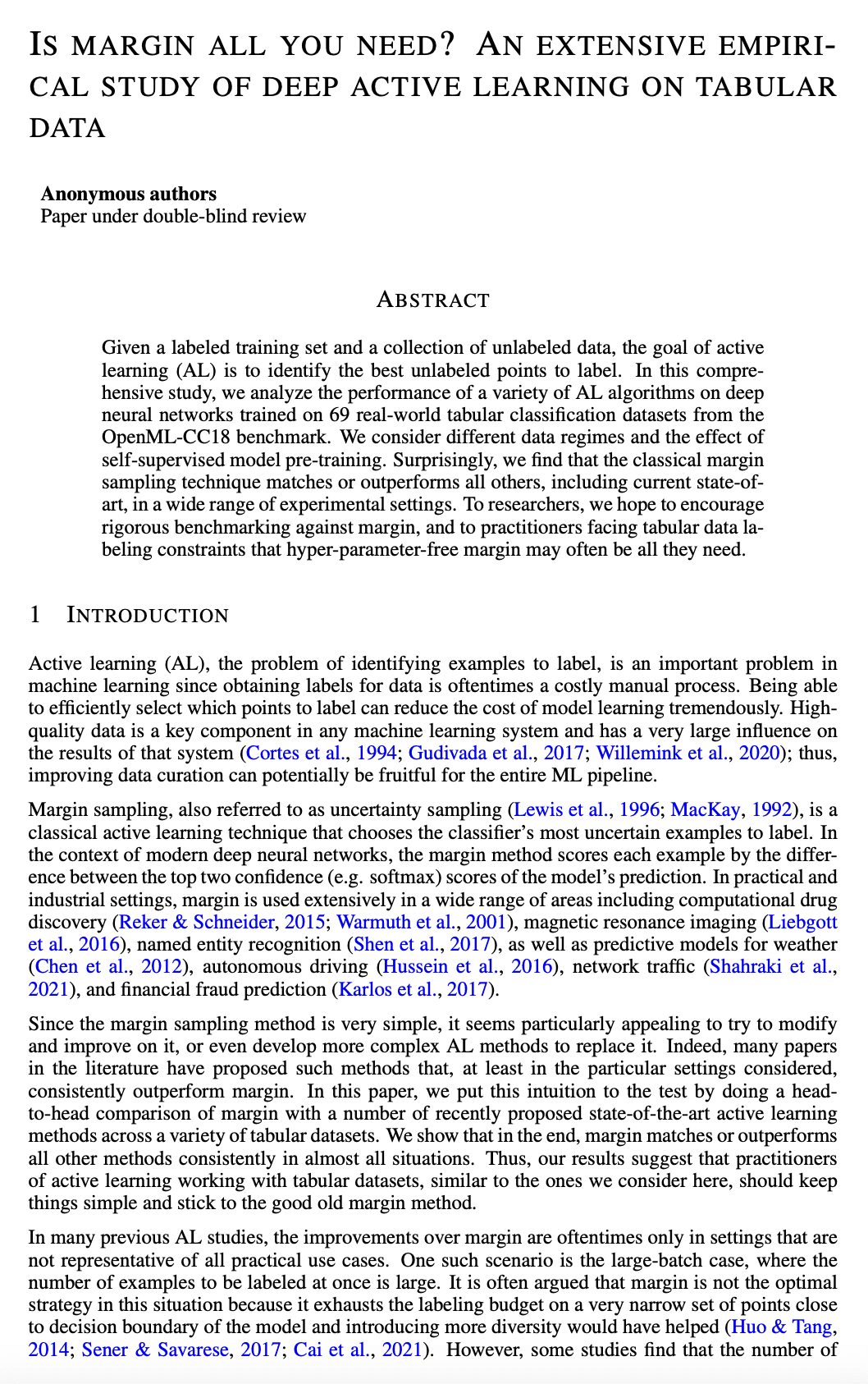

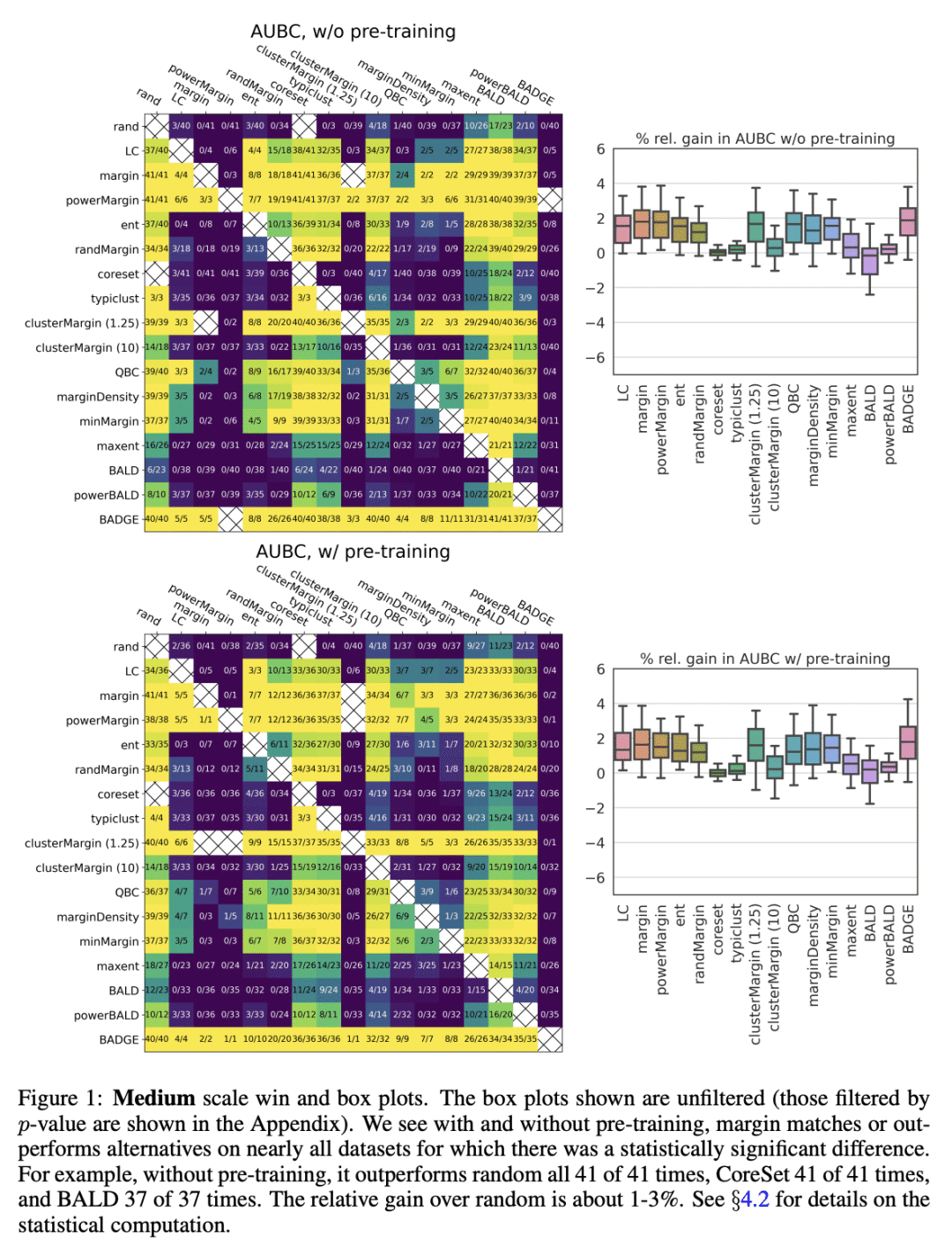

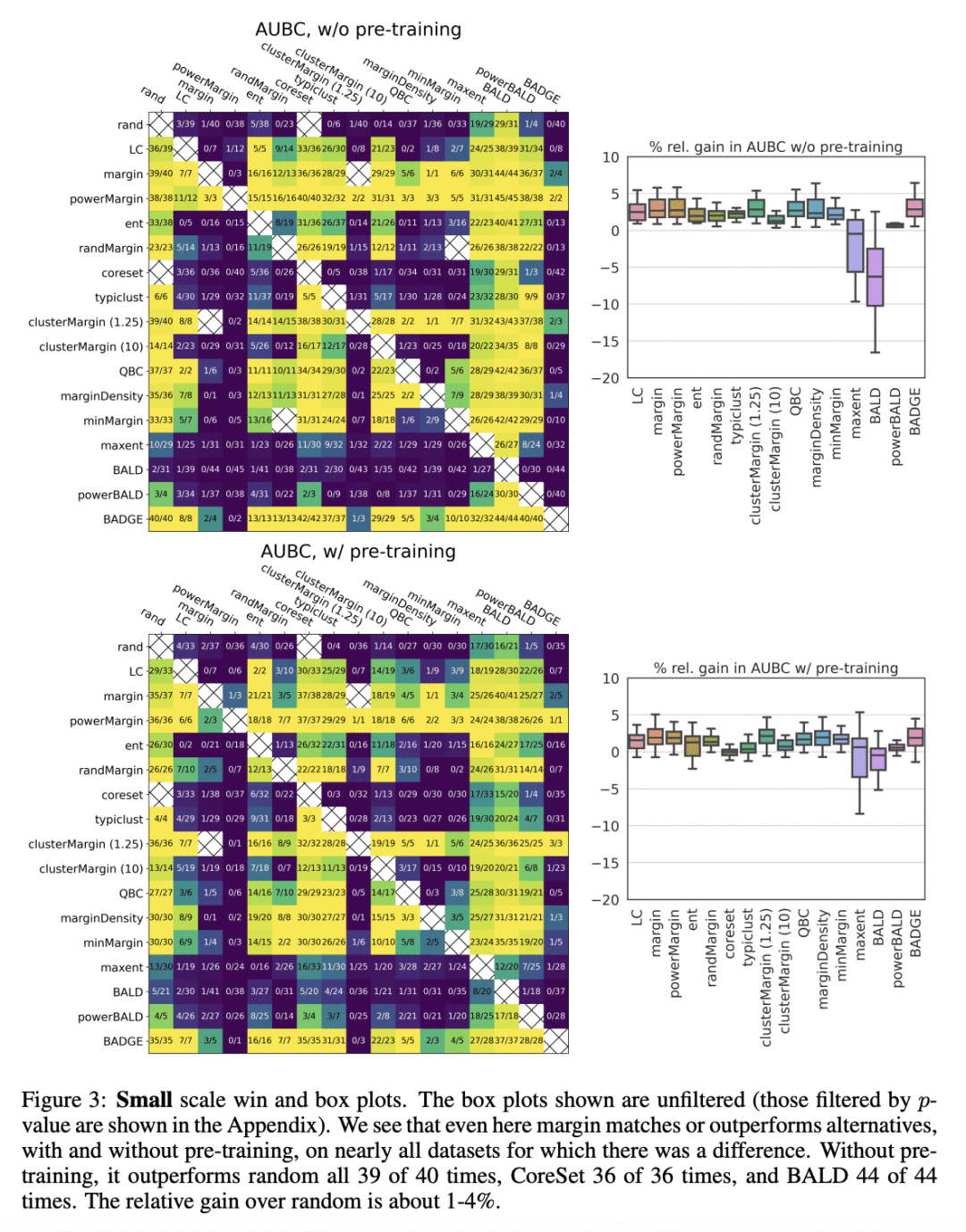

2、[LG] Is margin all you need? An extensive empirical study of active learning on tabular data

D Bahri, H Jiang, T Schuster, A Rostamizadeh

[Google]

An empirical study of active learning with margin sampling on tabular data

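Key points:

- Active learning aims to identify, from a pool of unlabeled data, the best unlabeled points to label
- The study analyzes the performance of a variety of active learning algorithms on deep neural networks trained on 69 real-world tabular classification datasets from the OpenML-CC18 benchmark
- Across a wide range of experimental settings, margin sampling matches or outperforms all other methods, including state-of-the-art active learning methods
- The results suggest that, for practitioners facing limited labeling budgets, margin sampling is a strong, effective, hyperparameter-free method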

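One-sentence summary:

Margin sampling is a hyperparameter-free active learning technique that matches or outperforms state-of-the-art methods on tabular data.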

Given a labeled training set and a collection of unlabeled data, the goal of active learning (AL) is to identify the best unlabeled points to label. In this comprehensive study, we analyze the performance of a variety of AL algorithms on deep neural networks trained on 69 real-world tabular classification datasets from the OpenML-CC18 benchmark. We consider different data regimes and the effect of self-supervised model pre-training. Surprisingly, we find that the classical margin sampling technique matches or outperforms all others, including current state-of-the-art, in a wide range of experimental settings. To researchers, we hope to encourage rigorous benchmarking against margin, and to practitioners facing tabular data labeling constraints that hyper-parameter-free margin may often be all they need.
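Margin sampling scores each unlabeled point by the gap between its two highest predicted class probabilities and queries the smallest gaps first. A minimal sketch, assuming the model's predicted probabilities for the unlabeled pool are already available as plain lists; `margin_scores` and `select_by_margin` are hypothetical helper names.

```python
def margin_scores(probs):
    """Gap between the top-two class probabilities for each unlabeled point."""
    scores = []
    for p in probs:
        top1, top2 = sorted(p, reverse=True)[:2]
        scores.append(top1 - top2)
    return scores


def select_by_margin(probs, k):
    """Indices of the k points with the smallest margin (most uncertain first)."""
    scores = margin_scores(probs)
    return sorted(range(len(probs)), key=lambda i: scores[i])[:k]


pool_probs = [
    [0.90, 0.05, 0.05],  # confident prediction
    [0.40, 0.35, 0.25],  # small margin
    [0.50, 0.50, 0.00],  # tie between two classes
    [0.70, 0.20, 0.10],
]
print(select_by_margin(pool_probs, k=2))  # [2, 1]
```

The only knob in this sketch is the query batch size `k`, which belongs to the active learning loop rather than to the scoring rule itself.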

https://openreview.net/forum?id=wXdEKf5mV6N

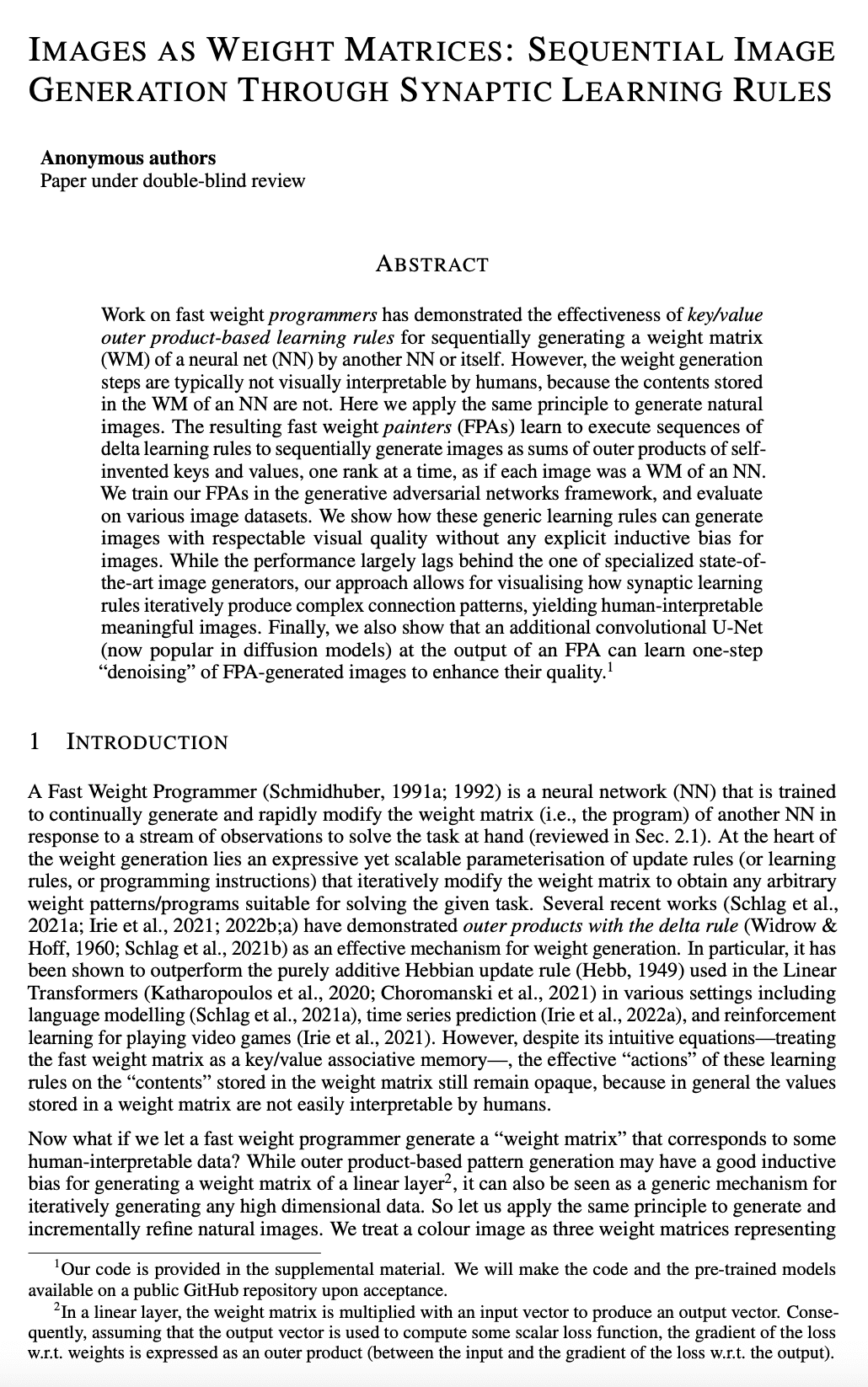

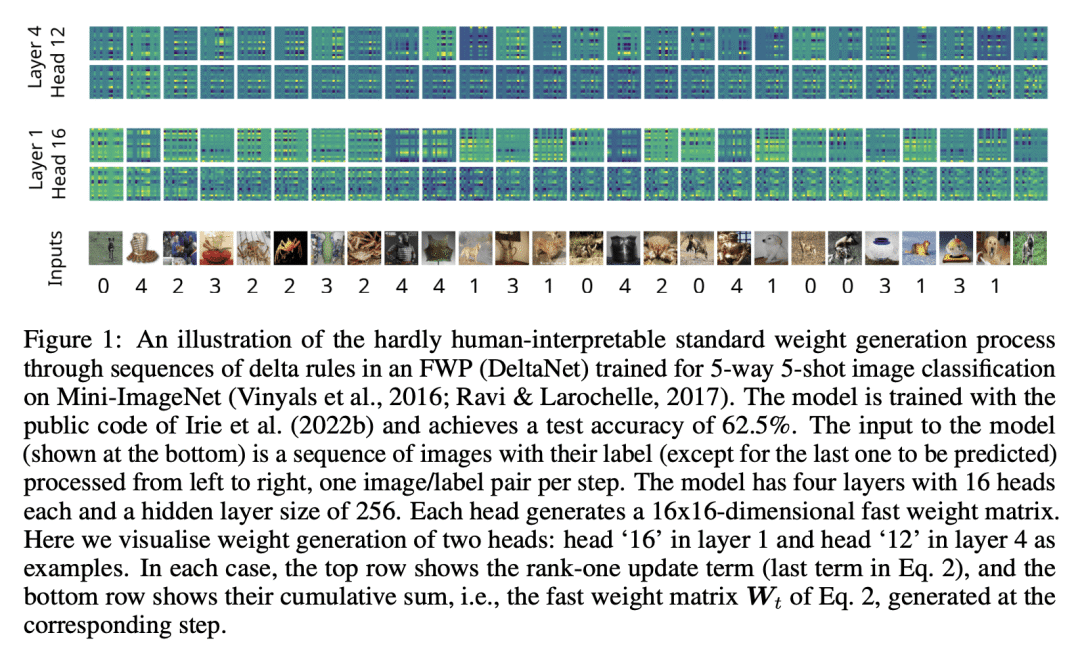

3、[LG] Images as Weight Matrices: Sequential Image Generation Through Synaptic Learning Rules

K Irie, J Schmidhuber

[IDSIA]

Images as weight matrices: sequential image generation through synaptic learning rules

要点:

-

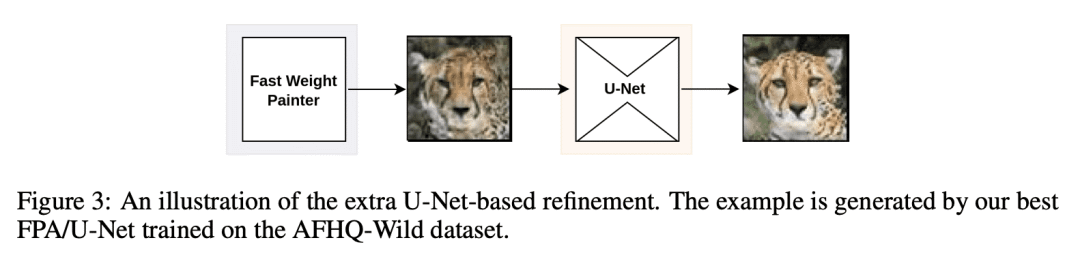

将快速权重编程器(FWP)应用于图像生成,在六个不同的图像域可视化了迭代 FFP 过程; -

由此产生的 FPA 通过执行基于自发明学习模式的 NN 控制学习规则序列来学习生成看起来像自然图像的权重矩阵; -

本文研究的方法并不是生成图像的最佳方法,但其展示了 NN 如何以目标导向的方式学习控制突触权重学习规则序列,以生成复杂而有意义的权重模式; -

在 FPA 输出端有一个额外的卷积 U-Net,可以对 FPA 生成的图像进行一步式的”去噪 “学习,以提高其质量。

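One-sentence summary:

Neural networks can generate natural images using generic synaptic learning rules and outer products, yielding human-interpretable visualizations of complex connection patterns.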

Work on fast weight programmers has demonstrated the effectiveness of key/value outer product-based learning rules for sequentially generating a weight matrix (WM) of a neural net (NN) by another NN or itself. However, the weight generation steps are typically not visually interpretable by humans, because the contents stored in the WM of an NN are not. Here we apply the same principle to generate natural images. The resulting fast weight painters (FPAs) learn to execute sequences of delta learning rules to sequentially generate images as sums of outer products of self-invented keys and values, one rank at a time, as if each image was a WM of an NN. We train our FPAs in the generative adversarial networks framework, and evaluate on various image datasets. We show how these generic learning rules can generate images with respectable visual quality without any explicit inductive bias for images. While the performance largely lags behind that of specialized state-of-the-art image generators, our approach allows for visualising how synaptic learning rules iteratively produce complex connection patterns, yielding human-interpretable meaningful images. Finally, we also show that an additional convolutional U-Net (now popular in diffusion models) at the output of an FPA can learn one-step "denoising" of FPA-generated images to enhance their quality.
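The abstract describes each image being built as a sum of outer products of keys and values, one rank at a time, via the delta rule. A sketch of that update in plain Python, assuming the keys, values, and learning rates are simply given (in an FPA they are produced step by step by a trained network, which is omitted here); `delta_rule_paint` is a hypothetical name.

```python
def delta_rule_paint(keys, values, betas, height, width):
    """Build a height x width matrix (the 'image') one rank-1 delta-rule update at a time.

    keys: vectors of length `width`; values: vectors of length `height`;
    betas: per-step learning rates (given here; an FPA would generate them).
    """
    W = [[0.0] * width for _ in range(height)]
    for k, v, beta in zip(keys, values, betas):
        # Value currently stored under key k: W @ k.
        v_old = [sum(W[i][j] * k[j] for j in range(width)) for i in range(height)]
        # Delta rule: W <- W + beta * (v - W k) k^T, a rank-1 (outer-product) update.
        for i in range(height):
            err = beta * (v[i] - v_old[i])
            for j in range(width):
                W[i][j] += err * k[j]
    return W


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
values = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [0.0, 0.0, 0.0]]
print(delta_rule_paint(keys[:2], values[:2], [1.0, 1.0], height=3, width=2))
# [[1.0, 4.0], [2.0, 5.0], [3.0, 6.0]]
print(delta_rule_paint(keys, values, [1.0, 1.0, 1.0], height=3, width=2))
# [[0.0, 4.0], [0.0, 5.0], [0.0, 6.0]]
```

With orthonormal keys and a learning rate of 1, each step writes one column exactly; writing to the same key again overwrites the stored value instead of adding to it, which is what separates the delta rule from a purely additive Hebbian update.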

https://openreview.net/forum?id=ddad0PNUvV

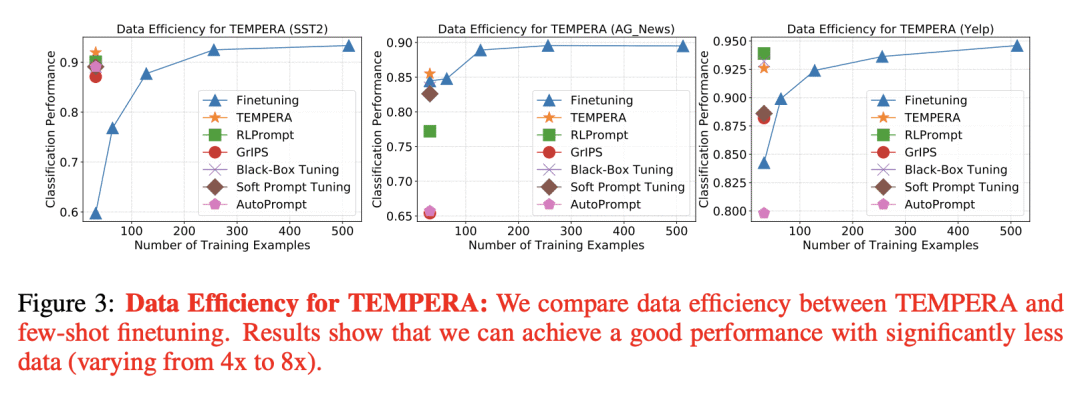

4、[LG] TEMPERA: Test-Time Prompt Editing via Reinforcement Learning

T Zhang, X Wang, D Zhou, D Schuurmans, J E Gonzalez

[Google & UC Berkeley]

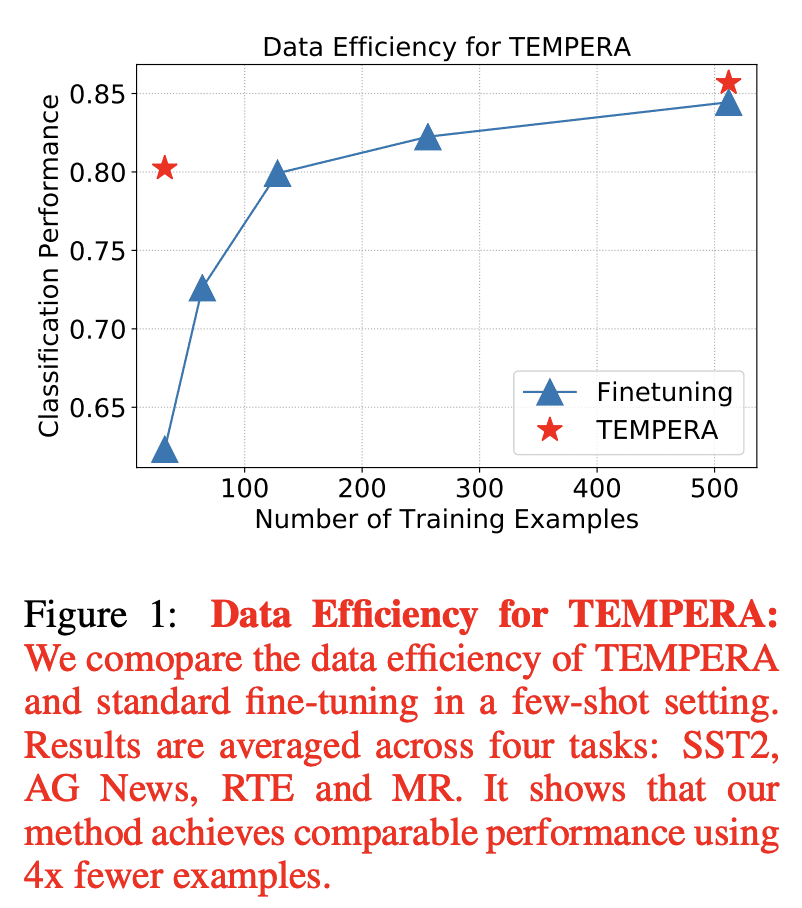

TEMPERA: test-time prompt editing via reinforcement learning

要点:

-

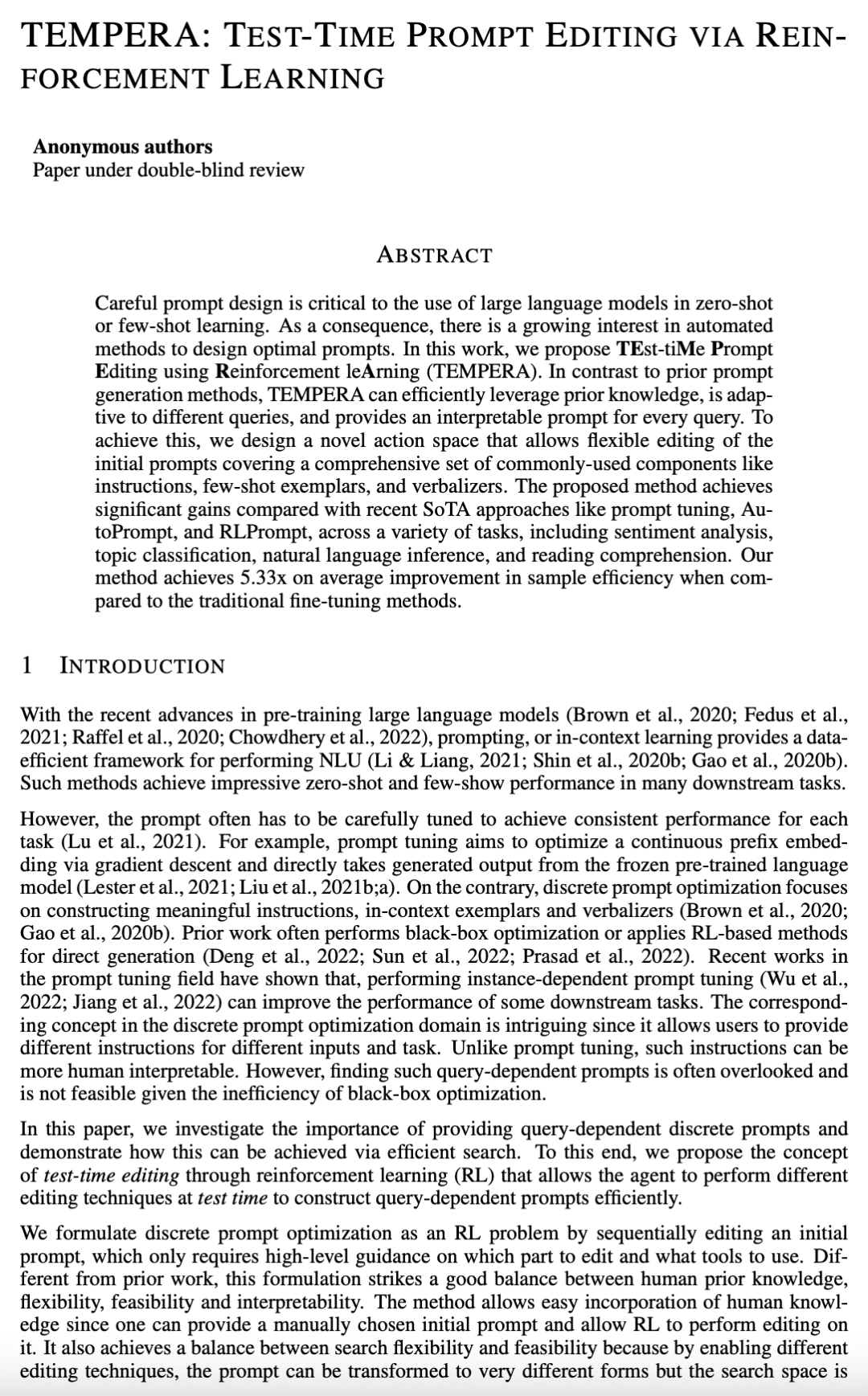

TEMPERA 是一种新的大型语言模型测试时提示编辑方法,可有效地利用先验知识并自适应不同的查询; -

TEMPERA 与最近的 SoTA 方法(如提示微调、AutoPrompt 和 RLPrompt)相比,在各种任务中取得了显著的收益; -

与传统微调方法相比,TEMPERA 实现了平均 5.33 倍的采样效率提升; -

所提方法为每个查询提供了一个可解释的提示,并且可以很容易地纳入人工的先验知识。

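One-sentence summary:

TEMPERA is a test-time prompt editing method for large language models that efficiently leverages prior knowledge, adapts to different queries, and provides an interpretable prompt for each query.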

Careful prompt design is critical to the use of large language models in zero-shot or few-shot learning. As a consequence, there is a growing interest in automated methods to design optimal prompts. In this work, we propose Test-time Prompt Editing using Reinforcement learning (TEMPERA). In contrast to prior prompt generation methods, TEMPERA can efficiently leverage prior knowledge, is adaptive to different queries and provides an interpretable prompt for every query. To achieve this, we design a novel action space that allows flexible editing of the initial prompts covering a wide set of commonly-used components like instructions, few-shot exemplars, and verbalizers. The proposed method achieves significant gains compared with recent SoTA approaches like prompt tuning, AutoPrompt, and RLPrompt, across a variety of tasks including sentiment analysis, topic classification, natural language inference, and reading comprehension. Our method achieves 5.33x on average improvement in sample efficiency when compared to the traditional fine-tuning methods.
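To make the idea of an edit-based action space concrete, here is a hypothetical sketch in which a prompt is a structured object (instruction, few-shot exemplars, verbalizer) and each discrete action returns an edited copy. The `Prompt` fields and action names are assumptions for illustration, not TEMPERA's actual action set, and the RL policy that picks edits for each query is not shown.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class Prompt:
    instruction: str
    exemplars: Tuple[str, ...]
    verbalizer: Tuple[str, str]  # label words, e.g. (negative, positive)

    def render(self, query: str) -> str:
        labels = " or ".join(self.verbalizer)
        return "\n".join([self.instruction, *self.exemplars, f"{query} ({labels})"])


def apply_edit(prompt: Prompt, action: tuple) -> Prompt:
    """Apply one discrete edit action and return a new prompt (the original is unchanged)."""
    kind, arg = action
    if kind == "set_instruction":
        return replace(prompt, instruction=arg)
    if kind == "swap_exemplars":
        i, j = arg
        ex = list(prompt.exemplars)
        ex[i], ex[j] = ex[j], ex[i]
        return replace(prompt, exemplars=tuple(ex))
    if kind == "drop_exemplar":
        kept = tuple(e for n, e in enumerate(prompt.exemplars) if n != arg)
        return replace(prompt, exemplars=kept)
    if kind == "set_verbalizer":
        return replace(prompt, verbalizer=tuple(arg))
    raise ValueError(f"unknown edit action: {kind}")


p0 = Prompt("Classify the sentiment.",
            ("great film -> positive", "dull plot -> negative"),
            ("negative", "positive"))
p1 = apply_edit(p0, ("swap_exemplars", (0, 1)))
p2 = apply_edit(p1, ("set_verbalizer", ("bad", "good")))
print(p2.render("an instant classic"))
```

Because every edited prompt is an explicit, readable object, the result stays interpretable for each query, which is the property the abstract emphasizes.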

https://openreview.net/forum?id=gSHyqBijPFO

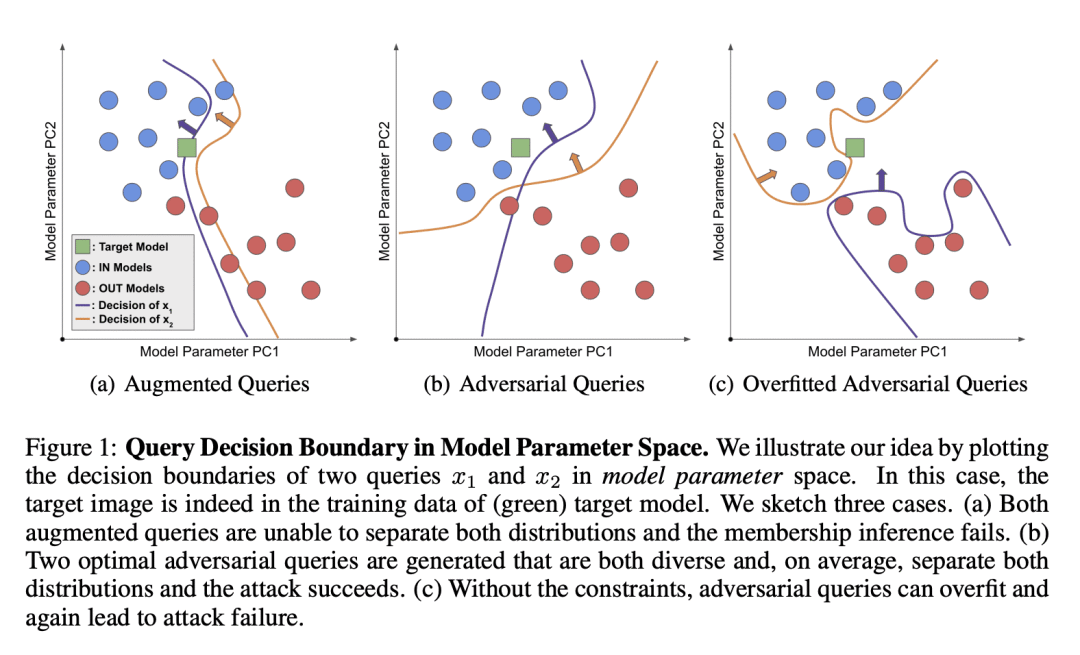

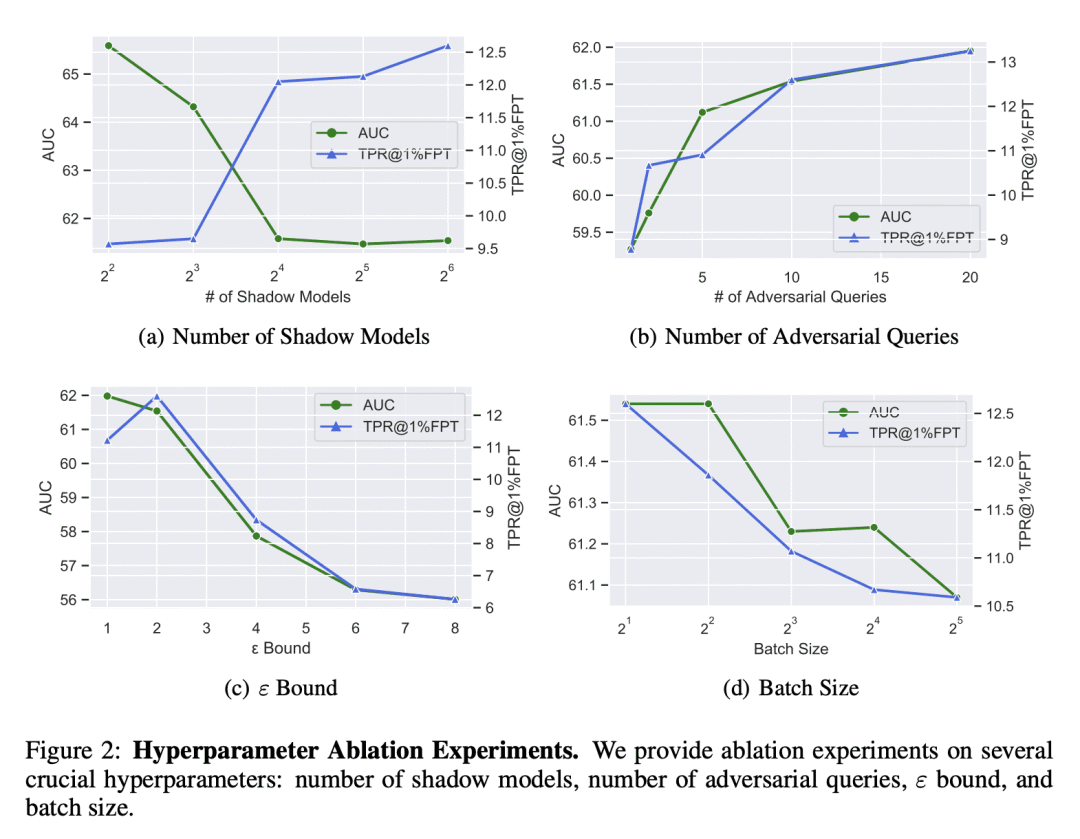

5、[LG] Canary in a Coalmine: Better Membership Inference with Ensembled Adversarial Queries

Y Wen, A Bansal, H Kazemi, E Borgnia, M Goldblum…

[University of Maryland & University of Chicago & New York University]

Canary in a Coalmine: better membership inference with ensembled adversarial queries

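Key points:

- Membership inference uses statistical techniques to determine whether a target sample was included in a model's training set; existing methods sample the model's behavior only sparsely, which leads to poor inference
- The Canary attack uses adversarial tools to optimize discriminative and diverse queries, strengthening membership inference
- The ensembled adversarial queries are optimized to produce maximally different outputs from models trained with versus without the target sample
- The Canary attack achieves state-of-the-art membership inference performance in the black-box setting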

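One-sentence summary:

Adversarial queries provide a more effective and accurate way to perform membership inference on machine learning models.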

As industrial applications are increasingly automated by machine learning models, enforcing personal data ownership and intellectual property rights requires tracing training data back to their rightful owners. Membership inference algorithms approach this problem by using statistical techniques to discern whether a target sample was included in a model’s training set. However, existing methods only utilize the unaltered target sample or simple augmentations of the target to compute statistics. Such a sparse sampling of the model’s behavior carries little information, leading to poor inference capabilities. In this work, we use adversarial tools to directly optimize for queries that are discriminative and diverse. Our improvements achieve significantly more accurate membership inference than existing methods, especially in offline scenarios and in the low false-positive regime which is critical in legal settings.
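The abstract does not spell out the scoring rule. A common choice for this kind of attack is a LiRA-style Gaussian likelihood ratio computed against shadow models trained with (IN) and without (OUT) the target sample; the sketch below assumes that rule and averages it over an ensemble of queries. The adversarial optimization of the queries themselves is omitted, and all function names are hypothetical.

```python
import math
from statistics import mean, stdev


def gaussian_logpdf(x, mu, sigma):
    return -0.5 * ((x - mu) / sigma) ** 2 - math.log(sigma * math.sqrt(2 * math.pi))


def membership_score(target_obs, in_obs, out_obs, eps=1e-6):
    """Average per-query log-likelihood ratio (IN vs OUT); > 0 suggests 'member'.

    target_obs[q]: the target model's output statistic on query q.
    in_obs[q] / out_obs[q]: the same statistic from shadow models trained
    with / without the target sample.
    """
    ratios = []
    for t, ins, outs in zip(target_obs, in_obs, out_obs):
        lp_in = gaussian_logpdf(t, mean(ins), stdev(ins) + eps)
        lp_out = gaussian_logpdf(t, mean(outs), stdev(outs) + eps)
        ratios.append(lp_in - lp_out)
    return mean(ratios)  # ensemble over queries


in_obs = [[5.0, 6.0, 7.0], [4.0, 5.0, 6.0]]
out_obs = [[0.0, 1.0, 2.0], [-1.0, 0.0, 1.0]]
print(membership_score([6.0, 5.0], in_obs, out_obs))  # looks like a member
print(membership_score([1.0, 0.0], in_obs, out_obs))  # looks like a non-member
```

Queries whose IN and OUT distributions are far apart yield large ratios, which is why optimizing queries to be discriminative and diverse should sharpen the decision.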

https://openreview.net/forum?id=b7SBTEBFnC

A few more papers worth noting:

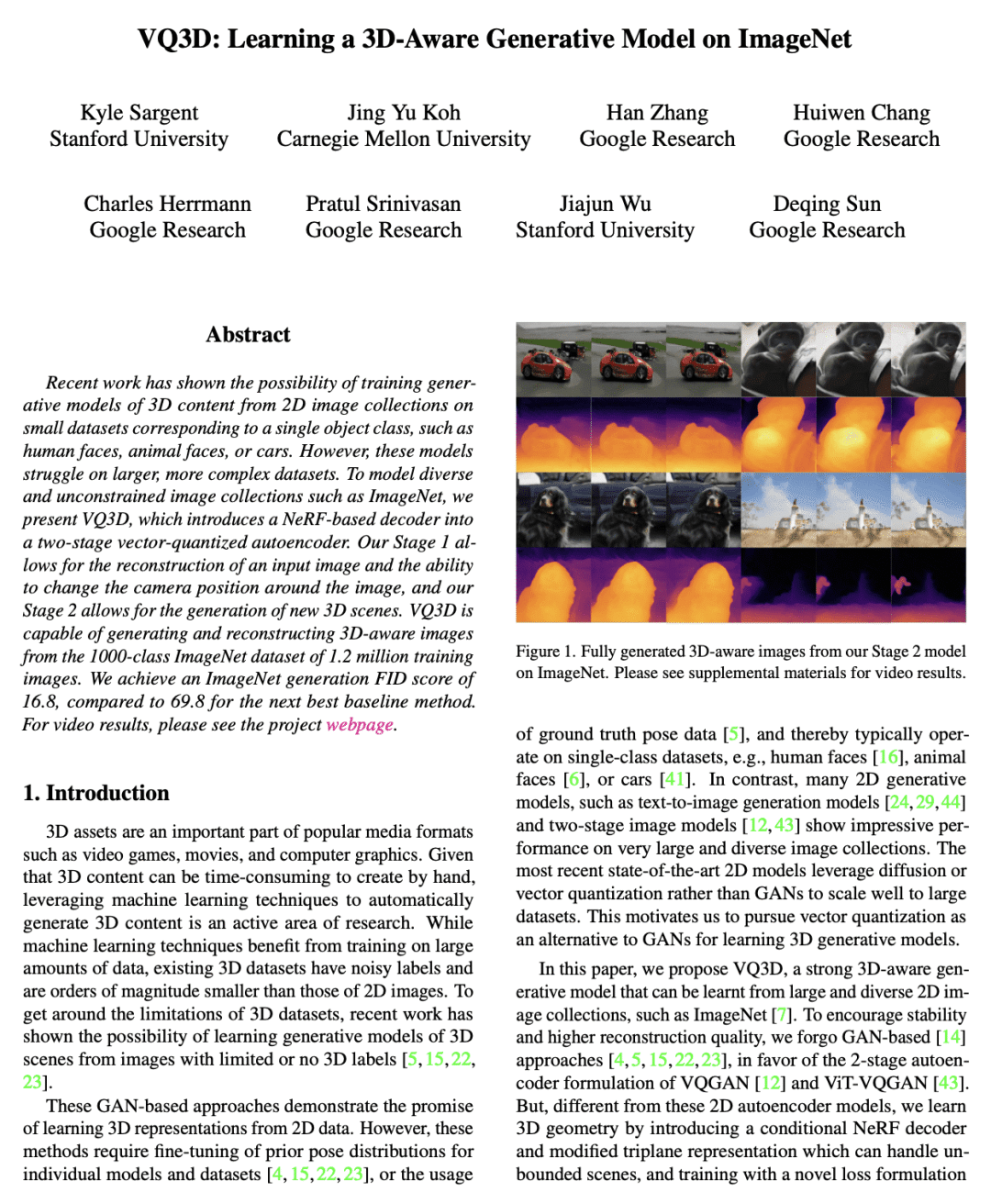

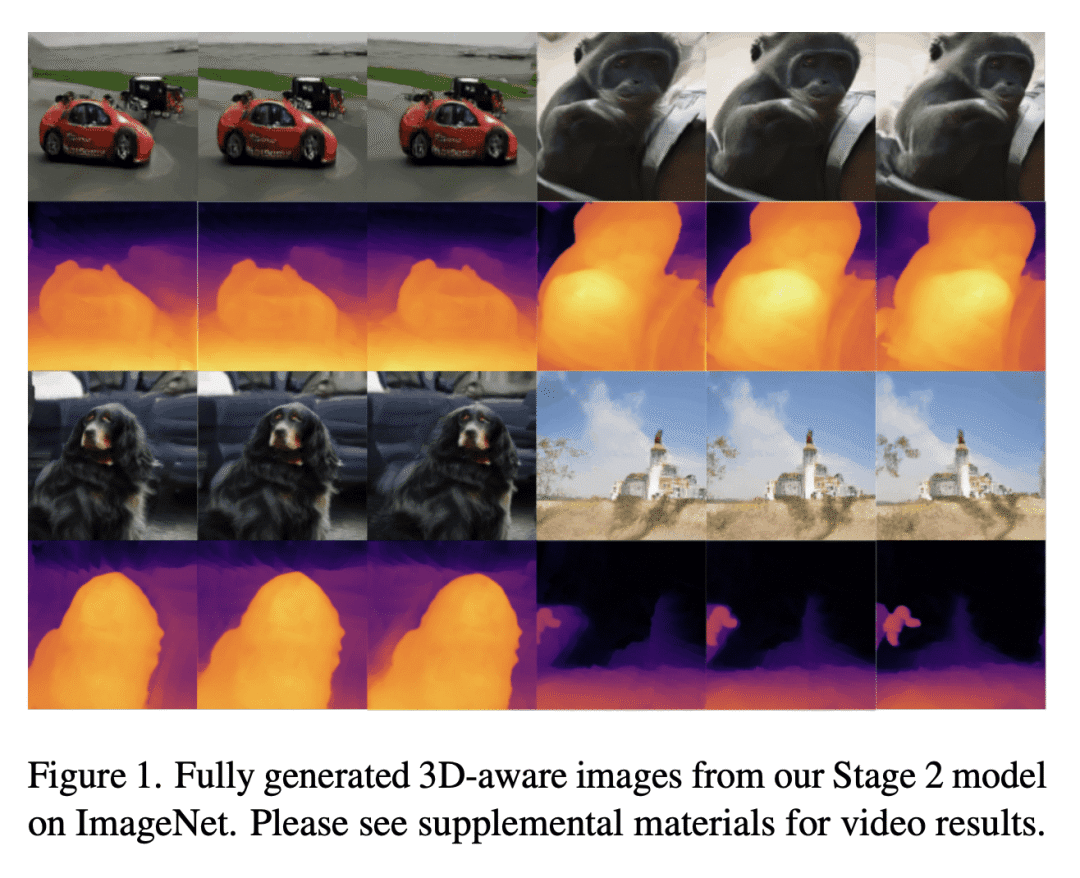

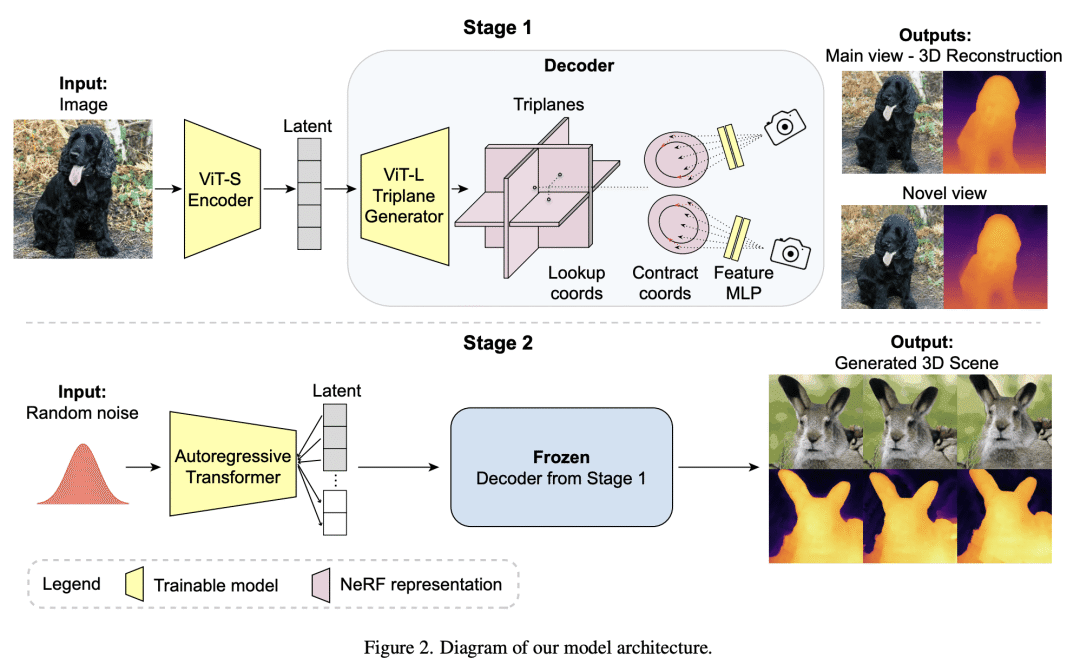

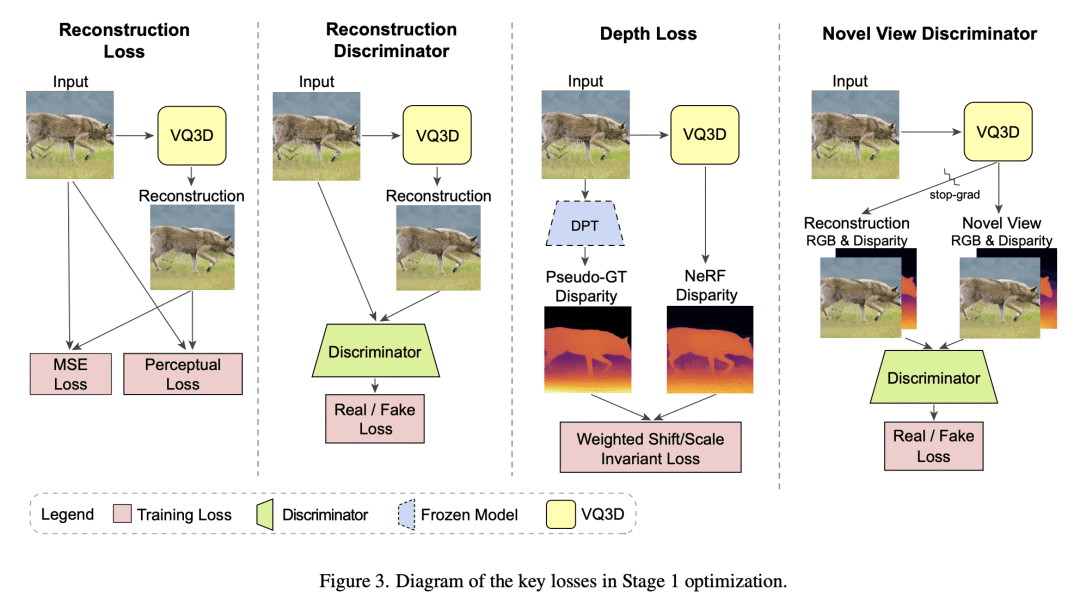

[CV] VQ3D: Learning a 3D-Aware Generative Model on ImageNet

K Sargent, J Y Koh, H Zhang, H Chang, C Herrmann, P Srinivasan, J Wu, D Sun

[Stanford University & CMU & Google Research]

VQ3D: learning a 3D-aware generative model on ImageNet

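Key points:

- VQ3D introduces a NeRF-based decoder into a two-stage vector-quantized autoencoder to model diverse, unconstrained image collections such as ImageNet
- VQ3D achieves an ImageNet generation FID of 16.8, compared with 69.8 for the next-best baseline
- VQ3D requires neither per-dataset tuning of pose hyperparameters nor ground-truth poses, and uses a pseudo-depth estimator during training
- Stage 1 of VQ3D enables 3D-aware image editing and manipulation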

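One-sentence summary:

VQ3D introduces a NeRF-based decoder into a two-stage vector-quantized autoencoder to model diverse, unconstrained image collections such as ImageNet, achieving an ImageNet generation FID of 16.8 compared with 69.8 for the next-best baseline.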

Recent work has shown the possibility of training generative models of 3D content from 2D image collections on small datasets corresponding to a single object class, such as human faces, animal faces, or cars. However, these models struggle on larger, more complex datasets. To model diverse and unconstrained image collections such as ImageNet, we present VQ3D, which introduces a NeRF-based decoder into a two-stage vector-quantized autoencoder. Our Stage 1 allows for the reconstruction of an input image and the ability to change the camera position around the image, and our Stage 2 allows for the generation of new 3D scenes. VQ3D is capable of generating and reconstructing 3D-aware images from the 1000-class ImageNet dataset of 1.2 million training images. We achieve an ImageNet generation FID score of 16.8, compared to 69.8 for the next best baseline method.
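VQ3D builds on a two-stage vector-quantized autoencoder. As background, here is a sketch of the standard quantization step, which maps each encoder output to its nearest codebook entry by squared Euclidean distance. The NeRF-based decoder and the Stage 2 generative model over code indices are not shown, and `quantize` is a hypothetical helper.

```python
def quantize(vectors, codebook):
    """Replace each vector by its nearest codebook entry (squared L2 distance).

    Returns (code indices, quantized vectors).
    """
    indices, quantized = [], []
    for v in vectors:
        dists = [sum((a - b) ** 2 for a, b in zip(v, c)) for c in codebook]
        idx = min(range(len(codebook)), key=dists.__getitem__)
        indices.append(idx)
        quantized.append(list(codebook[idx]))
    return indices, quantized


codebook = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
encoder_out = [[0.2, -0.1], [4.0, 4.5], [1.2, 0.9]]
print(quantize(encoder_out, codebook))
# ([0, 2, 1], [[0.0, 0.0], [5.0, 5.0], [1.0, 1.0]])
```

In a two-stage setup of this kind, the discrete indices are what a second-stage model learns to generate, after which the decoder turns them back into an image or, in VQ3D's case, a 3D-aware scene.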

https://arxiv.org/abs/2302.06833

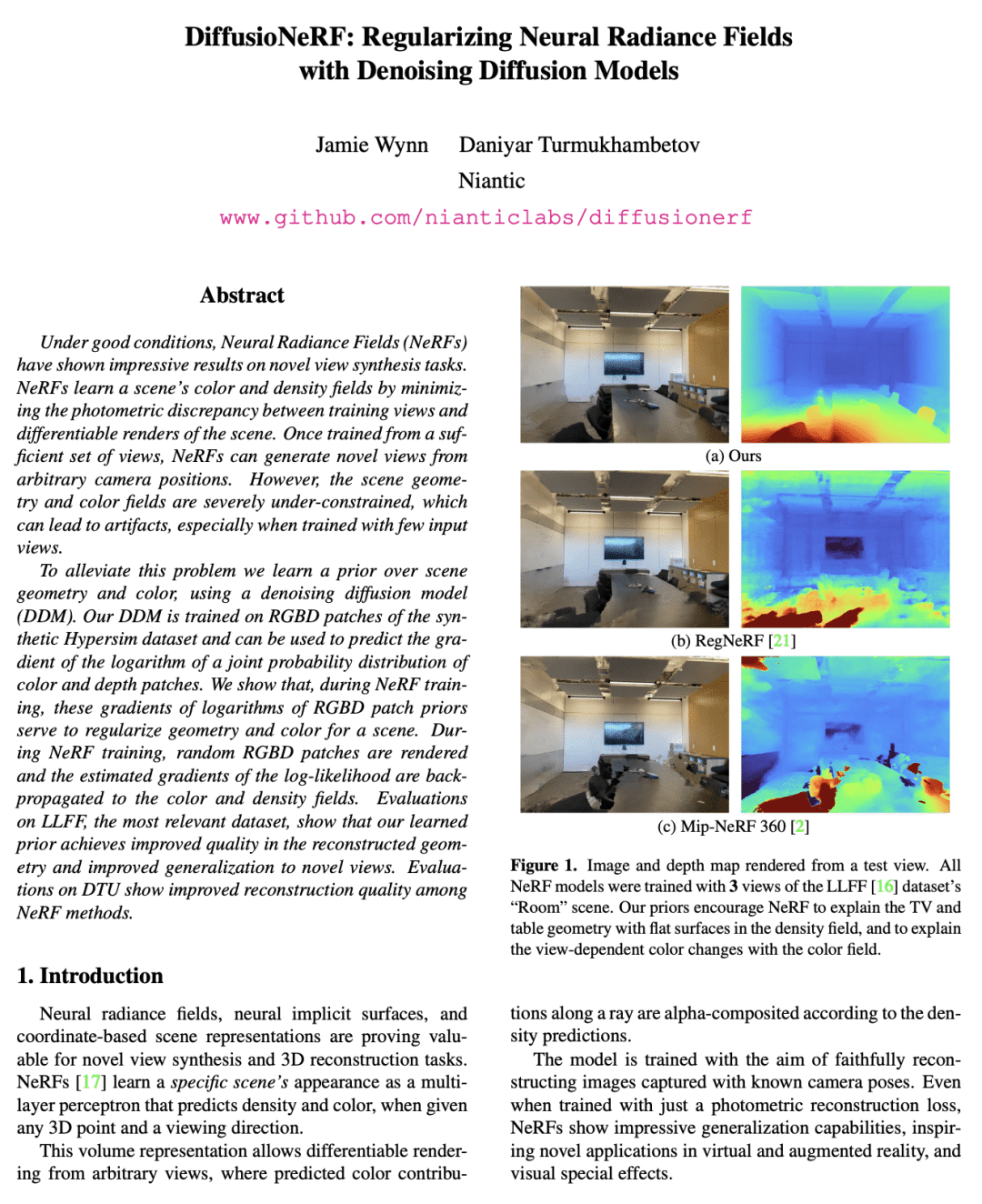

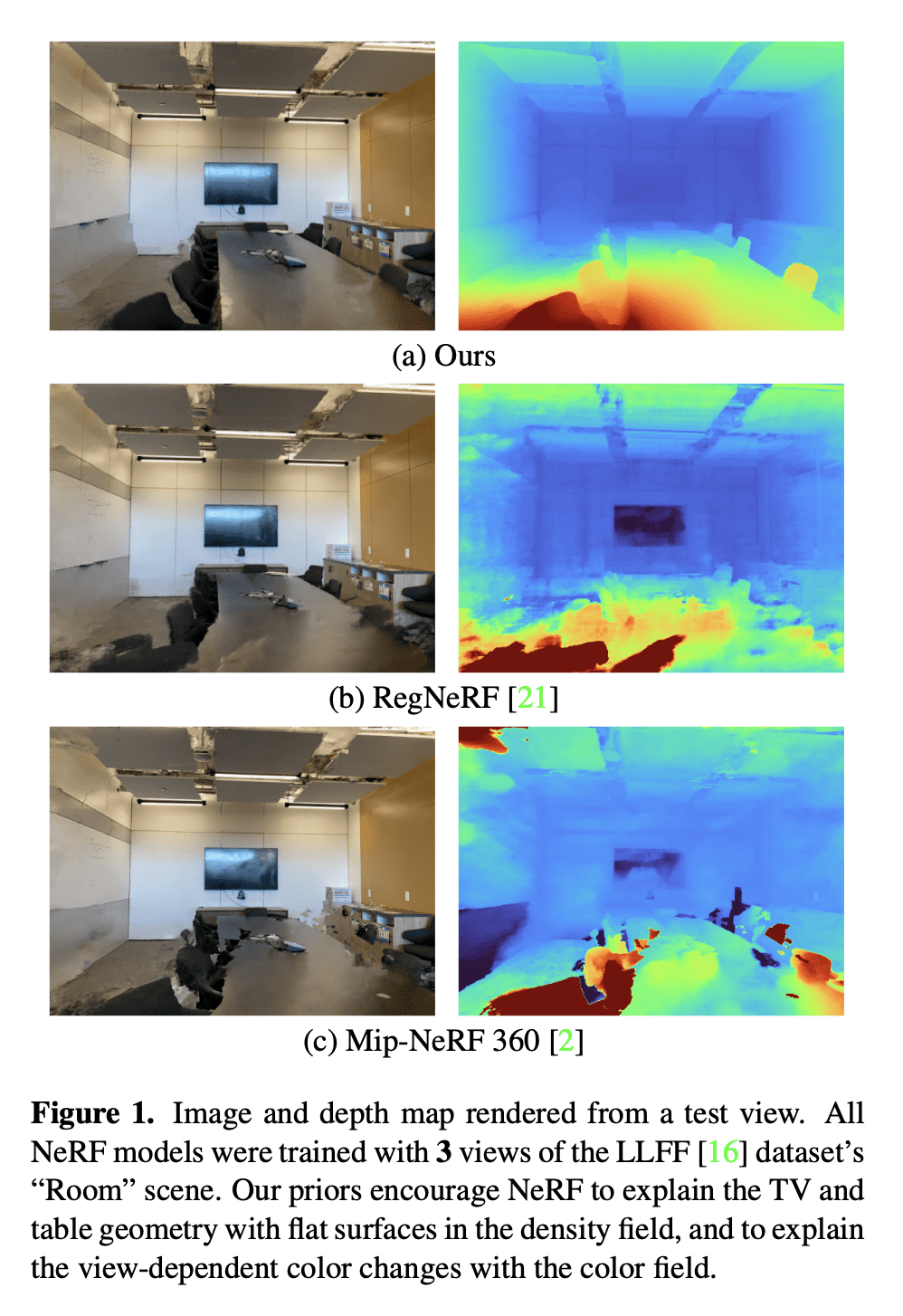

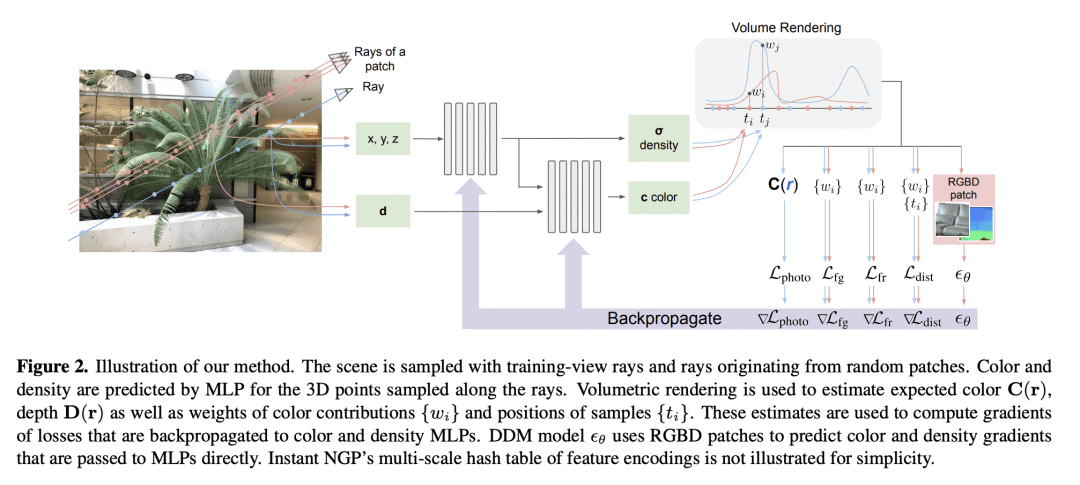

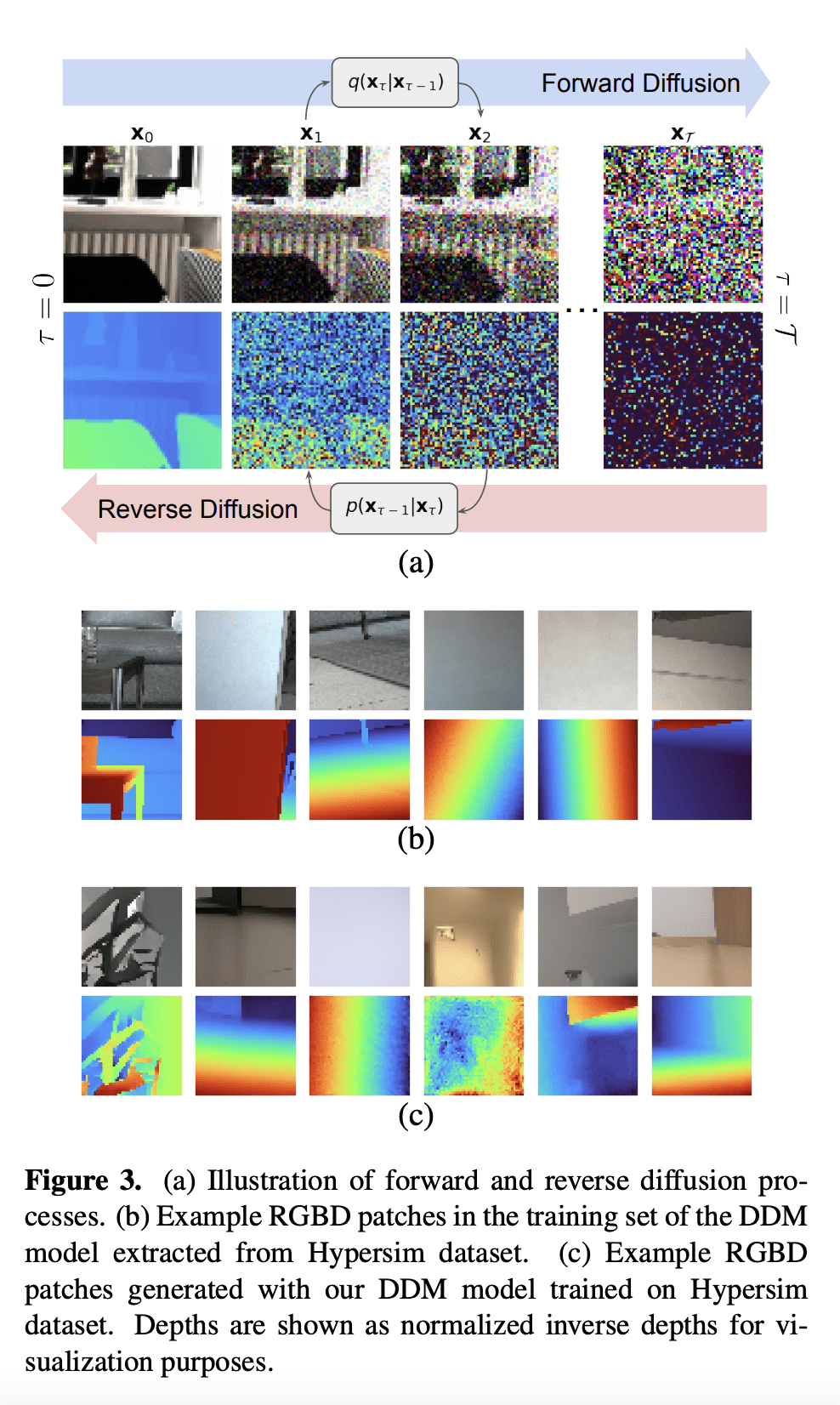

[CV] DiffusioNeRF: Regularizing Neural Radiance Fields with Denoising Diffusion Models

J Wynn, D Turmukhambetov

[Niantic]

DiffusioNeRF: regularizing neural radiance fields with denoising diffusion models

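Key points:

- DiffusioNeRF uses a denoising diffusion model (DDM) as a prior over scene geometry and color to regularize neural radiance fields (NeRFs) during training
- The DDM is trained on RGBD patches of the synthetic Hypersim dataset and predicts the gradient of the logarithm of the joint probability distribution of color and depth patches
- Evaluations on the LLFF and DTU datasets show that the regularization improves novel view synthesis and 3D reconstruction
- The framework is general and could be used to regularize other aspects of NeRFs, or other tasks optimized with gradient descent, such as self-supervised monocular depth estimation or self-supervised stereo matching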

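One-sentence summary:

DiffusioNeRF regularizes neural radiance fields (NeRFs) with a denoising diffusion model (DDM) to improve 3D reconstruction and novel view synthesis.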

To alleviate this problem we learn a prior over scene geometry and color, using a denoising diffusion model (DDM). Our DDM is trained on RGBD patches of the synthetic Hypersim dataset and can be used to predict the gradient of the logarithm of a joint probability distribution of color and depth patches. We show that, during NeRF training, these gradients of logarithms of RGBD patch priors serve to regularize geometry and color for a scene. During NeRF training, random RGBD patches are rendered and the estimated gradients of the log-likelihood are backpropagated to the color and density fields. Evaluations on LLFF, the most relevant dataset, show that our learned prior achieves improved quality in the reconstructed geometry and improved generalization to novel views. Evaluations on DTU show improved reconstruction quality among NeRF methods.
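The DDM is used to estimate the gradient of the log-density of RGBD patches. One standard way to obtain that gradient from a denoiser D is Tweedie's formula, grad log p_sigma(x) ≈ (D(x, sigma) - x) / sigma^2. A minimal sketch, assuming that formula and using the exact denoiser of a toy Gaussian prior as a stand-in for the trained DDM. In DiffusioNeRF the gradient is backpropagated into the NeRF's color and density fields; `prior_step`, which applies it to the patch directly, is a hypothetical simplification.

```python
def score_from_denoiser(x, denoise, sigma):
    """Tweedie's formula: grad log p_sigma(x) ~= (D(x, sigma) - x) / sigma**2."""
    d = denoise(x, sigma)
    return [(di - xi) / sigma ** 2 for di, xi in zip(d, x)]


def gaussian_denoiser(mu, s):
    """Exact denoiser for a toy N(mu, s^2 I) prior; a stand-in for a trained DDM."""
    def denoise(x, sigma):
        w = s ** 2 / (s ** 2 + sigma ** 2)  # posterior-mean shrinkage toward mu
        return [w * xi + (1 - w) * mi for xi, mi in zip(x, mu)]
    return denoise


def prior_step(patch, denoise, sigma, lr):
    """Nudge a flattened RGBD patch up the estimated log-density gradient."""
    g = score_from_denoiser(patch, denoise, sigma)
    return [p + lr * gi for p, gi in zip(patch, g)]


mu = [0.5, 0.5, 0.5, 2.0]  # toy 1-pixel "patch": R, G, B, depth
den = gaussian_denoiser(mu, s=1.0)
patch = [0.0, 0.0, 0.0, 0.0]
print(score_from_denoiser(patch, den, sigma=0.5))
print(prior_step(patch, den, sigma=0.5, lr=0.1))
```

For this Gaussian toy prior the formula is exact: the score equals (mu - x) / (s^2 + sigma^2), so each step pulls the patch toward the prior mean.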

https://arxiv.org/abs/2302.12231

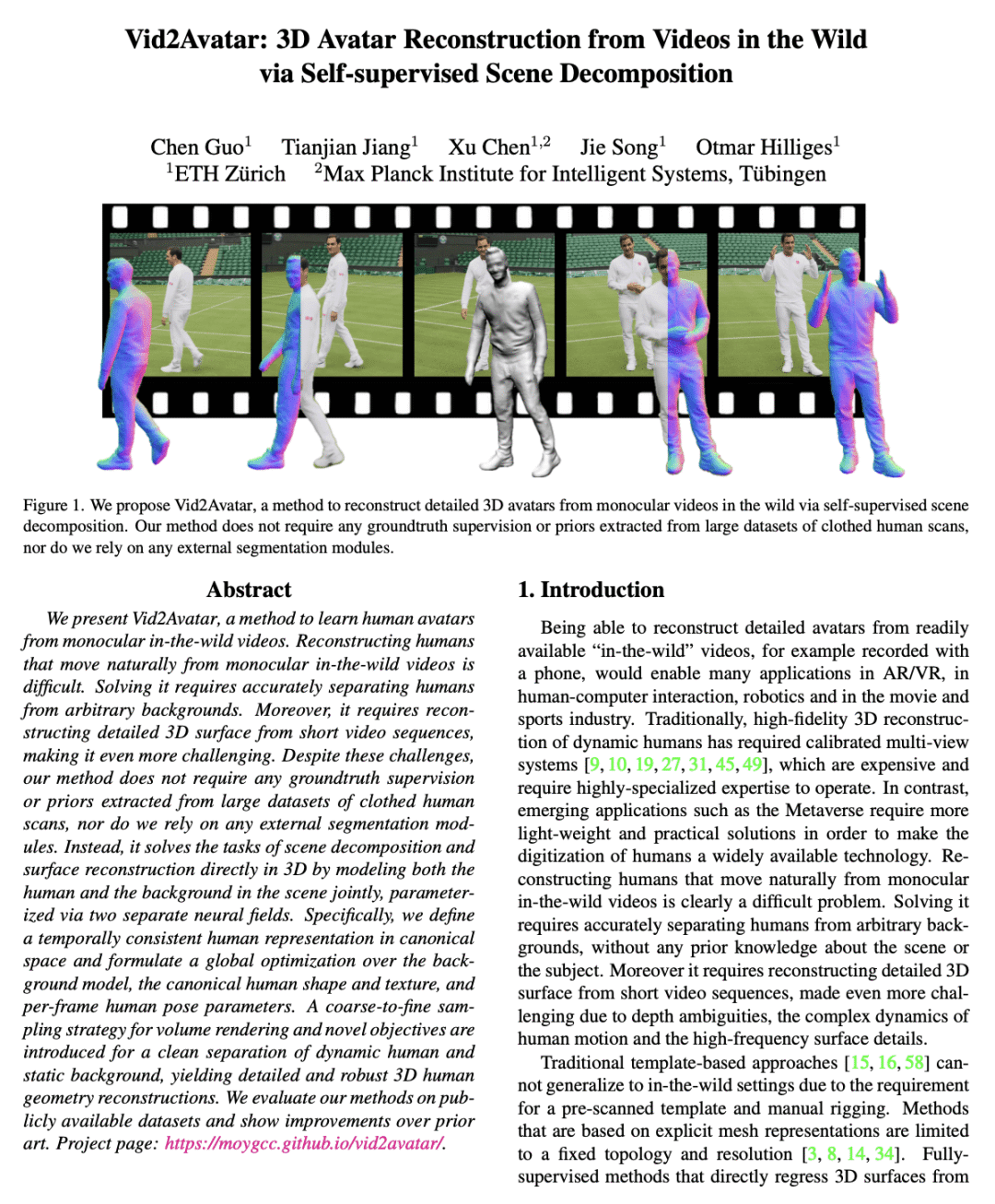

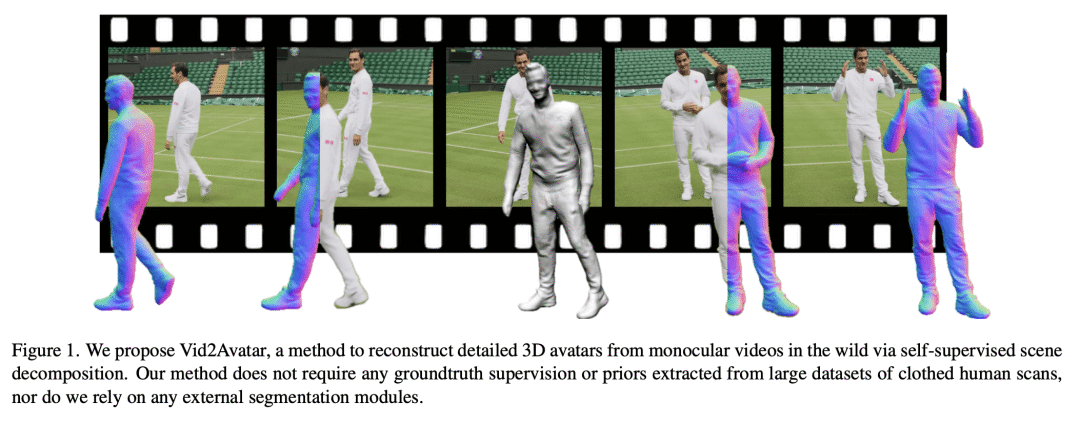

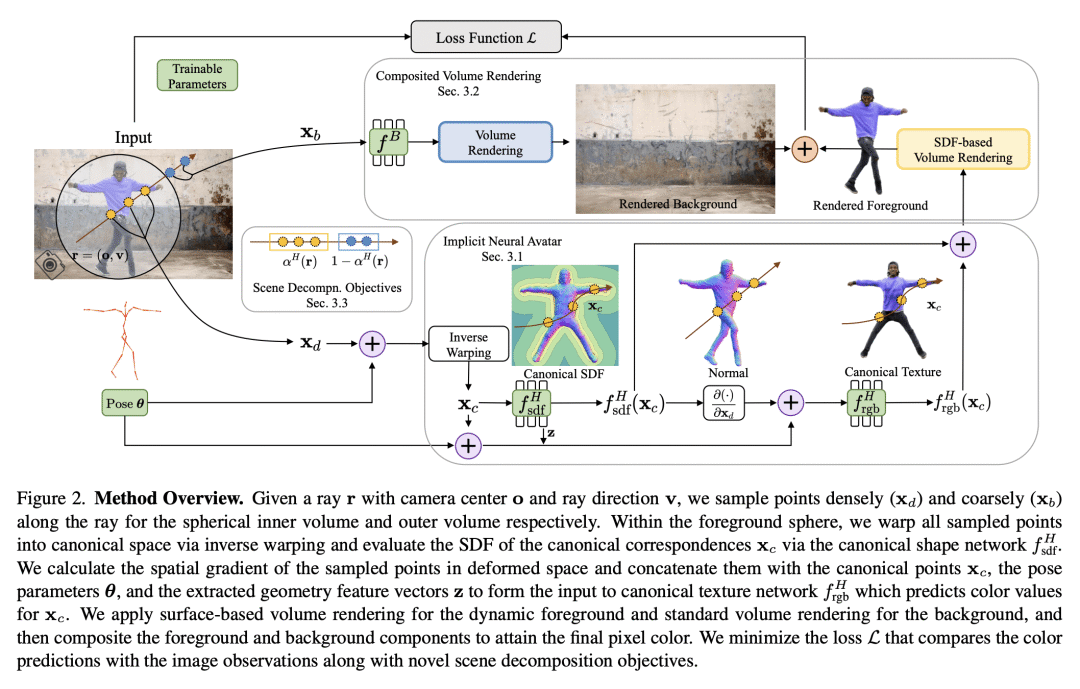

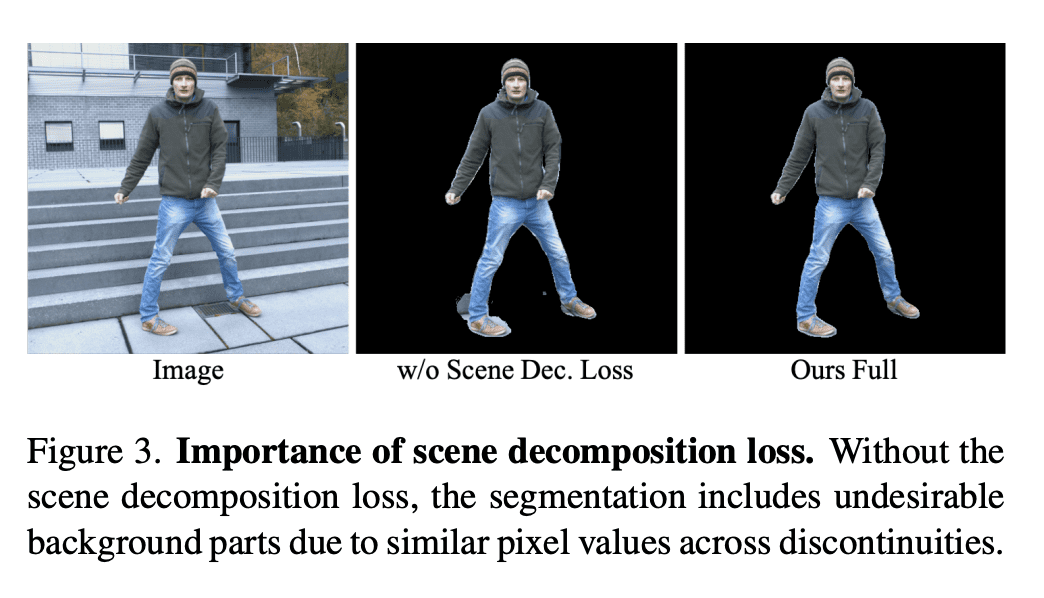

[CV] Vid2Avatar: 3D Avatar Reconstruction from Videos in the Wild via Self-supervised Scene Decomposition

C Guo, T Jiang, X Chen, J Song, O Hilliges

[ETH Zurich]

Vid2Avatar: 3D avatar reconstruction from in-the-wild videos via self-supervised scene decomposition

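Key points:

- Vid2Avatar reconstructs detailed 3D avatars from monocular in-the-wild videos via self-supervised scene decomposition
- It requires no ground-truth supervision, no priors extracted from large datasets of human scans, and no external segmentation modules
- It formulates a global optimization, based on a new scene decomposition objective, that jointly optimizes the background field, the canonical human shape and appearance, and the human pose parameters
- Vid2Avatar achieves robust, high-fidelity human reconstruction from monocular video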

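One-sentence summary:

Vid2Avatar is a self-supervised method for reconstructing detailed 3D avatars from monocular in-the-wild videos, without ground-truth supervision or external segmentation modules.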

We present Vid2Avatar, a method to learn human avatars from monocular in-the-wild videos. Reconstructing humans that move naturally from monocular in-the-wild videos is difficult. Solving it requires accurately separating humans from arbitrary backgrounds. Moreover, it requires reconstructing detailed 3D surface from short video sequences, making it even more challenging. Despite these challenges, our method does not require any groundtruth supervision or priors extracted from large datasets of clothed human scans, nor do we rely on any external segmentation modules. Instead, it solves the tasks of scene decomposition and surface reconstruction directly in 3D by modeling both the human and the background in the scene jointly, parameterized via two separate neural fields. Specifically, we define a temporally consistent human representation in canonical space and formulate a global optimization over the background model, the canonical human shape and texture, and per-frame human pose parameters. A coarse-to-fine sampling strategy for volume rendering and novel objectives are introduced for a clean separation of dynamic human and static background, yielding detailed and robust 3D human geometry reconstructions. We evaluate our methods on publicly available datasets and show improvements over prior art.
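The human and the background are modeled as two separate neural fields that are rendered together. A sketch of how such a two-field composite could be computed with standard NeRF volume-rendering quadrature, assuming the human samples lie in front of the background samples along each ray. This ordering and all function names are assumptions rather than Vid2Avatar's exact renderer, and its coarse-to-fine sampling strategy is omitted.

```python
import math


def render_segment(sigmas, colors, deltas, T=1.0):
    """NeRF quadrature over samples sorted near-to-far.

    Returns (accumulated rgb, transmittance left after the segment).
    """
    rgb = [0.0, 0.0, 0.0]
    for s, c, d in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-s * d)  # opacity of this sample interval
        w = T * alpha                   # contribution weight of this sample
        rgb = [r + w * ci for r, ci in zip(rgb, c)]
        T *= 1.0 - alpha
    return rgb, T


def composite(human, background):
    """Render the human field first, then the background behind it (assumed ordering).

    human / background: (densities, colors, interval lengths) for one ray.
    Returns (pixel rgb, accumulated human opacity).
    """
    rgb_h, T_h = render_segment(*human)
    rgb_b, _ = render_segment(*background, T=T_h)
    return [h + b for h, b in zip(rgb_h, rgb_b)], 1.0 - T_h


background = ([1e3], [[0.0, 1.0, 0.0]], [1.0])      # opaque green wall
empty_human = ([0.0], [[1.0, 0.0, 0.0]], [1.0])     # ray misses the person
opaque_human = ([1e3], [[1.0, 0.0, 0.0]], [1.0])    # ray hits the person
print(composite(empty_human, background))
print(composite(opaque_human, background))
```

Keeping the two fields separate means each pixel's color can be attributed to the human or the background through the human opacity, which is the quantity a scene-decomposition objective can act on.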

https://arxiv.org/abs/2302.11566

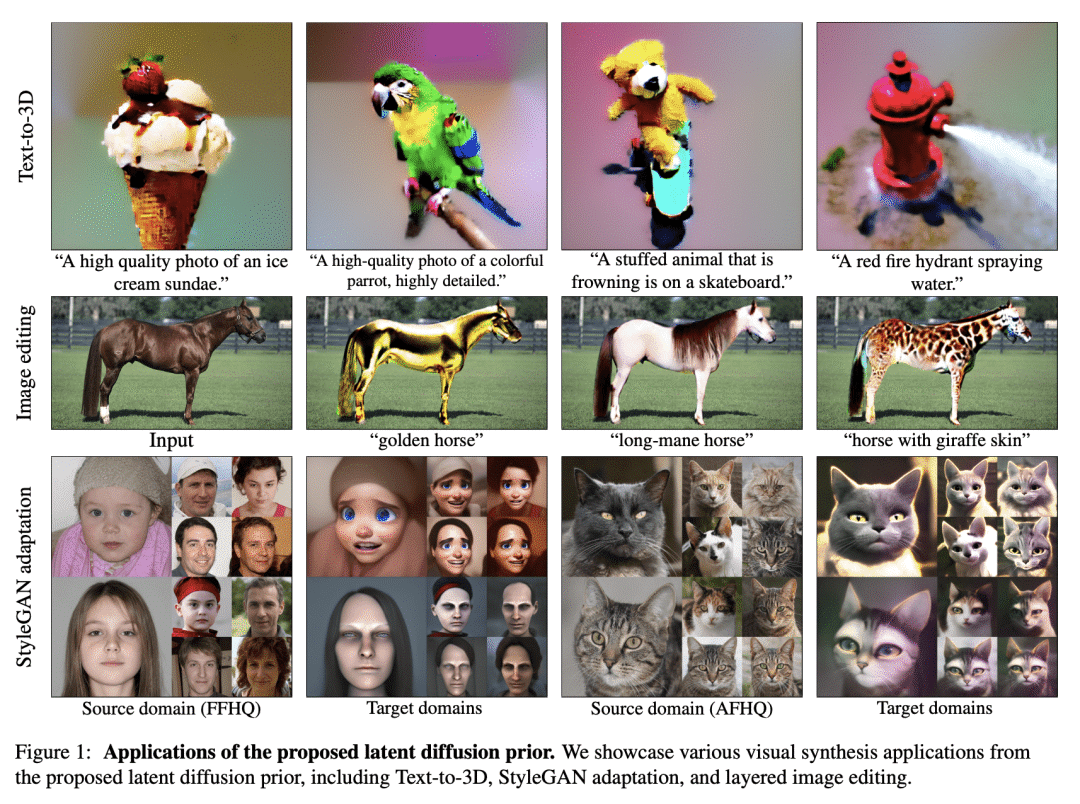

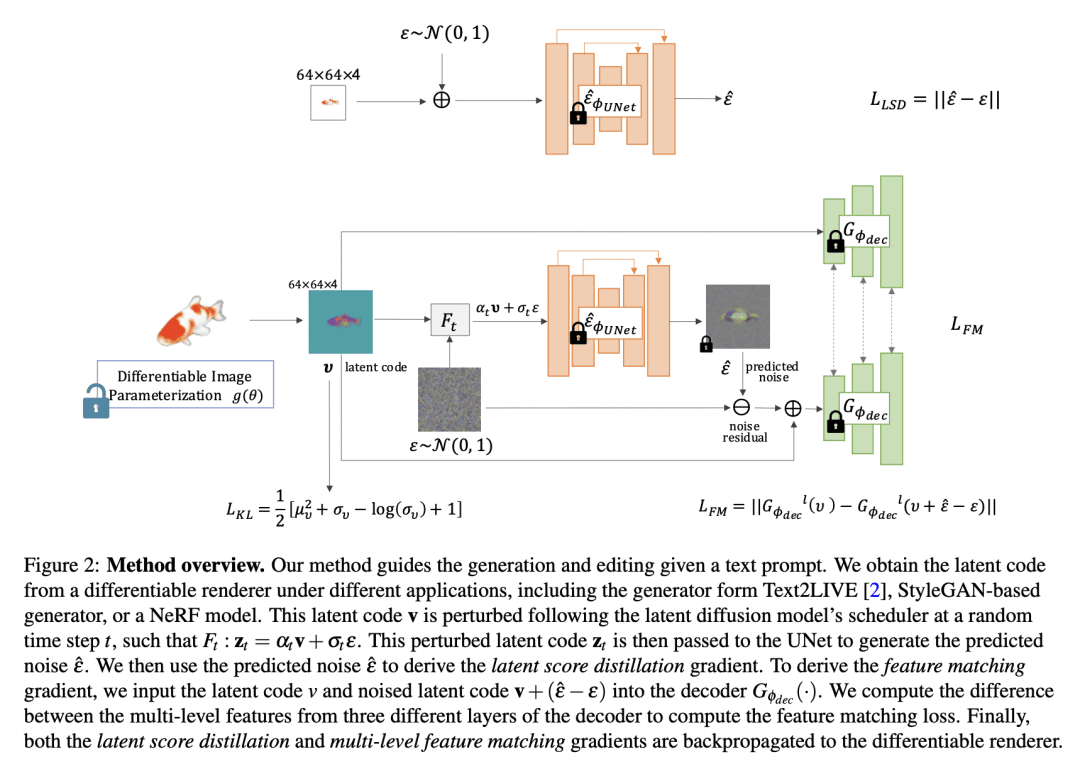

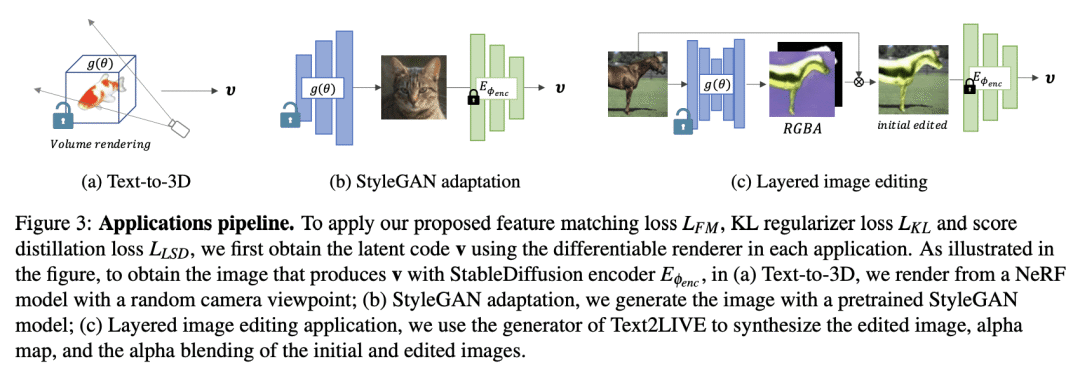

[CV] Text-driven Visual Synthesis with Latent Diffusion Prior

T Liao, S Ge, Y Xu, Y Lee, B AlBahar, J Huang

[University of Maryland]

Text-driven visual synthesis with a latent diffusion prior

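Key points:

- Uses latent diffusion models as priors for visual synthesis tasks
- Proposes a feature matching loss that extracts detailed information from the decoder to guide text-based visual synthesis
- Proposes a KL loss that regularizes the optimized latents and stabilizes optimization
- Extensive experiments on text-to-3D, StyleGAN adaptation, and layered image editing show results competitive with strong baselines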

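One-sentence summary:

Proposes a framework that uses latent diffusion models as priors for visual synthesis tasks, improving quality over strong baselines.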

There has been tremendous progress in large-scale text-to-image synthesis driven by diffusion models enabling versatile downstream applications such as 3D object synthesis from texts, image editing, and customized generation. We present a generic approach using latent diffusion models as powerful image priors for various visual synthesis tasks. Existing methods that utilize such priors fail to use these models’ full capabilities. To improve this, our core ideas are 1) a feature matching loss between features from different layers of the decoder to provide detailed guidance and 2) a KL divergence loss to regularize the predicted latent features and stabilize the training. We demonstrate the efficacy of our approach on three different applications, text-to-3D, StyleGAN adaptation, and layered image editing. Extensive results show our method compares favorably against baselines.
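A sketch of the two losses named in the abstract, under stated assumptions: the KL term is written as the closed-form KL divergence from a diagonal Gaussian N(mu, sigma^2) to a standard normal, and the feature matching term as a weighted mean L1 distance between per-layer decoder features. Both forms, the per-layer weights, and the function names are assumptions; the paper's exact definitions may differ.

```python
import math


def kl_to_standard_normal(mu, logvar):
    """KL(N(mu, diag(exp(logvar))) || N(0, I)) in closed form."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))


def feature_matching_loss(feats_a, feats_b, weights):
    """Weighted sum over decoder layers of the mean L1 distance between features.

    feats_a / feats_b: per-layer flattened feature lists; weights: hypothetical per-layer weights.
    """
    total = 0.0
    for fa, fb, w in zip(feats_a, feats_b, weights):
        total += w * sum(abs(a - b) for a, b in zip(fa, fb)) / len(fa)
    return total


print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0: already standard normal
print(kl_to_standard_normal([1.0], [0.0]))            # 0.5: mean shifted by 1
print(feature_matching_loss([[1.0, 2.0], [0.0]], [[1.0, 0.0], [3.0]], [1.0, 0.5]))  # 2.5
```

The KL term penalizes latents that drift away from the distribution the diffusion model expects, which is one plausible reading of how it "stabilizes the training".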

https://arxiv.org/abs/2302.08510
