1. [CL] LLaMA: Open and Efficient Foundation Language Models

2. [LG] Revisiting Activation Function Design for Improving Adversarial Robustness at Scale

3. [LG] What Do We Maximize in Self-Supervised Learning And Why Does Generalization Emerge?

4. [LG] Learning ReLU networks to high uniform accuracy is intractable

5. [LG] The Asymmetric Maximum Margin Bias of Quasi-Homogeneous Neural Networks

[CL] DocPrompting: Generating Code by Retrieving the Docs

[CV] Video Probabilistic Diffusion Models in Projected Latent Space

[CL] Active Prompting with Chain-of-Thought for Large Language Models

[LG] Machine Love

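Summary: LLaMA, open and efficient foundation language models; revisiting activation function design to improve adversarial robustness at scale; what self-supervised learning maximizes and why generalization emerges; learning ReLU networks to high uniform accuracy is intractable; the asymmetric maximum-margin bias of quasi-homogeneous neural networks; generating code by retrieving documentation; video probabilistic diffusion models in projected latent space; active prompting with chain-of-thought for large language models; machine love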

1. [CL] LLaMA: Open and Efficient Foundation Language Models

H Touvron, T Lavril, G Izacard…

[Meta AI]

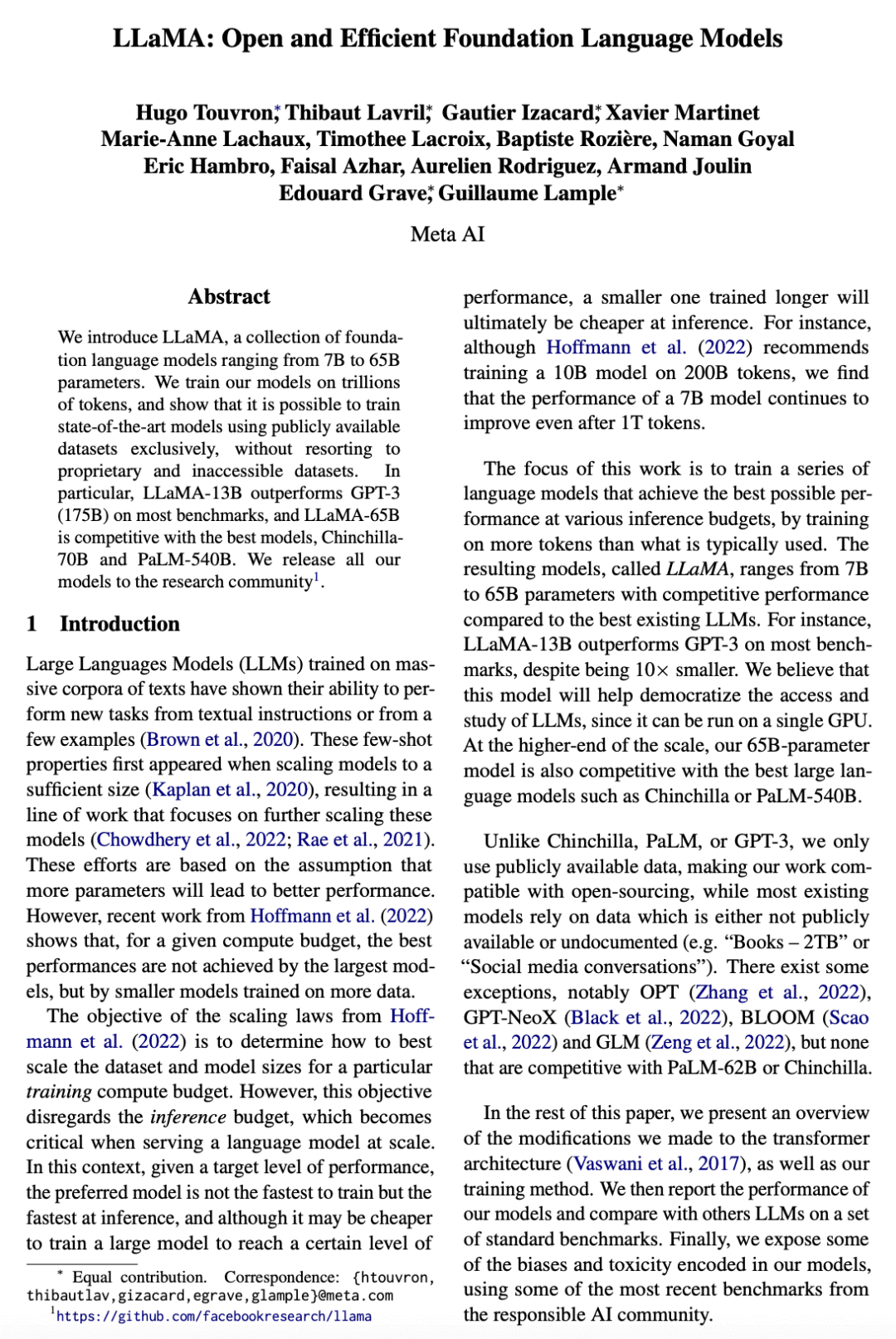

要点:

-

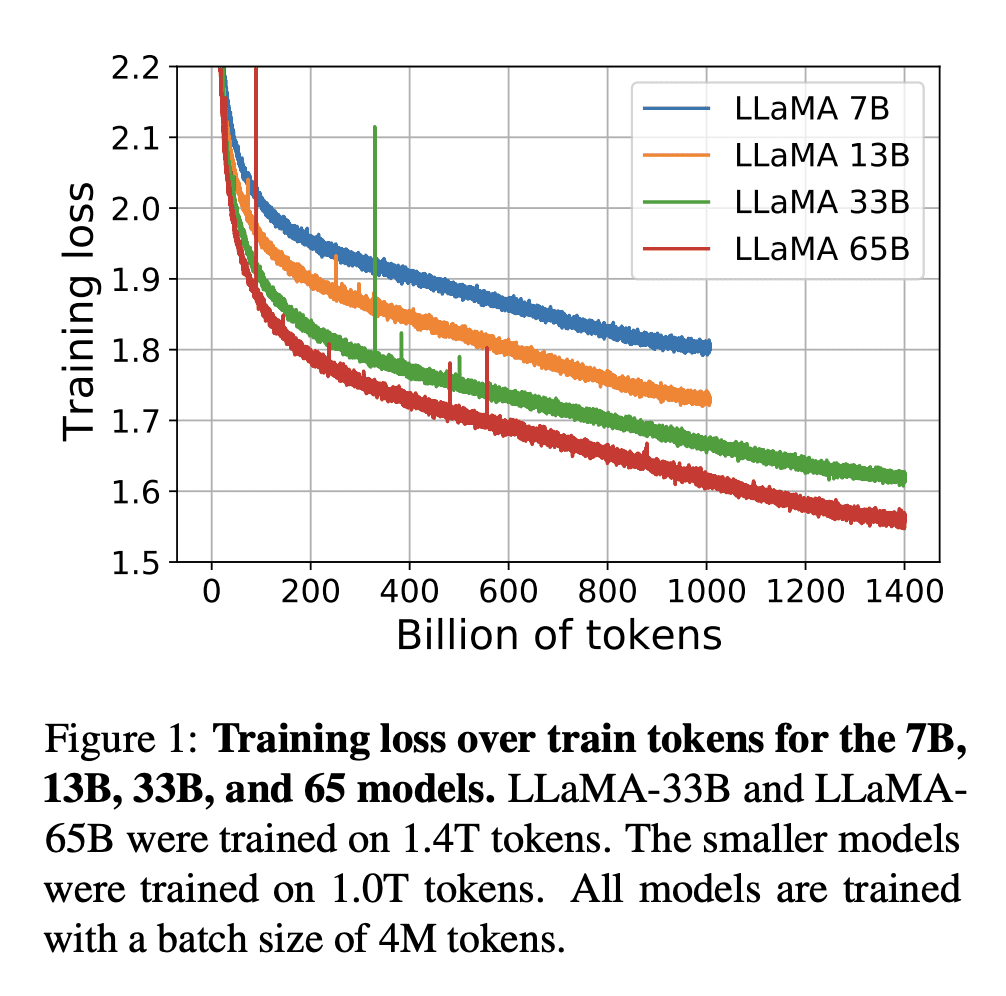

LLaMA 是一个开源的基础语言模型集合,参数范围从7B到65B,完全使用公开的数据集在数万亿 Token 上训练; -

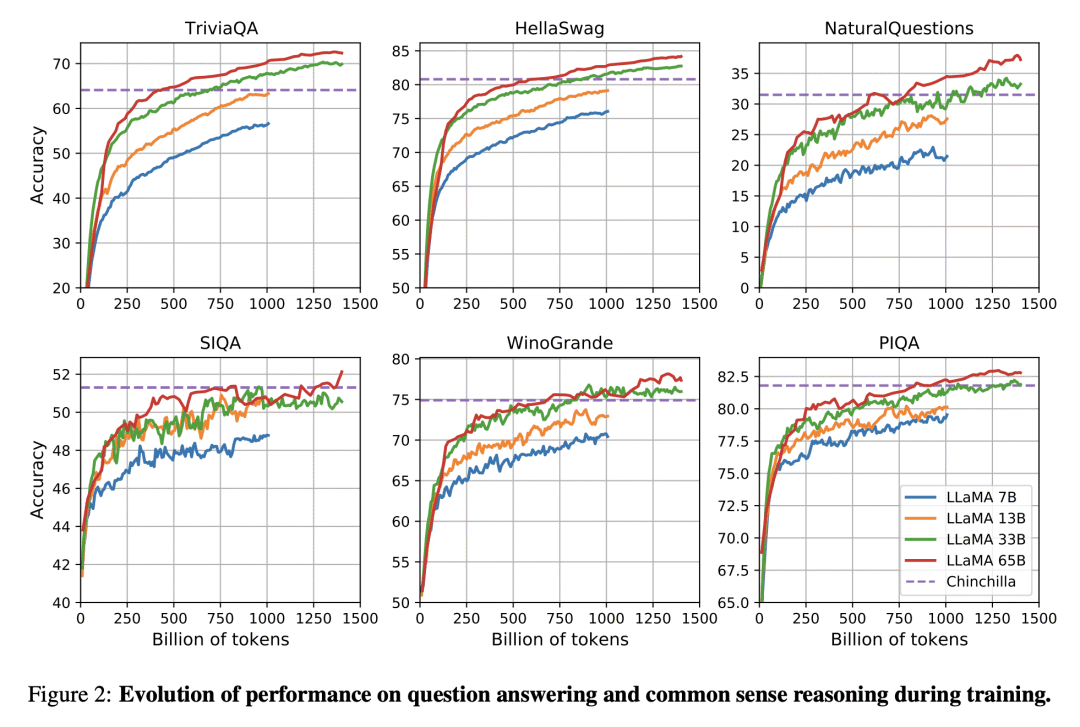

LLaMA-13B 在大多数基准上都优于 GPT-3(175B),而体积却小了 10 倍以上,LLaMA-65B 与最好的模型 Chinchilla70B 和 PaLM-540B 相比有竞争力; -

该研究表明,通过完全在公开可用的数据上进行训练,有可能达到最先进的性能,而不需要求助于专有的数据集,这可能有助于努力提高鲁棒性和减轻已知的问题,如毒性和偏见; -

向研究界发布LLaMA模型,可能会加速大型语言模型的开放,并促进对指令微调的进一步研究,未来的工作将包括发布在更大的预训练语料库上训练的更大的模型。

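One-sentence summary:

LLaMA is a collection of open and efficient foundation language models trained only on publicly available datasets; its 13B model outperforms GPT-3 on most benchmarks.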

We introduce LLaMA, a collection of foundation language models ranging from 7B to 65B parameters. We train our models on trillions of tokens, and show that it is possible to train state-of-the-art models using publicly available datasets exclusively, without resorting to proprietary and inaccessible datasets. In particular, LLaMA-13B outperforms GPT-3 (175B) on most benchmarks, and LLaMA-65B is competitive with the best models, Chinchilla70B and PaLM-540B. We release all our models to the research community.

https://research.facebook.com/publications/llama-open-and-efficient-foundation-language-models/

2. [LG] Revisiting Activation Function Design for Improving Adversarial Robustness at Scale

C Xie, M Tan, B Gong, A Yuille, Q V Le

[University of California, Santa Cruz & Google & Johns Hopkins University]

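Key points:

- Smooth activation functions improve adversarial robustness without sacrificing accuracy in large-scale image classification;
- Replacing ReLU with smooth alternatives substantially improves adversarial robustness on ImageNet, EfficientNet-L1 achieves state-of-the-art results, and smooth activation functions scale well to larger networks;
- Using smooth activation functions in adversarial training makes it better at finding harder adversarial examples and yields better gradient updates for network optimization, improving robustness at scale;
- The study highlights the importance of activation function design for adversarial robustness and encourages more researchers to explore this direction in large-scale image classification.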

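One-sentence summary:

Replacing ReLU with smooth activation functions such as SiLU improves adversarial robustness without sacrificing accuracy, and the benefit holds in large networks such as EfficientNet-L1.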

Modern ConvNets typically use ReLU activation function. Recently smooth activation functions have been used to improve their accuracy. Here we study the role of smooth activation function from the perspective of adversarial robustness. We find that ReLU activation function significantly weakens adversarial training due to its non-smooth nature. Replacing ReLU with its smooth alternatives allows adversarial training to find harder adversarial training examples and to compute better gradient updates for network optimization. We focus our study on the large-scale ImageNet dataset. On ResNet-50, switching from ReLU to the smooth activation function SILU improves adversarial robustness from 33.0% to 42.3%, while also improving accuracy by 0.9% on ImageNet. Smooth activation functions also scale well with larger networks: it helps EfficientNet-L1 to achieve 82.2% accuracy and 58.6% robustness, largely outperforming the previous state-of-the-art defense by 9.5% for accuracy and 11.6% for robustness. Models are available at https://rb.gy/qt8jya.
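To make "smooth" concrete, here is a minimal sketch comparing ReLU with SiLU (x · sigmoid(x)) and their derivatives. It only illustrates the activation functions themselves, not the paper's adversarial training pipeline: ReLU's derivative jumps from 0 to 1 at the origin, while SiLU's derivative is continuous, which is the property the abstract links to better gradient updates. Setting ReLU's derivative to 0 at exactly 0 is a convention assumed here.

```python
import math


def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float) -> float:
    # ReLU is not differentiable at 0; 0 is used there by convention.
    return 1.0 if x > 0 else 0.0


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def silu(x: float) -> float:
    # SiLU (also called Swish): x * sigmoid(x), smooth everywhere.
    return x * sigmoid(x)


def silu_grad(x: float) -> float:
    # d/dx [x * s(x)] = s(x) * (1 + x * (1 - s(x))), continuous at 0.
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))


if __name__ == "__main__":
    eps = 1e-6
    print("ReLU' just left/right of 0:", relu_grad(-eps), relu_grad(eps))
    print("SiLU' just left/right of 0:", round(silu_grad(-eps), 4), round(silu_grad(eps), 4))
```

Running it shows ReLU's derivative jumping from 0.0 to 1.0 across the origin, while SiLU's derivative is 0.5 on both sides.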

https://openreview.net/forum?id=BrKY4Wr6dk2

3. [LG] What Do We Maximize in Self-Supervised Learning And Why Does Generalization Emerge?

R Shwartz-Ziv, R Balestriero, K Kawaguchi, Y LeCun

[New York University & Facebook]

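Key points:

- Provides an information-theoretic understanding of self-supervised learning (SSL) methods and their optimality that applies to the training of deterministic deep networks;
- Based on this understanding, derives a novel generalization bound that provides generalization guarantees for downstream supervised learning tasks;
- Derives insights into different SSL models from first principles and highlights the underlying assumptions of different SSL variants, making it possible to compare methods and offering general guidance to practitioners;
- Introduces new SSL methods based on the analysis and empirically verifies their superior performance.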

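One-sentence summary:

Self-supervised learning methods can be understood through information theory, which offers insight into their construction, optimality, and generalization guarantees.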

In this paper, we provide an information-theoretic (IT) understanding of self-supervised learning methods, their construction, and optimality. As a first step, we demonstrate how IT quantities can be obtained for deterministic networks, as an alternative to the commonly used unrealistic stochastic networks assumption. Secondly, we demonstrate how different SSL models can be (re)discovered based on first principles and highlight what the underlying assumptions of different SSL variants are. Third, we derive a novel generalization bound based on our IT understanding of SSL methods, providing generalization guarantees for the downstream supervised learning task. As a result of this bound, along with our unified view of SSL, we can compare the different approaches and provide general guidelines to practitioners. Consequently, our derivation and insights can contribute to a better understanding of SSL and transfer learning from a theoretical and practical perspective.

https://openreview.net/forum?id=tuE-MnjN7DV

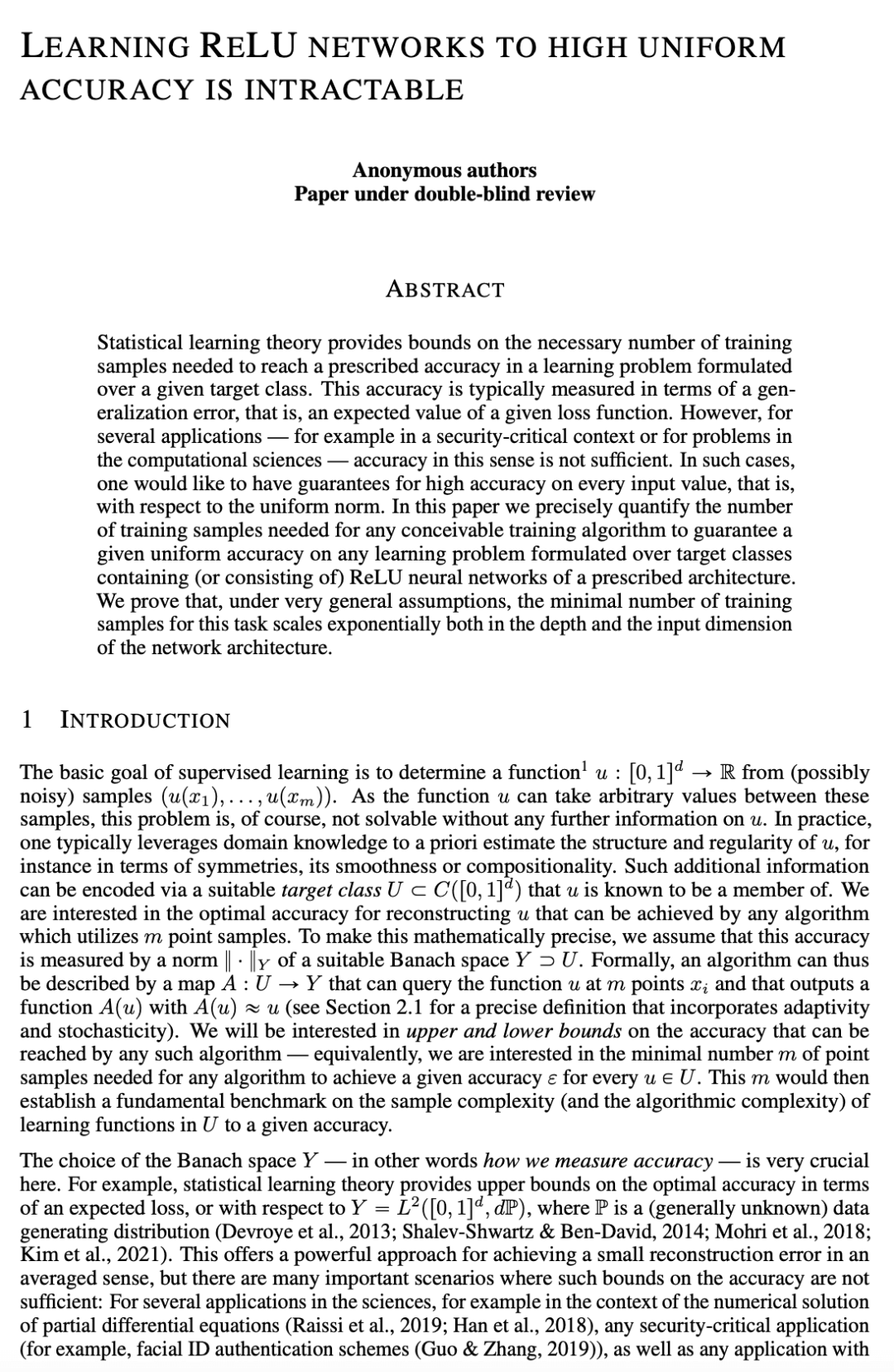

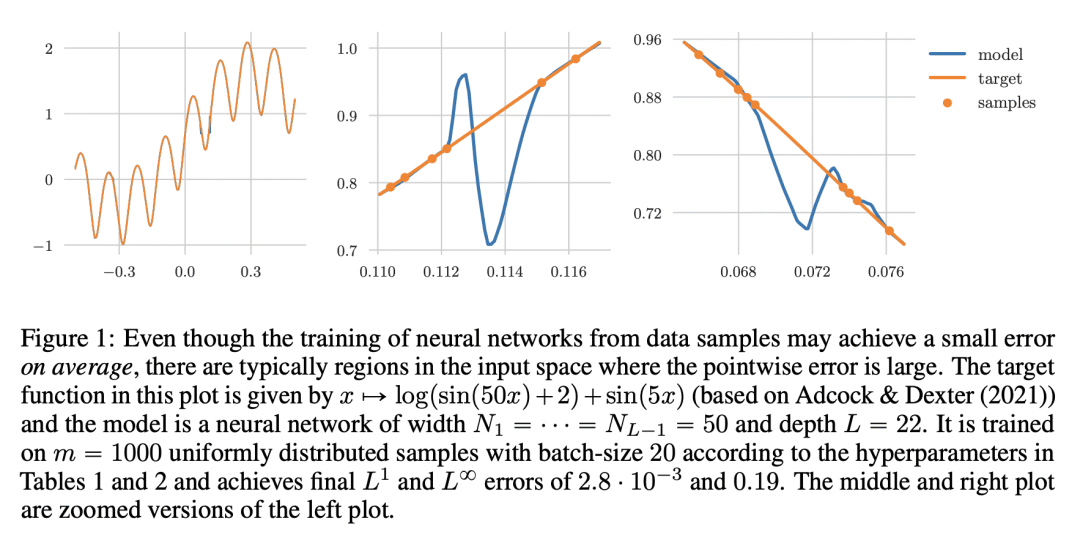

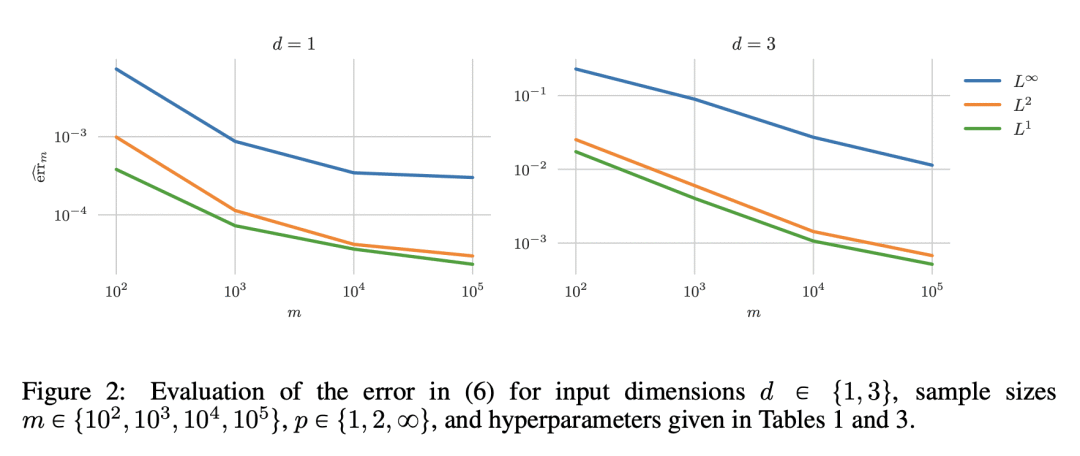

4. [LG] Learning ReLU networks to high uniform accuracy is intractable

J Berner, P Grohs, F Voigtlaender

[University of Vienna]

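Key points:

- Learning ReLU networks to high uniform accuracy is intractable;
- The number of training samples needed to guarantee a given uniform accuracy grows exponentially with both the depth and the input dimension of the network;
- The lower bounds are even more restrictive when the error is measured in the L∞ norm rather than the L2 norm;
- Understanding the sample complexity of neural network classes can help design more reliable deep learning algorithms and assess their limitations in safety-critical settings.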

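One-sentence summary:

Because the minimal number of training samples grows exponentially, especially when the error is measured in the L∞ norm, learning ReLU networks to high uniform accuracy is intractable.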

Statistical learning theory provides bounds on the necessary number of training samples needed to reach a prescribed accuracy in a learning problem formulated over a given target class. This accuracy is typically measured in terms of a generalization error, that is, an expected value of a given loss function. However, for several applications — for example in a security-critical context or for problems in the computational sciences — accuracy in this sense is not sufficient. In such cases, one would like to have guarantees for high accuracy on every input value, that is, with respect to the uniform norm. In this paper we precisely quantify the number of training samples needed for any conceivable training algorithm to guarantee a given uniform accuracy on any learning problem formulated over target classes containing (or consisting of) ReLU neural networks of a prescribed architecture. We prove that, under very general assumptions, the minimal number of training samples for this task scales exponentially both in the depth and the input dimension of the network architecture.
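The gap between average-case and uniform accuracy can be seen in a toy example; this illustrates the intuition only and is not the paper's proof. A one-hidden-layer ReLU network with three neurons can represent a narrow "spike" of height 1. Measured against the target f = 0 on [0, 1], its L2 error shrinks like sqrt(width/3) as the spike narrows, while its uniform (L∞) error stays at 1, and any training set that happens to miss the spike cannot tell it apart from the zero function. The spike location, the widths, and the grid size `n` are arbitrary choices made for this sketch.

```python
import math


def relu(x: float) -> float:
    return max(0.0, x)


def spike(x: float, center: float, width: float) -> float:
    """Hat function of height 1, written as a 3-neuron one-hidden-layer ReLU network."""
    a, b = center - width / 2, center + width / 2
    return (2.0 / width) * (relu(x - a) - 2.0 * relu(x - center) + relu(x - b))


def errors(width: float, n: int = 100_001) -> tuple[float, float]:
    """L2 and L-infinity error of the spike against f = 0 on [0, 1] (midpoint grid)."""
    xs = [(i + 0.5) / n for i in range(n)]  # odd n puts a grid point exactly at 0.5
    vals = [spike(x, 0.5, width) for x in xs]
    l2 = math.sqrt(sum(v * v for v in vals) / n)
    linf = max(abs(v) for v in vals)
    return l2, linf


if __name__ == "__main__":
    for width in (0.1, 0.01, 0.001):
        l2, linf = errors(width)
        print(f"width={width:<6} L2 error={l2:.4f}  Linf error={linf:.4f}")
```

As the width shrinks, the L2 error heads toward 0 while the L∞ error does not move, which hints at why guarantees in the uniform norm require much more information about the target than guarantees on average.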

https://openreview.net/forum?id=nchvKfvNeX0

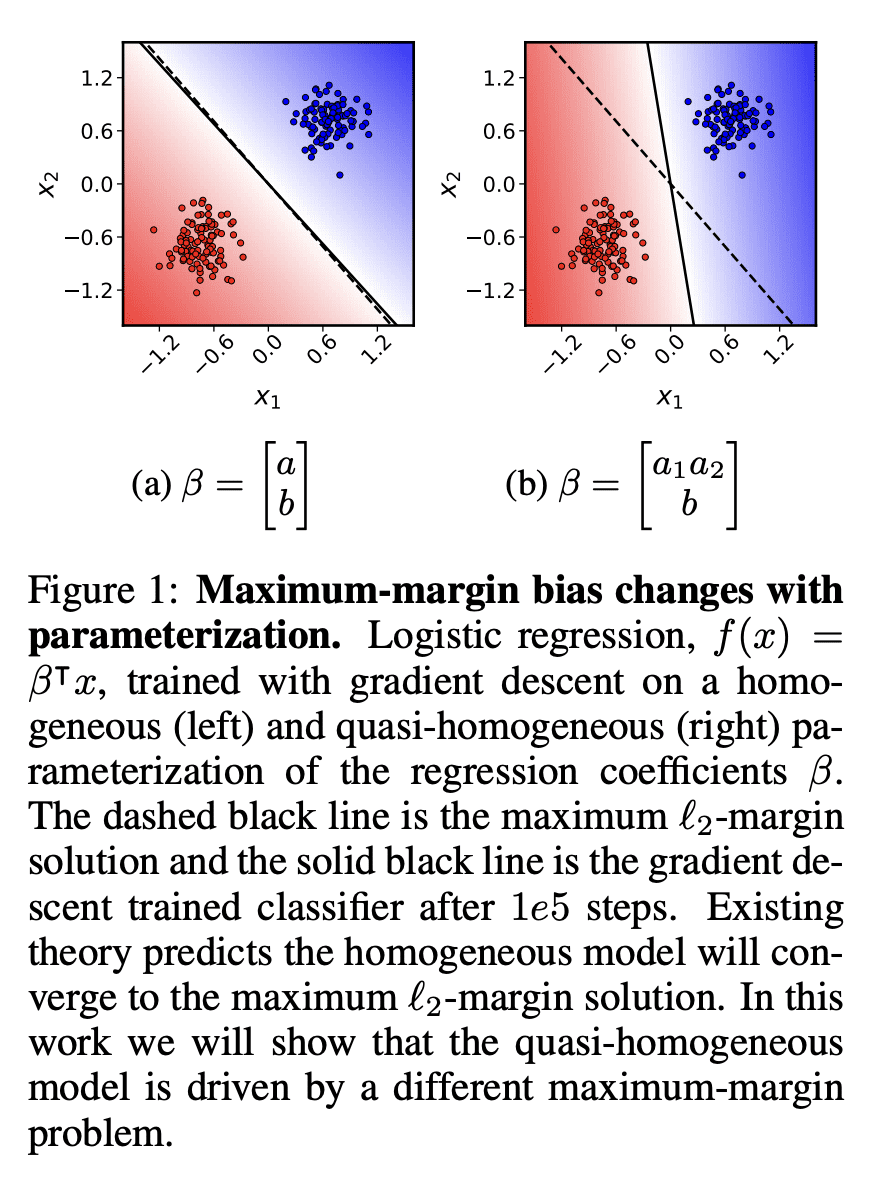

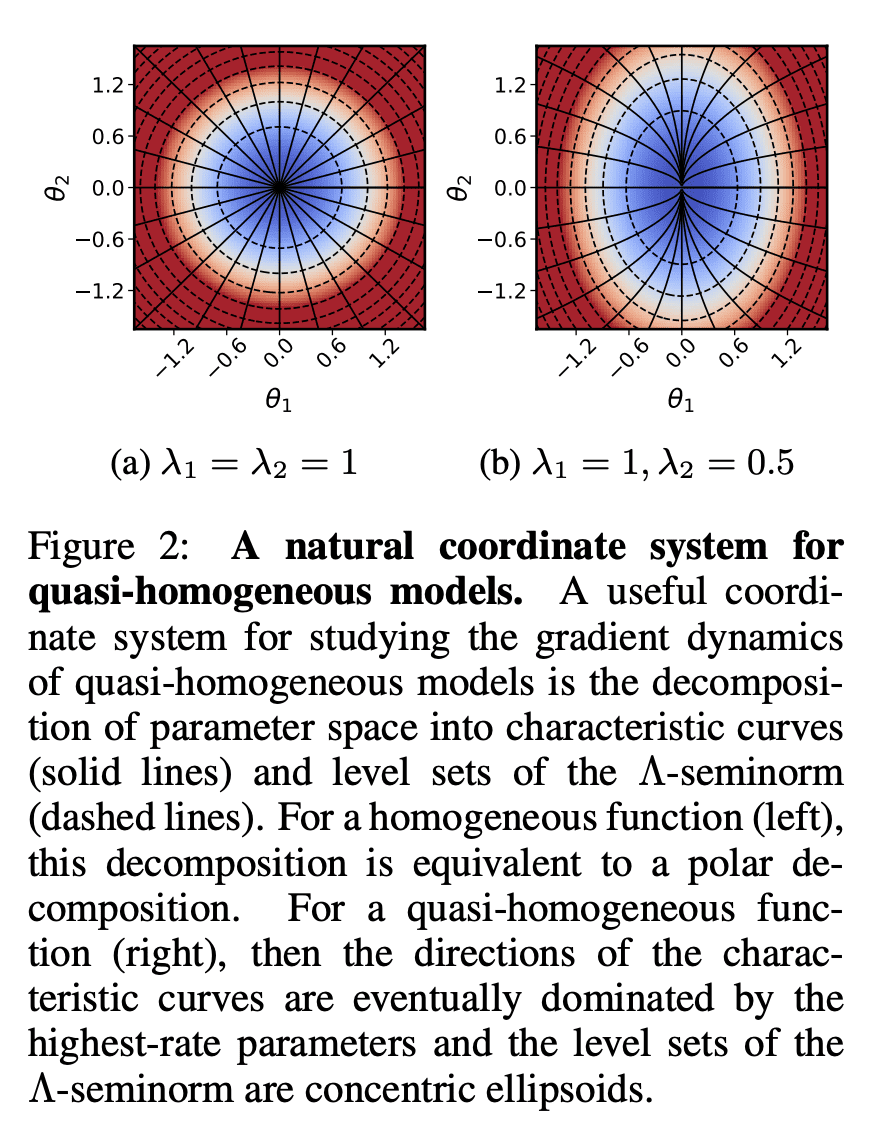

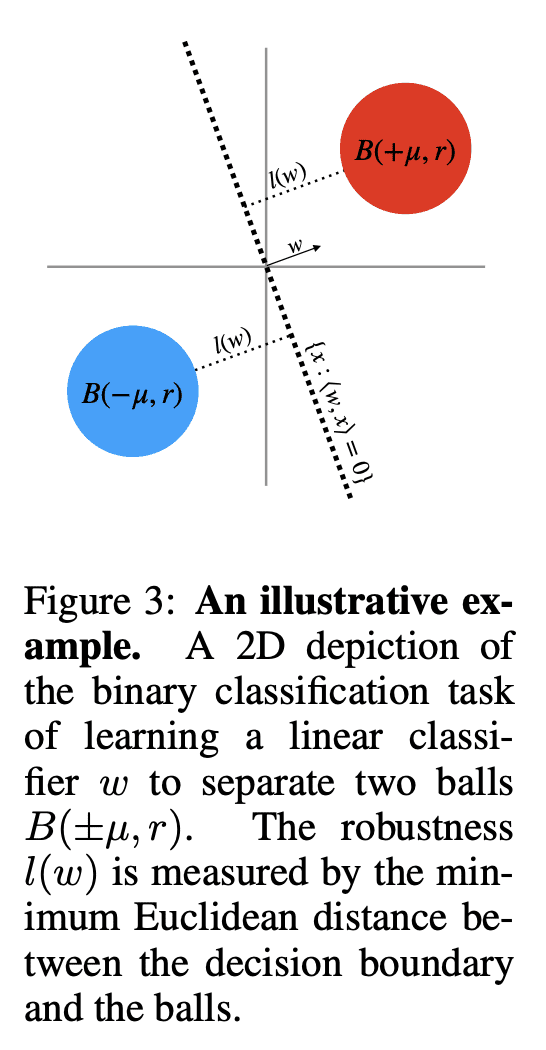

5. [LG] The Asymmetric Maximum Margin Bias of Quasi-Homogeneous Neural Networks

D Kunin, A Yamamura, C Ma, S Ganguli

[Stanford University]

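Key points:

- Introduces the class of quasi-homogeneous models, which covers nearly all modern feedforward architectures with homogeneous activations, bias parameters, residual connections, pooling layers, and normalization layers;
- Gradient flow implicitly favors a subset of the parameters in quasi-homogeneous models, unlike homogeneous models, in which all parameters are treated equally;
- As simple examples demonstrate, this strong preference for minimizing an asymmetric norm can degrade the robustness of quasi-homogeneous models;
- Reveals a universal mechanism behind Neural Collapse in sufficiently expressive networks with normalization layers, arising from the competition between margin maximization in function space and Λ_max-seminorm minimization in parameter space.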

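One-sentence summary:

Explores the maximum-margin bias of quasi-homogeneous neural networks and reveals a universal mechanism behind the empirical phenomenon of Neural Collapse.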

In this work, we explore the maximum-margin bias of quasi-homogeneous neural networks trained with gradient flow on an exponential loss and past a point of separability. We introduce the class of quasi-homogeneous models, which is expressive enough to describe nearly all neural networks with homogeneous activations, even those with biases, residual connections, and normalization layers, while structured enough to enable geometric analysis of its gradient dynamics. Using this analysis, we generalize the existing results of maximum-margin bias for homogeneous networks to this richer class of models. We find that gradient flow implicitly favors a subset of the parameters, unlike in the case of a homogeneous model where all parameters are treated equally. We demonstrate through simple examples how this strong favoritism toward minimizing an asymmetric norm can degrade the robustness of quasi-homogeneous models. On the other hand, we conjecture that this norm-minimization discards, when possible, unnecessary higher-order parameters, reducing the model to a sparser parameterization. Lastly, by applying our theorem to sufficiently expressive neural networks with normalization layers, we reveal a universal mechanism behind the empirical phenomenon of Neural Collapse.
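As a toy check of what "quasi-homogeneous" means, take the hypothetical network f(x) = w2 · relu(w1 · x + b1) + b2 (chosen for illustration, not taken from the paper). Because of the output bias b2, f is not homogeneous: scaling all four parameters at the same rate does not simply rescale the output. It is, however, quasi-homogeneous under one common form of the definition, f(e^{αΛ}θ; x) = e^α f(θ; x), with Λ = diag(1/2, 1/2, 1/2, 1), so the output bias is weighted twice as heavily as the other parameters. Roughly speaking, unequal entries of Λ like this are where the asymmetry in the abstract comes from. The parameter values and test inputs below are arbitrary.

```python
import math

Theta = tuple[float, ...]  # (w1, b1, w2, b2)


def f(theta: Theta, x: float) -> float:
    """Hypothetical toy network: f(x) = w2 * relu(w1 * x + b1) + b2."""
    w1, b1, w2, b2 = theta
    return w2 * max(0.0, w1 * x + b1) + b2


def rescale(theta: Theta, lam: Theta, alpha: float) -> Theta:
    """psi_alpha(theta) = exp(alpha * Lambda) theta with Lambda = diag(lam)."""
    return tuple(math.exp(alpha * l) * t for l, t in zip(lam, theta))


THETA: Theta = (1.3, -0.4, 0.8, 0.25)
LAM_QUASI: Theta = (0.5, 0.5, 0.5, 1.0)    # output bias scales twice as fast
LAM_UNIFORM: Theta = (0.5, 0.5, 0.5, 0.5)  # plain rescaling, broken by the bias b2


def satisfies_scaling(lam: Theta, alpha: float = 0.7, xs=(-1.0, 0.2, 1.5, 3.0)) -> bool:
    """Check f(psi_alpha(theta); x) == e^alpha * f(theta; x) on a few inputs."""
    return all(
        math.isclose(f(rescale(THETA, lam, alpha), x), math.exp(alpha) * f(THETA, x))
        for x in xs
    )


if __name__ == "__main__":
    print("quasi-homogeneous Lambda:", satisfies_scaling(LAM_QUASI))
    print("uniform Lambda:", satisfies_scaling(LAM_UNIFORM))
```

The check passes for the weighted Λ and fails for the uniform one, because the uniform scaling multiplies b2 by only e^{α/2}.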

https://openreview.net/forum?id=IM4xp7kGI5V

Other papers worth noting:

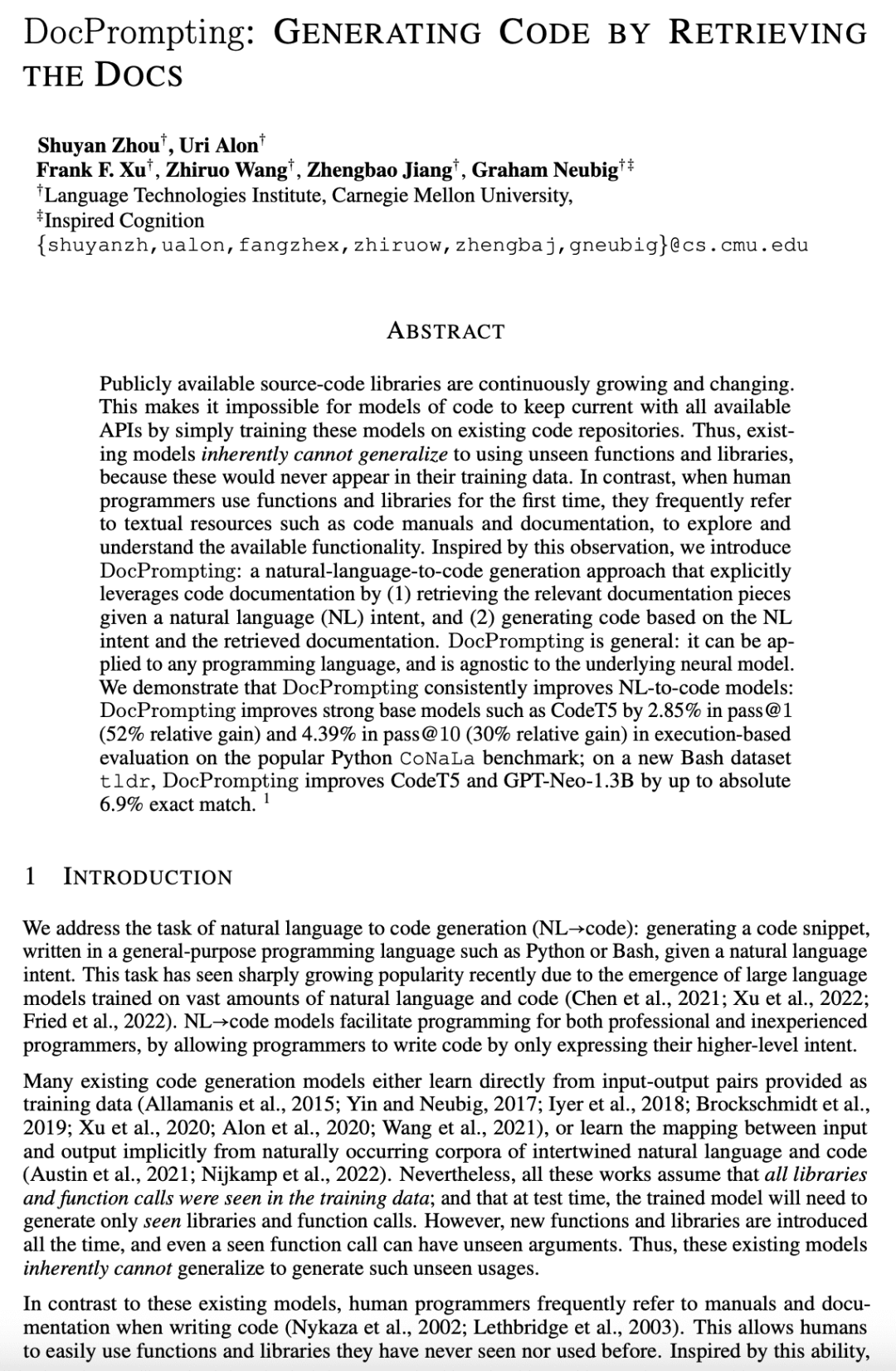

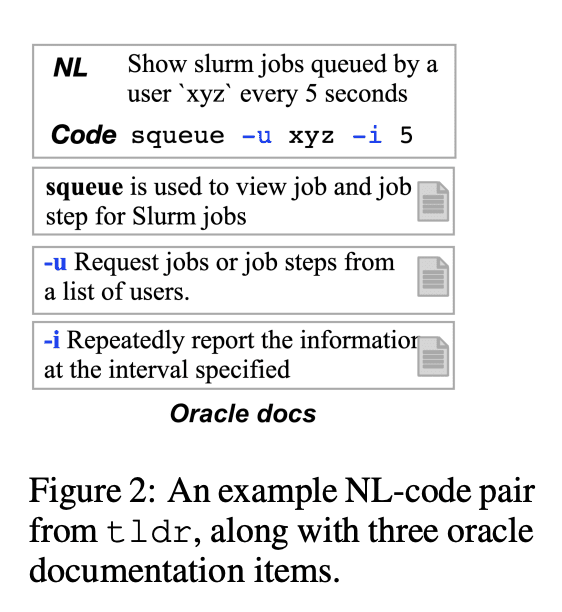

[CL] DocPrompting: Generating Code by Retrieving the Docs

S Zhou, U Alon, F F. Xu, Z Wang, Z Jiang, G Neubig

[CMU]

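Key points:

- Existing code generation models struggle to keep up with the constantly changing and growing set of available APIs, which makes it hard for them to generate code that uses unseen functions and libraries;
- DocPrompting is a natural-language-to-code generation approach that explicitly leverages code documentation: given an NL intent, it retrieves the relevant documentation pieces and then generates code based on the intent and the retrieved documentation;
- DocPrompting is general: it can be applied to any programming language, is agnostic to the underlying neural model, and consistently improves NL-to-code models across tasks and languages;
- DocPrompting is a promising direction for NL-to-code generation: it can use newly added documentation to generate code that calls unseen and unused functions and libraries, and it could be applied to other code-related tasks and similar resources such as tutorials and blog posts.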

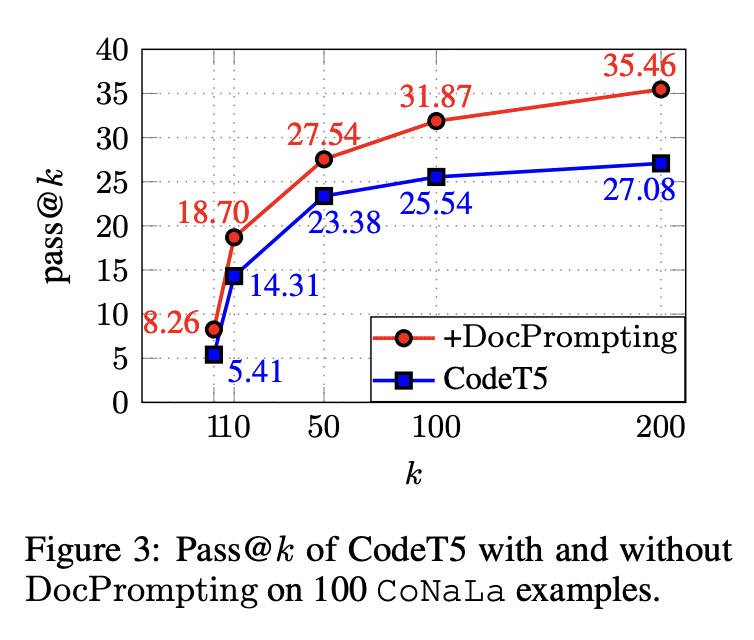

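One-sentence summary:

DocPrompting improves natural-language-to-code models by retrieving relevant documentation, gaining up to 6.9% absolute exact match over strong base models such as CodeT5 on the Bash tldr dataset.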

Publicly available source-code libraries are continuously growing and changing. This makes it impossible for models of code to keep current with all available APIs by simply training these models on existing code repositories. Thus, existing models inherently cannot generalize to using unseen functions and libraries, because these would never appear in the training data. In contrast, when human programmers use functions and libraries for the first time, they frequently refer to textual resources such as code manuals and documentation, to explore and understand the available functionality. Inspired by this observation, we introduce DocPrompting: a natural-language-to-code generation approach that explicitly leverages documentation by (1) retrieving the relevant documentation pieces given an NL intent, and (2) generating code based on the NL intent and the retrieved documentation. DocPrompting is general: it can be applied to any programming language and is agnostic to the underlying neural model. We demonstrate that DocPrompting consistently improves NL-to-code models: DocPrompting improves strong base models such as CodeT5 by 2.85% in pass@1 (52% relative gain) and 4.39% in pass@10 (30% relative gain) in execution-based evaluation on the popular Python CoNaLa benchmark; on a new Bash dataset tldr, DocPrompting improves CodeT5 and GPT-Neo1.3B by up to absolute 6.9% exact match.
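The two-stage pipeline (retrieve, then generate) can be sketched as follows. This is a minimal illustration under strong assumptions: the documentation pool and its entries are hypothetical, a simple word-overlap (Jaccard) score stands in for the retrievers used in the paper, and the code generator is left out entirely. The sketch only shows how retrieved documentation is placed in front of the NL intent to form the generator's input.

```python
import re

# Hypothetical documentation pool: one short snippet per Bash flag.
DOCS = {
    "tar -x": "tar -x: extract files from an archive",
    "tar -z": "tar -z: filter the archive through gzip",
    "ls -l": "ls -l: use a long listing format",
    "grep -r": "grep -r: read all files under each directory, recursively",
}


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(intent: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Step 1: rank documentation snippets by Jaccard overlap with the NL intent."""
    query = tokens(intent)

    def score(doc: str) -> float:
        d = tokens(doc)
        union = query | d
        return len(query & d) / len(union) if union else 0.0

    return sorted(docs.values(), key=score, reverse=True)[:k]


def build_prompt(intent: str, retrieved: list[str]) -> str:
    """Step 2 input: retrieved docs first, then the intent; a code model would continue after '# code:'."""
    context = "\n".join(f"# doc: {d}" for d in retrieved)
    return f"{context}\n# intent: {intent}\n# code:\n"


if __name__ == "__main__":
    intent = "extract files from a gzip archive"
    print(build_prompt(intent, retrieve(intent, DOCS)))
```

For the intent "extract files from a gzip archive", the `tar -x` and `tar -z` snippets are ranked first, so a generator conditioned on this prompt would see the flags it needs even if they were rare in its training data.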

https://arxiv.org/abs/2207.05987

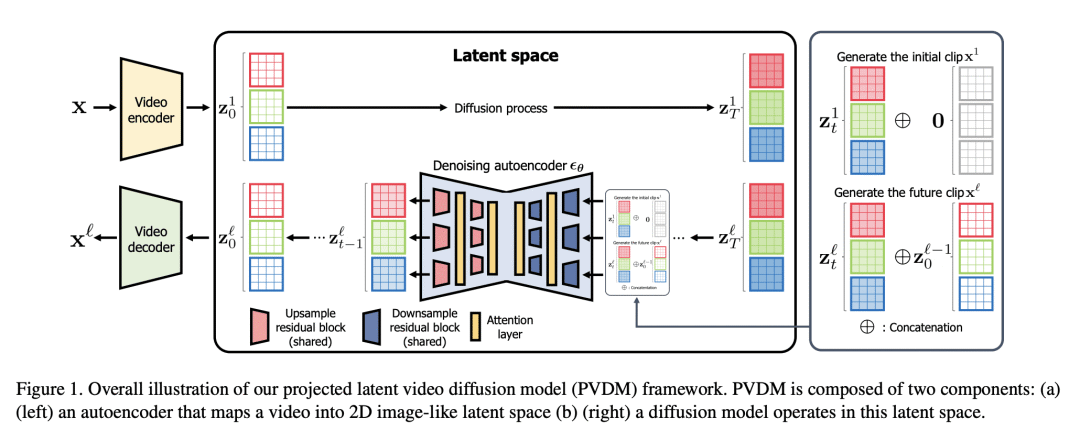

[CV] Video Probabilistic Diffusion Models in Projected Latent Space

S Yu, K Sohn, S Kim, J Shin

[KAIST & Google Research]

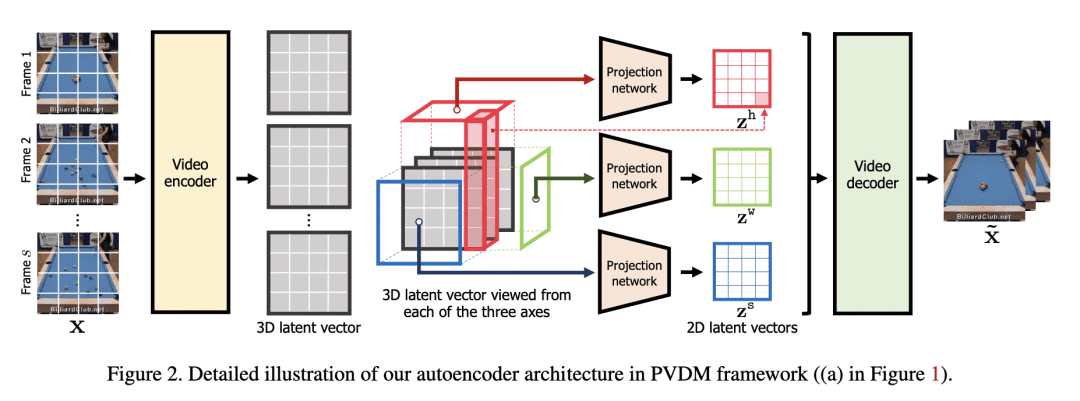

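Key points:

- PVDM is a video generation model that uses a low-dimensional latent space so that it can be trained efficiently on high-resolution videos;
- PVDM consists of an autoencoder, which projects a video into 2D-shaped latent vectors, and a diffusion model architecture for video generation;
- PVDM outperforms previous video synthesis methods in FVD score on popular video generation datasets;
- PVDM avoids computationally expensive 3D convolutional architectures and supports joint training of unconditional generation and frame-conditional generation.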

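One-sentence summary:

PVDM is a new video generation model that uses a probabilistic diffusion model to learn video distributions efficiently in a low-dimensional latent space.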

Despite the remarkable progress in deep generative models, synthesizing high-resolution and temporally coherent videos still remains a challenge due to their high-dimensionality and complex temporal dynamics along with large spatial variations. Recent works on diffusion models have shown their potential to solve this challenge, yet they suffer from severe computation- and memory-inefficiency that limit the scalability. To handle this issue, we propose a novel generative model for videos, coined projected latent video diffusion models (PVDM), a probabilistic diffusion model which learns a video distribution in a low-dimensional latent space and thus can be efficiently trained with high-resolution videos under limited resources. Specifically, PVDM is composed of two components: (a) an autoencoder that projects a given video as 2D-shaped latent vectors that factorize the complex cubic structure of video pixels and (b) a diffusion model architecture specialized for our new factorized latent space and the training/sampling procedure to synthesize videos of arbitrary length with a single model. Experiments on popular video generation datasets demonstrate the superiority of PVDM compared with previous video synthesis methods; e.g., PVDM obtains the FVD score of 639.7 on the UCF-101 long video (128 frames) generation benchmark, which improves 1773.4 of the prior state-of-the-art.
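A rough sense of why a 2D-shaped latent space helps: the sketch below assumes, purely for illustration, that a T×H×W video is summarized by three axis-aligned 2D planes (H×W, T×W, T×H), and uses plain averaging along one axis as a stand-in for PVDM's learned autoencoder. Channels and spatial downsampling are ignored, and the 16×256×256 size is an arbitrary example. The point is the size arithmetic: the factorized representation grows with a sum of pairwise products rather than with the full product T·H·W.

```python
Video = list[list[list[float]]]  # indexed as video[t][h][w]
Plane = list[list[float]]


def project_axis_means(video: Video) -> tuple[Plane, Plane, Plane]:
    """Toy projection: average out one axis at a time (stand-in for a learned encoder)."""
    T, H, W = len(video), len(video[0]), len(video[0][0])
    hw = [[sum(video[t][h][w] for t in range(T)) / T for w in range(W)] for h in range(H)]
    tw = [[sum(video[t][h][w] for h in range(H)) / H for w in range(W)] for t in range(T)]
    th = [[sum(video[t][h][w] for w in range(W)) / W for h in range(H)] for t in range(T)]
    return hw, tw, th


def latent_sizes(T: int, H: int, W: int) -> tuple[int, int]:
    """Number of values in the full cube vs. the three 2D planes."""
    return T * H * W, H * W + T * W + T * H


if __name__ == "__main__":
    cube, planes = latent_sizes(16, 256, 256)
    print(f"cube: {cube:,} values, planes: {planes:,} values, ratio ~{cube / planes:.1f}x")
```

For a 16-frame 256×256 clip this gives 1,048,576 values for the cube versus 73,728 for the three planes, about 14x fewer, before any learned compression.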

https://arxiv.org/abs/2302.07685

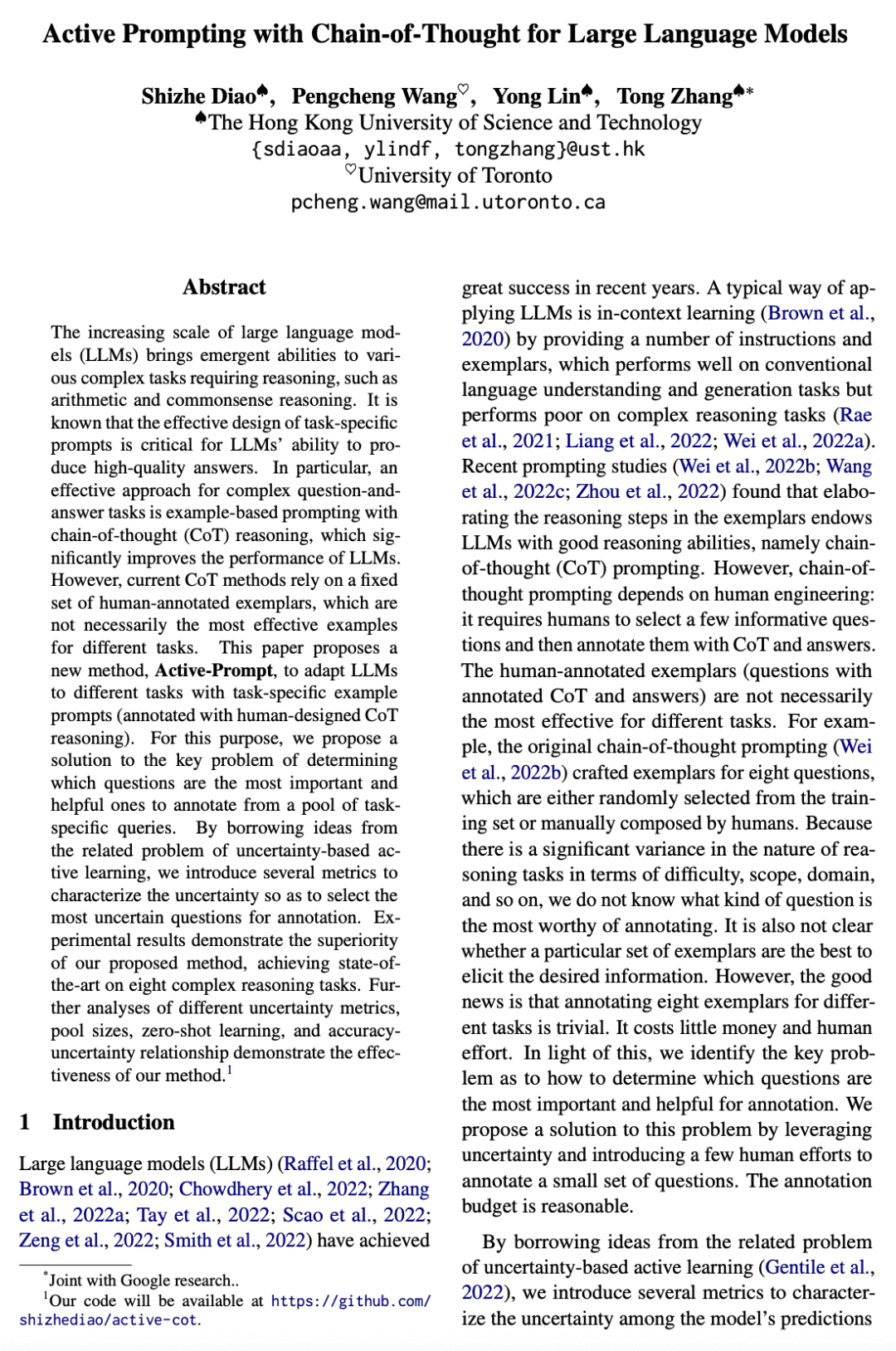

[CL] Active Prompting with Chain-of-Thought for Large Language Models

S Diao, P Wang, Y Lin, T Zhang

[The Hong Kong University of Science and Technology & University of Toronto]

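Key points:

- Active prompting (Active-Prompt) adapts large language models to different tasks using task-specific example prompts;
- It uses an uncertainty-based active selection strategy to choose the most useful questions to annotate from a pool of task-specific queries;
- It introduces four uncertainty estimation strategies: disagreement, entropy, variance, and self-confidence;
- Active-Prompt achieves promising results on eight widely used arithmetic, commonsense, and symbolic reasoning datasets, outperforming competitive baselines by a large margin.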

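One-sentence summary:

Active-Prompt is a new method for adapting large language models (LLMs) to different tasks with task-specific example prompts, reaching state-of-the-art results on eight complex reasoning tasks.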

The increasing scale of large language models (LLMs) brings emergent abilities to various complex tasks requiring reasoning, such as arithmetic and commonsense reasoning. It is known that the effective design of task-specific prompts is critical for LLMs’ ability to produce high-quality answers. In particular, an effective approach for complex question-and-answer tasks is example-based prompting with chain-of-thought (CoT) reasoning, which significantly improves the performance of LLMs. However, current CoT methods rely on a fixed set of human-annotated exemplars, which are not necessarily the most effective examples for different tasks. This paper proposes a new method, Active-Prompt, to adapt LLMs to different tasks with task-specific example prompts (annotated with human-designed CoT reasoning). For this purpose, we propose a solution to the key problem of determining which questions are the most important and helpful ones to annotate from a pool of task-specific queries. By borrowing ideas from the related problem of uncertainty-based active learning, we introduce several metrics to characterize the uncertainty so as to select the most uncertain questions for annotation. Experimental results demonstrate the superiority of our proposed method, achieving state-of-the-art on eight complex reasoning tasks. Further analyses of different uncertainty metrics, pool sizes, zero-shot learning, and accuracy-uncertainty relationship demonstrate the effectiveness of our method. Our code will be available at this https URL.
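Two of the uncertainty metrics listed in the key points, disagreement and entropy, can be sketched as follows. The sketch assumes each candidate question has already been answered k times by the LLM with chain-of-thought sampling (the sampling itself is not shown), and the question IDs and answers are made up. Disagreement is taken here as the fraction of distinct final answers among the k samples, and entropy as the Shannon entropy of the answer frequencies; the most uncertain questions are the ones sent for human CoT annotation.

```python
import math
from collections import Counter


def disagreement(answers: list[str]) -> float:
    """Fraction of distinct final answers among the k sampled answers."""
    return len(set(answers)) / len(answers)


def entropy(answers: list[str]) -> float:
    """Shannon entropy (natural log) of the empirical answer distribution."""
    k = len(answers)
    return -sum((c / k) * math.log(c / k) for c in Counter(answers).values())


def select_for_annotation(pool: dict[str, list[str]], metric, n: int) -> list[str]:
    """Return the n question IDs whose sampled answers are most uncertain under `metric`."""
    return sorted(pool, key=lambda q: metric(pool[q]), reverse=True)[:n]


# Hypothetical pool: question ID -> final answers from k = 5 sampled CoT chains.
POOL = {
    "q1": ["12", "12", "12", "12", "12"],
    "q2": ["7", "9", "7", "8", "7"],
    "q3": ["3", "5", "4", "6", "2"],
}

if __name__ == "__main__":
    for name, metric in (("disagreement", disagreement), ("entropy", entropy)):
        print(name, select_for_annotation(POOL, metric, n=2))
```

Both metrics pick q3 (five different answers) and q2 (three) over q1, where the model answers consistently and an annotated exemplar would add little.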

https://arxiv.org/abs/2302.12246

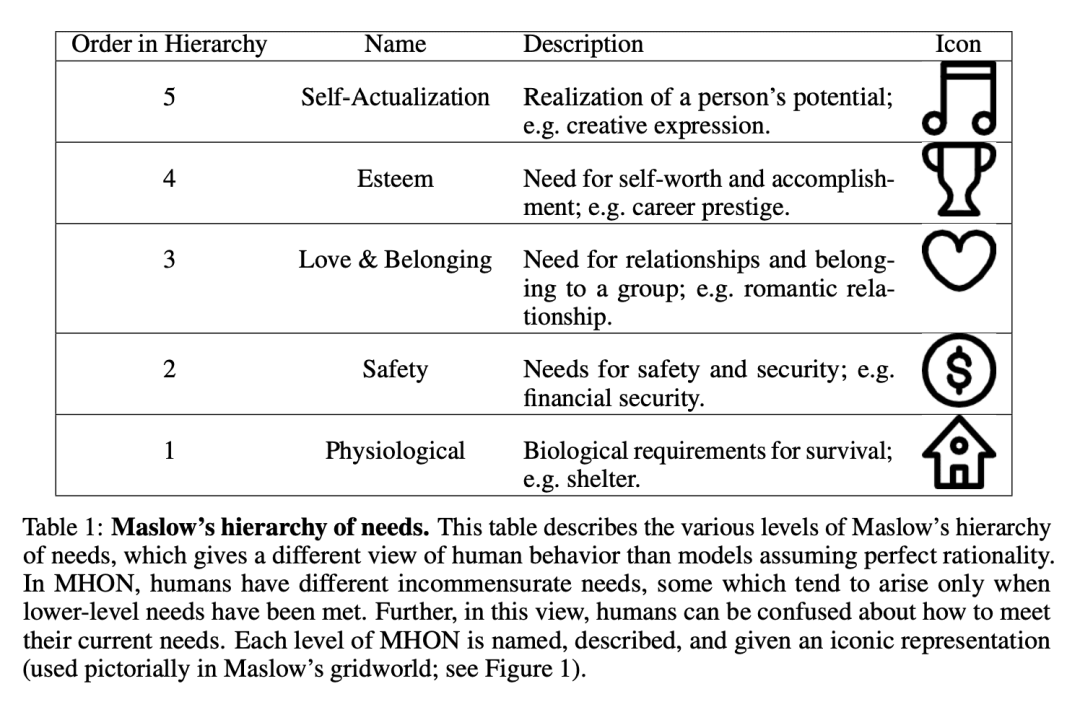

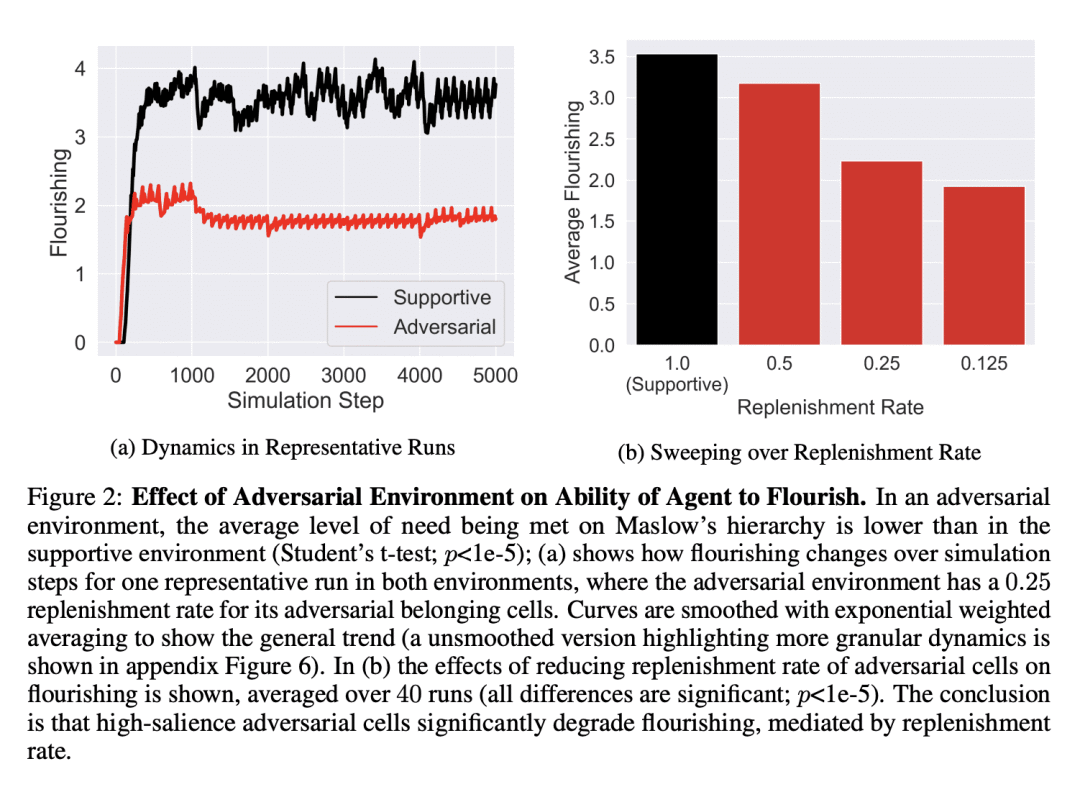

[LG] Machine Love

J Lehman

[AI Objectives Institute]

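Key points:

- Machine learning often optimizes for what we want in the moment, which is at odds with what is known scientifically about human flourishing;
- Explores the concept of "machine love", which aims to provide unconditional support for human growth and development;
- The hope is that the concept of machine love can help align machine learning to support human growth and our highest aspirations;
- There is ample room for interdisciplinary work to better understand how machine learning can be used to support human flourishing.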

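One-sentence summary:

Machine learning currently falls short of its potential because it often optimizes for what we want in the moment, which runs counter to what is known scientifically about human flourishing; this paper explores the concept of "machine love", which aims to provide unconditional support that enables humans to autonomously pursue their own growth and development, in the hope that machine learning can be aligned to support our growth and help us reach our highest aspirations.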

While ML generates much economic value, many of us have problematic relationships with social media and other ML-powered applications. One reason is that ML often optimizes for what we want in the moment, which is easy to quantify but at odds with what is known scientifically about human flourishing. Thus, through its impoverished models of us, ML currently falls far short of its exciting potential, which is for it to help us to reach ours. While there is no consensus on defining human flourishing, from diverse perspectives across psychology, philosophy, and spiritual traditions, love is understood to be one of its primary catalysts. Motivated by this view, this paper explores whether there is a useful conception of love fitting for machines to embody, as historically it has been generative to explore whether a nebulous concept, such as life or intelligence, can be thoughtfully abstracted and reimagined, as in the fields of machine intelligence or artificial life. This paper forwards a candidate conception of machine love, inspired in particular by work in positive psychology and psychotherapy: to provide unconditional support enabling humans to autonomously pursue their own growth and development. Through proof of concept experiments, this paper aims to highlight the need for richer models of human flourishing in ML, provide an example framework through which positive psychology can be combined with ML to realize a rough conception of machine love, and demonstrate that current language models begin to enable embodying qualitative humanistic principles. The conclusion is that though at present ML may often serve to addict, distract, or divide us, an alternative path may be opening up: We may align ML to support our growth, through it helping us to align ourselves towards our highest aspirations.

https://arxiv.org/abs/2302.09248
