Authors: O Patashnik, D Garibi, I Azuri, H Averbuch-Elor, D Cohen-Or

[Tel-Aviv University]

Summary:

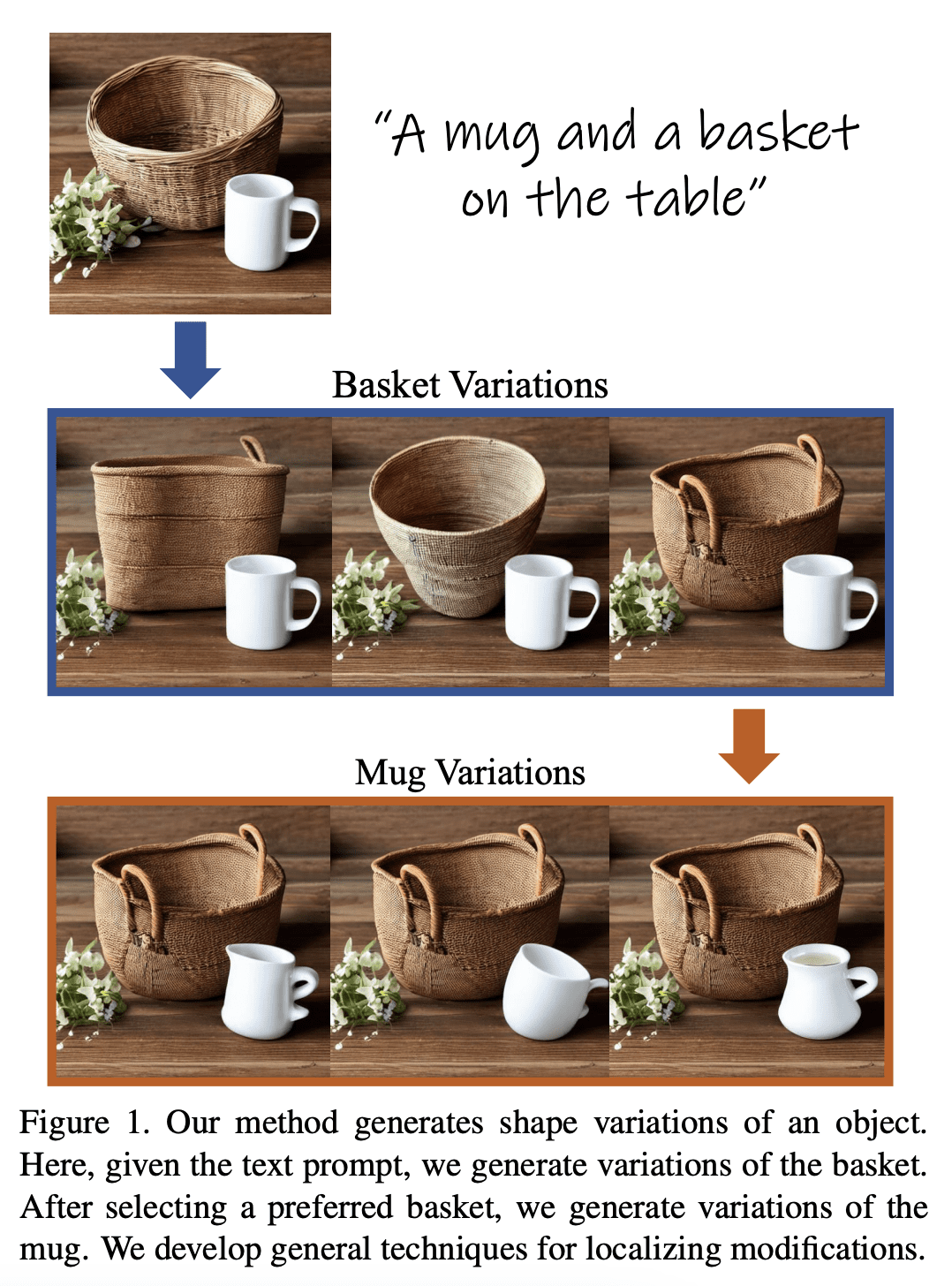

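Presents a technique for controlling the shape variations of a specific object in an image. It addresses a limitation of text-to-image generation: the shape of an individual object cannot be controlled independently of the rest of the image.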

Key points:

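- Proposes a technique that generates a collection of images depicting shape variations of a specific object, enabling object-level shape exploration.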

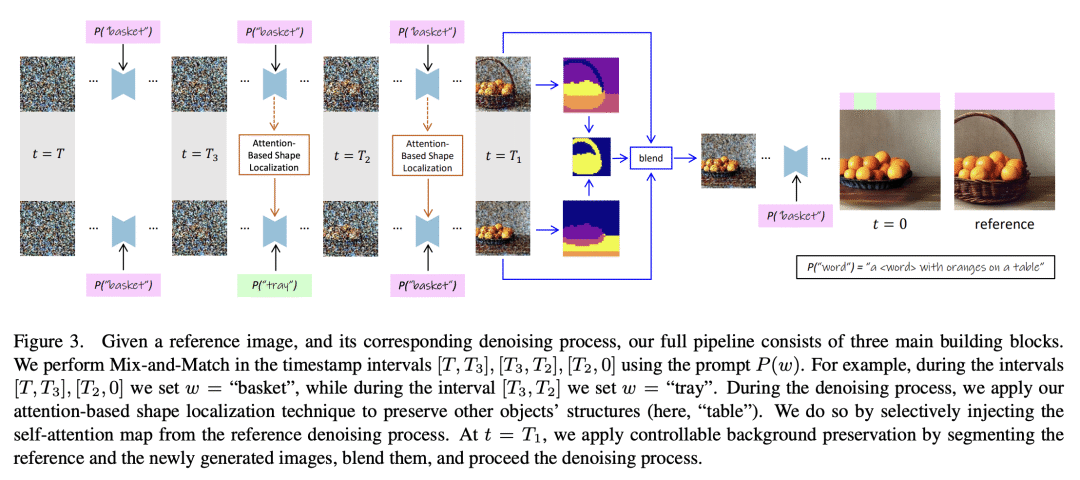

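- Proposes a prompt-mixing technique to generate shape variations, and localizes the image-space operation using self-attention map injection together with the cross-attention layers.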

- Demonstrates that the localization techniques are effective for generating object variations and remain effective beyond that task.

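- Shows that the geometric information in self-attention maps can be used for image segmentation, which in turn guides background preservation.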
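The segmentation idea in the last point can be illustrated with a toy sketch. It assumes that each pixel's self-attention row encodes which region the pixel belongs to, so clustering those rows groups pixels into segments, and each segment is then named after the prompt token that receives the most cross-attention from its pixels. The attention matrices, the token names and the farthest-point k-means below are hypothetical choices for illustration, not the authors' implementation.

```python
# Toy sketch (hypothetical data and helpers): segment pixels by clustering their
# self-attention rows, then name each segment via averaged cross-attention.


def sqdist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(rows, k, iters=20):
    """Plain k-means with deterministic farthest-point initialisation."""
    centers = [list(rows[0])]
    while len(centers) < k:
        # next center: the row farthest from all centers chosen so far
        far = max(rows, key=lambda r: min(sqdist(r, c) for c in centers))
        centers.append(list(far))
    labels = [0] * len(rows)
    for _ in range(iters):
        # assignment step: nearest center for every row
        labels = [min(range(k), key=lambda c: sqdist(r, centers[c])) for r in rows]
        # update step: mean of each non-empty cluster
        for c in range(k):
            members = [r for r, lab in zip(rows, labels) if lab == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def segment(self_attn, cross_attn, tokens, k):
    """Cluster self-attention rows, then label each cluster with the token
    that receives the highest mean cross-attention from its pixels."""
    labels = kmeans(self_attn, k)
    names = {}
    for c in set(labels):
        idx = [i for i, lab in enumerate(labels) if lab == c]
        means = [sum(cross_attn[i][j] for i in idx) / len(idx) for j in range(len(tokens))]
        names[c] = tokens[max(range(len(tokens)), key=means.__getitem__)]
    return [names[lab] for lab in labels]


# Hypothetical 6-pixel example: pixels 0-2 attend to each other (an object),
# pixels 3-5 attend to each other (the background). Each row sums to 1.
SELF_ATTN = [
    [0.30, 0.30, 0.30, 0.03, 0.03, 0.04],
    [0.30, 0.30, 0.30, 0.03, 0.03, 0.04],
    [0.30, 0.30, 0.30, 0.04, 0.03, 0.03],
    [0.03, 0.03, 0.04, 0.30, 0.30, 0.30],
    [0.04, 0.03, 0.03, 0.30, 0.30, 0.30],
    [0.03, 0.04, 0.03, 0.30, 0.30, 0.30],
]
# cross_attn[i][j]: attention from pixel i to prompt token j
CROSS_ATTN = [[0.8, 0.2]] * 3 + [[0.1, 0.9]] * 3
TOKENS = ["dog", "grass"]

seg = segment(SELF_ATTN, CROSS_ATTN, TOKENS, k=2)
object_mask = [name == "dog" for name in seg]  # True where the object is
```

In this toy data `object_mask` marks pixels 0-2 as the object. A mask of this kind is what would let an edit be confined to the object while the remaining pixels keep their original content.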

https://arxiv.org/abs/2303.11306

Text-to-image models give rise to workflows which often begin with an exploration step, where users sift through a large collection of generated images. The global nature of the text-to-image generation process prevents users from narrowing their exploration to a particular object in the image. In this paper, we present a technique to generate a collection of images that depicts variations in the shape of a specific object, enabling an object-level shape exploration process. Creating plausible variations is challenging as it requires control over the shape of the generated object while respecting its semantics. A particular challenge when generating object variations is accurately localizing the manipulation applied over the object’s shape. We introduce a prompt-mixing technique that switches between prompts along the denoising process to attain a variety of shape choices. To localize the image-space operation, we present two techniques that use the self-attention layers in conjunction with the cross-attention layers. Moreover, we show that these localization techniques are general and effective beyond the scope of generating object variations. Extensive results and comparisons demonstrate the effectiveness of our method in generating object variations, and the competence of our localization techniques.
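The prompt-mixing idea described in the abstract can be sketched as a per-step choice of prompt. This is a minimal sketch that assumes three phases: early steps use the original prompt, a middle interval uses a variant prompt in which the object word is swapped for a proxy word, and late steps return to the original prompt. The interval boundaries, the `swap_word` helper and the `denoise_step` callback are hypothetical and stand in for a real diffusion model. This is not the authors' implementation, and it leaves out the attention-based localization.

```python
# Minimal sketch of prompt mixing (hypothetical schedule and helpers): the prompt
# that conditions each denoising step switches partway through the process.
from typing import Callable, Iterable, TypeVar

X = TypeVar("X")


def swap_word(prompt: str, word: str, proxy: str) -> str:
    """Replace the object word with a proxy word, e.g. 'table' -> 'chair'."""
    return " ".join(proxy if w == word else w for w in prompt.split())


def mixing_prompt(t: int, base: str, variant: str, shape_interval: tuple[int, int]) -> str:
    """Prompt used at step t: the variant only inside the (assumed) shape interval."""
    t_low, t_high = shape_interval
    return variant if t_low <= t <= t_high else base


def prompt_mixing(
    x_T: X,
    timesteps: Iterable[int],
    base: str,
    variant: str,
    shape_interval: tuple[int, int],
    denoise_step: Callable[[X, int, str], X],
) -> X:
    """Run denoising from x_T, switching prompts along the way.

    denoise_step(x, t, prompt) is a placeholder for one step of a real
    text-to-image diffusion model; it is not a specific library API.
    """
    x = x_T
    for t in timesteps:  # expected to run from T down to 1
        x = denoise_step(x, t, mixing_prompt(t, base, variant, shape_interval))
    return x
```

For example, with `timesteps=range(10, 0, -1)` and `shape_interval=(4, 7)`, steps 10 to 8 and 3 to 1 are conditioned on the original prompt and steps 7 to 4 on the variant. Reusing the same starting noise `x_T` with several proxy words (say "chair", "bench", "stool") would give one candidate shape per word. Those words and the interval are illustrative assumptions.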
