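Text representation plays a key role in the RAG pipeline.

In "Some frontier views on deploying large models: plus several approaches to knowledge-graph-enhanced LLM question answering and takeaways from the CEVAL leaderboard evaluation" (https://mp.weixin.qq.com/s/bgR8cjeACLN0TCLjRN8jNQ), we discussed the document parsing stage and mentioned open-source projects dedicated to recognizing math formulas, such as Nougat: https://facebookresearch.github.io/nougat/ .

Because both math formulas and tables can be expressed as plain text in Markdown, Nougat takes an image rendered from a single PDF page as input and outputs a plain-text sequence in Markdown (MMD, Mathpix Markdown) format for that page.

For training data, the researchers collected scientific-paper PDFs from arXiv, PubMed Central, and similar platforms, together with the LaTeX sources, for a total of more than 8 million pages. The Markdown was split according to the page breaks in the PDF files, and each page was rasterized into an image to create the final paired dataset.

Today we look at another PDF-to-Markdown project (conclusion up front: it does not support Chinese). We walk through its basic implementation flow (its modules and the projects it builds on) and how it is evaluated, for your reference.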


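I. Implementation flow of Marker, a Markdown conversion tool

Marker is a tool that converts PDF, EPUB, and MOBI documents to Markdown. Repository: github.com/VikParuchuri/marker

Its main features: it is optimized for many kinds of PDF documents such as books and scientific papers, it removes headers, footers, and other redundant elements, it converts most equations to LaTeX, and it formats code blocks and tables.

The basic implementation flow is as follows.

First, extract the text, running OCR when necessary (heuristics, Tesseract).

Second, detect the page layout (layout segmenter, column detector).

Related models: https://huggingface.co/vikp/layout_segmenter and https://huggingface.co/vikp/column_detector

For example: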

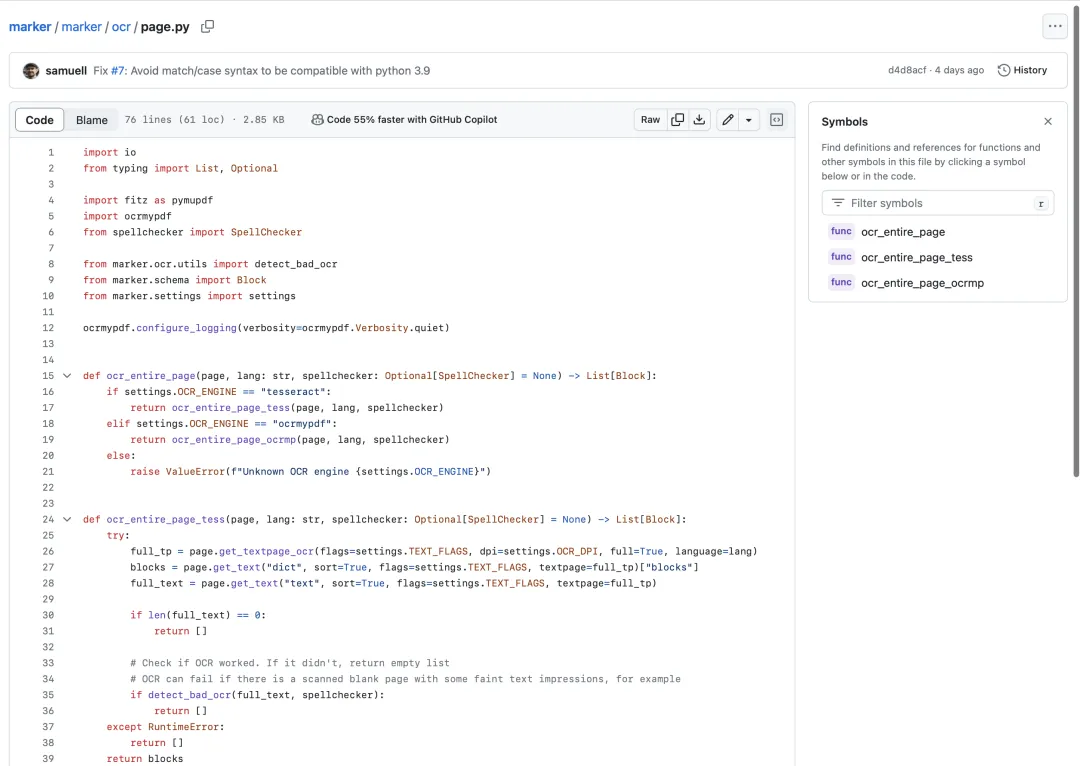

https://github.com/VikParuchuri/marker/blob/master/marker/ocr/page.py

Then, clean and format each block (heuristics, Nougat).

Related model: https://huggingface.co/facebook/nougat-base

For example:

https://github.com/VikParuchuri/marker/blob/master/marker/cleaners

Finally, merge the blocks and post-process the complete text (heuristics, pdf_postprocessor).

Related model: https://huggingface.co/vikp/pdf_postprocessor_t5

For example:

https://github.com/VikParuchuri/marker/blob/master/marker/postprocessors/editor.py
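The heuristic part of the final post-processing step can be illustrated with a small, standard-library-only sketch. The two blank-line regular expressions are the ones that appear in the conversion code in this article. `replace_bullets_sketch` and `BULLET_CHARS` are hypothetical stand-ins written for illustration and are not Marker's actual `replace_bullets` implementation.

```python
import re

# Hypothetical set of bullet glyphs; Marker's real list may differ.
BULLET_CHARS = "•●○■▪"


def collapse_blank_lines(text: str) -> str:
    """Collapse runs of empty lines left behind when empty blocks are joined."""
    # Three or more consecutive newlines become exactly one blank line.
    text = re.sub(r'\n{3,}', '\n\n', text)
    # Same idea for lines that contain only a single whitespace character.
    text = re.sub(r'(\n\s){3,}', '\n\n', text)
    return text


def replace_bullets_sketch(text: str) -> str:
    """Replace a leading bullet glyph on each line with a Markdown '- '."""
    pattern = rf"^[{re.escape(BULLET_CHARS)}][ \t]*"
    return re.sub(pattern, "- ", text, flags=re.MULTILINE)


if __name__ == "__main__":
    raw = "Intro\n\n\n\n• first item\n● second item"
    print(replace_bullets_sketch(collapse_blank_lines(raw)))
```

Keeping these steps as plain string transforms means they can run before the editor model sees the text, so the model only has to handle the errors that simple rules cannot fix.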

The conversion code:

    import re
    from typing import Dict, List, Optional, Tuple

    import fitz as pymupdf  # PyMuPDF

    # `settings` and the helper functions called below (find_filetype,
    # get_text_blocks, detect_document_block_types, ...) come from the
    # marker package.


    def convert_single_pdf(
        fname: str,
        model_lst: List,
        max_pages=None,
        metadata: Optional[Dict] = None,
        parallel_factor: int = 1
    ) -> Tuple[str, Dict]:
        lang = settings.DEFAULT_LANG
        if metadata:
            lang = metadata.get("language", settings.DEFAULT_LANG)

        # Use the Tesseract language if available (Tesseract performs the OCR)
        tess_lang = settings.TESSERACT_LANGUAGES.get(lang, "eng")
        spell_lang = settings.SPELLCHECK_LANGUAGES.get(lang, None)
        if "eng" not in tess_lang:
            tess_lang = f"eng+{tess_lang}"

        # Output metadata; PyMuPDF reads the document content
        out_meta = {"language": lang}

        filetype = find_filetype(fname)
        if filetype == "other":
            return "", out_meta

        out_meta["filetype"] = filetype

        doc = pymupdf.open(fname, filetype=filetype)
        if filetype != "pdf":
            # Convert EPUB/MOBI input to PDF first
            conv = doc.convert_to_pdf()
            doc = pymupdf.open("pdf", conv)

        blocks, toc, ocr_stats = get_text_blocks(
            doc,
            tess_lang,
            spell_lang,
            max_pages=max_pages,
            parallel=parallel_factor * settings.OCR_PARALLEL_WORKERS
        )

        out_meta["toc"] = toc
        out_meta["pages"] = len(blocks)
        out_meta["ocr_stats"] = ocr_stats
        if len([b for p in blocks for b in p.blocks]) == 0:
            print(f"Could not extract any text blocks for {fname}")
            return "", out_meta

        # Unpack models from the list and classify the text blocks
        nougat_model, layoutlm_model, order_model, edit_model = model_lst

        block_types = detect_document_block_types(
            doc,
            blocks,
            layoutlm_model,
            batch_size=settings.LAYOUT_BATCH_SIZE * parallel_factor
        )

        # Find headers and footers
        bad_span_ids = filter_header_footer(blocks)
        out_meta["block_stats"] = {"header_footer": len(bad_span_ids)}

        annotate_spans(blocks, block_types)

        # Dump debug data if flags are set
        dump_bbox_debug_data(doc, blocks)

        blocks = order_blocks(
            doc,
            blocks,
            order_model,
            batch_size=settings.ORDERER_BATCH_SIZE * parallel_factor
        )

        # Fix code blocks
        code_block_count = identify_code_blocks(blocks)
        out_meta["block_stats"]["code"] = code_block_count
        indent_blocks(blocks)

        # Fix table blocks
        merge_table_blocks(blocks)
        table_count = create_new_tables(blocks)
        out_meta["block_stats"]["table"] = table_count

        for page in blocks:
            for block in page.blocks:
                block.filter_spans(bad_span_ids)
                block.filter_bad_span_types()

        # Replace equation blocks with Nougat output
        filtered, eq_stats = replace_equations(
            doc,
            blocks,
            block_types,
            nougat_model,
            batch_size=settings.NOUGAT_BATCH_SIZE * parallel_factor
        )
        out_meta["block_stats"]["equations"] = eq_stats

        # Copy to avoid changing original data
        merged_lines = merge_spans(filtered)
        text_blocks = merge_lines(merged_lines, filtered)
        text_blocks = filter_common_titles(text_blocks)
        full_text = get_full_text(text_blocks)

        # Handle empty blocks being joined
        full_text = re.sub(r'\n{3,}', '\n\n', full_text)
        full_text = re.sub(r'(\n\s){3,}', '\n\n', full_text)

        # Replace bullet characters with a -
        full_text = replace_bullets(full_text)

        # Postprocess text with the editor model to clean up the output
        full_text, edit_stats = edit_full_text(
            full_text,
            edit_model,
            batch_size=settings.EDITOR_BATCH_SIZE * parallel_factor
        )
        out_meta["postprocess_stats"] = {"edit": edit_stats}

        return full_text, out_meta

II. How Marker is evaluated

Next, let's look at how the tool is evaluated. The scoring logic lives in https://github.com/VikParuchuri/marker/blob/master/marker/benchmark/scoring.py:

    import math
    import re

    from rapidfuzz import fuzz, distance

    CHUNK_MIN_CHARS = 25


    # Tokenize the text first
    def tokenize(text):
        # Combined pattern
        pattern = r'([^\w\s\d\'])|([\w\']+)|(\d+)|(\n+)|( +)'
        result = re.findall(pattern, text)
        # Flatten the result and filter out empty strings
        flattened_result = [item for sublist in result for item in sublist if item]
        return flattened_result


    # Split the text into chunks (one per line, dropping short lines)
    def chunk_text(text):
        chunks = text.split("\n")
        chunks = [c for c in chunks if c.strip() and len(c) > CHUNK_MIN_CHARS]
        return chunks


    # Compute the overlap between hypothesis chunks and reference chunks
    def overlap_score(hypothesis_chunks, reference_chunks):
        length_modifier = len(hypothesis_chunks) / len(reference_chunks)
        search_distance = max(len(reference_chunks) // 5, 10)
        chunk_scores = []
        chunk_weights = []
        for i, hyp_chunk in enumerate(hypothesis_chunks):
            max_score = 0
            chunk_weight = 1
            i_offset = int(i * length_modifier)
            # Only search reference chunks near the expected position
            chunk_range = range(max(0, i_offset-search_distance), min(len(reference_chunks), i_offset+search_distance))
            for j in chunk_range:
                ref_chunk = reference_chunks[j]
                score = fuzz.ratio(hyp_chunk, ref_chunk, score_cutoff=30) / 100
                if score > max_score:
                    max_score = score
                    # Longer reference chunks carry more weight
                    chunk_weight = math.sqrt(len(ref_chunk))
            chunk_scores.append(max_score)
            chunk_weights.append(chunk_weight)
        chunk_scores = [chunk_scores[i] * chunk_weights[i] for i in range(len(chunk_scores))]
        return chunk_scores, chunk_weights


    # Normalize the score
    def score_text(hypothesis, reference):
        # Returns a 0-1 alignment score
        hypothesis_chunks = chunk_text(hypothesis)
        reference_chunks = chunk_text(reference)
        chunk_scores, chunk_weights = overlap_score(hypothesis_chunks, reference_chunks)
        return sum(chunk_scores) / sum(chunk_weights)
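To make the scoring idea easier to try without installing anything, here is a simplified sketch that uses only the standard library. It is not the benchmark's implementation: `difflib.SequenceMatcher` is swapped in for `rapidfuzz.fuzz.ratio` (so the numbers will differ from the benchmark's), every reference chunk is searched instead of a sliding window, and an empty-input guard is added. The names `similarity` and `score_text_sketch` are hypothetical.

```python
import math
from difflib import SequenceMatcher

CHUNK_MIN_CHARS = 25
SCORE_CUTOFF = 0.30  # mirrors score_cutoff=30 in the original code


def chunk_text(text):
    """Split into lines and keep only non-trivial ones."""
    return [c for c in text.split("\n") if c.strip() and len(c) > CHUNK_MIN_CHARS]


def similarity(a, b):
    """Stand-in for fuzz.ratio: a 0-1 similarity, zeroed below the cutoff."""
    ratio = SequenceMatcher(None, a, b).ratio()
    return ratio if ratio >= SCORE_CUTOFF else 0.0


def score_text_sketch(hypothesis, reference):
    """Weighted average of each hypothesis chunk's best match in the reference."""
    hyp_chunks = chunk_text(hypothesis)
    ref_chunks = chunk_text(reference)
    if not hyp_chunks or not ref_chunks:
        return 0.0  # added guard; the original would divide by zero here

    weighted_scores, weights = [], []
    for hyp in hyp_chunks:
        best, weight = 0.0, 1.0
        for ref in ref_chunks:  # simplification: no positional search window
            score = similarity(hyp, ref)
            if score > best:
                best = score
                weight = math.sqrt(len(ref))  # longer chunks count more
        weighted_scores.append(best * weight)
        weights.append(weight)
    return sum(weighted_scores) / sum(weights)


if __name__ == "__main__":
    ref = "Marker converts PDF documents into Markdown text.\nIt removes headers and footers from every page."
    hyp = "Marker converts PDF docurnents into Markdovvn text.\nIt removes headers and footers from every page."
    print(round(score_text_sketch(hyp, ref), 3))
```

The square-root weighting lets long paragraphs influence the score more than short lines without letting a single very long chunk dominate it.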

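Summary

The tool does have a limitation: it only supports languages similar to English (Spanish, French, German, Russian, and so on) and does not support Chinese, Japanese, or Korean. Anyone who needs Chinese support will have to adapt it themselves.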
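One place such an adaptation would likely start is the OCR language lookup seen in `convert_single_pdf`. The sketch below reproduces that lookup logic against a plain dictionary. The dictionary contents are illustrative assumptions (the real `settings.TESSERACT_LANGUAGES` may use different keys), and the `"Chinese": "chi_sim"` entry is a hypothetical addition using Tesseract's Simplified Chinese language code. Changing the OCR language alone is probably not enough, since the layout, ordering, and post-processing models would also need to handle Chinese.

```python
# Illustrative mapping; not copied from Marker's settings.
TESSERACT_LANGUAGES_SKETCH = {
    "English": "eng",
    "Spanish": "spa",
    "French": "fra",
    "German": "deu",
    "Russian": "rus",
    "Chinese": "chi_sim",  # hypothetical addition for Simplified Chinese
}


def resolve_tess_lang(lang, table=TESSERACT_LANGUAGES_SKETCH):
    """Mirror the lookup in convert_single_pdf: default to 'eng' and
    always keep English alongside the document language."""
    tess_lang = table.get(lang, "eng")
    if "eng" not in tess_lang:
        tess_lang = f"eng+{tess_lang}"
    return tess_lang


if __name__ == "__main__":
    for name in ("English", "Chinese", "Unknown"):
        print(name, "->", resolve_tess_lang(name))
```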

References

1. github.com/VikParuchuri/marker
