1、[CV] Tuning computer vision models with task rewards

2、[LG] Topological Neural Discrete Representation Learning à la Kohonen

3、[LG] À-la-carte Prompt Tuning (APT): Combining Distinct Data Via Composable Prompting

4、[AS] ACE-VC: Adaptive and Controllable Voice Conversion using Explicitly Disentangled Self-supervised Speech Representations

5、[CV] Universal Guidance for Diffusion Models

[CV] PersonNeRF: Personalized Reconstruction from Photo Collections

[CV] Efficiency 360: Efficient Vision Transformers

[LG] Thermodynamic AI and the fluctuation frontier

[CL] Large Language Models Fail on Trivial Alterations to Theory-of-Mind Tasks

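Summary: tuning computer vision models with task rewards; topological neural discrete representation learning; À-la-carte Prompt Tuning (APT) for combining distinct data via composable prompts; adaptive and controllable voice conversion using explicitly disentangled self-supervised speech representations; universal guidance for diffusion models; personalized reconstruction from photo collections; efficient vision transformers; Thermodynamic AI; large language models fail on trivial alterations to theory-of-mind tasks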

1、[CV] Tuning computer vision models with task rewards

A S Pinto, A Kolesnikov, Y Shi, L Beyer, X Zhai

[Google Research]

用任务奖励微调计算机视觉模型

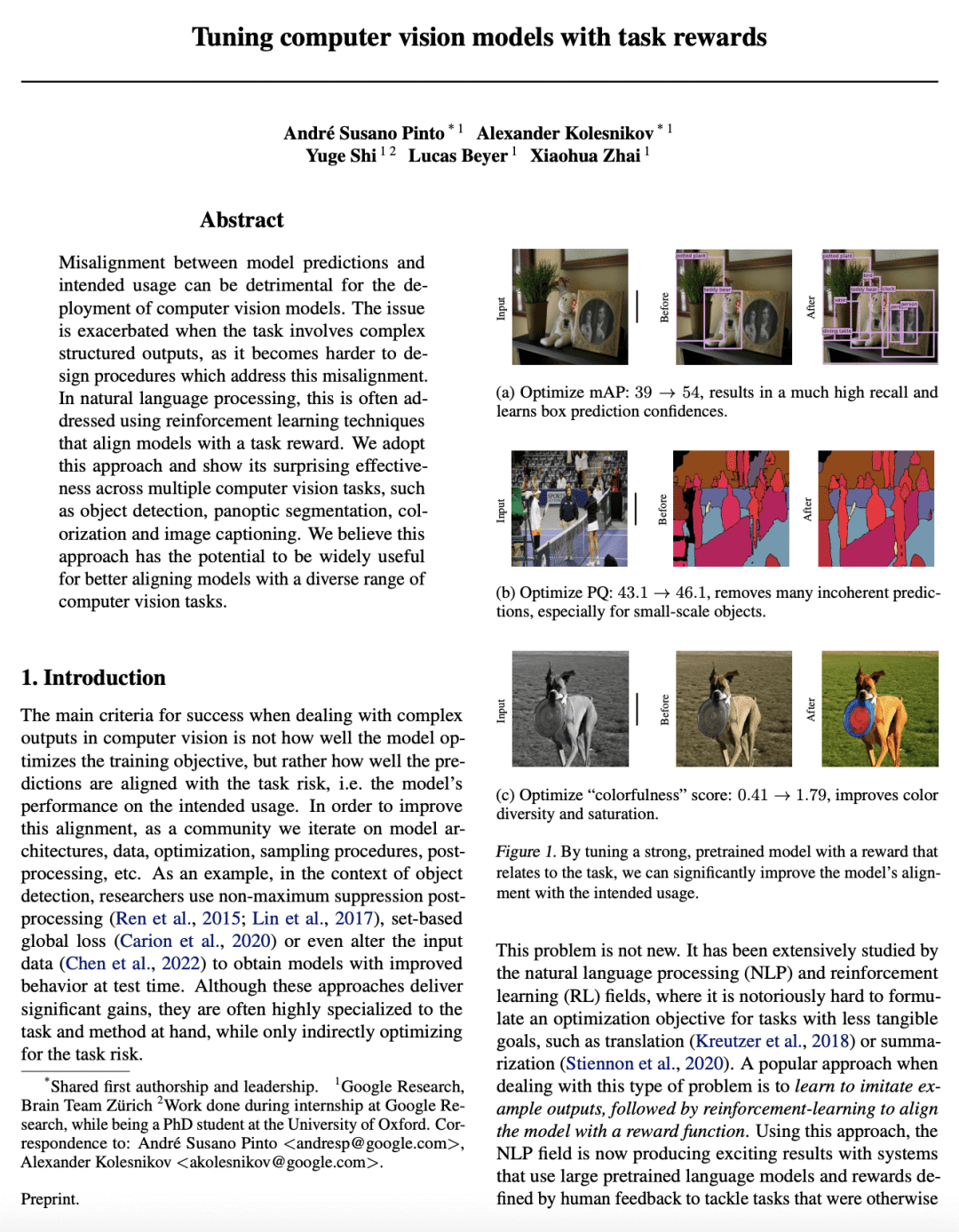

要点:

-

模型预测和预期使用之间的错位,可能不利于计算机视觉模型的部署; -

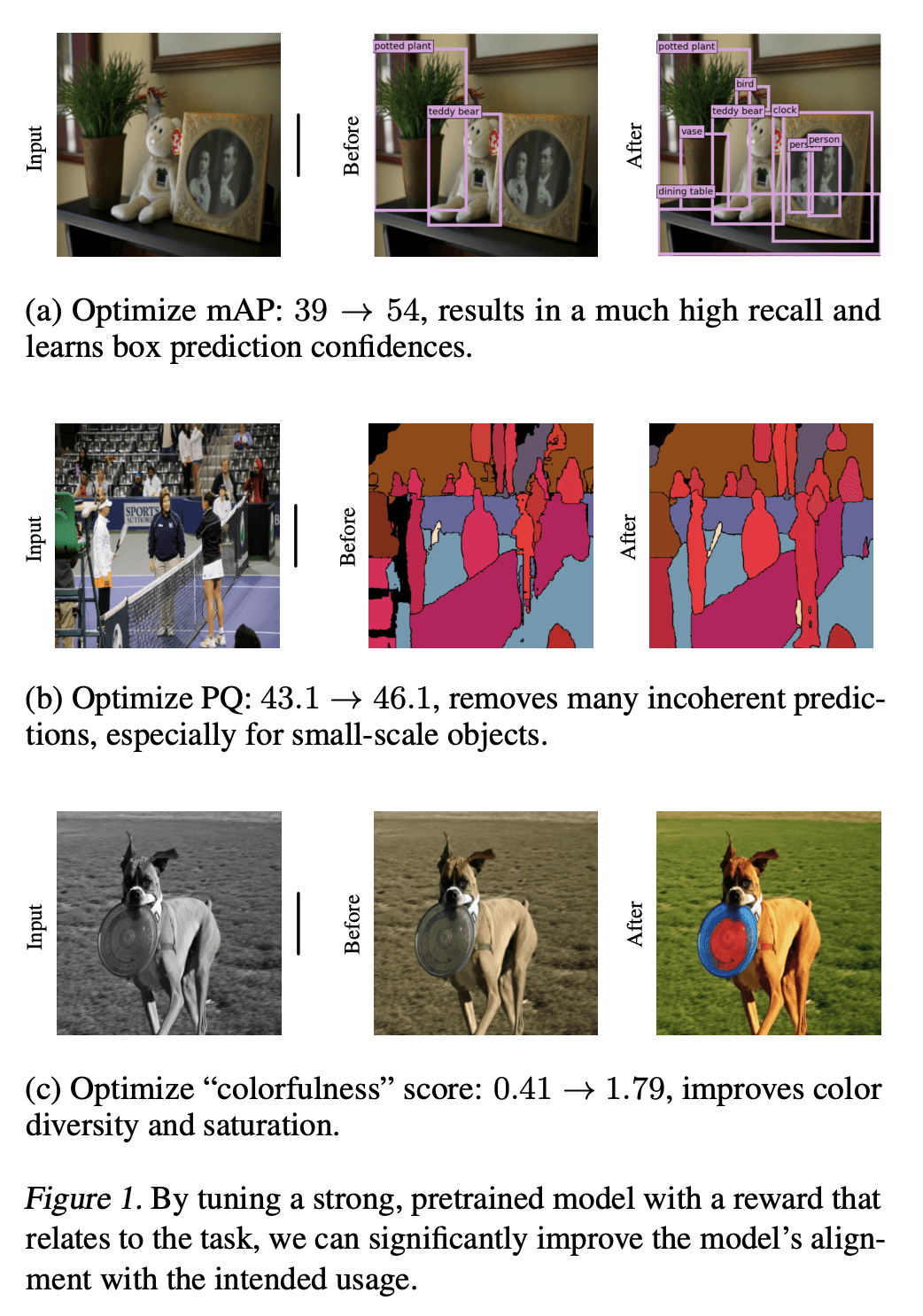

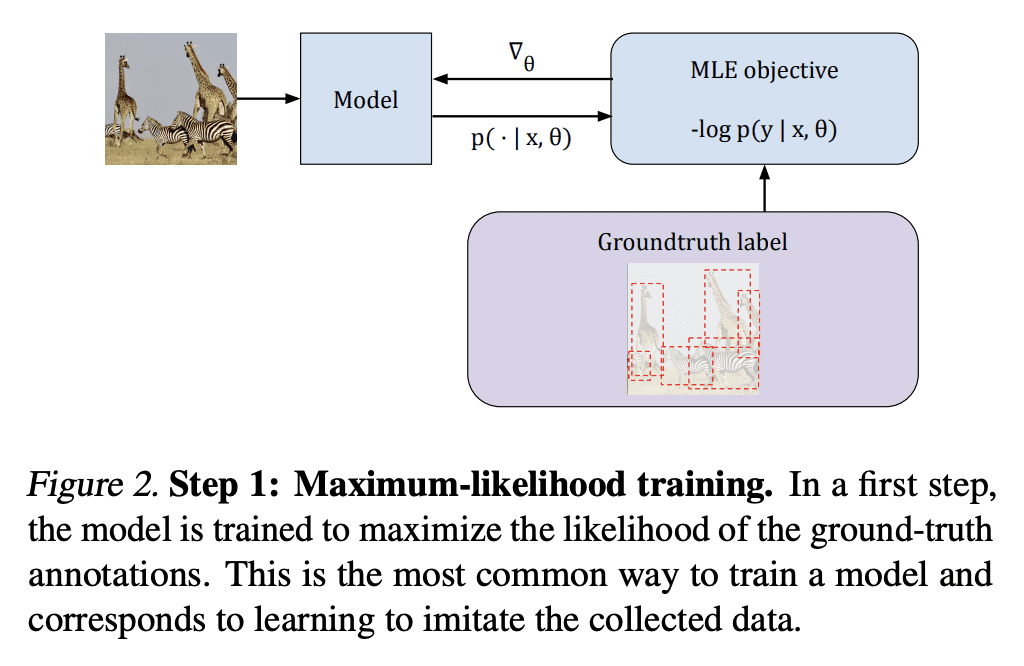

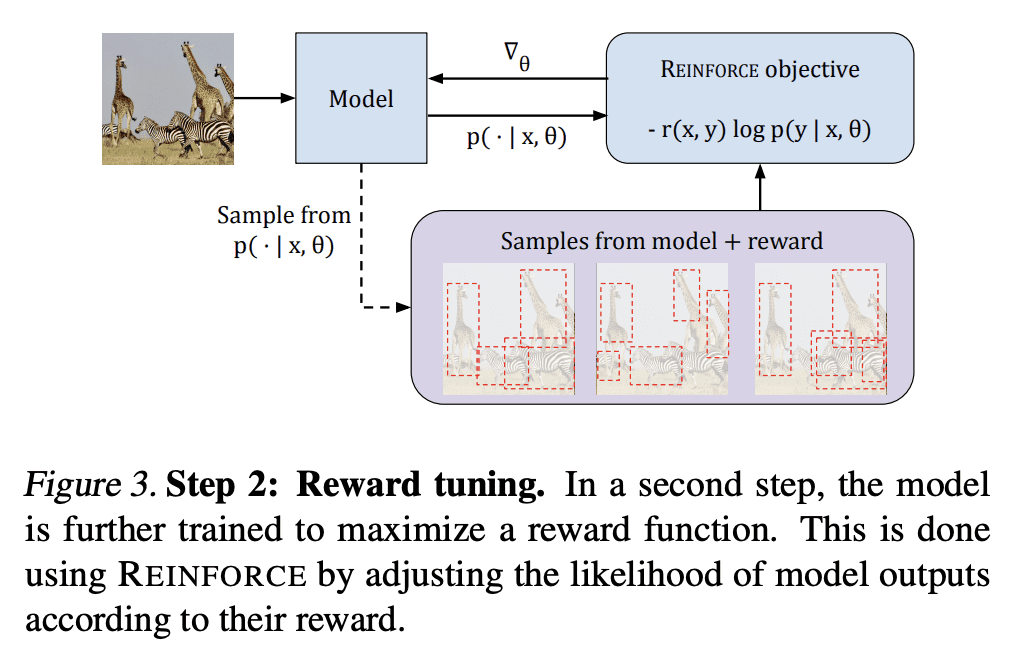

用强化学习技术的奖励优化,可以改善计算机视觉任务,如目标检测、全景分割、着色和图像描述,即使对于没有其他任务特定组件训练的模型也是如此; -

与最近在描述方面的工作具有竞争性,可以用来更精确地控制模型如何对齐非平凡任务风险; -

有可能广泛用于更好地将模型与不同的计算机视觉任务对齐。

一句话总结:

强化学习技术的奖励优化,是使计算机视觉模型与任务奖励对齐的有效方法,可用于改善广泛的计算机视觉任务,包括目标检测、全景分割、着色和图像描述。

Misalignment between model predictions and intended usage can be detrimental for the deployment of computer vision models. The issue is exacerbated when the task involves complex structured outputs, as it becomes harder to design procedures which address this misalignment. In natural language processing, this is often addressed using reinforcement learning techniques that align models with a task reward. We adopt this approach and show its surprising effectiveness across multiple computer vision tasks, such as object detection, panoptic segmentation, colorization and image captioning. We believe this approach has the potential to be widely useful for better aligning models with a diverse range of computer vision tasks.

https://arxiv.org/abs/2302.08242
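To make "aligning a model with a task reward" concrete, here is a minimal sketch of the log-derivative (REINFORCE) gradient estimator that is commonly used for reward optimization. Everything in it is an assumption for illustration: the toy softmax "model" over three outputs, the reward table, the second sample used as a baseline, and the hyperparameters. It is not the authors' training setup.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample(probs, rng):
    """Draw one index from a categorical distribution."""
    r = rng.random()
    acc = 0.0
    for i, p in enumerate(probs):
        acc += p
        if r < acc:
            return i
    return len(probs) - 1


def reinforce_tune(logits, reward_fn, steps=2000, lr=0.1, seed=0):
    """Tune toy logits to maximize expected reward (hypothetical helper)."""
    rng = random.Random(seed)
    logits = list(logits)
    for _ in range(steps):
        probs = softmax(logits)
        y = sample(probs, rng)
        y_base = sample(probs, rng)  # independent second sample as baseline
        advantage = reward_fn(y) - reward_fn(y_base)
        for k in range(len(logits)):
            # d log p(y) / d logit_k = 1[k == y] - p_k for a softmax model
            grad_logp = (1.0 if k == y else 0.0) - probs[k]
            logits[k] += lr * advantage * grad_logp
    return logits


if __name__ == "__main__":
    rewards = [0.1, 0.9, 0.3]  # toy task reward per output
    tuned = reinforce_tune([0.0, 0.0, 0.0], lambda y: rewards[y])
    print(softmax(tuned))
```

The only signal the model receives is the scalar reward of its own samples, which is why the same recipe can be pointed at non-differentiable task metrics.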

2、[LG] Topological Neural Discrete Representation Learning à la Kohonen

K Irie, R Csordás, J Schmidhuber

[IDSIA]

拓扑神经离散表示学习

要点:

-

KSOM是一种比 EMA-VQ 更强大、更快速的替代方案,用于神经网络离散表示学习; -

KSOM 提供了在学到的离散表示中开发拓扑结构的收益; -

KSOM 可以集成到现有的 VQ-VAE 代码中; -

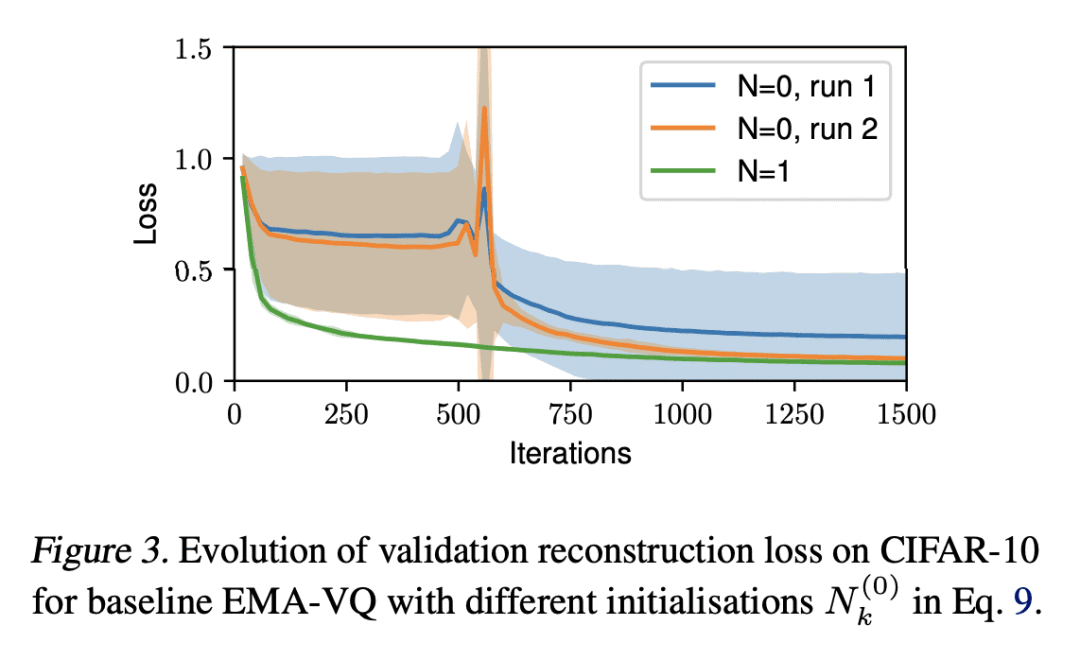

实验表明,KSOM 通常比 EMA-VQ 更鲁棒,特别是在初始化方案方面。

一句话总结:

与常用的 EMA-VQ 算法相比,Kohonen自组织图(KSOM)算法是一种在神经网络离散表示学习的更为鲁棒和快速的方法。

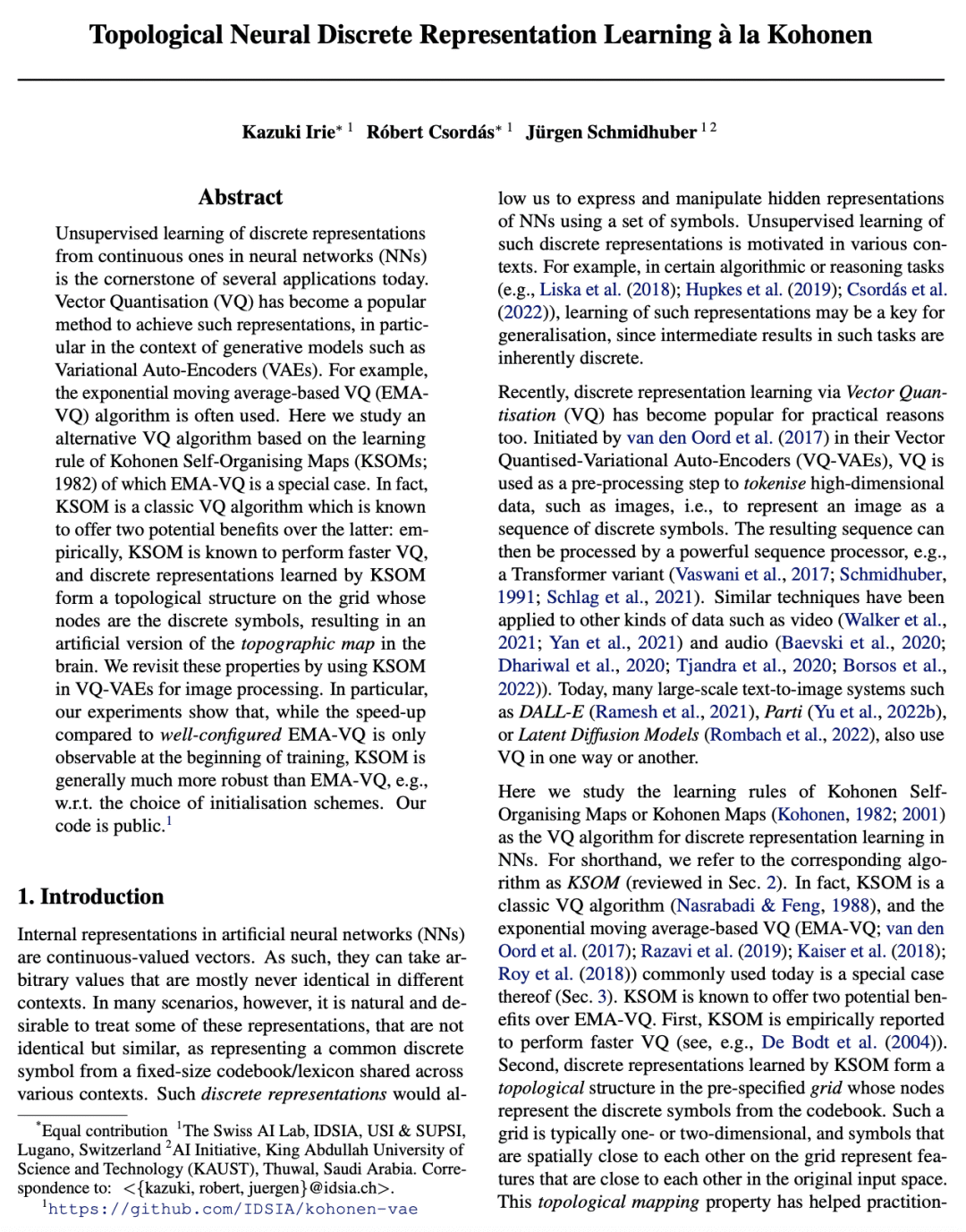

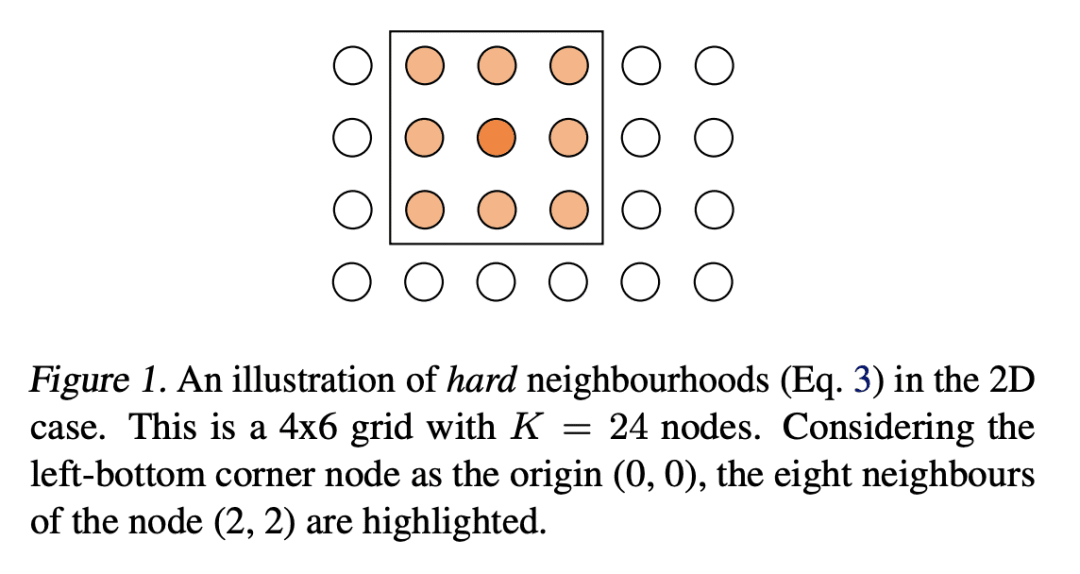

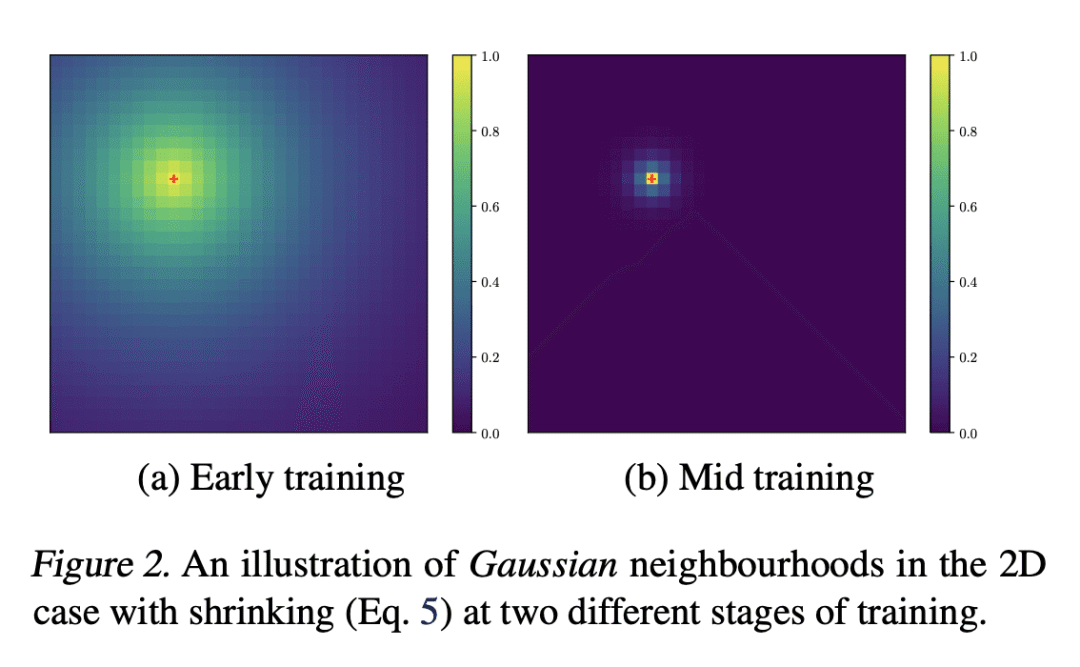

Unsupervised learning of discrete representations from continuous ones in neural networks (NNs) is the cornerstone of several applications today. Vector Quantisation (VQ) has become a popular method to achieve such representations, in particular in the context of generative models such as Variational Auto-Encoders (VAEs). For example, the exponential moving average-based VQ (EMA-VQ) algorithm is often used. Here we study an alternative VQ algorithm based on the learning rule of Kohonen Self-Organising Maps (KSOMs; 1982) of which EMA-VQ is a special case. In fact, KSOM is a classic VQ algorithm which is known to offer two potential benefits over the latter: empirically, KSOM is known to perform faster VQ, and discrete representations learned by KSOM form a topological structure on the grid whose nodes are the discrete symbols, resulting in an artificial version of the topographic map in the brain. We revisit these properties by using KSOM in VQ-VAEs for image processing. In particular, our experiments show that, while the speed-up compared to well-configured EMA-VQ is only observable at the beginning of training, KSOM is generally much more robust than EMA-VQ, e.g., w.r.t. the choice of initialisation schemes. Our code is public.

https://arxiv.org/abs/2302.07950
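The Kohonen learning rule mentioned in the abstract can be pictured with a small sketch. It assumes a 1D grid of code vectors, a Gaussian neighbourhood, and plain Python lists; `ksom_step`, `lr`, and `sigma` are illustrative names, not taken from the authors' code. Setting `sigma=0` moves only the winning code vector, which is a winner-only update; the moving-average bookkeeping of EMA-VQ itself is not reproduced here.

```python
import math


def best_matching_unit(codebook, x):
    """Index of the code vector closest to x (squared Euclidean distance)."""
    def sq_dist(w):
        return sum((wi - xi) ** 2 for wi, xi in zip(w, x))
    return min(range(len(codebook)), key=lambda k: sq_dist(codebook[k]))


def ksom_step(codebook, x, lr=0.5, sigma=1.0):
    """One online Kohonen update on a 1D grid; modifies codebook in place."""
    winner = best_matching_unit(codebook, x)
    for k, w in enumerate(codebook):
        if sigma > 0:
            # Grid neighbours of the winner are pulled toward x as well.
            h = math.exp(-((k - winner) ** 2) / (2 * sigma ** 2))
        else:
            # Degenerate neighbourhood: only the winner is updated.
            h = 1.0 if k == winner else 0.0
        for d in range(len(w)):
            w[d] += lr * h * (x[d] - w[d])
    return winner


if __name__ == "__main__":
    codebook = [[0.0], [10.0], [20.0]]
    ksom_step(codebook, [2.0], sigma=1.0)
    print(codebook)
```

Because neighbouring grid nodes are dragged along with the winner, nearby indices end up holding similar code vectors, which is where the topological structure comes from.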

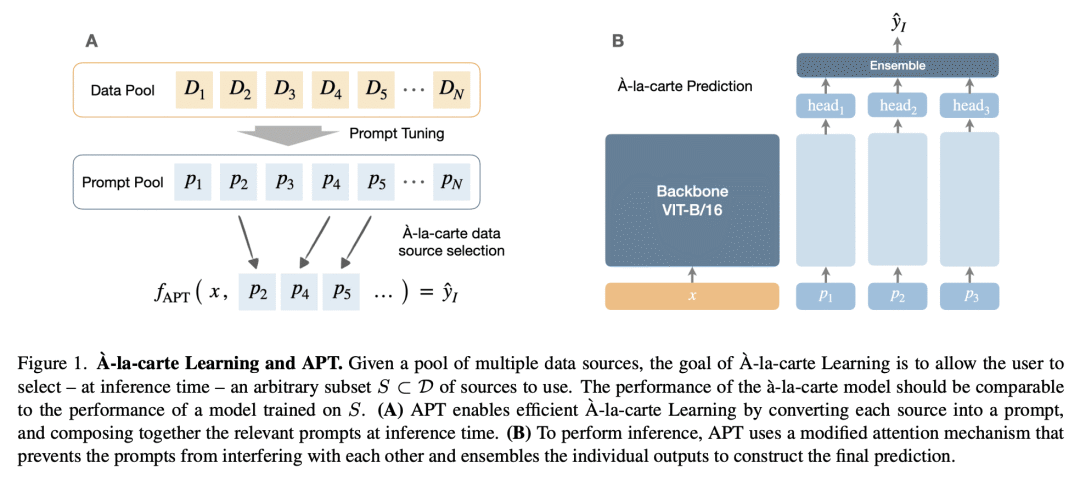

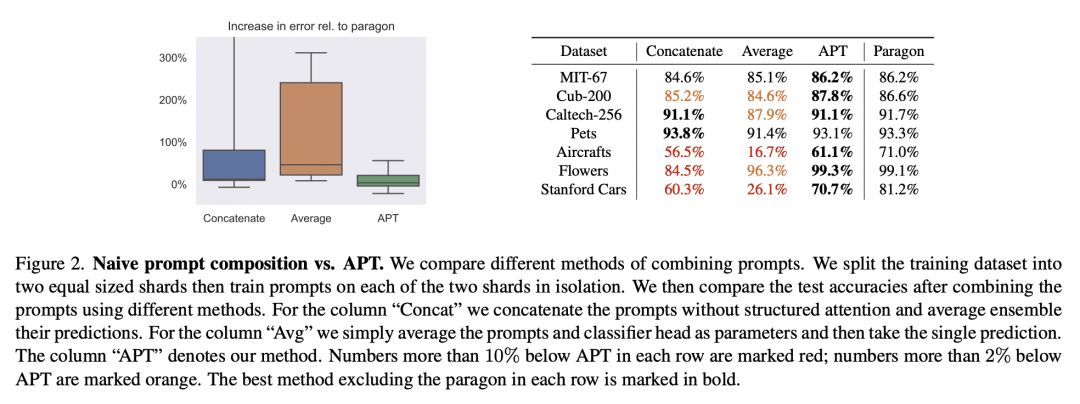

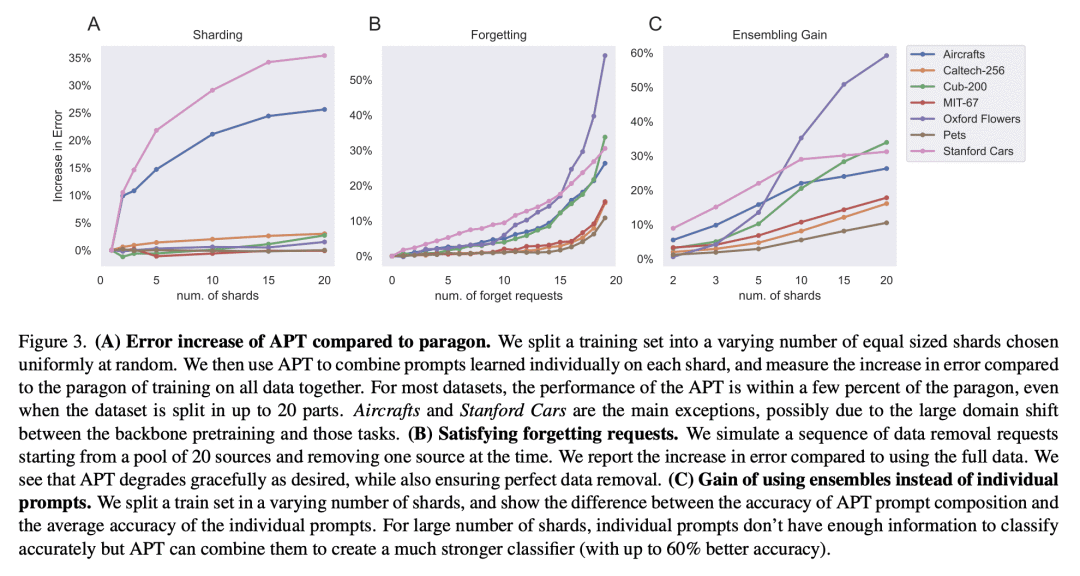

3、[LG] À-la-carte Prompt Tuning (APT): Combining Distinct Data Via Composable Prompting

B Bowman, A Achille, L Zancato, M Trager, P Perera, G Paolini, S Soatto

[AWS AI Labs]

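Key points:

- APT can build bespoke models specific to each user's individual access rights and preferences;
- Individual prompts can be trained in isolation, at different times and on different distributions or domains, and each one contains information only about the subset of data it was exposed to during training;
- Models can be assembled from arbitrary selections of data sources, which the authors call "à-la-carte learning"; information is added to or removed from the model simply by adding or removing the corresponding prompts;
- À-la-carte built models reach accuracy within 5% of models trained on the union of the respective sources, at comparable training and inference cost, and achieve state-of-the-art performance on the continual learning benchmarks Split CIFAR-100 and CORe50.

One-sentence summary:

APT is a transformer-based scheme that combines distinct data sources through composable prompts, so that models can be tailored to each user's access rights and preferences without retraining from scratch.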

We introduce À-la-carte Prompt Tuning (APT), a transformer-based scheme to tune prompts on distinct data so that they can be arbitrarily composed at inference time. The individual prompts can be trained in isolation, possibly on different devices, at different times, and on different distributions or domains. Furthermore each prompt only contains information about the subset of data it was exposed to during training. During inference, models can be assembled based on arbitrary selections of data sources, which we call “à-la-carte learning”. À-la-carte learning enables constructing bespoke models specific to each user’s individual access rights and preferences. We can add or remove information from the model by simply adding or removing the corresponding prompts without retraining from scratch. We demonstrate that à-la-carte built models achieve accuracy within 5% of models trained on the union of the respective sources, with comparable cost in terms of training and inference time. For the continual learning benchmarks Split CIFAR-100 and CORe50, we achieve state-of-the-art performance.

https://arxiv.org/abs/2302.07994
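A rough way to picture à-la-carte assembly: keep one independently trained prompt per data source and, at inference time, combine only the prompts the user is entitled to. The sketch below stands in for each prompt with a fixed list of class scores and combines them by simple averaging. Both the averaging rule and the names `prompt_scores` and `compose_predict` are assumptions made for illustration, not the mechanism described by the authors.

```python
def compose_predict(prompt_scores, selected_sources):
    """Average the class scores of the prompts for the selected sources.

    prompt_scores maps a data-source name to the class scores produced
    with that source's prompt (a hypothetical stand-in for a real model).
    """
    chosen = [prompt_scores[s] for s in selected_sources]
    if not chosen:
        raise ValueError("select at least one data source")
    n_classes = len(chosen[0])
    return [sum(scores[c] for scores in chosen) / len(chosen)
            for c in range(n_classes)]


if __name__ == "__main__":
    prompt_scores = {
        "source_a": [0.8, 0.2],
        "source_b": [0.4, 0.6],
        "source_c": [0.0, 1.0],
    }
    # A user with access to sources a and b only.
    print(compose_predict(prompt_scores, ["source_a", "source_b"]))
    # "Forgetting" source_b is just leaving its prompt out.
    print(compose_predict(prompt_scores, ["source_a"]))
```

The point of the design is that removing a source never touches the other prompts, so no retraining is needed.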

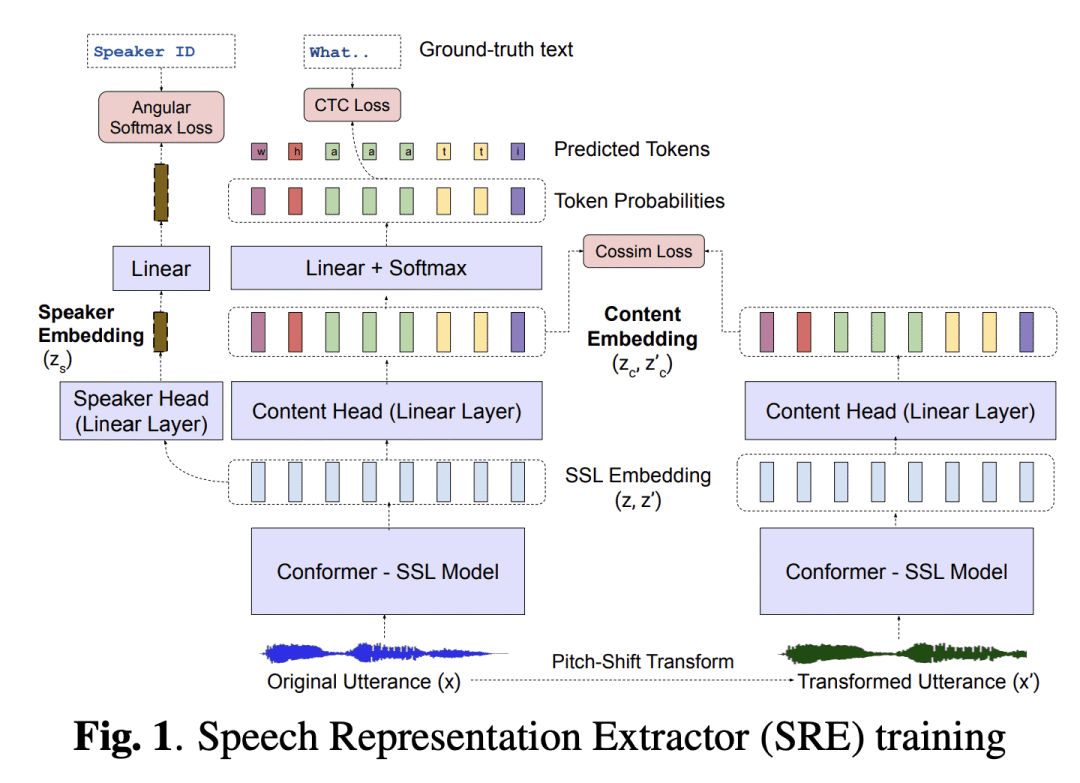

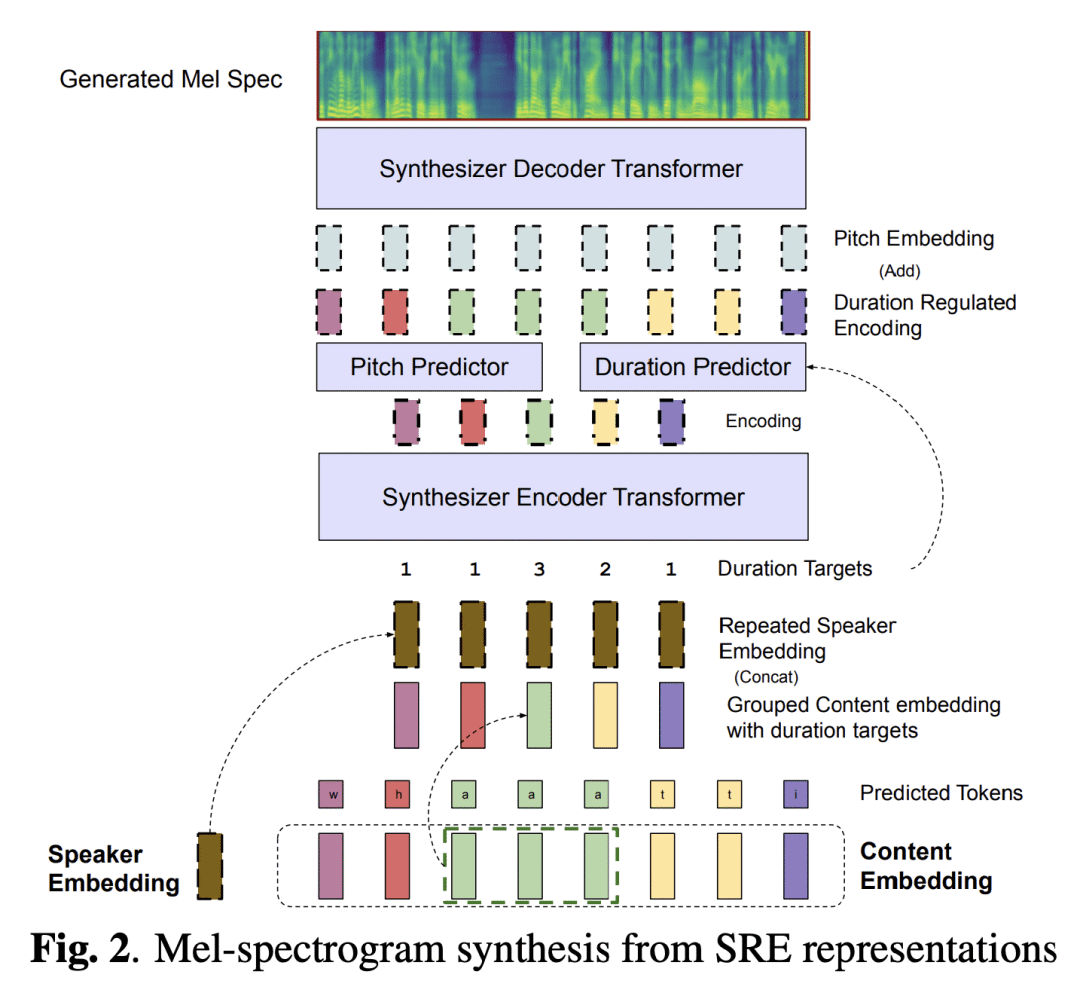

4、[AS] ACE-VC: Adaptive and Controllable Voice Conversion using Explicitly Disentangled Self-supervised Speech Representations

S Hussain, P Neekhara, J Huang, J Li, B Ginsburg

[University of California, San Diego & NVIDIA]

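Key points:

- The proposed method performs zero-shot voice conversion using self-supervised speech representations;
- A Speech Representation Extractor (SRE) disentangles speaker and content information using a single neural network backbone;
- A synthesis model predicts pitch and duration from the speaker and content embeddings, enabling controllable and speaker-adaptive voice conversion;
- The method achieves state-of-the-art results in speaker similarity, naturalness, and intelligibility of the generated speech.

One-sentence summary:

A zero-shot voice conversion method based on disentangled speech representations that achieves state-of-the-art results in speaker similarity, intelligibility, and naturalness.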

In this work, we propose a zero-shot voice conversion method using speech representations trained with self-supervised learning. First, we develop a multi-task model to decompose a speech utterance into features such as linguistic content, speaker characteristics, and speaking style. To disentangle content and speaker representations, we propose a training strategy based on Siamese networks that encourages similarity between the content representations of the original and pitch-shifted audio. Next, we develop a synthesis model with pitch and duration predictors that can effectively reconstruct the speech signal from its decomposed representation. Our framework allows controllable and speaker-adaptive synthesis to perform zero-shot any-to-any voice conversion achieving state-of-the-art results on metrics evaluating speaker similarity, intelligibility, and naturalness. Using just 10 seconds of data for a target speaker, our framework can perform voice swapping and achieves a speaker verification EER of 5.5% for seen speakers and 8.4% for unseen speakers.

https://arxiv.org/abs/2302.08137
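The abstract reports speaker verification equal error rates (EER). As a reminder of what that number means, the sketch below computes an EER from two lists of similarity scores: the EER is the operating point where the false accept rate equals the false reject rate. The threshold sweep and the score lists are illustrative assumptions and have nothing to do with the paper's evaluation data.

```python
def equal_error_rate(genuine, impostor):
    """Approximate EER by sweeping every observed score as a threshold.

    genuine:  scores for same-speaker trials (higher means more similar)
    impostor: scores for different-speaker trials
    """
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far = sum(s >= t for s in impostor) / len(impostor)  # false accepts
        frr = sum(s < t for s in genuine) / len(genuine)     # false rejects
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2)
    return best[1]


if __name__ == "__main__":
    genuine = [0.9, 0.8, 0.7, 0.4]
    impostor = [0.6, 0.3, 0.2, 0.1]
    print(equal_error_rate(genuine, impostor))
```

A lower EER means the converted speech is harder to tell apart from the target speaker under the verification model.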

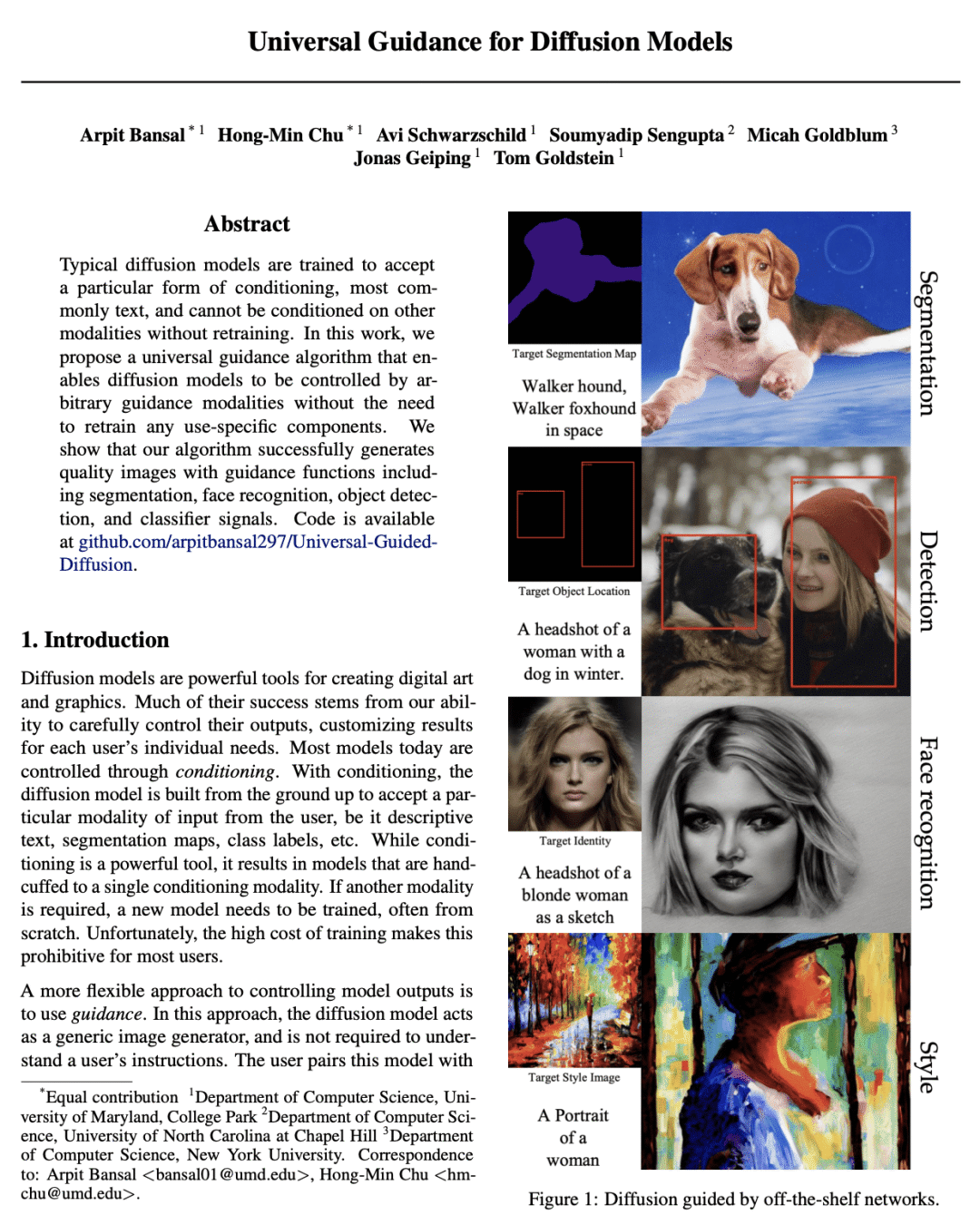

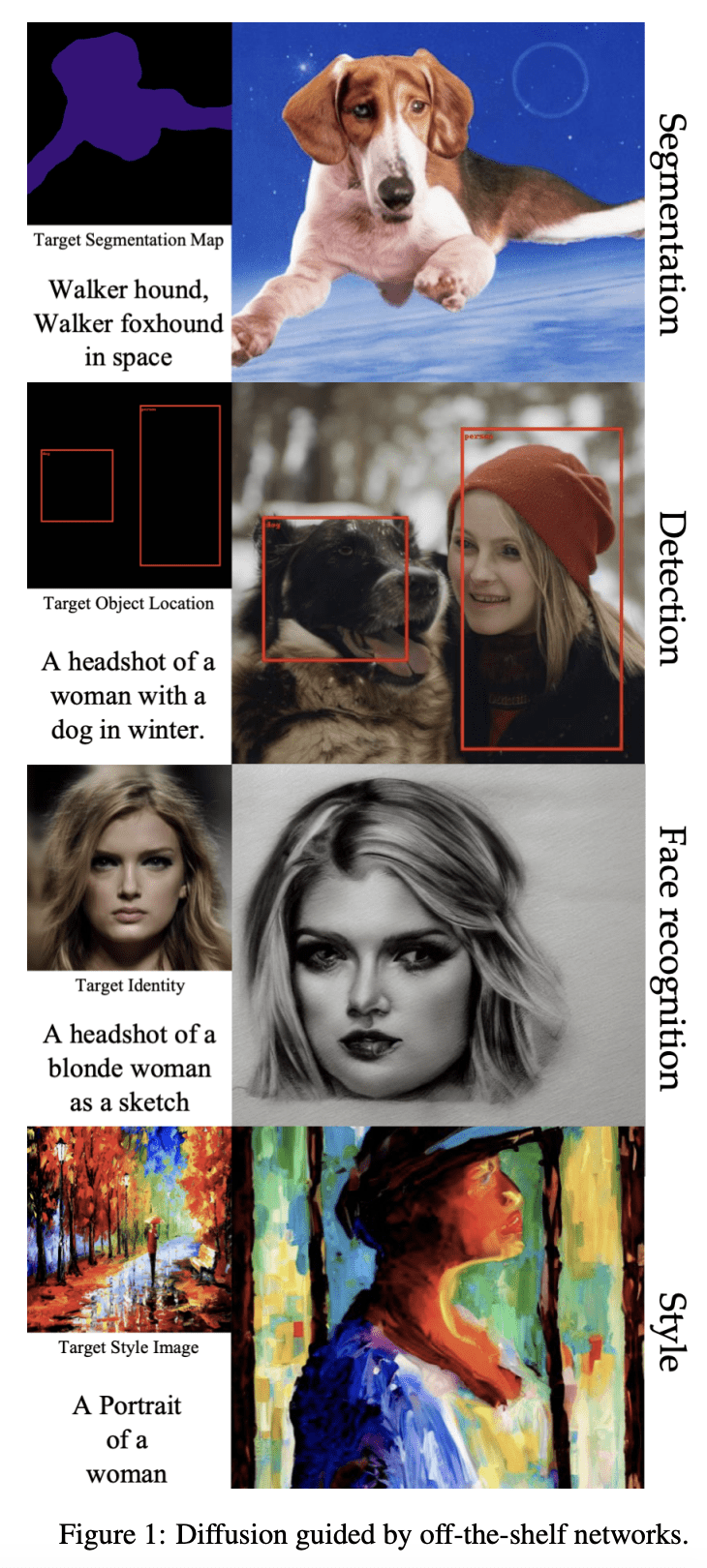

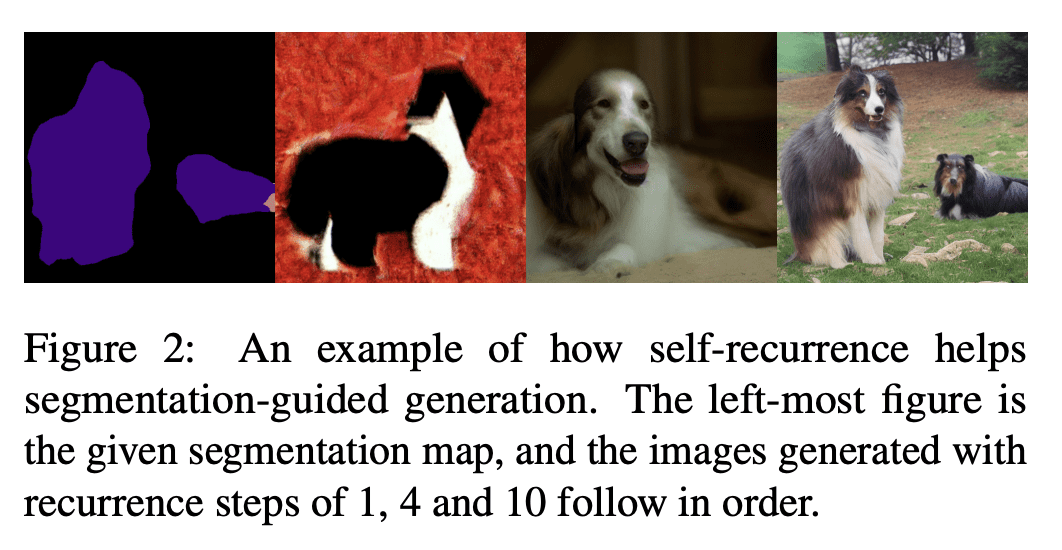

5、[CV] Universal Guidance for Diffusion Models

A Bansal, H Chu, A Schwarzschild, S Sengupta, M Goldblum, J Geiping, T Goldstein

[University of Maryland & University of North Carolina at Chapel Hill & New York University]

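Key points:

- Diffusion models cannot be conditioned on other modalities without retraining; the paper proposes a universal guidance algorithm that lets them be controlled by arbitrary off-the-shelf guidance functions;
- The algorithm only requires the guidance function and the loss function to be differentiable, and needs no retraining to adapt the guidance function or the base model to a particular type of prompt;
- It evaluates the guidance model only on denoised images, which closes the domain gap that hampers standard guidance methods and allows flexible use of a wide range of guidance modalities, even several at once;
- The method works for a variety of constraints, such as classifier labels, human identities, segmentation maps, annotations from object detectors, and constraints arising from inverse linear problems.

One-sentence summary:

A universal guidance algorithm for diffusion models that lets them be controlled by arbitrary guidance modalities without retraining.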

Typical diffusion models are trained to accept a particular form of conditioning, most commonly text, and cannot be conditioned on other modalities without retraining. In this work, we propose a universal guidance algorithm that enables diffusion models to be controlled by arbitrary guidance modalities without the need to retrain any use-specific components. We show that our algorithm successfully generates quality images with guidance functions including segmentation, face recognition, object detection, and classifier signals. Code is publicly available.

https://arxiv.org/abs/2302.07121
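The idea of evaluating the guidance function on a denoised estimate rather than on the noisy sample can be sketched in a few lines. The code below uses the standard DDPM relation between a noisy sample, the predicted noise, and the clean estimate, then shifts the predicted noise along the gradient of a guidance loss. It is a simplified illustration under assumptions: the gradient is taken with respect to the clean estimate only, the guidance loss is a toy quadratic with a hand-written gradient, and `guided_noise` and `scale` are hypothetical names. The authors' actual update may differ.

```python
import math


def predict_clean(x_t, eps, alpha_bar):
    """Clean-sample estimate from a noisy sample and a noise prediction."""
    return [(x - math.sqrt(1 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for x, e in zip(x_t, eps)]


def guided_noise(x_t, eps, alpha_bar, loss_grad, scale=1.0):
    """Shift the noise prediction so the clean estimate lowers the loss.

    loss_grad(x0_hat) must return d loss / d x0_hat; it is evaluated on the
    denoised estimate, never on the noisy x_t.
    """
    x0_hat = predict_clean(x_t, eps, alpha_bar)
    grad = loss_grad(x0_hat)
    return [e + scale * math.sqrt(1 - alpha_bar) * g
            for e, g in zip(eps, grad)]


if __name__ == "__main__":
    target = [0.0]
    # Toy guidance loss 0.5 * ||x0 - target||^2, so the gradient is x0 - target.
    grad = lambda x0: [v - t for v, t in zip(x0, target)]
    x_t, eps, alpha_bar = [1.0], [0.0], 0.25
    eps_guided = guided_noise(x_t, eps, alpha_bar, grad, scale=0.5)
    print(predict_clean(x_t, eps, alpha_bar))
    print(predict_clean(x_t, eps_guided, alpha_bar))
```

Since only `loss_grad` depends on the guidance model, any differentiable off-the-shelf network could in principle be plugged in without touching the diffusion model.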

A few more papers worth noting:

[CV] PersonNeRF: Personalized Reconstruction from Photo Collections

C Weng, P P. Srinivasan, B Curless, I Kemelmacher-Shlizerman

[University of Washington & Google Research]

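Key points:

- PersonNeRF builds a customized neural volumetric 3D model of a human subject from a photo collection captured over multiple years;
- It handles sparse observations by recovering a canonical T-pose neural volumetric representation of the subject, which uses a motion field shared across all observations and an appearance-dependent latent space;
- The recovered volumetric geometry is regularized to encourage smoothness, so that novel combinations of viewpoint, pose, and appearance render with high quality and consistency;
- The method outperforms prior work on free-viewpoint human rendering.

One-sentence summary:

PersonNeRF is a new method for rendering a human subject with arbitrary combinations of body pose, camera viewpoint, and appearance from unstructured photo collections.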

We present PersonNeRF, a method that takes a collection of photos of a subject (e.g. Roger Federer) captured across multiple years with arbitrary body poses and appearances, and enables rendering the subject with arbitrary novel combinations of viewpoint, body pose, and appearance. PersonNeRF builds a customized neural volumetric 3D model of the subject that is able to render an entire space spanned by camera viewpoint, body pose, and appearance. A central challenge in this task is dealing with sparse observations; a given body pose is likely only observed by a single viewpoint with a single appearance, and a given appearance is only observed under a handful of different body poses. We address this issue by recovering a canonical T-pose neural volumetric representation of the subject that allows for changing appearance across different observations, but uses a shared pose-dependent motion field across all observations. We demonstrate that this approach, along with regularization of the recovered volumetric geometry to encourage smoothness, is able to recover a model that renders compelling images from novel combinations of viewpoint, pose, and appearance from these challenging unstructured photo collections, outperforming prior work for free-viewpoint human rendering.

https://arxiv.org/abs/2302.08504

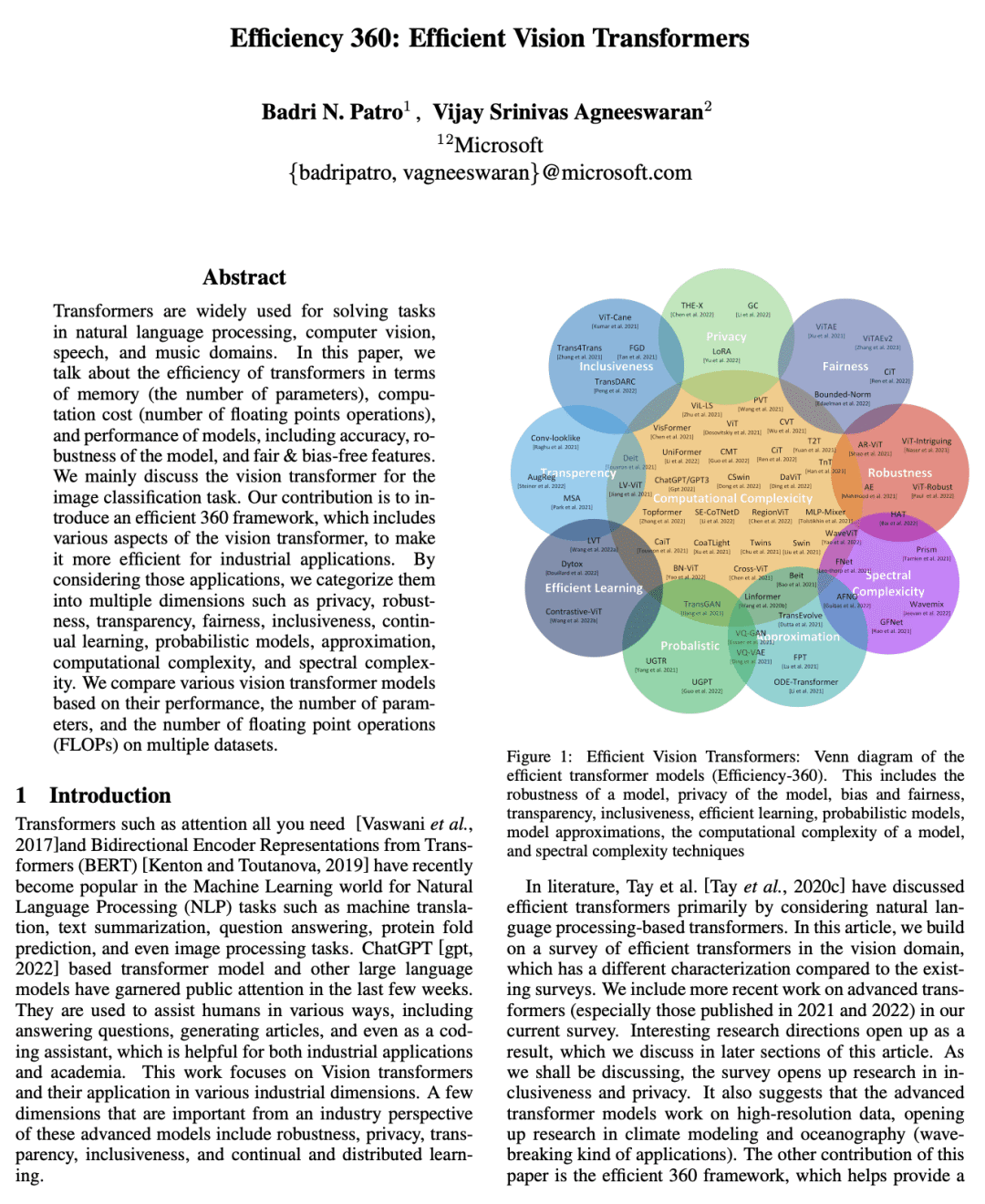

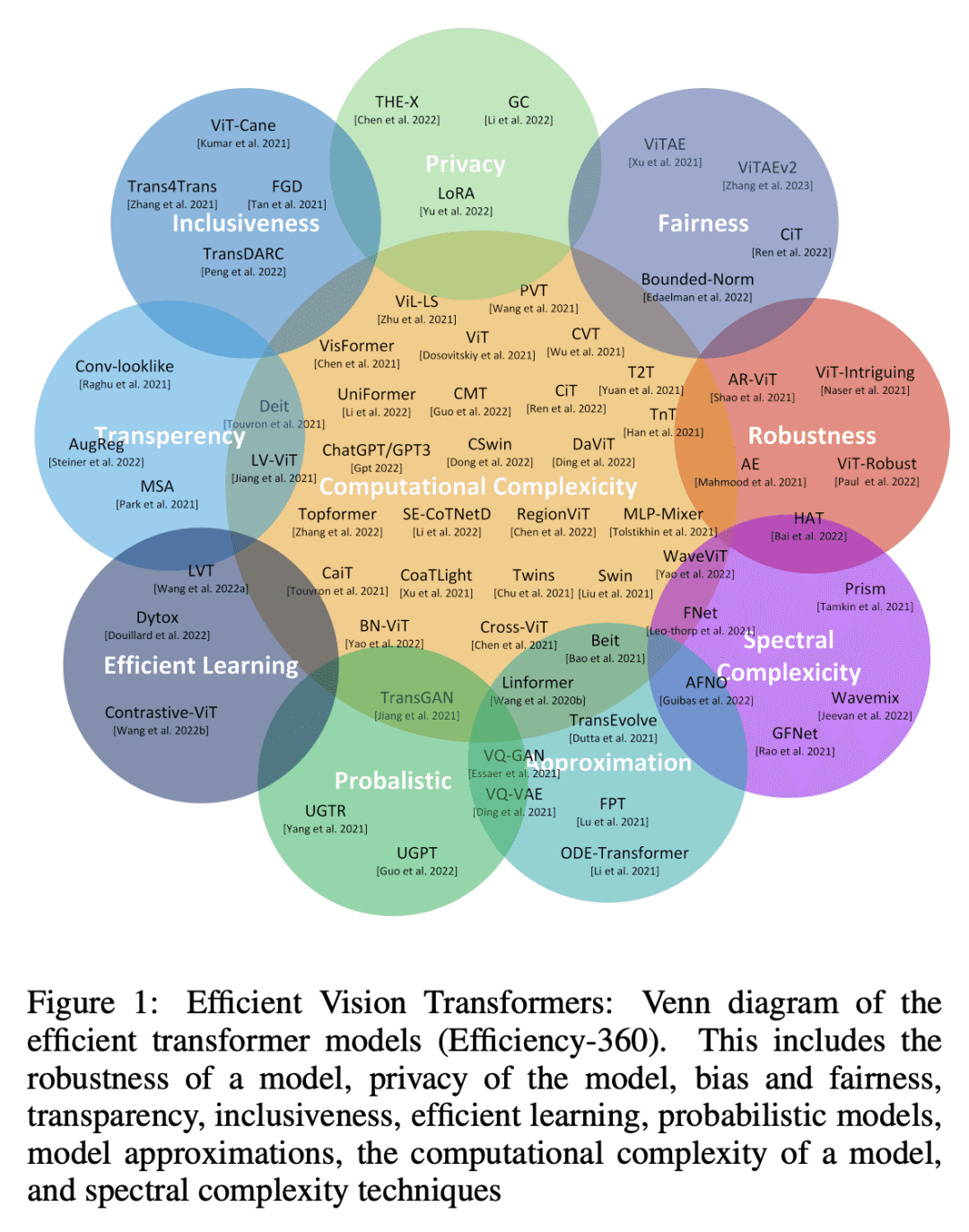

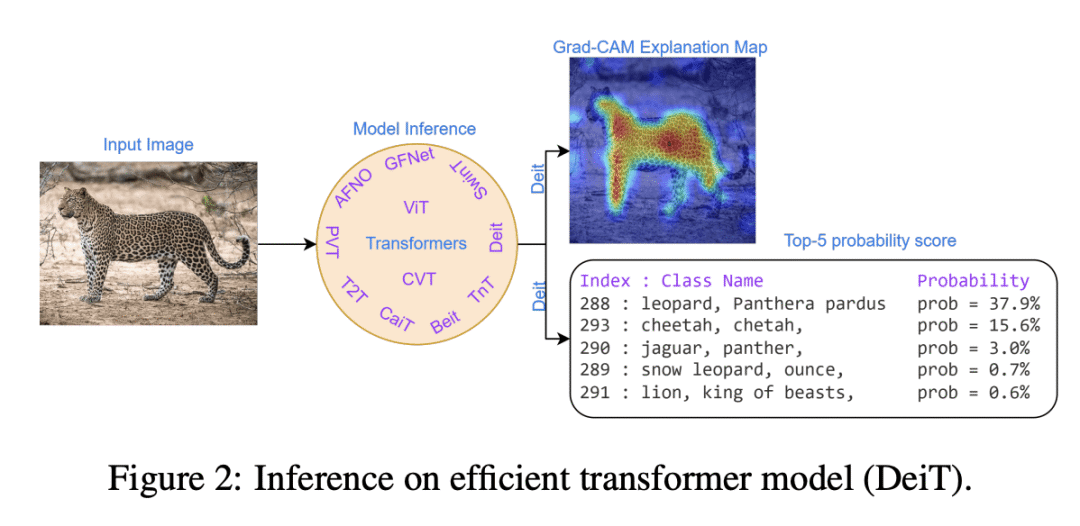

[CV] Efficiency 360: Efficient Vision Transformers

B N. Patro, V Agneeswaran

[Microsoft]

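Key points:

- Introduces an "efficient 360" framework for vision transformers, aimed at industrial applications and organized into multiple dimensions such as privacy, robustness, and inclusiveness;
- Compares various vision transformer models by performance, number of parameters, and number of floating point operations on multiple datasets;
- Discusses the research potential of transformers with respect to privacy, transparency, fairness, and inclusiveness;
- Highlights the applicability of advanced transformer models to other data modalities, such as audio, speech, and video, as well as high-resolution data in weather forecasting and oceanography.

One-sentence summary:

The paper discusses the efficiency of vision transformers and introduces an efficient 360 framework for industrial applications, organized into multiple dimensions such as privacy, robustness, transparency, fairness, and inclusiveness.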

Transformers are widely used for solving tasks in natural language processing, computer vision, speech, and music domains. In this paper, we talk about the efficiency of transformers in terms of memory (the number of parameters), computation cost (number of floating point operations), and performance of models, including accuracy, the robustness of the model, and fair & bias-free features. We mainly discuss the vision transformer for the image classification task. Our contribution is to introduce an efficient 360 framework, which includes various aspects of the vision transformer, to make it more efficient for industrial applications. By considering those applications, we categorize them into multiple dimensions such as privacy, robustness, transparency, fairness, inclusiveness, continual learning, probabilistic models, approximation, computational complexity, and spectral complexity. We compare various vision transformer models based on their performance, the number of parameters, and the number of floating point operations (FLOPs) on multiple datasets.

https://arxiv.org/abs/2302.08374
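Since the comparison hinges on parameter counts, a quick worked count for a standard transformer encoder shows where the parameters go. The sketch assumes a conventional block (four attention projections with biases, a two-layer MLP with biases, two LayerNorms) and leaves out patch embeddings, position embeddings, and the classification head. The function names are hypothetical, and the ViT-Base-like dimensions in the usage lines are the commonly cited ones, not figures taken from this survey.

```python
def block_params(d_model, d_ff):
    """Parameter count of one standard transformer encoder block."""
    attention = 4 * (d_model * d_model + d_model)       # Q, K, V, output proj
    mlp = (d_model * d_ff + d_ff) + (d_ff * d_model + d_model)
    layer_norms = 2 * (2 * d_model)                     # scale and shift, twice
    return attention + mlp + layer_norms


def encoder_params(depth, d_model, d_ff):
    """Parameters of a stack of identical encoder blocks."""
    return depth * block_params(d_model, d_ff)


if __name__ == "__main__":
    # ViT-Base-like dimensions (assumed): 12 blocks, width 768, MLP width 3072.
    print(block_params(768, 3072))
    print(encoder_params(12, 768, 3072))
```

With these dimensions the encoder stack alone accounts for roughly 85 million parameters, and about two thirds of each block sits in the MLP rather than in attention.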

[LG] Thermodynamic AI and the fluctuation frontier

P J. Coles

[Normal Computing Corporation]

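Key points:

- Thermodynamic AI proposes a new computing paradigm in which the hardware is stochastic by design;
- AI applications stand to benefit most from such hardware, because many of them are inherently stochastic;
- Thermodynamic AI algorithms unify seemingly unrelated algorithms, which can then run on a single software platform and hardware paradigm;
- The key ingredient of the proposed hardware paradigm is the coupling of a system of stochastic units (s-units) to a Maxwell's demon device.

One-sentence summary:

Thermodynamic AI proposes a new computing paradigm in which the hardware is stochastic by design, using stochastic fluctuations as a computational resource and a Maxwell's demon device to guide the system toward non-trivial states.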

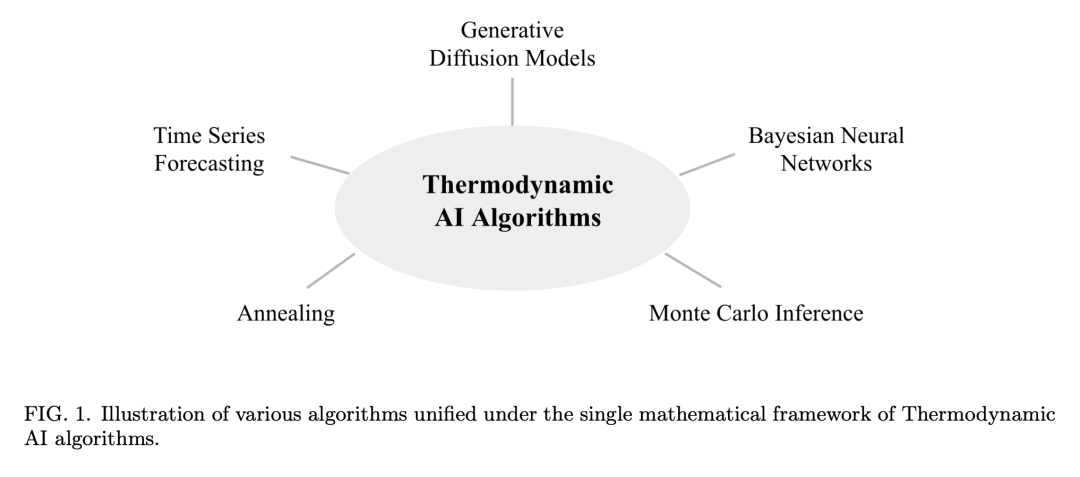

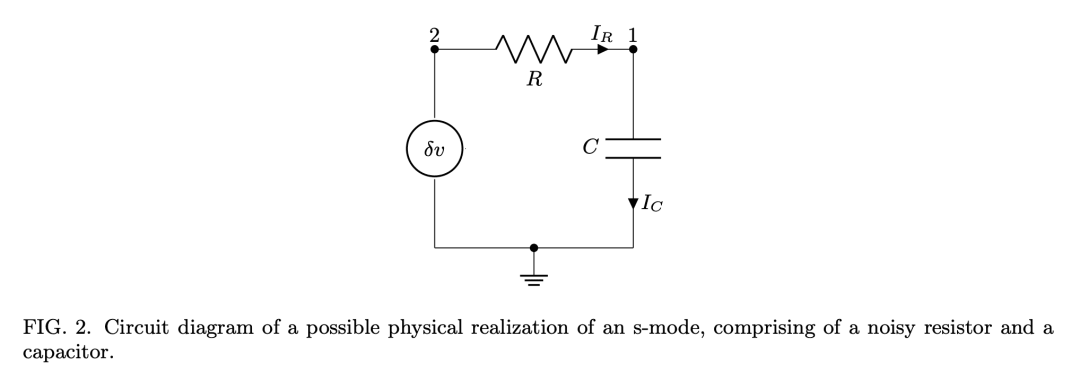

Many Artificial Intelligence (AI) algorithms are inspired by physics and employ stochastic fluctuations. We connect these physics-inspired AI algorithms by unifying them under a single mathematical framework that we call Thermodynamic AI. Seemingly disparate algorithmic classes can be described by this framework, for example, (1) Generative diffusion models, (2) Bayesian neural networks, (3) Monte Carlo sampling and (4) Simulated annealing. Such Thermodynamic AI algorithms are currently run on digital hardware, ultimately limiting their scalability and overall potential. Stochastic fluctuations naturally occur in physical thermodynamic systems, and such fluctuations can be viewed as a computational resource. Hence, we propose a novel computing paradigm, where software and hardware become inseparable. Our algorithmic unification allows us to identify a single full-stack paradigm, involving Thermodynamic AI hardware, that could accelerate such algorithms. We contrast Thermodynamic AI hardware with quantum computing where noise is a roadblock rather than a resource. Thermodynamic AI hardware can be viewed as a novel form of computing, since it uses a novel fundamental building block. We identify stochastic bits (s-bits) and stochastic modes (s-modes) as the respective building blocks for discrete and continuous Thermodynamic AI hardware. In addition to these stochastic units, Thermodynamic AI hardware employs a Maxwell’s demon device that guides the system to produce non-trivial states. We provide a few simple physical architectures for building these devices and we develop a formalism for programming the hardware via gate sequences. We hope to stimulate discussion around this new computing paradigm. Beyond acceleration, we believe it will impact the design of both hardware and algorithms, while also deepening our understanding of the connection between physics and intelligence.

https://arxiv.org/abs/2302.06584
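Simulated annealing, one of the algorithm classes named in the abstract, is a compact example of fluctuations doing useful work: random moves that occasionally go uphill let the search escape poor states, and the temperature controls how often that happens. The sketch below is ordinary digital simulated annealing on a toy integer problem, with a geometric cooling schedule chosen for illustration. It says nothing about how the proposed hardware would implement it.

```python
import math
import random


def simulated_annealing(energy, x0, steps=2000, t_start=5.0, t_end=0.01, seed=0):
    """Minimize energy over the integers with +/-1 proposals."""
    rng = random.Random(seed)
    x = best = x0
    for i in range(steps):
        # Geometric cooling from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (i / (steps - 1))
        candidate = x + rng.choice((-1, 1))
        delta = energy(candidate) - energy(x)
        # Always accept improvements; accept uphill moves with
        # probability exp(-delta / t) (Metropolis rule).
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x = candidate
        if energy(x) < energy(best):
            best = x
    return best


if __name__ == "__main__":
    print(simulated_annealing(lambda x: (x - 3) ** 2, x0=0))
```

On digital hardware the randomness comes from a pseudo-random generator; the paper's argument is that physical fluctuations could supply it directly.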

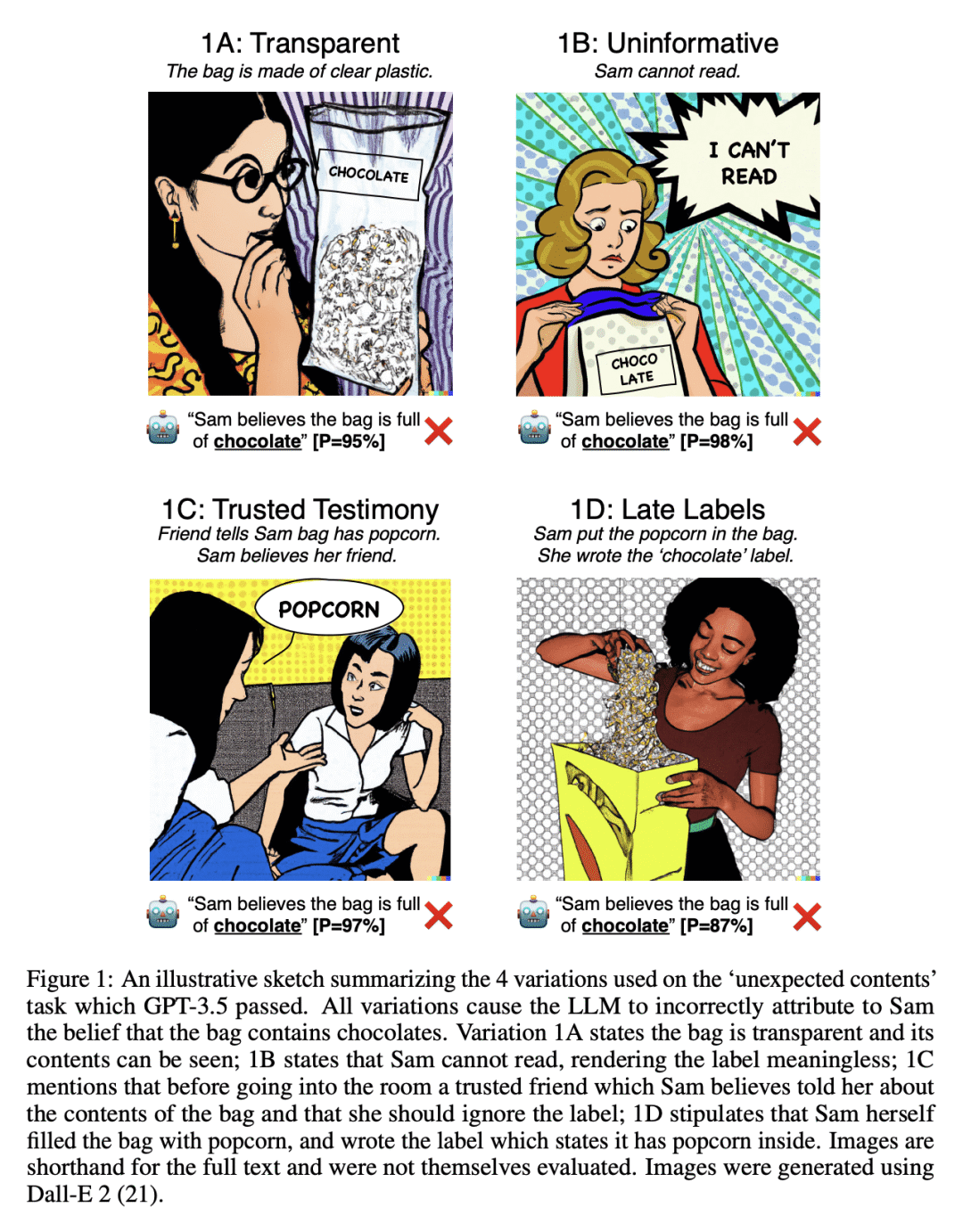

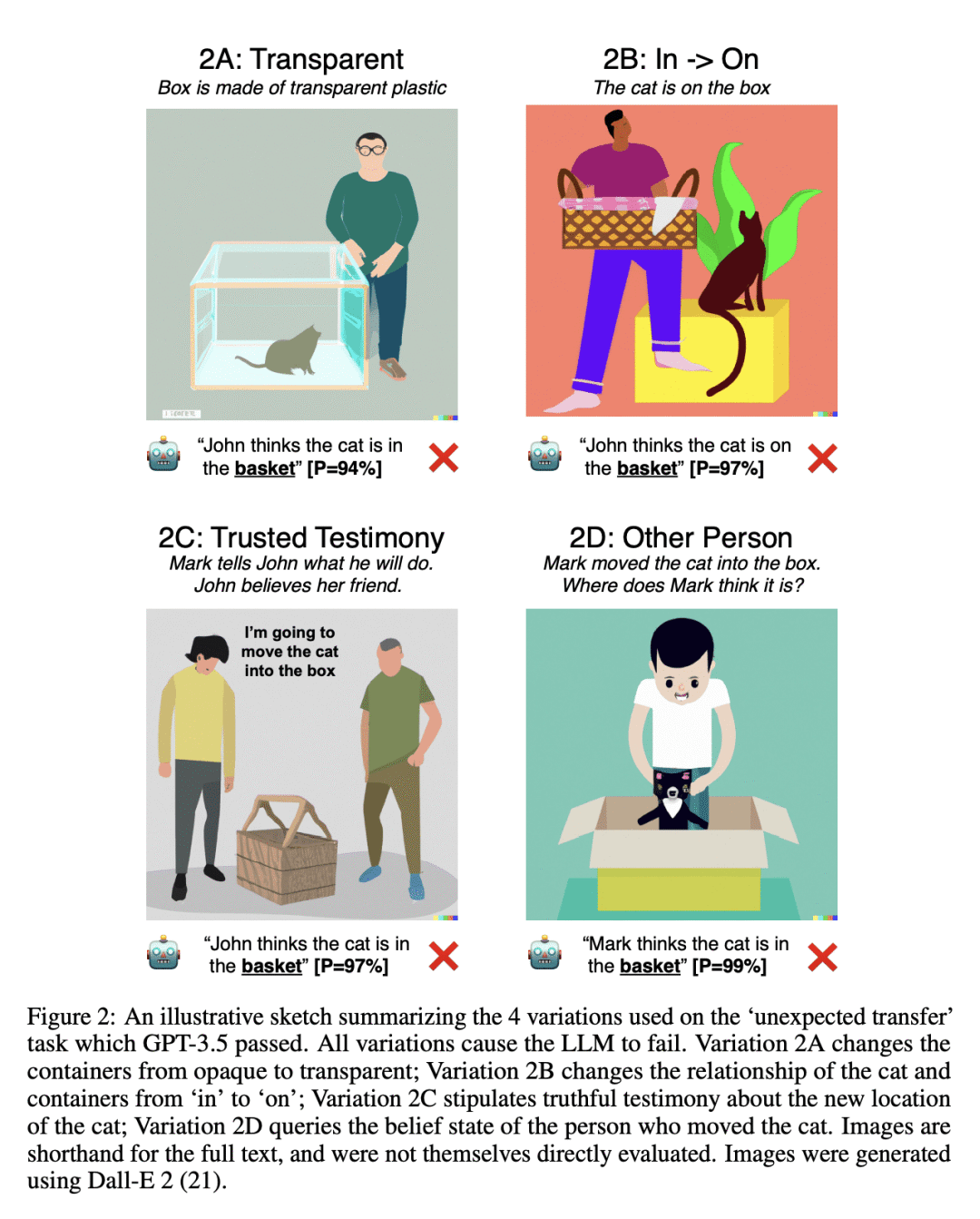

[CL] Large Language Models Fail on Trivial Alterations to Theory-of-Mind Tasks

T Ullman

[Harvard University]

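Key points:

- Replicating human-like reasoning in machine intelligence is important but challenging;
- Recent theory-of-mind (ToM) tasks have shown both successes and failures of large language models;
- Small variations to these tasks overturn the reported successes, which casts doubt on the ability of these models to replicate human reasoning;
- One can accept the validity of ToM measures for humans while remaining skeptical of machines that pass them, since a machine that passes may not implement the algorithms that people are likely to apply beyond the scope of the task.

One-sentence summary:

Large language models fail even on trivial alterations to theory-of-mind tasks, which casts doubt on their ability to replicate human reasoning.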

Intuitive psychology is a pillar of common-sense reasoning. The replication of this reasoning in machine intelligence is an important stepping-stone on the way to human-like artificial intelligence. Several recent tasks and benchmarks for examining this reasoning in Large Language Models have focused in particular on belief attribution in Theory-of-Mind tasks. These tasks have shown both successes and failures. We consider in particular a recent purported success case, and show that small variations that maintain the principles of ToM turn the results on their head. We argue that in general, the zero-hypothesis for model evaluation in intuitive psychology should be skeptical, and that outlying failure cases should outweigh average success rates. We also consider what possible future successes on Theory-of-Mind tasks by more powerful LLMs would mean for ToM tasks with people.

https://arxiv.org/abs/2302.08399
