1. [LG] G-Signatures: Global Graph Propagation With Randomized Signatures

2. [LG] Transformers are Sample-Efficient World Models

3. [LG] Consistent Diffusion Models: Mitigating Sampling Drift by Learning to be Consistent

4. [CL] Massively Multilingual Shallow Fusion with Large Language Models

5. [CL] Pretraining Language Models with Human Preferences

[LG] Score-based Diffusion Models in Function Space

[CV] PRedItOR: Text Guided Image Editing with Diffusion Prior

[LG] MiDi: Mixed Graph and 3D Denoising Diffusion for Molecule Generation

[LG] Post-Episodic Reinforcement Learning Inference

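Summary: global graph propagation with randomized signatures, Transformers as sample-efficient world models, consistent diffusion models, massively multilingual shallow fusion with large language models, pretraining language models with human preferences, score-based diffusion models in function space, text-guided image editing with a diffusion prior, mixed graph and 3D denoising diffusion for molecule generation, and post-episodic reinforcement learning inference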

1. [LG] G-Signatures: Global Graph Propagation With Randomized Signatures

B Schäfl, L Gruber, J Brandstetter, S Hochreiter

[Johannes Kepler University Linz & Microsoft Research]

G-Signatures: 基于随机签名的全局图传播

要点:

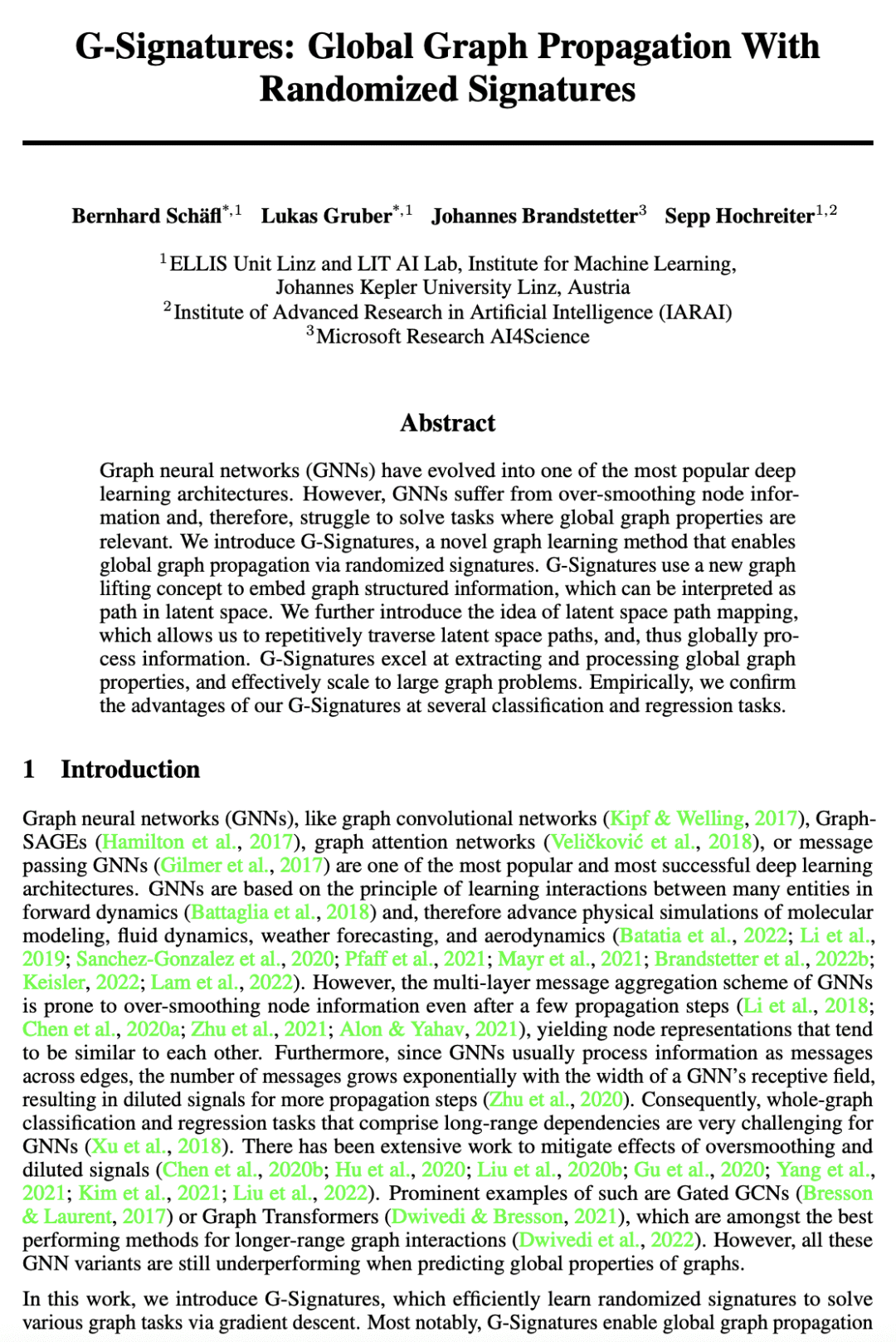

-

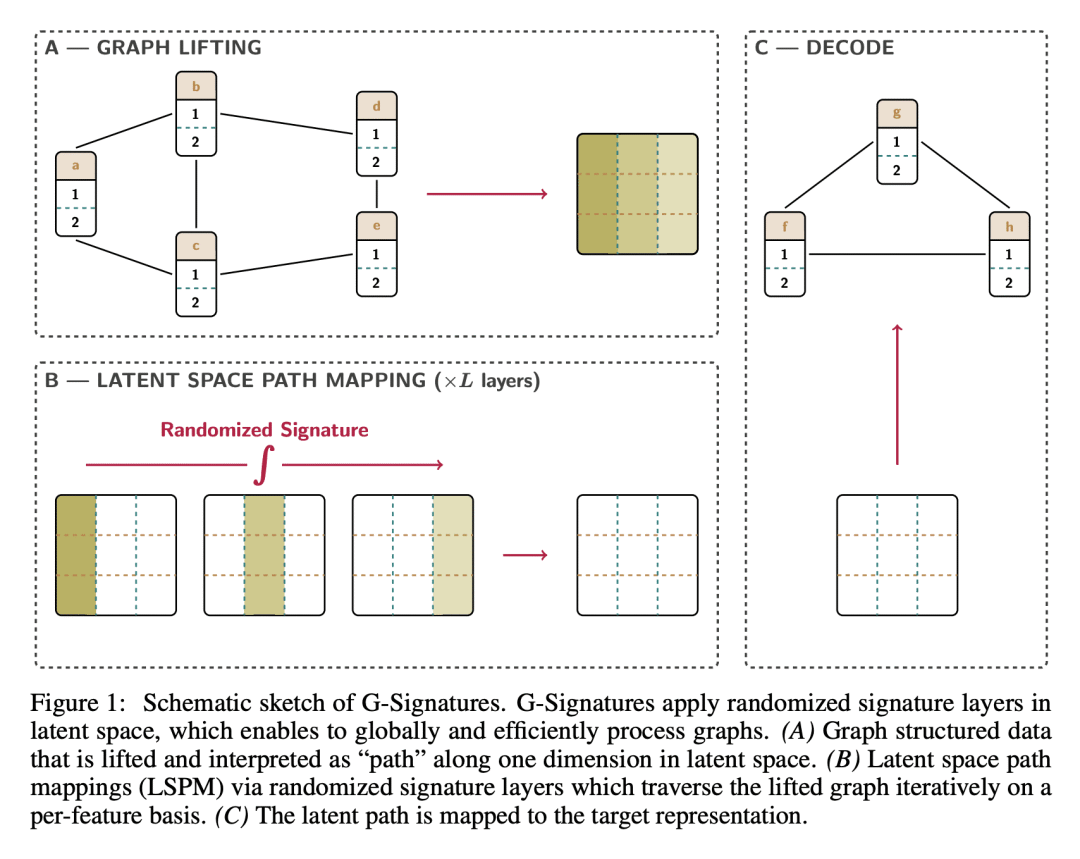

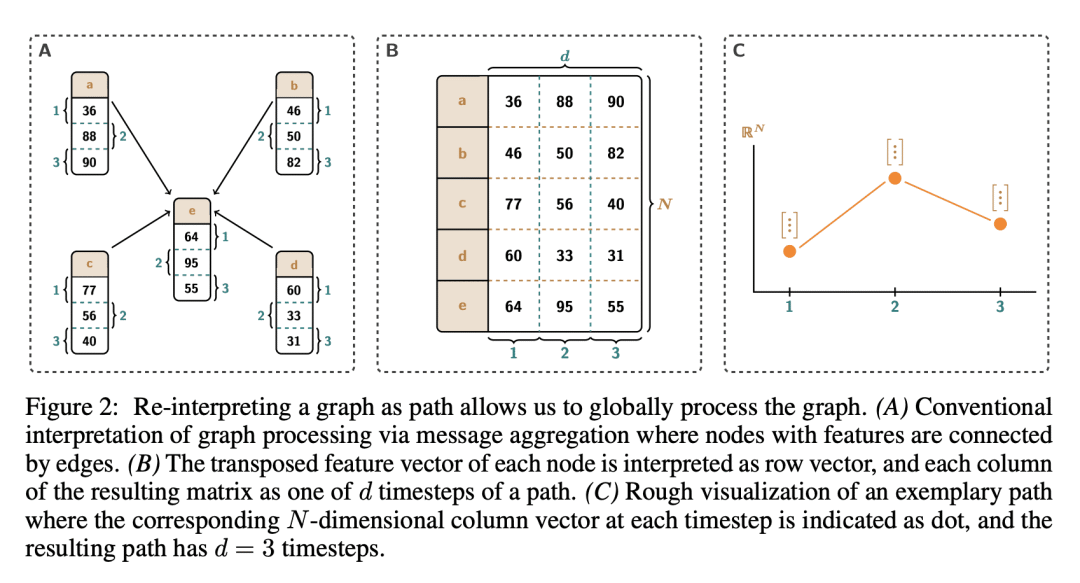

G-Signatures 是一种新的图学习方法,通过随机签名进行全局图传播; -

引入图提升和潜空间路径映射的概念,将图结构化数据视为潜空间路径; -

G-Signatures 在提取全局图属性方面表现出色,内存和计算预算大幅减少; -

实验表明,G-Signatures 在一些分类和回归任务中显示出优势。

一句话总结:

G-Signatures 是一种新的图学习方法,通过随机签名实现全局图传播,可以高效、可扩展地解决大型图问题。

Graph neural networks (GNNs) have evolved into one of the most popular deep learning architectures. However, GNNs suffer from over-smoothing node information and, therefore, struggle to solve tasks where global graph properties are relevant. We introduce G-Signatures, a novel graph learning method that enables global graph propagation via randomized signatures. G-Signatures use a new graph lifting concept to embed graph structured information, which can be interpreted as a path in latent space. We further introduce the idea of latent space path mapping, which allows us to repetitively traverse latent space paths and thus to process information globally. G-Signatures excel at extracting and processing global graph properties, and effectively scale to large graph problems. Empirically, we confirm the advantages of our G-Signatures on several classification and regression tasks.

https://arxiv.org/abs/2302.08811
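To make "randomized signatures" concrete, here is a minimal sketch of the randomized signature of a multichannel path. It uses an Euler scheme for the random controlled equation dZ = Σ_i σ(A_i Z + b_i) dX^i. The following are illustrative assumptions: tanh activations, fixed Gaussian random A_i and b_i, a random initial state, and the feature dimension and scaling. The sketch does not show how G-Signatures lift a graph into such a path (graph lifting) or traverse it repeatedly (latent space path mapping).

```python
import numpy as np

def randomized_signature(path, dim=32, seed=0, activation=np.tanh):
    """Randomized signature of a path with shape (n_points, channels).

    Euler discretization of dZ = sum_i activation(A_i Z + b_i) dX^i,
    with fixed random (untrained) A_i, b_i and Z_0 drawn from `seed`.
    """
    path = np.asarray(path, dtype=float)
    channels = path.shape[1]
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((channels, dim, dim)) / np.sqrt(dim)  # one random matrix per channel
    b = rng.standard_normal((channels, dim))                       # one random bias per channel
    z = rng.standard_normal(dim)                                   # random initial state Z_0
    for dx in np.diff(path, axis=0):                               # path increments dX
        # (channels,) @ (channels, dim) -> (dim,): sums the vector fields weighted by dX^i
        z = z + dx @ activation(A @ z + b)
    return z

t = np.linspace(0.0, 1.0, 50)
path = np.stack([np.sin(2 * np.pi * t), t ** 2], axis=1)
features = randomized_signature(path)
print(features.shape)  # (32,)
```

Only increments enter the update. Shifting the whole path by a constant therefore leaves the features unchanged, and so does repeating a sample point (a zero increment).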

2. [LG] Transformers are Sample-Efficient World Models

V Micheli, E Alonso, F Fleuret

[University of Geneva]

Transformers是样本高效的世界模型

要点:

-

深度强化学习智能体的样本效率低下,限制了它们在现实世界中的应用; -

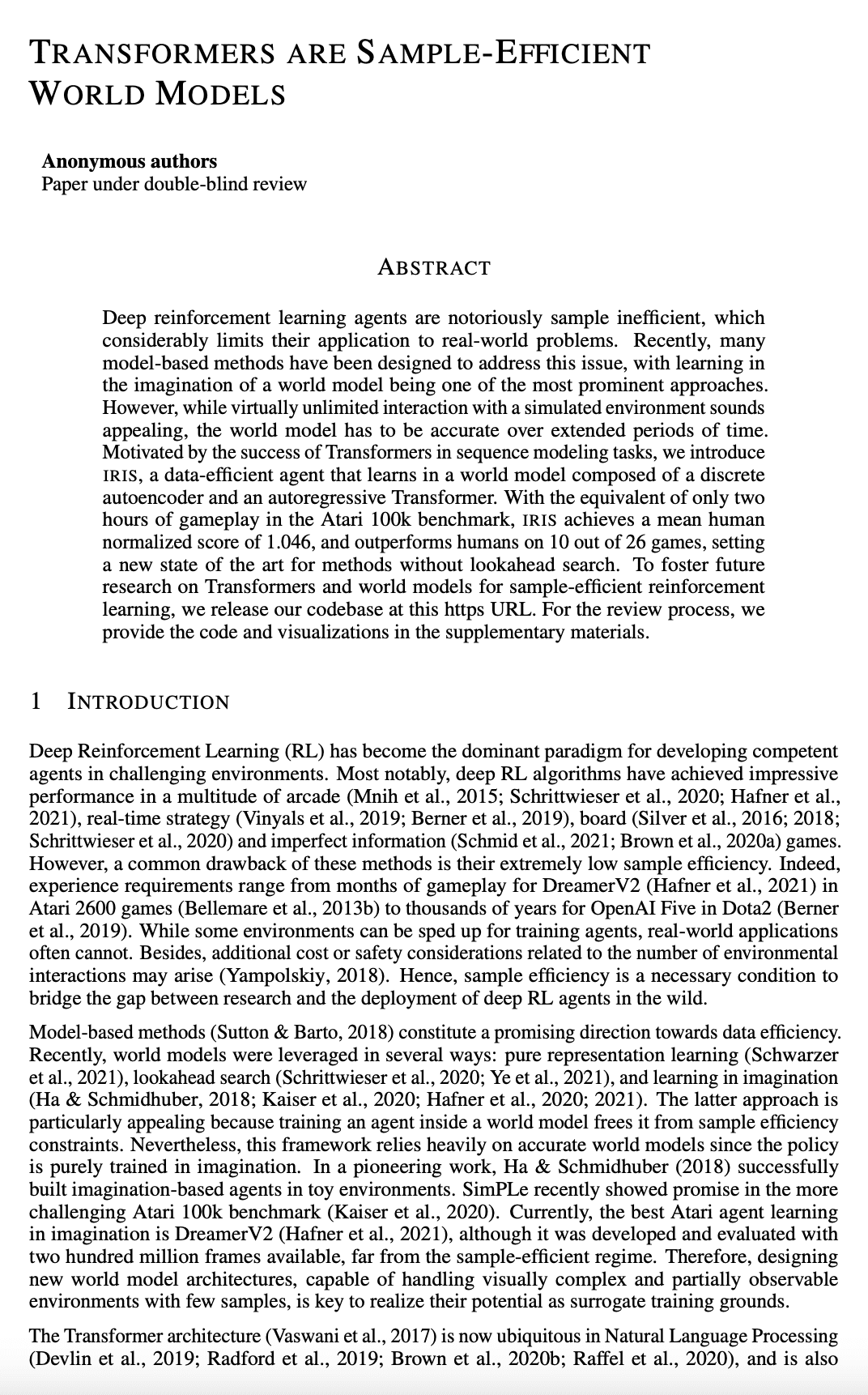

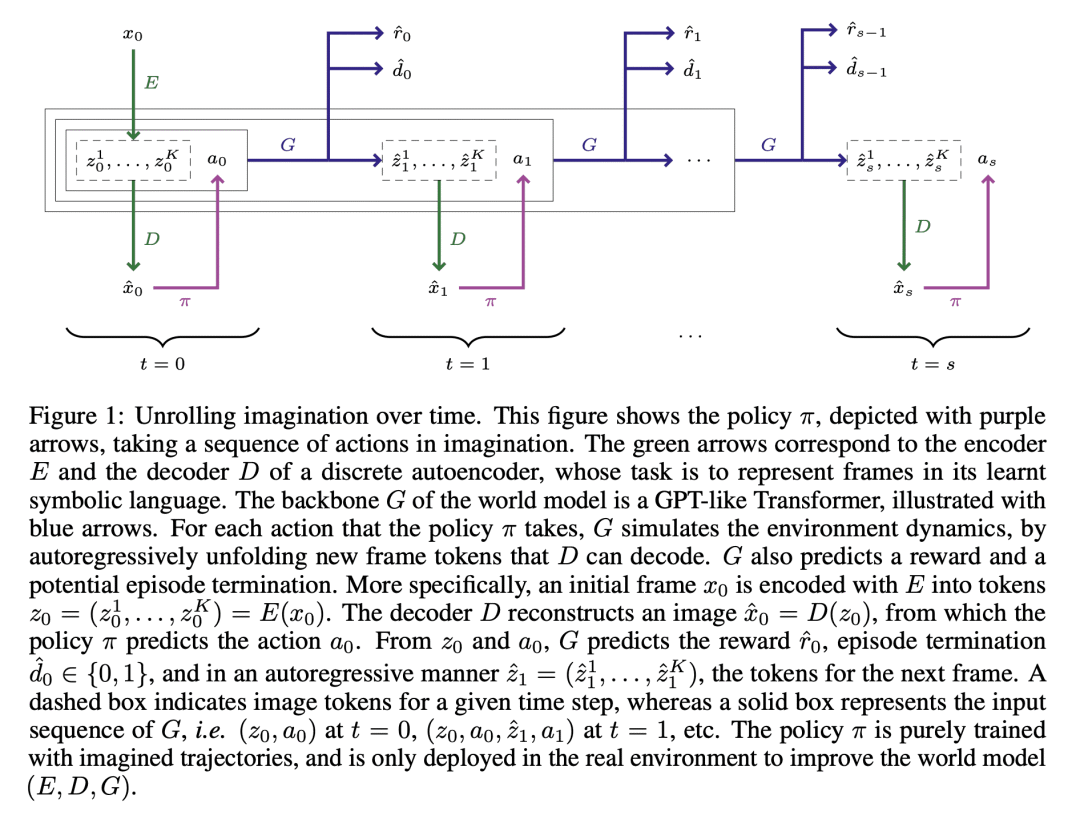

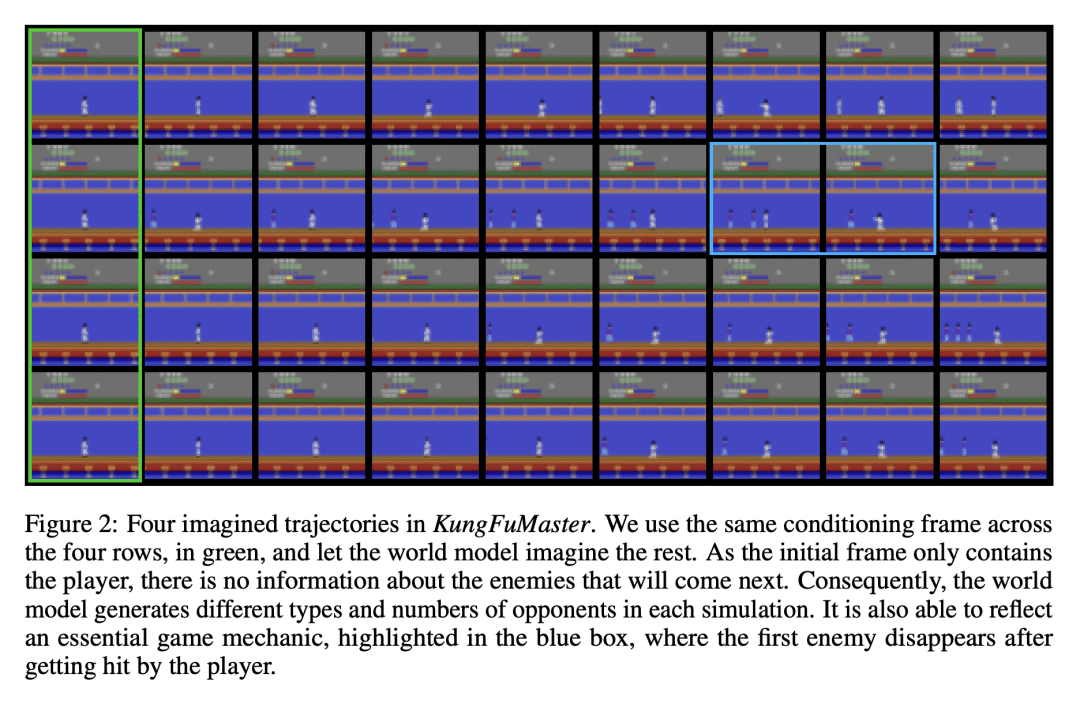

IRIS 是一个数据高效的智能体,在一个由离散自编码器和自回归 Transformer 组成的世界模型中学习; -

IRIS 实现了 1.046 的人工归一化平均分,在 Atari 100k 基准的 26 个游戏中,有 10 个超过了人类; -

IRIS 为有效解决复杂环境问题开辟了一条新的道路。
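The discrete autoencoder turns each frame into tokens that the Transformer can model. Below is a minimal sketch of the quantization step. It assumes a VQ-VAE-style tokenizer that maps every encoder output vector to the index of its nearest codebook entry. The tiny codebook and latents are made up for illustration. IRIS's actual encoder, codebook size and training losses are not shown.

```python
import numpy as np

def quantize(latents, codebook):
    """Map each latent vector (n, d) to its nearest codebook entry (K, d).

    Returns the integer token ids and the quantized vectors.
    """
    latents = np.asarray(latents, dtype=float)
    codebook = np.asarray(codebook, dtype=float)
    # Squared Euclidean distance between every latent and every code: (n, K)
    dist2 = ((latents[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    tokens = dist2.argmin(axis=1)
    return tokens, codebook[tokens]

codebook = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
latents = [[0.1, -0.1], [4.0, 4.2], [0.9, 1.2]]
tokens, quantized = quantize(latents, codebook)
print(tokens)  # [0 2 1]
```

Roughly speaking, the autoregressive Transformer learns to predict sequences of such frame tokens interleaved with actions. The agent is then trained on rollouts imagined by that model.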

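One-sentence summary:

IRIS is an agent trained in the imagination of a world model composed of a discrete autoencoder and an autoregressive Transformer. It sets a new state of the art on the Atari 100k benchmark for methods without lookahead search.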

Deep reinforcement learning agents are notoriously sample inefficient, which considerably limits their application to real-world problems. Recently, many model-based methods have been designed to address this issue, with learning in the imagination of a world model being one of the most prominent approaches. However, while virtually unlimited interaction with a simulated environment sounds appealing, the world model has to be accurate over extended periods of time. Motivated by the success of Transformers in sequence modeling tasks, we introduce IRIS, a data-efficient agent that learns in a world model composed of a discrete autoencoder and an autoregressive Transformer. With the equivalent of only two hours of gameplay in the Atari 100k benchmark, IRIS achieves a mean human normalized score of 1.046, and outperforms humans on 10 out of 26 games, setting a new state of the art for methods without lookahead search. To foster future research on Transformers and world models for sample-efficient reinforcement learning, we release our codebase at this https URL. For the review process, we provide the code and visualizations in the supplementary materials.

https://openreview.net/forum?id=vhFu1Acb0xb
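The headline number is a human-normalized score. Assuming the standard Atari convention, HNS = (agent - random) / (human - random), so 0 means random play and 1 means the human reference score. Averaging over games gives figures such as 1.046, and "outperforms humans" counts the games with HNS > 1. The raw scores below are placeholders, not results from the paper.

```python
from statistics import mean

def human_normalized_score(agent_score, random_score, human_score):
    """Standard Atari normalization: 0 = random policy, 1 = human reference."""
    return (agent_score - random_score) / (human_score - random_score)

# Hypothetical (agent, random, human) raw scores for three games.
games = {
    "game_a": (150.0, 100.0, 200.0),
    "game_b": (450.0, 50.0, 250.0),
    "game_c": (10.0, 10.0, 60.0),
}
hns = {g: human_normalized_score(*s) for g, s in games.items()}
print(hns)  # {'game_a': 0.5, 'game_b': 2.0, 'game_c': 0.0}
print(mean(hns.values()))
superhuman = sum(v > 1.0 for v in hns.values())
print(superhuman)  # 1
```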

3. [LG] Consistent Diffusion Models: Mitigating Sampling Drift by Learning to be Consistent

G Daras, Y Dagan, A G. Dimakis, C Daskalakis

[University of Texas at Austin & UC Berkeley & MIT]

一致扩散模型: 通过一致性学习减轻采样漂移

要点:

-

基于去噪分数匹配(DSM)标准训练目标仅被设计为对扩散模型中的非漂移数据进行优化; -

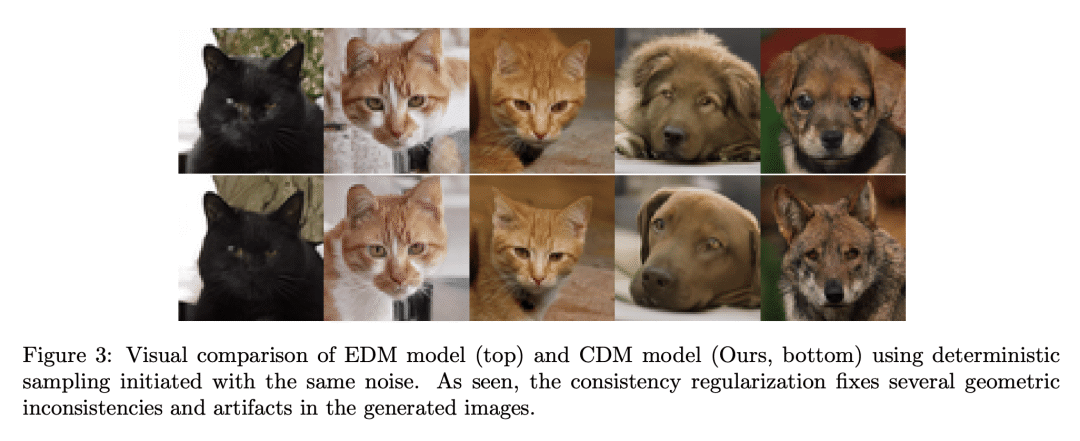

强制执行一致性属性,即模型对其自身产生的数据的预测在不同时期是一致的,这就减少了在之前经验工作中观察到的采样漂移; -

一致性属性意味着是从一些扩散过程的反向采样,如果去噪器是一致的,并且隐得分函数是一个保守域,得分函数是一组扩散过程的得分函数; -

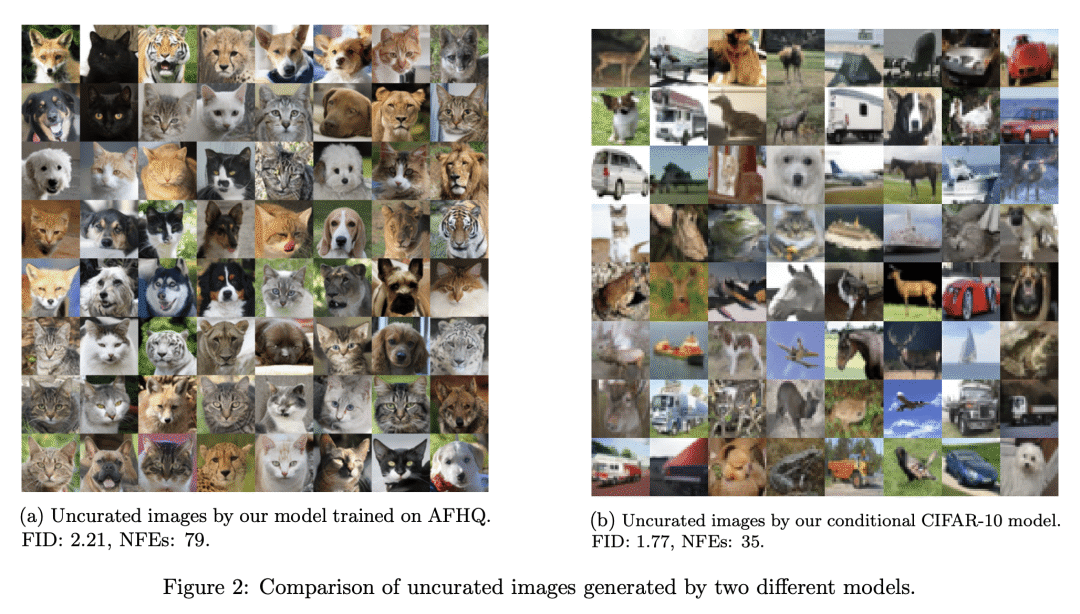

所提出的目标在 CIFAR-10 中取得了最先进的结果,在 AFHQ 和 FFHQ 中取得了基线改进。

一句话总结:

提出一个新的训练目标,在扩散模型中强制执行去噪器的一致性,实现了 CIFAR-10 中的条件和无条件生成的最先进结果,以及 AFHQ 和 FFHQ 的基线改进。

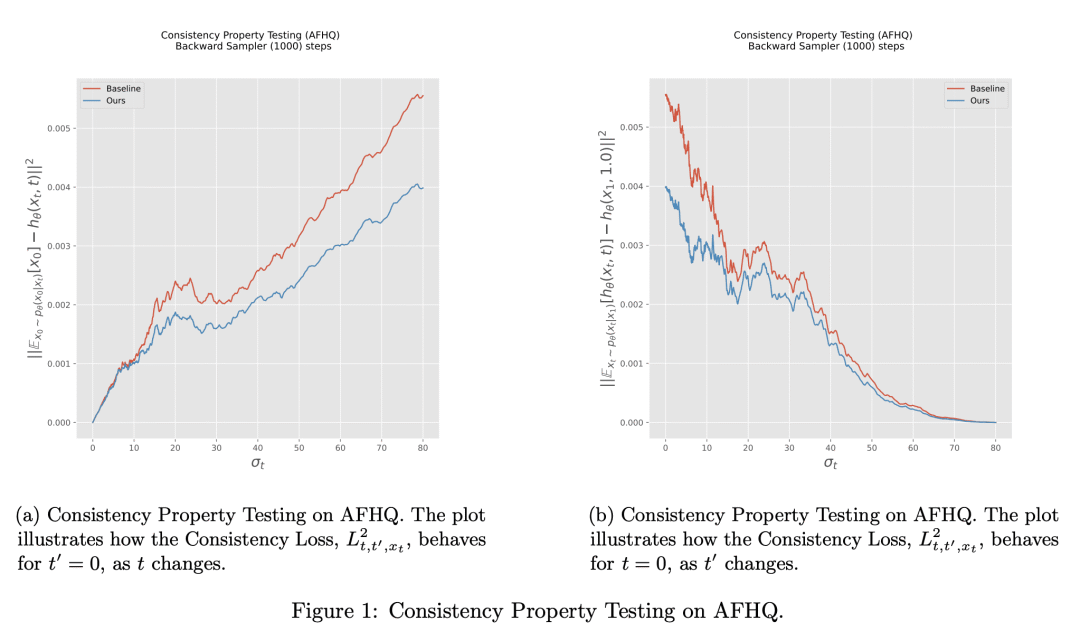

Imperfect score-matching leads to a shift between the training and the sampling distribution of diffusion models. Due to the recursive nature of the generation process, errors in previous steps yield sampling iterates that drift away from the training distribution. Yet, the standard training objective via Denoising Score Matching (DSM) is only designed to optimize over non-drifted data. To train on drifted data, we propose to enforce a *consistency* property which states that predictions of the model on its own generated data are consistent across time. Theoretically, we show that if the score is learned perfectly on some non-drifted points (via DSM) and if the consistency property is enforced everywhere, then the score is learned accurately everywhere. Empirically, we show that our novel training objective yields state-of-the-art results for conditional and unconditional generation in CIFAR-10 and baseline improvements in AFHQ and FFHQ. We open-source our code and models: this https URL

https://arxiv.org/abs/2302.09057
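The toy sketch below shows what the consistency property asks for in one dimension. It assumes a variance-exploding process x_t = x_0 + σ_t z. It also assumes a sampler that draws x_s from the Gaussian posterior q(x_s | x_t, x_0), with x_0 replaced by the model's own prediction. The property says the prediction D(x_t, σ_t) should equal the average of D(x_s, σ_s) over the model's own samples x_s. The exact posterior-mean denoiser for Gaussian data satisfies this. The hand-made `ad_hoc_denoiser` does not; it is a hypothetical stand-in for an imperfectly trained network. This only illustrates the property; the paper's actual loss, sampler and network are not reproduced.

```python
import numpy as np

def gaussian_posterior_denoiser(s2):
    """Exact E[x0 | x_t] when x0 ~ N(0, s2) and x_t = x0 + sigma * z."""
    return lambda x, sigma: s2 / (s2 + sigma**2) * x

def ad_hoc_denoiser(x, sigma):
    """Hypothetical denoiser that is not the posterior mean of this process."""
    return x / (1.0 + sigma)

def reverse_step(denoiser, x_t, sigma_t, sigma_s, rng):
    """Sample x_s ~ q(x_s | x_t, x0 = D(x_t, sigma_t)) for sigma_s < sigma_t."""
    x0_hat = denoiser(x_t, sigma_t)
    ratio = sigma_s**2 / sigma_t**2
    mean = x0_hat + ratio * (x_t - x0_hat)
    std = np.sqrt(sigma_s**2 * (1.0 - ratio))
    return mean + std * rng.standard_normal(np.shape(x_t))

def consistency_gap(denoiser, x_t, sigma_t, sigma_s, n_samples=200_000, seed=0):
    """|D(x_t, sigma_t) - mean of D(x_s, sigma_s)| over the model's own samples x_s."""
    rng = np.random.default_rng(seed)
    x_t_batch = np.full(n_samples, float(x_t))
    x_s = reverse_step(denoiser, x_t_batch, sigma_t, sigma_s, rng)
    return abs(denoiser(x_t, sigma_t) - denoiser(x_s, sigma_s).mean())

exact = gaussian_posterior_denoiser(s2=1.0)
print(consistency_gap(exact, x_t=2.0, sigma_t=2.0, sigma_s=0.5))            # close to 0
print(consistency_gap(ad_hoc_denoiser, x_t=2.0, sigma_t=2.0, sigma_s=0.5))  # about 0.167
```

Penalizing a squared version of such a gap on the model's own samples is one way to give the network a training signal at drifted points, which plain DSM never sees.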

4. [CL] Massively Multilingual Shallow Fusion with Large Language Models

K Hu, T N. Sainath, B Li, N Du, Y Huang, A M. Dai, Y Zhang, R Cabrera, Z Chen, T Strohman

[Google]

基于大型语言模型的大规模多语言浅融合

要点:

-

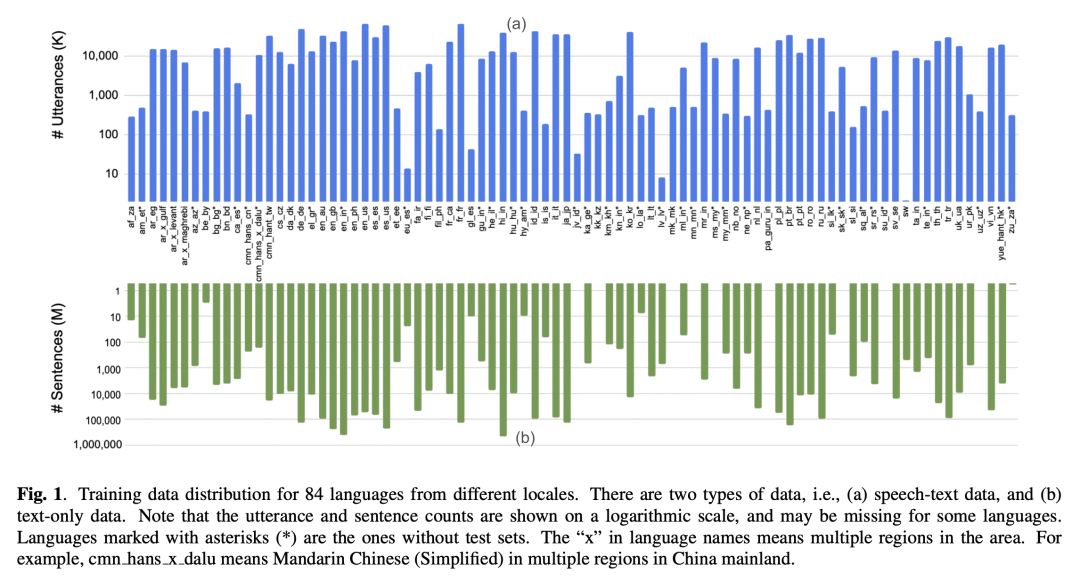

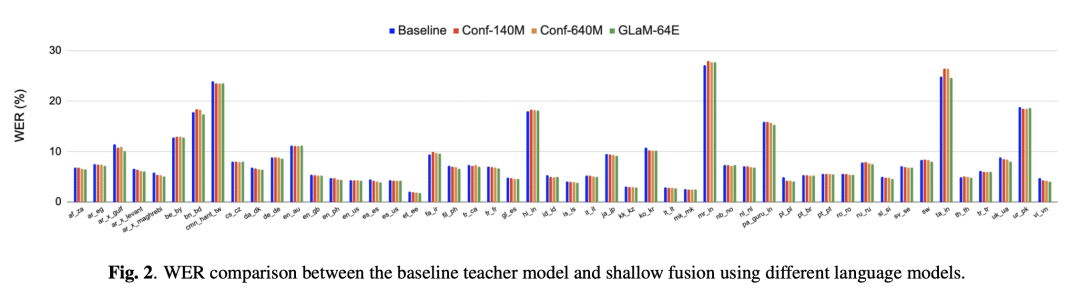

相对于类似计算的稠密型语言模型,GLaM 将英语长尾测试集的误码率降低了4.4%; -

在多语言浅层融合任务中,GLaM 改善了50种语言中 41 种语言的误码率,平均降低了 3.85%,最大相对降低了 10%。 -

与基线模型相比,GLaM 在 43 种语言中实现了平均 5.53% 的误码率降低; -

尽管 GLaM 模型很大(1.9GB),但由于其 MoE 架构,其推理计算量与 140M 稠密语言模型相似。
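The low inference cost comes from sparse routing. For each token, a gating network scores all experts, but only the two best are evaluated. The sketch below shows top-2 gating under two assumptions: a linear gate, and a softmax that renormalizes the two selected gate weights. GLaM's real gating, load balancing and expert networks are not shown, and the toy experts are hypothetical.

```python
import numpy as np

def top2_moe(x, gate_w, experts):
    """Route one token x (d,) through the 2 highest-scoring of len(experts) experts."""
    logits = x @ gate_w                      # (n_experts,) gate scores
    top2 = np.argsort(logits)[-2:][::-1]     # indices of the two largest scores
    w = np.exp(logits[top2] - logits[top2].max())
    w = w / w.sum()                          # softmax over the selected pair only
    out = sum(wi * experts[i](x) for wi, i in zip(w, top2))
    return out, top2

calls = []
def make_expert(k):
    def expert(x):
        calls.append(k)      # record which experts actually run
        return (k + 1) * x
    return expert

n_experts, d = 8, 4
experts = [make_expert(k) for k in range(n_experts)]
gate_w = np.zeros((d, n_experts))
gate_w[:, 5] = 1.0   # expert 5 gets logit 4.0 for an all-ones token
gate_w[:, 3] = 0.5   # expert 3 gets logit 2.0
out, chosen = top2_moe(np.ones(d), gate_w, experts)
print(chosen.tolist(), sorted(calls))  # [5, 3] [3, 5]
```

Only two expert calls happen per token however many experts exist. That is why per-token compute stays roughly constant as the number of experts grows.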

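One-sentence summary:

The paper proposes using a massively multilingual language model (GLaM) for shallow fusion in automatic speech recognition, achieving significant improvements across many languages.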

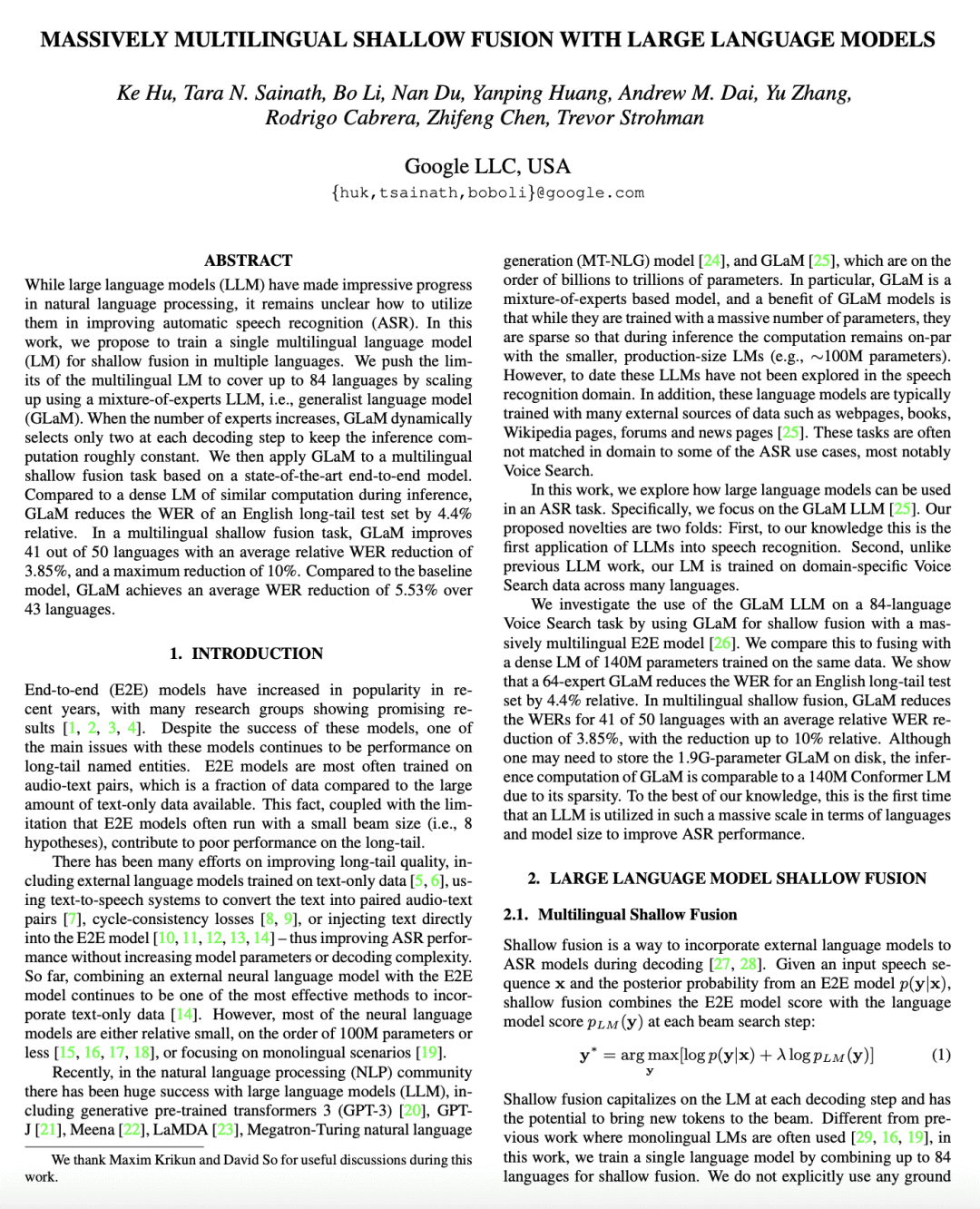

While large language models (LLM) have made impressive progress in natural language processing, it remains unclear how to utilize them in improving automatic speech recognition (ASR). In this work, we propose to train a single multilingual language model (LM) for shallow fusion in multiple languages. We push the limits of the multilingual LM to cover up to 84 languages by scaling up using a mixture-of-experts LLM, i.e., generalist language model (GLaM). When the number of experts increases, GLaM dynamically selects only two at each decoding step to keep the inference computation roughly constant. We then apply GLaM to a multilingual shallow fusion task based on a state-of-the-art end-to-end model. Compared to a dense LM of similar computation during inference, GLaM reduces the WER of an English long-tail test set by 4.4% relative. In a multilingual shallow fusion task, GLaM improves 41 out of 50 languages with an average relative WER reduction of 3.85%, and a maximum reduction of 10%. Compared to the baseline model, GLaM achieves an average WER reduction of 5.53% over 43 languages.

https://arxiv.org/abs/2302.08917
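Shallow fusion combines the ASR model and the external LM only at decoding time. Each hypothesis y is scored with log p_ASR(y | x) + λ log p_LM(y). In practice the rule is applied token by token inside beam search. The sketch below applies the same log-linear rule to complete hypotheses, which is the simplest way to see its effect. The hypotheses, log-probabilities and λ values are made up for illustration.

```python
def shallow_fusion_score(asr_logprob, lm_logprob, lm_weight):
    """Log-linear interpolation used in shallow fusion."""
    return asr_logprob + lm_weight * lm_logprob

def best_hypothesis(hyps, lm_weight):
    """hyps maps text -> (log p_ASR(y|x), log p_LM(y))."""
    return max(hyps, key=lambda y: shallow_fusion_score(*hyps[y], lm_weight))

hyps = {
    "recognize speech": (-2.0, -5.0),
    "wreck a nice beach": (-1.8, -9.0),
}
print(best_hypothesis(hyps, lm_weight=0.0))  # wreck a nice beach
print(best_hypothesis(hyps, lm_weight=0.3))  # recognize speech
```

With a single multilingual LM, the same fusion rule serves every language, so one model replaces a collection of per-language LMs.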

5. [CL] Pretraining Language Models with Human Preferences

T Korbak, K Shi, A Chen, R Bhalerao, C L. Buckley, J Phang, S R. Bowman, E Perez

[University of Sussex & New York University & Northeastern University]

基于人工偏好预训练语言模型

要点:

-

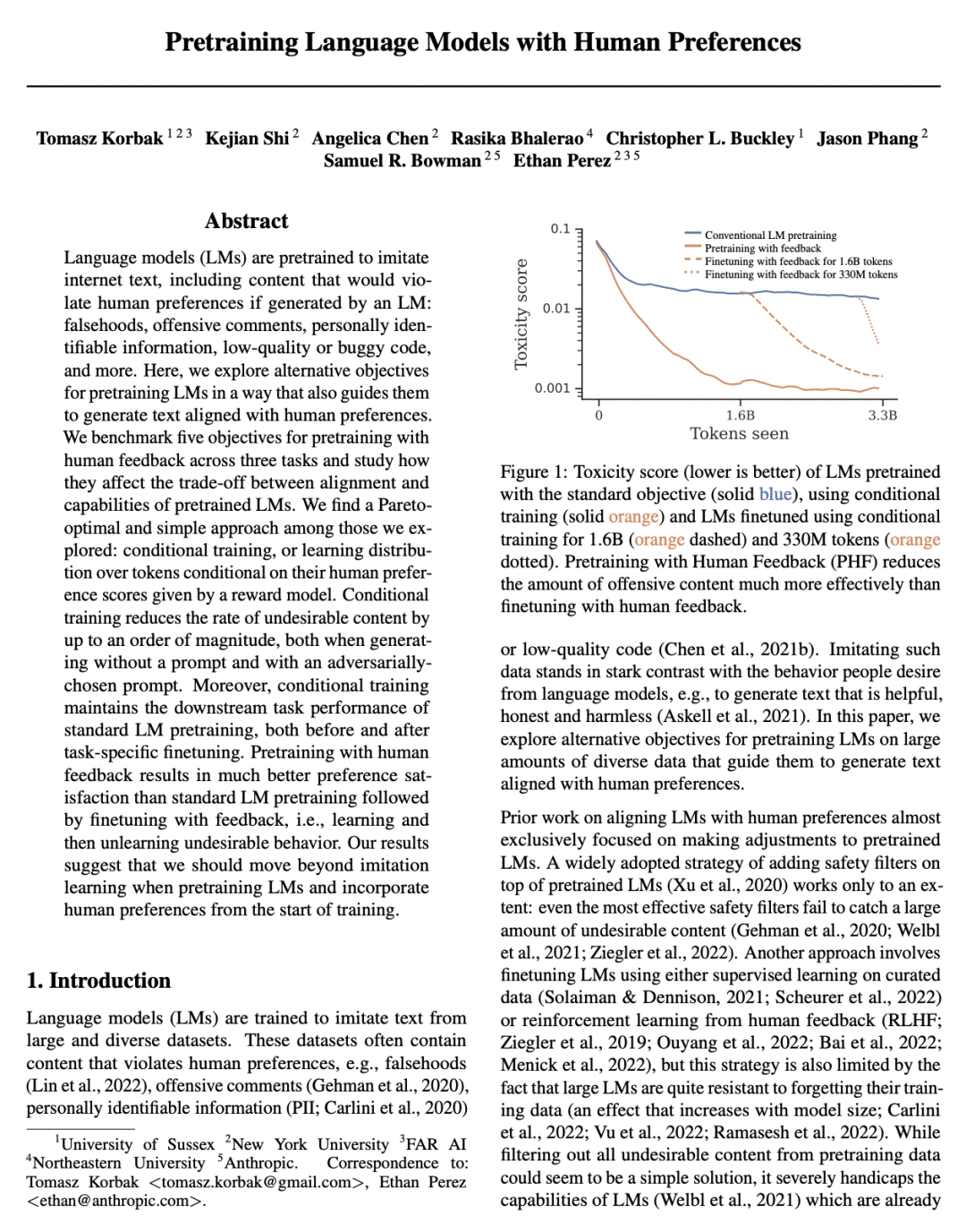

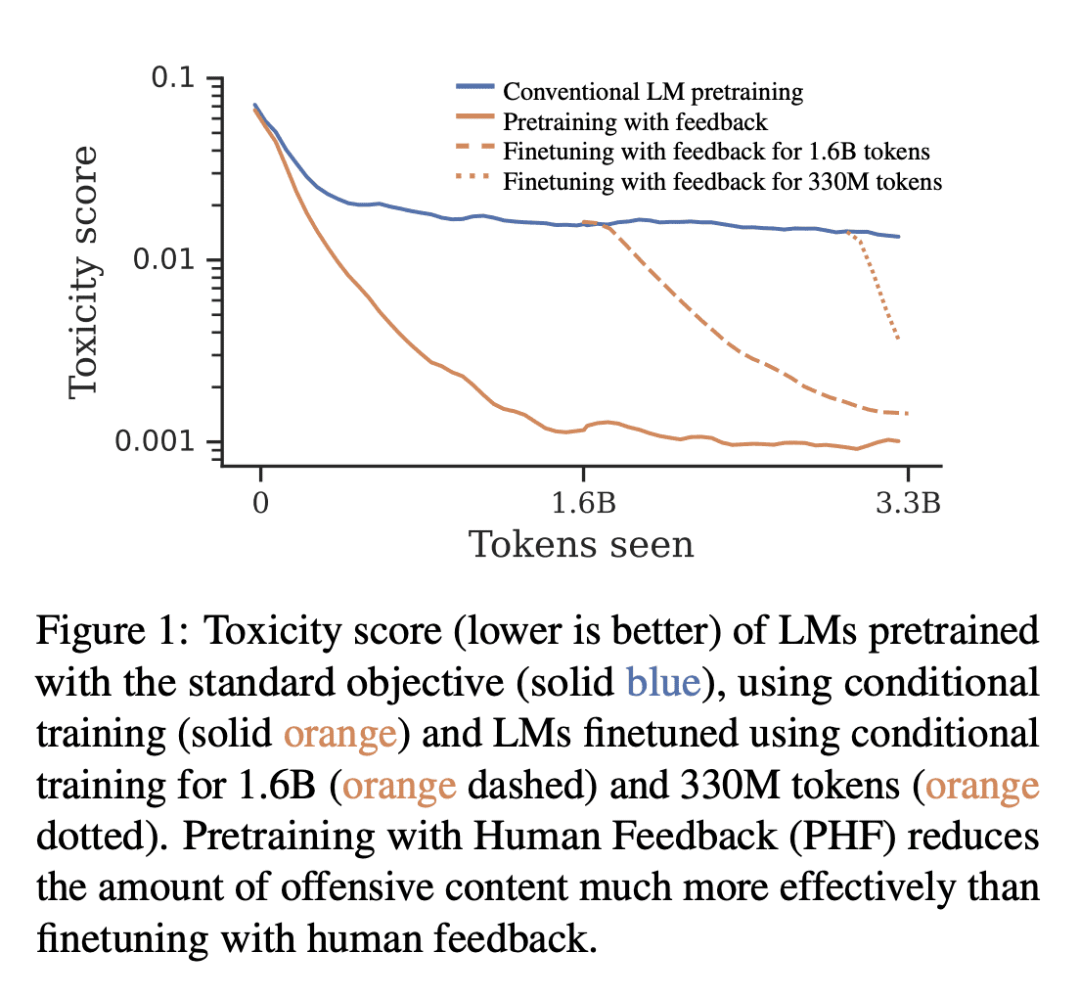

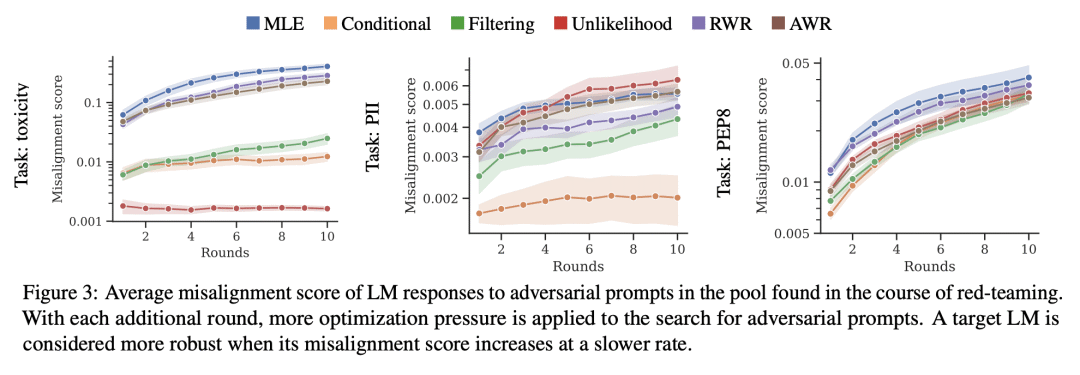

利用人工反馈对语言模型进行预训练,可以使模型生成的文本更符合人工偏好,并在下游任务中表现良好; -

基于人工反馈的条件训练,是预训练语言模型的一种简单而有效的方法; -

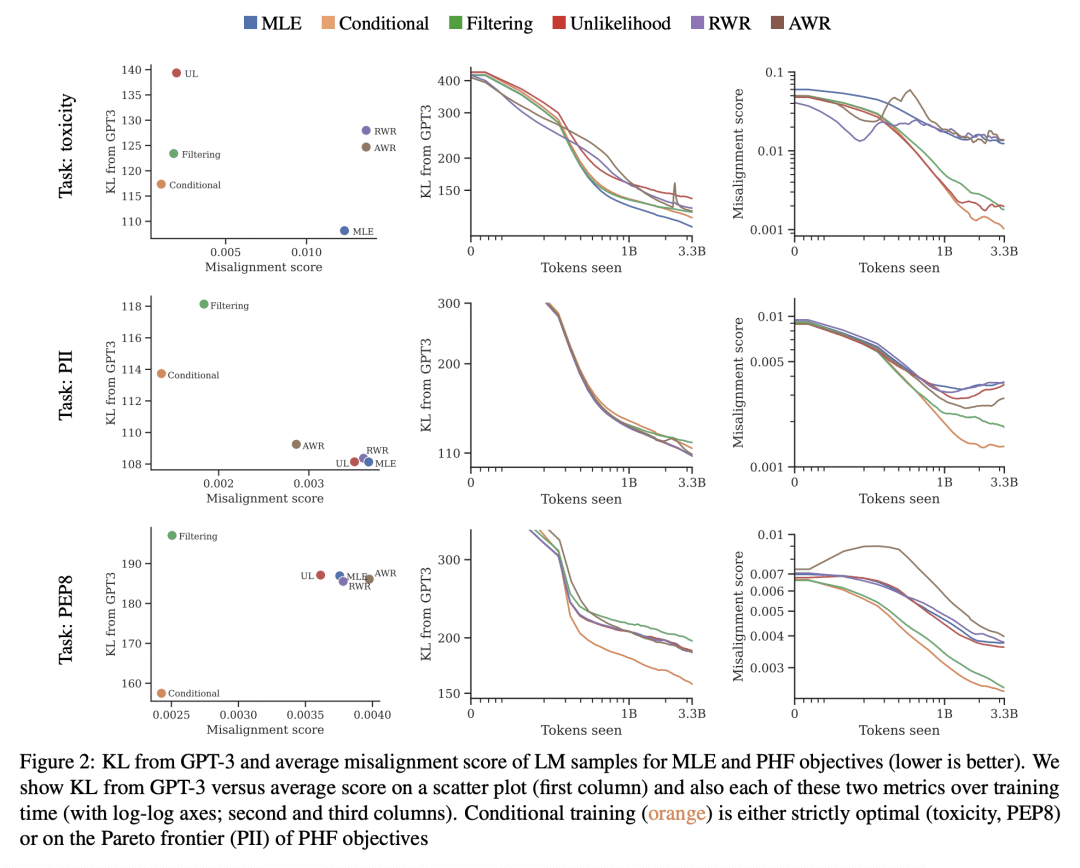

在预训练中纳入人工偏好,可以将语言模型产生的不良内容的比率降低一个数量级; -

与标准的语言模型预训练和基于反馈的微调相比,基于人工反馈的预训练能带来更好的偏好满足。

一句话总结:

语言模型可以通过预训练,用基于人工反馈的条件训练,生成与人类偏好相一致的文本,减少不良内容的比率,并保持下游的任务表现。

Language models (LMs) are pretrained to imitate internet text, including content that would violate human preferences if generated by an LM: falsehoods, offensive comments, personally identifiable information, low-quality or buggy code, and more. Here, we explore alternative objectives for pretraining LMs in a way that also guides them to generate text aligned with human preferences. We benchmark five objectives for pretraining with human feedback across three tasks and study how they affect the trade-off between alignment and capabilities of pretrained LMs. We find a Pareto-optimal and simple approach among those we explored: conditional training, or learning a distribution over tokens conditional on their human preference scores given by a reward model. Conditional training reduces the rate of undesirable content by up to an order of magnitude, both when generating without a prompt and with an adversarially-chosen prompt. Moreover, conditional training maintains the downstream task performance of standard LM pretraining, both before and after task-specific finetuning. Pretraining with human feedback results in much better preference satisfaction than standard LM pretraining followed by finetuning with feedback, i.e., learning and then unlearning undesirable behavior. Our results suggest that we should move beyond imitation learning when pretraining LMs and incorporate human preferences from the start of training.

https://arxiv.org/abs/2302.08582
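Conditional training essentially changes the training data rather than the loss. Each segment is prefixed with a control token chosen from its reward-model score, and at inference time generation is conditioned on the "good" token. The sketch below rests on several assumptions: sentence-level segments, a higher score meaning more preferred, and a fixed threshold. The token strings and the threshold are illustrative, not the paper's exact settings.

```python
GOOD, BAD = "<|good|>", "<|bad|>"  # hypothetical control tokens

def annotate(segments, scores, threshold):
    """Prefix every segment with a control token derived from its preference score."""
    return "".join(
        (GOOD if score >= threshold else BAD) + segment
        for segment, score in zip(segments, scores)
    )

def generation_prompt(prompt=""):
    """At inference time, condition the model on the desirable token."""
    return GOOD + prompt

doc = annotate(
    ["The sky is blue. ", "Placeholder for an undesirable sentence. "],
    scores=[0.9, 0.1],
    threshold=0.5,
)
print(doc)  # <|good|>The sky is blue. <|bad|>Placeholder for an undesirable sentence. 
```

The ordinary next-token loss is then applied to the annotated text. The model learns the distribution of text conditional on its preference token, so undesirable text is still modeled but tied to the token that is never requested at inference.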

A few more papers worth noting:

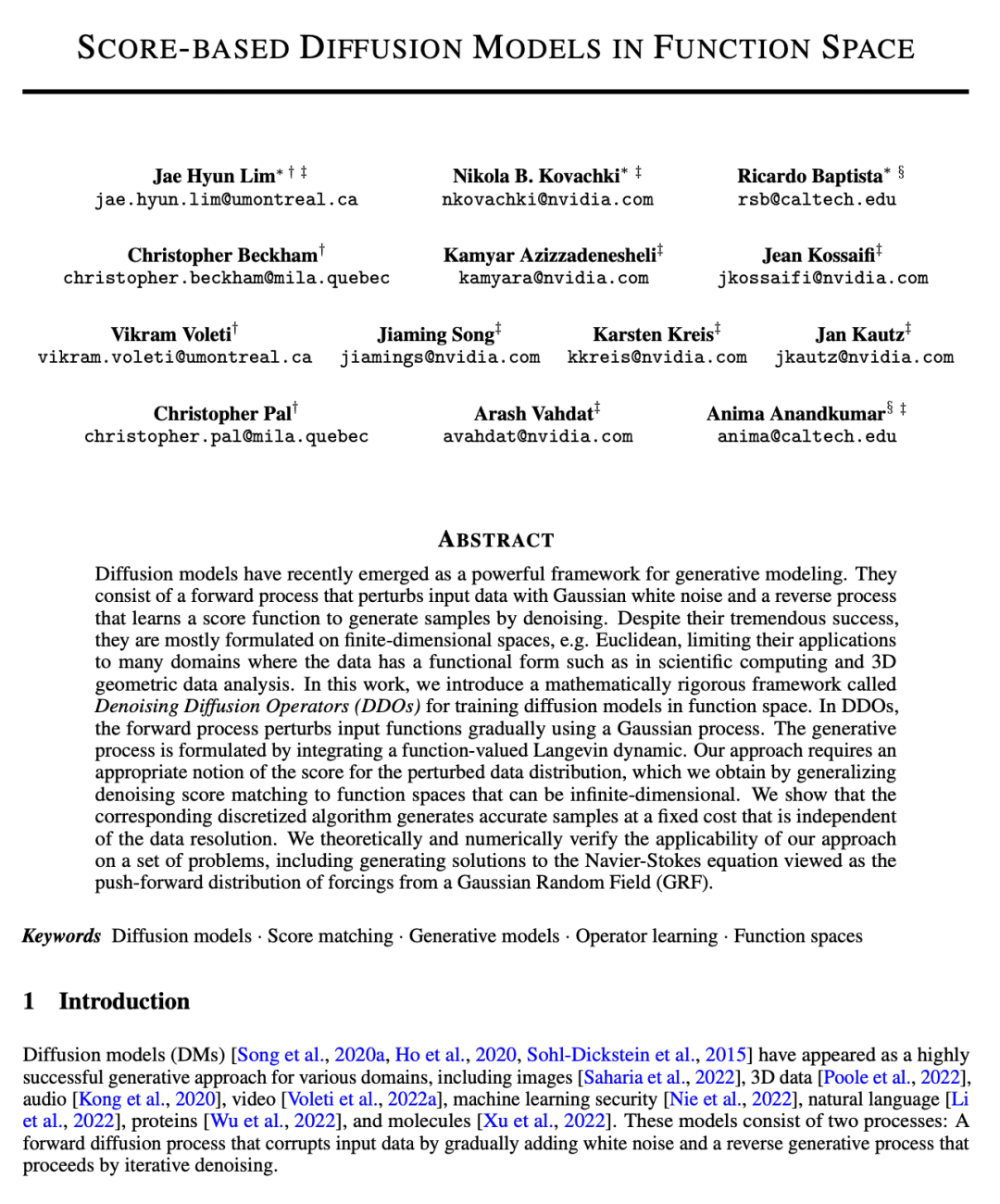

[LG] Score-based Diffusion Models in Function Space

J H Lim, N B. Kovachki, R Baptista, C Beckham, K Azizzadenesheli…

[Université de Montréal & NVIDIA & California Institute of Technology]

函数空间的分数扩散模型

要点:

-

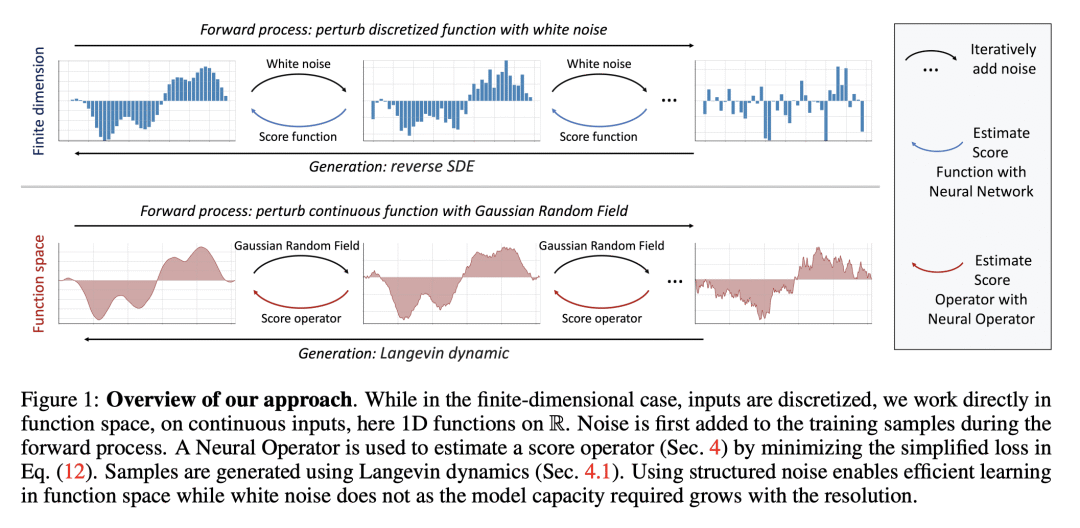

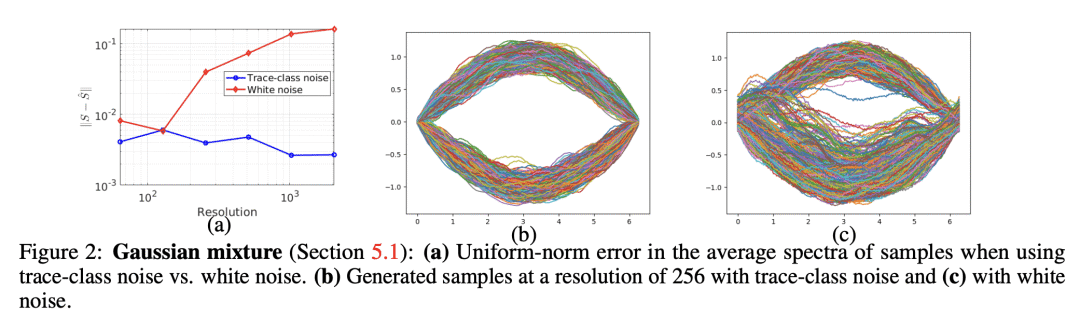

DDO 提供了一个数学上的严格框架,用于在抽象希尔伯特空间设置下对函数值数据进行去噪分数匹配; -

DDO 提出一种扩散模型,利用无穷大的 Langevin 动力学从数据分布中增量采样; -

DDO 对固定模型容量的空间离散化是不变的,并准确地从高斯随机场(GRF)在 Navier-Stokes 解算子下的随机扰动的前推中产生样本; -

DDO 推广了数据希尔伯特空间中 trace 级噪声破坏的去噪分数匹配,并为函数空间中的生成建模提供了一种分辨率不变的方法。

一句话总结:

去噪扩散算子(DDO)为训练函数空间中的扩散模型提供了一个数学上严格的框架,允许独立于数据分辨率的精确样本生成。

Diffusion models have recently emerged as a powerful framework for generative modeling. They consist of a forward process that perturbs input data with Gaussian white noise and a reverse process that learns a score function to generate samples by denoising. Despite their tremendous success, they are mostly formulated on finite-dimensional spaces, e.g. Euclidean, limiting their applications to many domains where the data has a functional form such as in scientific computing and 3D geometric data analysis. In this work, we introduce a mathematically rigorous framework called Denoising Diffusion Operators (DDOs) for training diffusion models in function space. In DDOs, the forward process perturbs input functions gradually using a Gaussian process. The generative process is formulated by integrating a function-valued Langevin dynamic. Our approach requires an appropriate notion of the score for the perturbed data distribution, which we obtain by generalizing denoising score matching to function spaces that can be infinite-dimensional. We show that the corresponding discretized algorithm generates accurate samples at a fixed cost that is independent of the data resolution. We theoretically and numerically verify the applicability of our approach on a set of problems, including generating solutions to the Navier-Stokes equation viewed as the push-forward distribution of forcings from a Gaussian Random Field (GRF).

https://arxiv.org/abs/2302.07400
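Resolution invariance rests on perturbing functions with a Gaussian random field (trace-class noise) rather than pointwise white noise. The noise is defined as a function, so it can be evaluated on any grid. The sketch below works on the periodic interval [0, 1). It assumes a truncated Karhunen-Loève expansion with a Matérn-like spectrum λ_k = (4π²k² + τ²)^(-α). The values of α and τ and the number of modes are illustrative, not DDO's settings. The coefficients are sampled once and evaluated on a coarse and a fine grid, which gives the same values at shared points. White noise drawn independently per grid point cannot do that.

```python
import numpy as np

def grf_coefficients(n_modes, seed=0):
    """Standard normal Karhunen-Loeve coefficients for cosine and sine modes."""
    rng = np.random.default_rng(seed)
    return rng.standard_normal(n_modes), rng.standard_normal(n_modes)

def grf_on_grid(xi, eta, n_points, alpha=2.0, tau=3.0):
    """Evaluate one GRF sample on the grid x_j = j / n_points in [0, 1)."""
    k = np.arange(1, len(xi) + 1)
    sqrt_lam = (4 * np.pi**2 * k**2 + tau**2) ** (-alpha / 2)  # decaying spectrum: trace class
    x = np.arange(n_points) / n_points
    phase = 2 * np.pi * np.outer(x, k)                          # (n_points, n_modes)
    return np.cos(phase) @ (sqrt_lam * xi) + np.sin(phase) @ (sqrt_lam * eta)

xi, eta = grf_coefficients(n_modes=64)
coarse = grf_on_grid(xi, eta, n_points=64)
fine = grf_on_grid(xi, eta, n_points=256)
print(np.allclose(fine[::4], coarse))  # True

# Illustrative forward perturbation of an input function u0 at noise level 0.5.
u0 = np.sin(2 * np.pi * np.arange(256) / 256)
u_t = u0 + 0.5 * fine
```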

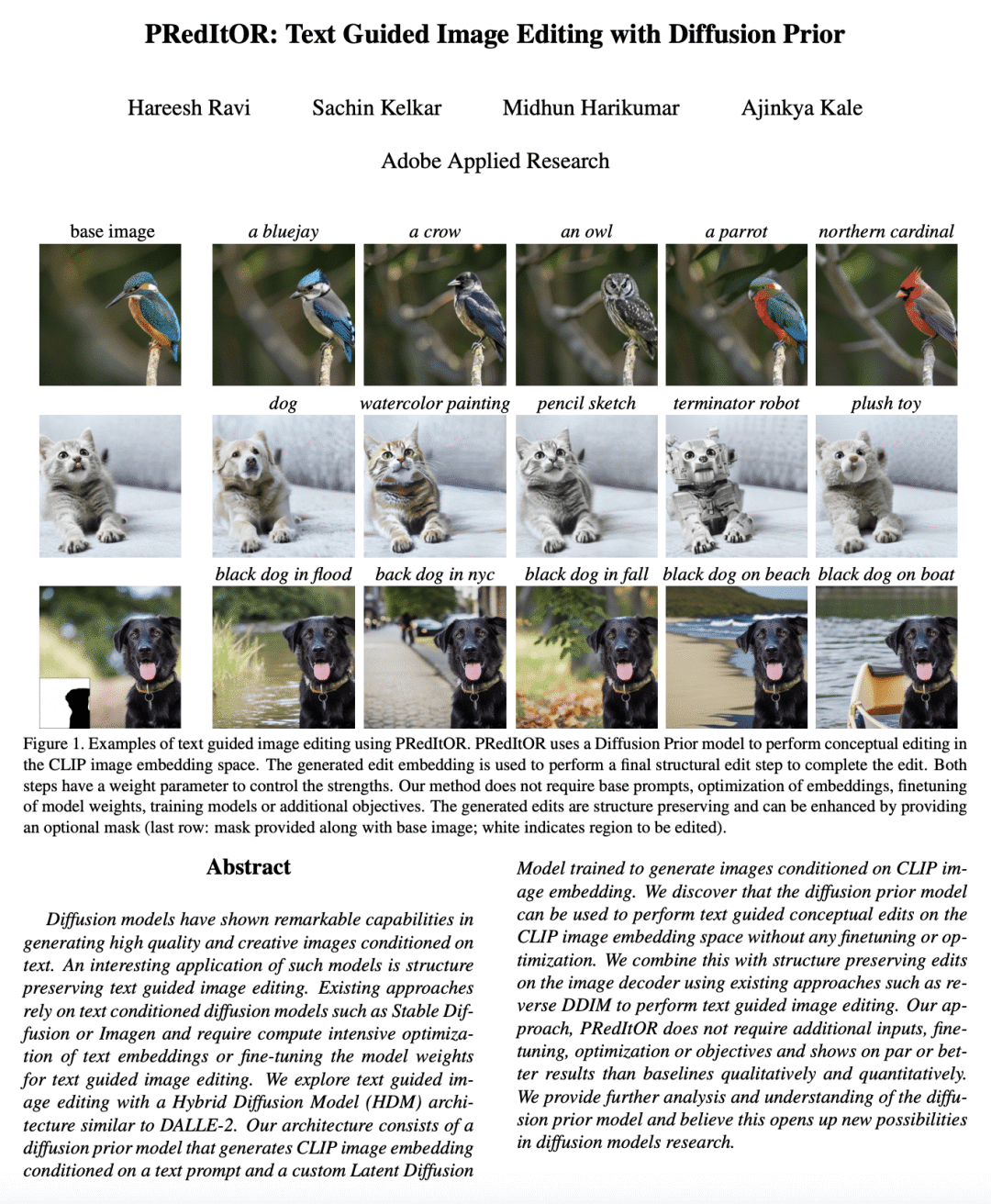

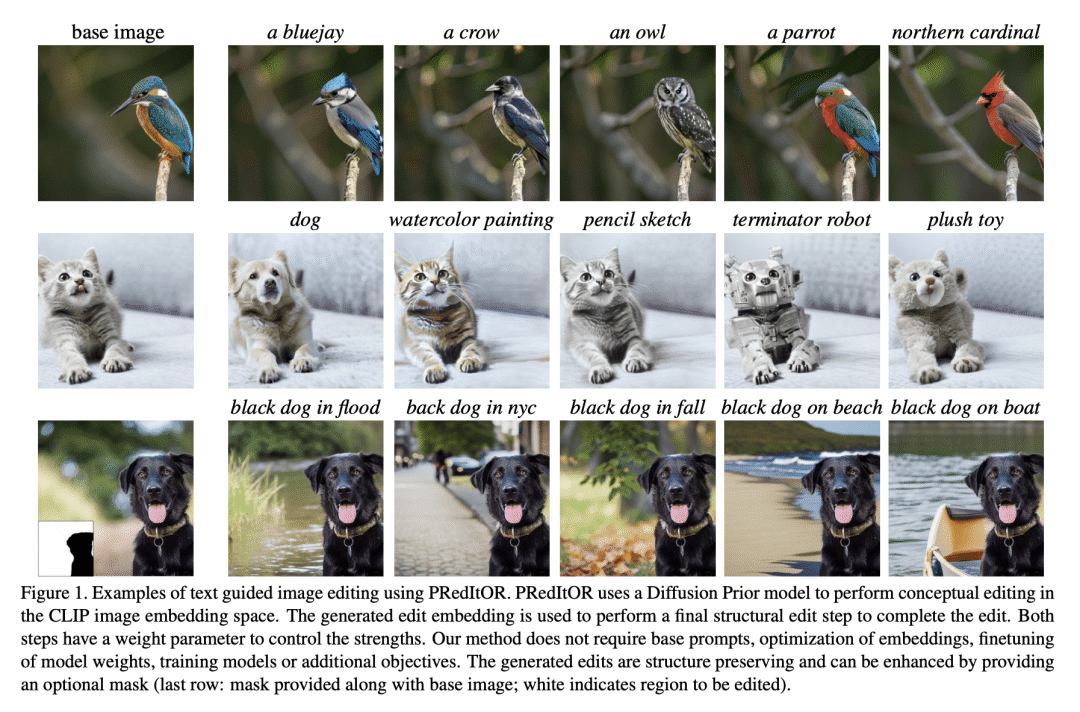

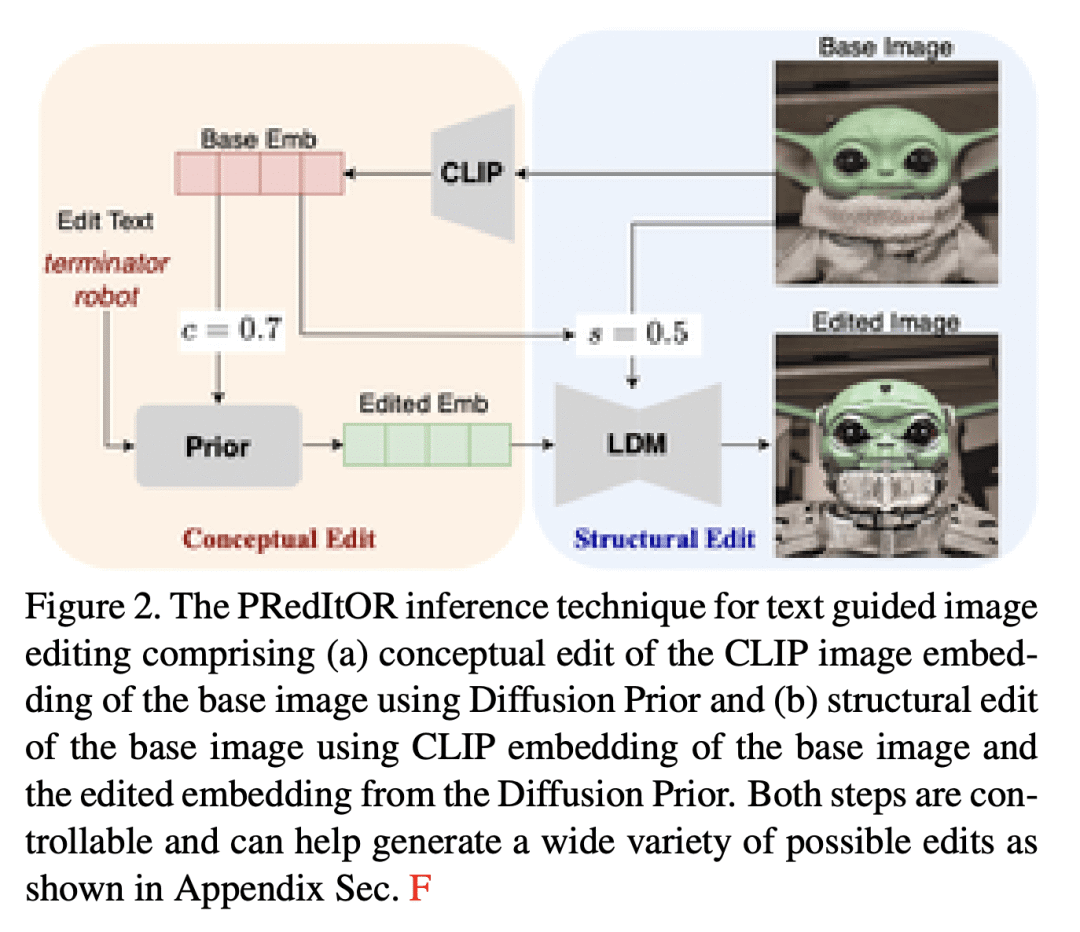

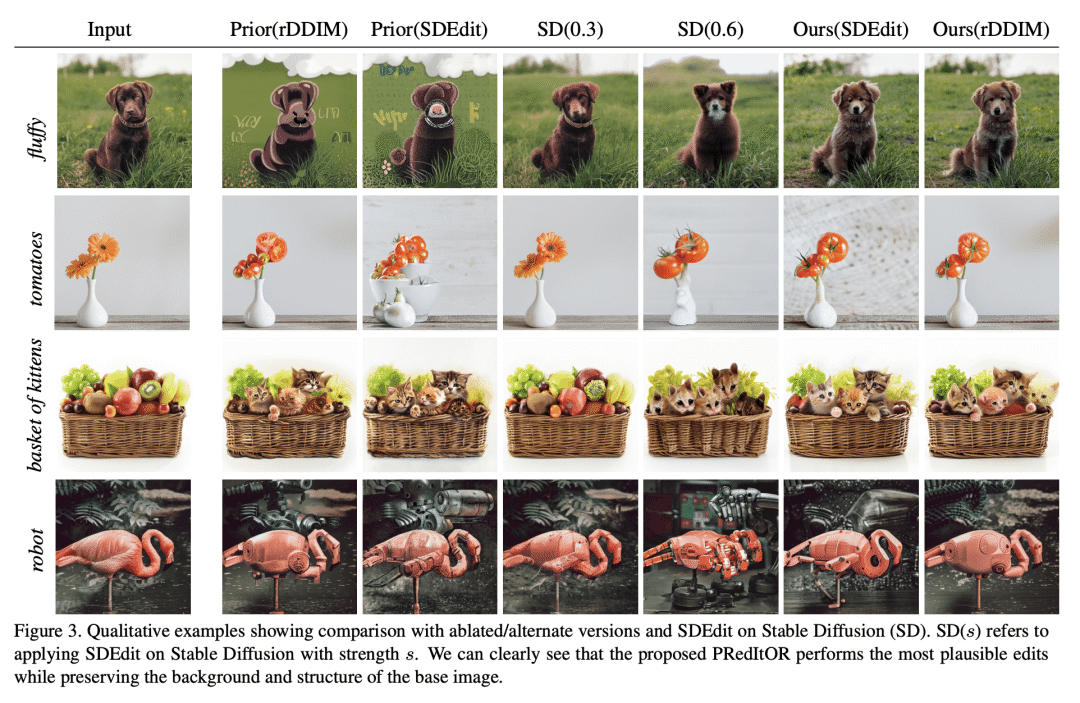

[CV] PRedItOR: Text Guided Image Editing with Diffusion Prior

H Ravi, S Kelkar, M Harikumar, A Kale

[Adobe Applied Research]

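Key points:

- PRedItOR uses a Hybrid Diffusion Model to perform structure-preserving, text-guided image editing;
- The diffusion prior model can perform text-guided conceptual edits in the CLIP image embedding space without any finetuning or optimization;
- PRedItOR requires no additional inputs, finetuning, optimization or objectives, and shows results on par with or better than baselines, both qualitatively and quantitatively.

One-sentence summary:

PRedItOR is a new method for text-guided image editing with a hybrid diffusion model. It requires no additional inputs or optimization and shows results comparable to or better than existing baselines.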

Diffusion models have shown remarkable capabilities in generating high quality and creative images conditioned on text. An interesting application of such models is structure preserving text guided image editing. Existing approaches rely on text conditioned diffusion models such as Stable Diffusion or Imagen and require compute intensive optimization of text embeddings or fine-tuning the model weights for text guided image editing. We explore text guided image editing with a Hybrid Diffusion Model (HDM) architecture similar to DALLE-2. Our architecture consists of a diffusion prior model that generates CLIP image embedding conditioned on a text prompt and a custom Latent Diffusion Model trained to generate images conditioned on CLIP image embedding. We discover that the diffusion prior model can be used to perform text guided conceptual edits on the CLIP image embedding space without any finetuning or optimization. We combine this with structure preserving edits on the image decoder using existing approaches such as reverse DDIM to perform text guided image editing. Our approach, PRedItOR does not require additional inputs, fine-tuning, optimization or objectives and shows on par or better results than baselines qualitatively and quantitatively. We provide further analysis and understanding of the diffusion prior model and believe this opens up new possibilities in diffusion models research.

https://arxiv.org/abs/2302.07979

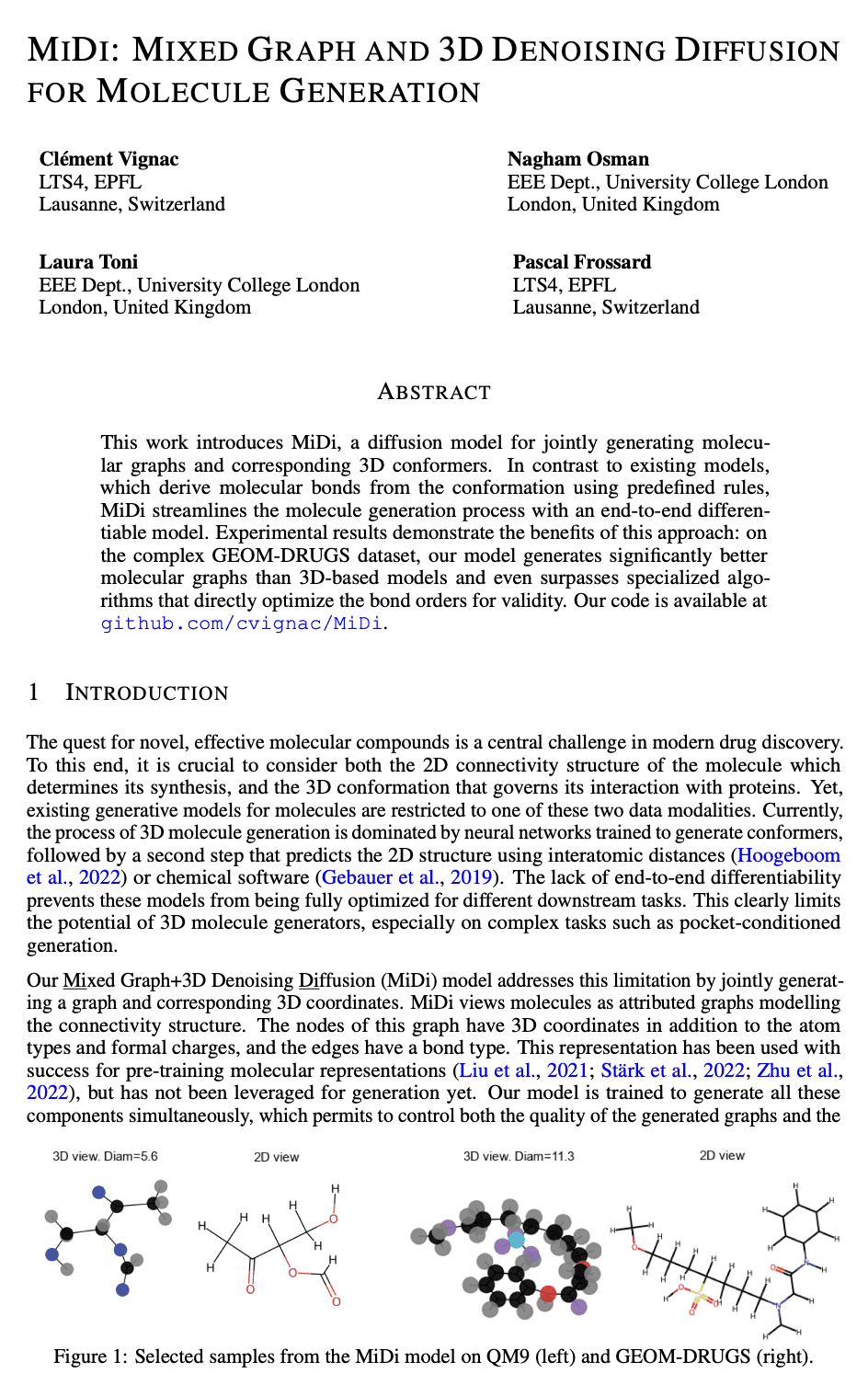

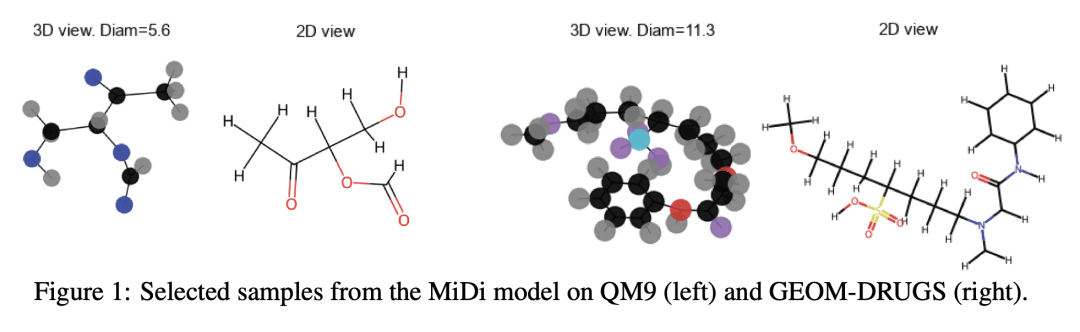

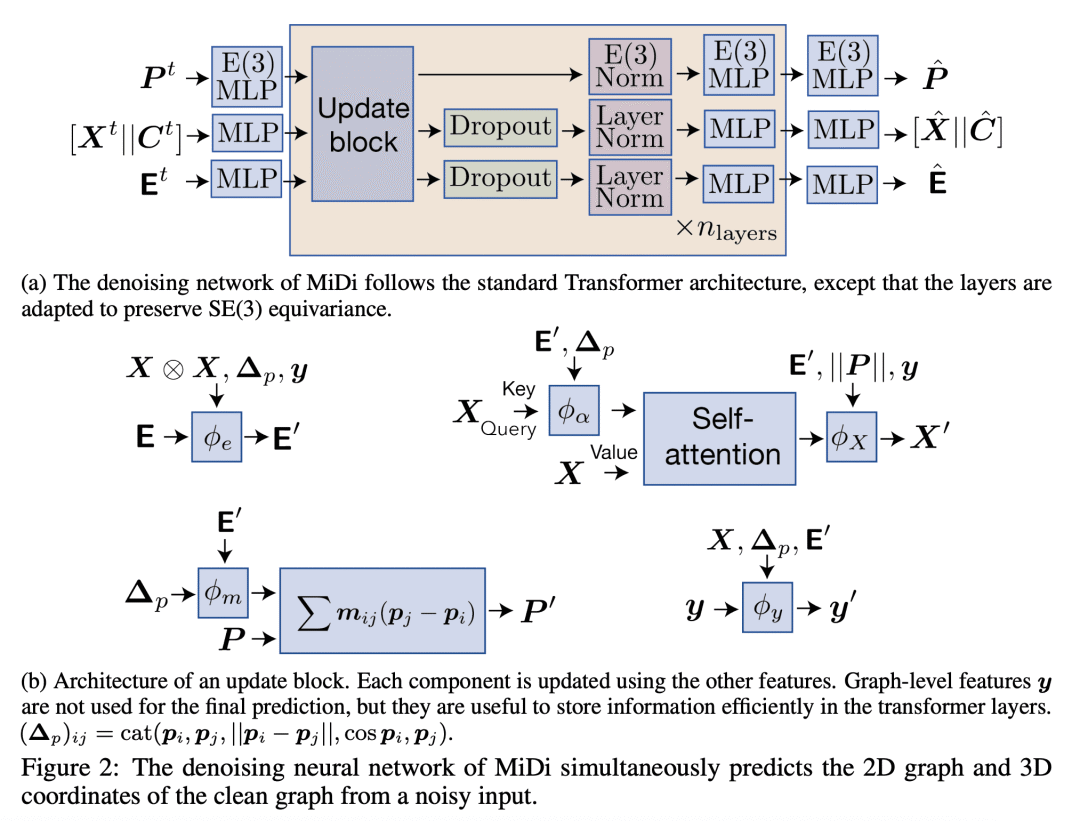

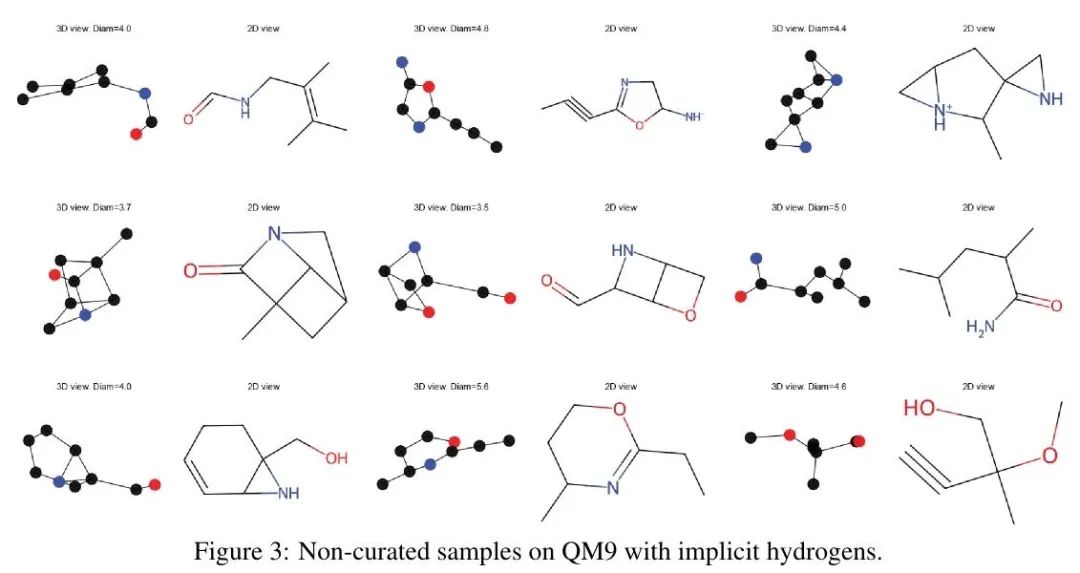

[LG] MiDi: Mixed Graph and 3D Denoising Diffusion for Molecule Generation

C Vignac, N Osman, L Toni, P Frossard

[EPFL & University College London]

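Key points:

- MiDi is a diffusion model that jointly generates molecular graphs and the corresponding 3D conformers;
- On complex datasets, MiDi outperforms existing 3D-based models and specialized algorithms;
- MiDi introduces a new rEGNN layer that uses more expressive features while still guaranteeing SE(3) equivariance;
- MiDi can be used in a variety of drug discovery applications beyond unconditional generation.

One-sentence summary:

MiDi is a diffusion model that generates molecular graphs together with their 3D conformers, outperforming existing 3D-based models and specialized algorithms.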

This work introduces MiDi, a diffusion model for jointly generating molecular graphs and corresponding 3D conformers. In contrast to existing models, which derive molecular bonds from the conformation using predefined rules, MiDi streamlines the molecule generation process with an end-to-end differentiable model. Experimental results demonstrate the benefits of this approach: on the complex GEOM-DRUGS dataset, our model generates significantly better molecular graphs than 3D-based models and even surpasses specialized algorithms that directly optimize the bond orders for validity. Our code is available at this http URL.

https://arxiv.org/abs/2302.09048
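SE(3) equivariance means that rotating and translating the input atoms moves the predicted coordinates in exactly the same way. The sketch below uses a plain EGNN-style coordinate update and checks the property numerically. Messages are built only from invariant quantities (atom features and squared distances) and then applied along relative position vectors. This is the generic EGNN idea, not MiDi's rEGNN layer, and the random weights are placeholders.

```python
import numpy as np

def egnn_coord_update(pos, h, w):
    """pos: (n, 3) coordinates, h: (n, f) atom features, w: (2f + 1,) edge weights."""
    n, f = h.shape
    diff = pos[:, None, :] - pos[None, :, :]                   # (n, n, 3) relative positions
    dist2 = (diff**2).sum(axis=-1, keepdims=True)              # (n, n, 1) rotation invariant
    edge_in = np.concatenate(
        [np.broadcast_to(h[:, None, :], (n, n, f)),
         np.broadcast_to(h[None, :, :], (n, n, f)),
         dist2],
        axis=-1,
    )                                                           # (n, n, 2f + 1)
    phi = np.tanh(edge_in @ w)[..., None]                       # (n, n, 1) invariant edge weight
    return pos + (diff * phi).sum(axis=1) / (n - 1)            # equivariant coordinate update

def random_rotation(rng):
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q * np.sign(np.diag(r))       # make the factorization unique
    if np.linalg.det(q) < 0:          # keep a proper rotation (det = +1)
        q[:, 0] = -q[:, 0]
    return q

rng = np.random.default_rng(0)
pos, h, w = rng.standard_normal((5, 3)), rng.standard_normal((5, 4)), rng.standard_normal(9)
R, t = random_rotation(rng), rng.standard_normal(3)
lhs = egnn_coord_update(pos @ R.T + t, h, w)   # transform, then update
rhs = egnn_coord_update(pos, h, w) @ R.T + t   # update, then transform
print(np.allclose(lhs, rhs))  # True
```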

[LG] Post-Episodic Reinforcement Learning Inference

V Syrgkanis, R Zhan

[Stanford University & Hong Kong University of Science and Technology]

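Key points:

- The paper proposes a method for estimating structural parameters, such as dynamic treatment effects, from data collected by episodic reinforcement learning algorithms;
- The proposed re-weighted Z-estimator stabilizes the episode-varying estimation variance caused by the nonstationary policies these algorithms invoke;
- It identifies proper weighting schemes that restore the consistency and asymptotic normality of the estimators for the target parameters, enabling hypothesis testing and the construction of reliable confidence regions.

One-sentence summary:

The paper proposes a re-weighted Z-estimation approach for inference on adaptive data collected by episodic reinforcement learning algorithms. This makes it possible to evaluate counterfactual adaptive policies and estimate dynamic treatment effects.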

We consider estimation and inference with data collected from episodic reinforcement learning (RL) algorithms; i.e. adaptive experimentation algorithms that at each period (aka episode) interact multiple times in a sequential manner with a single treated unit. Our goal is to be able to evaluate counterfactual adaptive policies after data collection and to estimate structural parameters such as dynamic treatment effects, which can be used for credit assignment (e.g. what was the effect of the first period action on the final outcome). Such parameters of interest can be framed as solutions to moment equations, but not minimizers of a population loss function, leading to Z-estimation approaches in the case of static data. However, such estimators fail to be asymptotically normal in the case of adaptive data collection. We propose a re-weighted Z-estimation approach with carefully designed adaptive weights to stabilize the episode-varying estimation variance, which results from the nonstationary policy that typical episodic RL algorithms invoke. We identify proper weighting schemes to restore the consistency and asymptotic normality of the re-weighted Z-estimators for target parameters, which allows for hypothesis testing and constructing reliable confidence regions for target parameters of interest. Primary applications include dynamic treatment effect estimation and dynamic off-policy evaluation.

https://arxiv.org/abs/2302.08854
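A Z-estimator defines θ̂ as the root of an empirical moment equation. The re-weighted version solves Σ_t w_t ψ(Z_t; θ) = 0 with data-dependent weights w_t. The sketch below uses the linear moment ψ = x_t (y_t - x_tᵀθ), which has a closed-form root. The inverse-standard-deviation weights and the simulated heteroskedastic data are hypothetical stand-ins. The paper's adaptive weighting scheme, which is what restores asymptotic normality, is not reproduced.

```python
import numpy as np

def reweighted_linear_z_estimator(X, y, weights):
    """Solve sum_t w_t * x_t * (y_t - x_t @ theta) = 0 for theta.

    X: (T, p) regressors, y: (T,) outcomes, weights: (T,) positive weights.
    """
    X, y, w = np.asarray(X, float), np.asarray(y, float), np.asarray(weights, float)
    lhs = X.T @ (w[:, None] * X)   # sum_t w_t x_t x_t^T
    rhs = X.T @ (w * y)            # sum_t w_t x_t y_t
    return np.linalg.solve(lhs, rhs)

rng = np.random.default_rng(0)
T, theta_true = 2000, np.array([1.0, -0.5])
X = np.column_stack([np.ones(T), rng.standard_normal(T)])
noise_sd = np.where(np.arange(T) < T // 2, 2.0, 0.5)  # toy: noise level changes across episodes
y = X @ theta_true + noise_sd * rng.standard_normal(T)
theta_hat = reweighted_linear_z_estimator(X, y, weights=1.0 / noise_sd)  # hypothetical weights
print(theta_hat)
```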
