1. [LG] Fast, Differentiable and Sparse Top-k: a Convex Analysis Perspective

2. [CL] Efficient Domain Adaptation for Speech Foundation Models

3. [LG] Effective Robustness against Natural Distribution Shifts for Models with Different Training Data

4. [LG] PyGlove: Efficiently Exchanging ML Ideas as Code

5. [CV] TEXTure: Text-Guided Texturing of 3D Shapes

[LG] The unreasonable effectiveness of few-shot learning for machine translation

[LG] Describe, Explain, Plan and Select: Interactive Planning with Large Language Models Enables Open-World Multi-Task Agents

[LG] Conditioning Predictive Models: Risks and Strategies

[LG] Adapting Neural Link Predictors for Complex Query Answering

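Summary: fast, differentiable and sparse top-k operators; efficient domain adaptation for speech foundation models; effective robustness against natural distribution shifts for models trained on different data; exchanging ML ideas efficiently as code; text-guided texturing of 3D shapes; the unreasonable effectiveness of few-shot learning for machine translation; interactive planning with large language models for open-world multi-task agents; risks and strategies for conditioning predictive models; adapting neural link predictors for complex query answering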

1. [LG] Fast, Differentiable and Sparse Top-k: a Convex Analysis Perspective

M E. Sander, J Puigcerver, J Djolonga, G Peyré, M Blondel

[Google Research & École Normale Supérieure]

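Key points:

- Proposes a general framework for obtaining fast, differentiable and sparse top-k and top-k mask operators, including operators that select values by magnitude;
- Introduces a nonlinearity (ϕ) that lets the framework express new operators;
- Proposes a p-norm regularization term that yields a relaxed top-k operator, giving the first sparse top-k operator that is differentiable everywhere;
- Proposes a GPU/TPU-friendly Dykstra algorithm for solving isotonic optimization, as an alternative to PAV.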
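For reference, the hard (non-relaxed) operator that the paper smooths can be sketched in a few lines. The optional `phi` argument mirrors the idea of selecting by a transformed value, for example `phi=abs` to select by magnitude. This is a plain baseline under that assumption, not the paper's relaxed operator. Because the output is piecewise constant in `x`, its gradient is zero almost everywhere and undefined at ties, which is what motivates the relaxation.

```python
from typing import Callable, List

def topk_mask(x: List[float], k: int,
              phi: Callable[[float], float] = lambda v: v) -> List[float]:
    """Hard top-k mask: 1.0 at the k entries with the largest phi(x_i), 0.0 elsewhere.

    Ties are broken by position (earlier index wins) because sorted() is stable,
    even with reverse=True.
    """
    if not 0 <= k <= len(x):
        raise ValueError("k must satisfy 0 <= k <= len(x)")
    order = sorted(range(len(x)), key=lambda i: phi(x[i]), reverse=True)
    chosen = set(order[:k])
    return [1.0 if i in chosen else 0.0 for i in range(len(x))]

def topk(x: List[float], k: int,
         phi: Callable[[float], float] = lambda v: v) -> List[float]:
    """k-sparse vector: original values at the selected positions, 0.0 elsewhere."""
    mask = topk_mask(x, k, phi)
    return [xi if m else 0.0 for xi, m in zip(x, mask)]

x = [0.5, -3.0, 2.0, 1.0]
print(topk(x, 2))           # [0.0, 0.0, 2.0, 1.0]
print(topk(x, 2, phi=abs))  # [0.0, -3.0, 2.0, 0.0]
```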

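One-sentence summary:

Proposes a framework for fast, differentiable and sparse top-k operators, built on a p-norm regularization term and a GPU/TPU-friendly Dykstra algorithm, that improves accuracy in applications such as weight pruning and top-k losses in vision Transformers.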

Abstract:

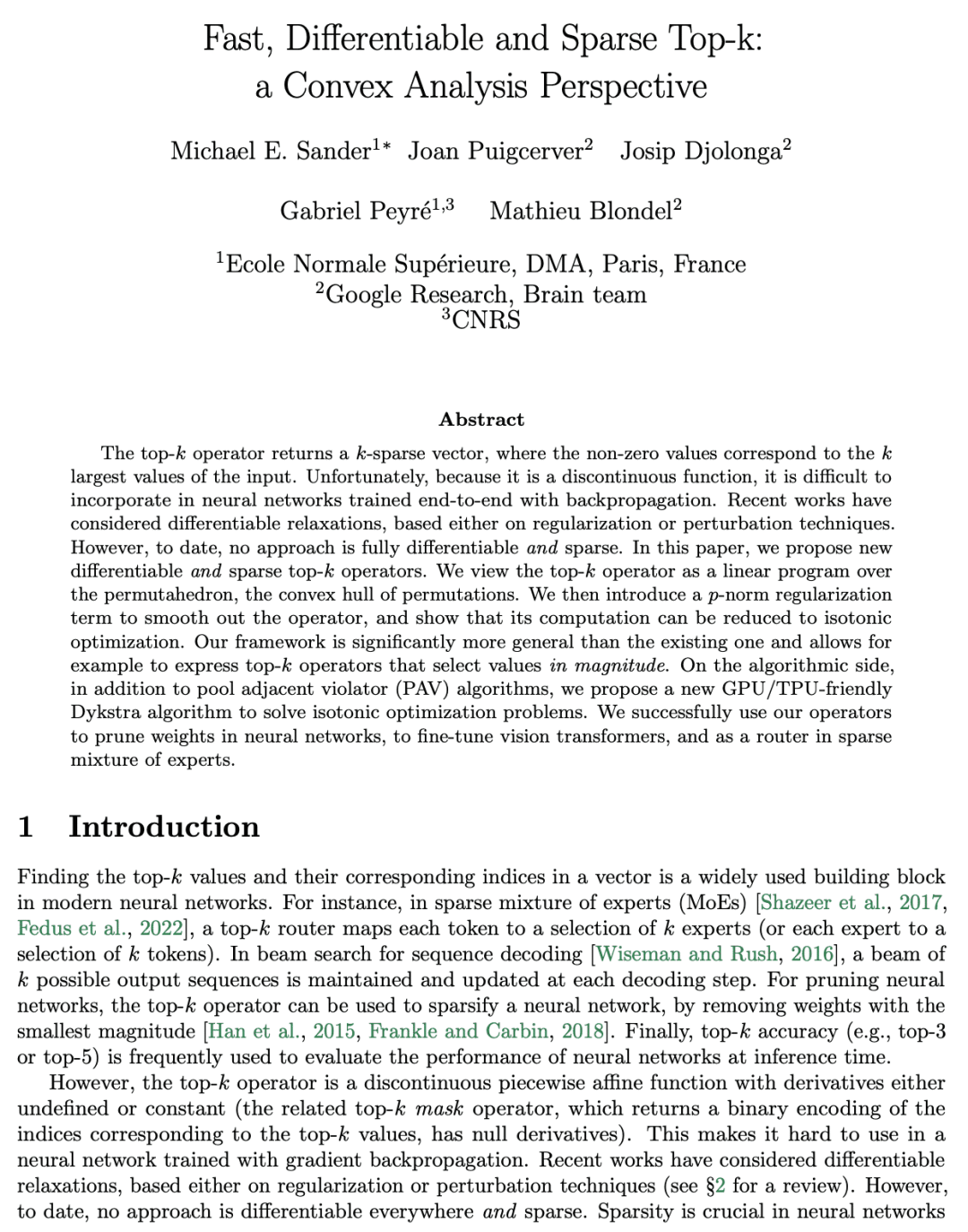

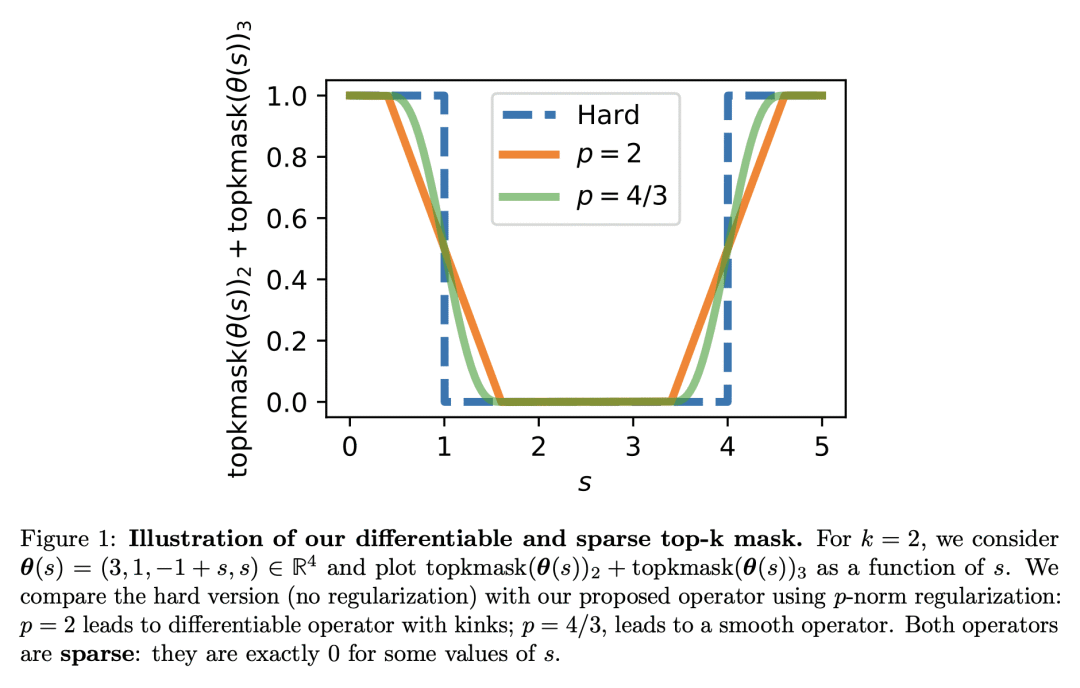

The top-k operator returns a k-sparse vector, where the non-zero values correspond to the k largest values of the input. Unfortunately, because it is a discontinuous function, it is difficult to incorporate in neural networks trained end-to-end with backpropagation. Recent works have considered differentiable relaxations, based either on regularization or perturbation techniques. However, to date, no approach is fully differentiable and sparse. In this paper, we propose new differentiable and sparse top-k operators. We view the top-k operator as a linear program over the permutahedron, the convex hull of permutations. We then introduce a p-norm regularization term to smooth out the operator, and show that its computation can be reduced to isotonic optimization. Our framework is significantly more general than the existing one and allows for example to express top-k operators that select values in magnitude. On the algorithmic side, in addition to pool adjacent violator (PAV) algorithms, we propose a new GPU/TPU-friendly Dykstra algorithm to solve isotonic optimization problems. We successfully use our operators to prune weights in neural networks, to fine-tune vision transformers, and as a router in sparse mixture of experts.
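The abstract reduces the regularized operator to isotonic optimization and mentions pool adjacent violators (PAV) as one solver. The sketch below shows textbook weighted least-squares PAV under a non-increasing constraint. It assumes only the generic isotonic problem: the exact subproblem in the paper depends on p and on how the permutahedron is set up, and the paper's own Dykstra solver is not shown. Each pooling step depends on the previous merge, so PAV is inherently sequential. That is presumably why a parallel-friendly alternative is attractive on GPU/TPU.

```python
from typing import List, Optional, Sequence

def pav_decreasing(y: Sequence[float],
                   w: Optional[Sequence[float]] = None) -> List[float]:
    """Weighted least-squares isotonic regression with a non-increasing constraint.

    Solves  min_v  sum_i w_i * (v_i - y_i)**2  s.t.  v_1 >= v_2 >= ... >= v_n
    with the pool adjacent violators (PAV) algorithm, in amortized O(n) time.
    """
    if w is None:
        w = [1.0] * len(y)
    blocks: List[List[float]] = []  # each block: [mean, total_weight, length]
    for yi, wi in zip(y, w):
        blocks.append([float(yi), float(wi), 1])
        # Pool while the two most recent blocks violate the ordering.
        while len(blocks) > 1 and blocks[-2][0] < blocks[-1][0]:
            m2, w2, n2 = blocks.pop()
            m1, w1, n1 = blocks.pop()
            wt = w1 + w2
            blocks.append([(w1 * m1 + w2 * m2) / wt, wt, n1 + n2])
    out: List[float] = []
    for mean, _, length in blocks:
        out.extend([mean] * int(length))
    return out

print(pav_decreasing([3.0, 1.0, 2.0]))  # [3.0, 1.5, 1.5]
```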

https://arxiv.org/abs/2302.01425

2. [CL] Efficient Domain Adaptation for Speech Foundation Models

B Li, D Hwang, Z Huo, J Bai, G Prakash, T N. Sainath, K C Sim, Y Zhang, W Han, T Strohman, F Beaufays

[Google LLC]

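Key points:

- Proposes keeping the pretrained foundation model (FM) encoder frozen during finetuning and updating only low-cost adapters for the target domain;
- Shows that selecting appropriate data from the source domain improves target-domain performance;
- Shows improvements from using audio-only or text-only data in the target domain.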

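One-sentence summary:

Presents a pioneering study that builds an efficient solution for FM-based speech recognition by jointly finetuning on source-domain and unsupervised target-domain data, making the system both data-efficient and parameter-efficient.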

Abstract:

Foundation models (FMs), that are trained on broad data at scale and are adaptable to a wide range of downstream tasks, have brought large interest in the research community. Benefiting from the diverse data sources such as different modalities, languages and application domains, foundation models have demonstrated strong generalization and knowledge transfer capabilities. In this paper, we present a pioneering study towards building an efficient solution for FM-based speech recognition systems. We adopt the recently developed self-supervised BEST-RQ for pretraining, and propose the joint finetuning with both source and unsupervised target domain data using JUST Hydra. The FM encoder adapter and decoder are then finetuned to the target domain with a small amount of supervised in-domain data. On a large-scale YouTube and Voice Search task, our method is shown to be both data and model parameter efficient. It achieves the same quality with only 21.6M supervised in-domain data and 130.8M finetuned parameters, compared to the 731.1M model trained from scratch on additional 300M supervised in-domain data.
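The key points describe freezing the FM encoder and training only low-cost adapters. The adapter architecture is not specified here, so the sketch below assumes a common residual bottleneck form: down-projection, ReLU, up-projection and a skip connection. The class and function names are hypothetical. The up-projection is zero-initialised, so the adapter starts as an identity map and the frozen model's outputs are unchanged before finetuning. Its trainable parameter count is 2 · dim · bottleneck, which is small next to a dim × dim encoder layer when bottleneck ≪ dim.

```python
import random
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]

def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]

class ResidualAdapter:
    """Hypothetical bottleneck adapter: x + W_up @ relu(W_down @ x)."""

    def __init__(self, dim: int, bottleneck: int, seed: int = 0):
        rng = random.Random(seed)
        self.w_down = [[rng.gauss(0.0, 0.02) for _ in range(dim)]
                       for _ in range(bottleneck)]
        # Zero-initialised up-projection: the adapter begins as the identity map.
        self.w_up = [[0.0] * bottleneck for _ in range(dim)]

    def __call__(self, x: Vector) -> Vector:
        h = [max(0.0, v) for v in matvec(self.w_down, x)]
        return [xi + di for xi, di in zip(x, matvec(self.w_up, h))]

    def num_params(self) -> int:
        # Only these parameters would be trained; the encoder stays frozen.
        return sum(map(len, self.w_down)) + sum(map(len, self.w_up))

def encoder_layer_with_adapter(x: Vector, frozen_layer: Callable[[Vector], Vector],
                               adapter: ResidualAdapter) -> Vector:
    """Apply a frozen encoder layer, then the trainable adapter."""
    return adapter(frozen_layer(x))

adapter = ResidualAdapter(dim=8, bottleneck=2)
print(adapter.num_params())  # 32
```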

https://arxiv.org/abs/2302.01496

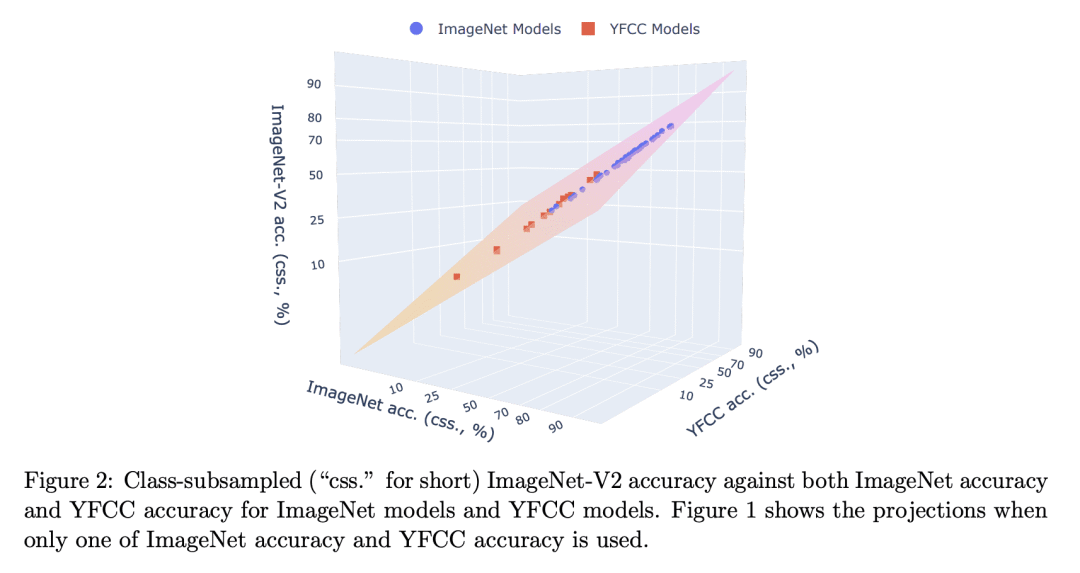

3. [LG] Effective Robustness against Natural Distribution Shifts for Models with Different Training Data

Z Shi, N Carlini…

[University of California, Los Angeles & Google Research & University of Washington]

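Key points:

- Shows that existing effective-robustness evaluations are limited when comparing models trained on different data distributions;
- Proposes a new effective-robustness metric that uses multiple in-distribution (ID) test sets to estimate a model's effective robustness more precisely;
- Multiple ID test sets predict the out-of-distribution (OOD) accuracy of diverse models better than a single ID test set.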

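One-sentence summary:

Proposes a new effective-robustness evaluation metric that uses multiple in-distribution (ID) test sets to estimate model robustness more precisely than existing evaluations, which rely on a single ID test set.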

Abstract:

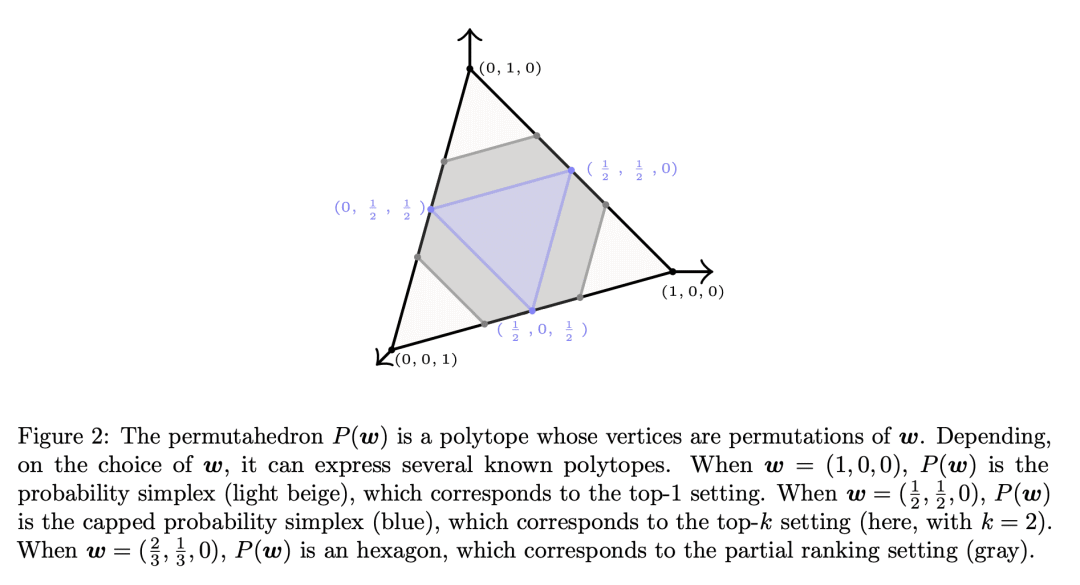

“Effective robustness” measures the extra out-of-distribution (OOD) robustness beyond what can be predicted from the in-distribution (ID) performance. Existing effective robustness evaluations typically use a single test set such as ImageNet to evaluate ID accuracy. This becomes problematic when evaluating models trained on different data distributions, e.g., comparing models trained on ImageNet vs. zero-shot language-image pre-trained models trained on LAION. In this paper, we propose a new effective robustness evaluation metric to compare the effective robustness of models trained on different data distributions. To do this we control for the accuracy on multiple ID test sets that cover the training distributions for all the evaluated models. Our new evaluation metric provides a better estimate of the effective robustness and explains the surprising effective robustness gains of zero-shot CLIP-like models exhibited when considering only one ID dataset, while the gains diminish under our evaluation.
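A common way to "control for" ID accuracy is to fit a linear trend between logit-scaled ID and OOD accuracies on baseline models. A model's effective robustness is then its OOD accuracy minus the trend's prediction. The sketch below assumes that the multi-ID-set version is a multivariate least-squares fit in logit space, one feature per ID test set. It is an illustrative assumption, not the paper's exact procedure, and `fit_baseline` and `effective_robustness` are hypothetical names. Only the standard library is used, so the small normal-equation system is solved by hand.

```python
import math
from typing import List, Sequence

def logit(p: float) -> float:
    return math.log(p / (1.0 - p))

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Gauss-Jordan elimination with partial pivoting for a small square system."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def fit_baseline(id_accs: Sequence[Sequence[float]],
                 ood_accs: Sequence[float]) -> List[float]:
    """Least-squares fit of logit(OOD) = c0 + sum_j c_j * logit(ID_j) on baseline models."""
    xs = [[1.0] + [logit(a) for a in row] for row in id_accs]
    ys = [logit(a) for a in ood_accs]
    k = len(xs[0])
    xtx = [[sum(x[i] * x[j] for x in xs) for j in range(k)] for i in range(k)]
    xty = [sum(x[i] * y for x, y in zip(xs, ys)) for i in range(k)]
    return solve(xtx, xty)

def effective_robustness(coef: Sequence[float], id_acc: Sequence[float],
                         ood_acc: float) -> float:
    """OOD accuracy above (positive) or below (negative) the fitted baseline trend."""
    z = coef[0] + sum(c * logit(a) for c, a in zip(coef[1:], id_acc))
    return ood_acc - sigmoid(z)
```

With a single ID test set this reduces to the usual one-feature logit fit. Adding a second ID set covering another training distribution (for example one for ImageNet-trained models and one for LAION-trained models) lets the baseline account for where each model was trained.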

https://arxiv.org/abs/2302.01381

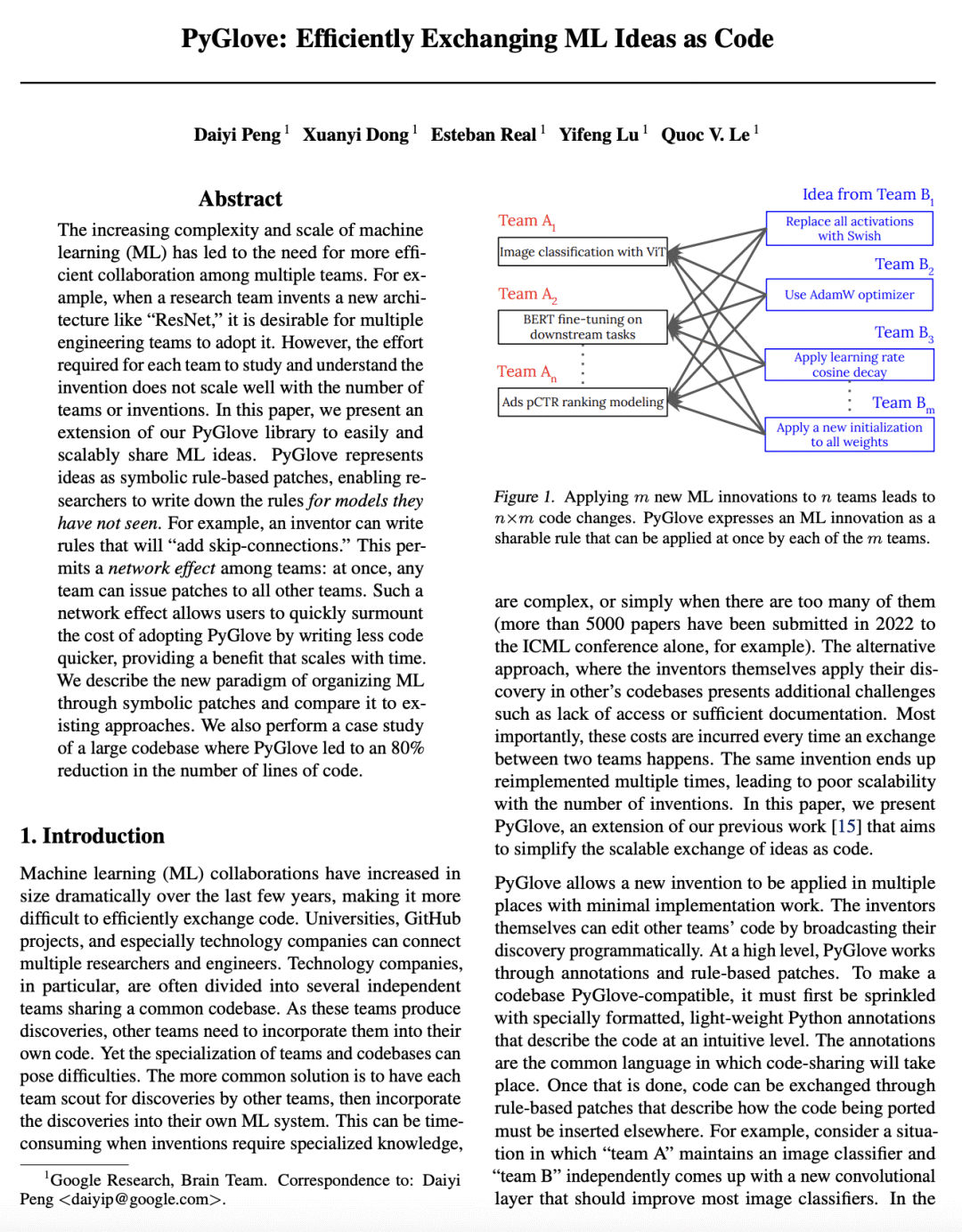

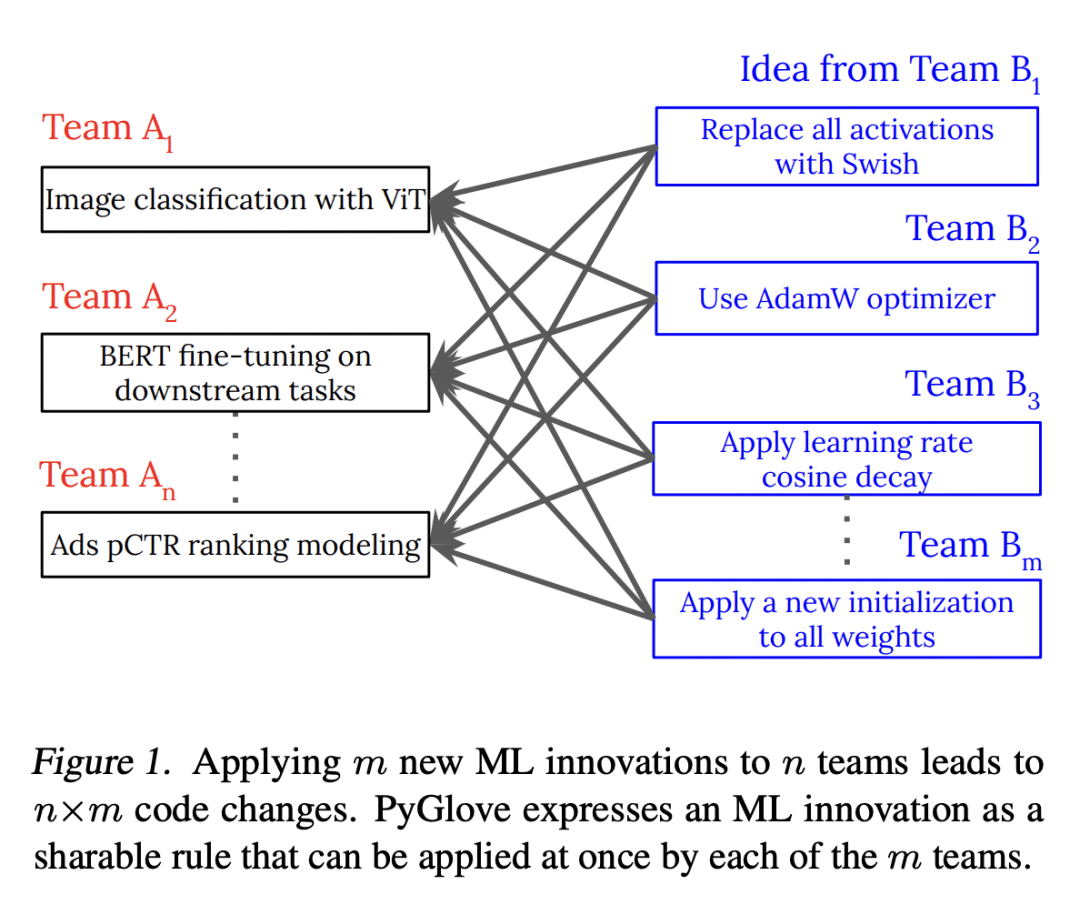

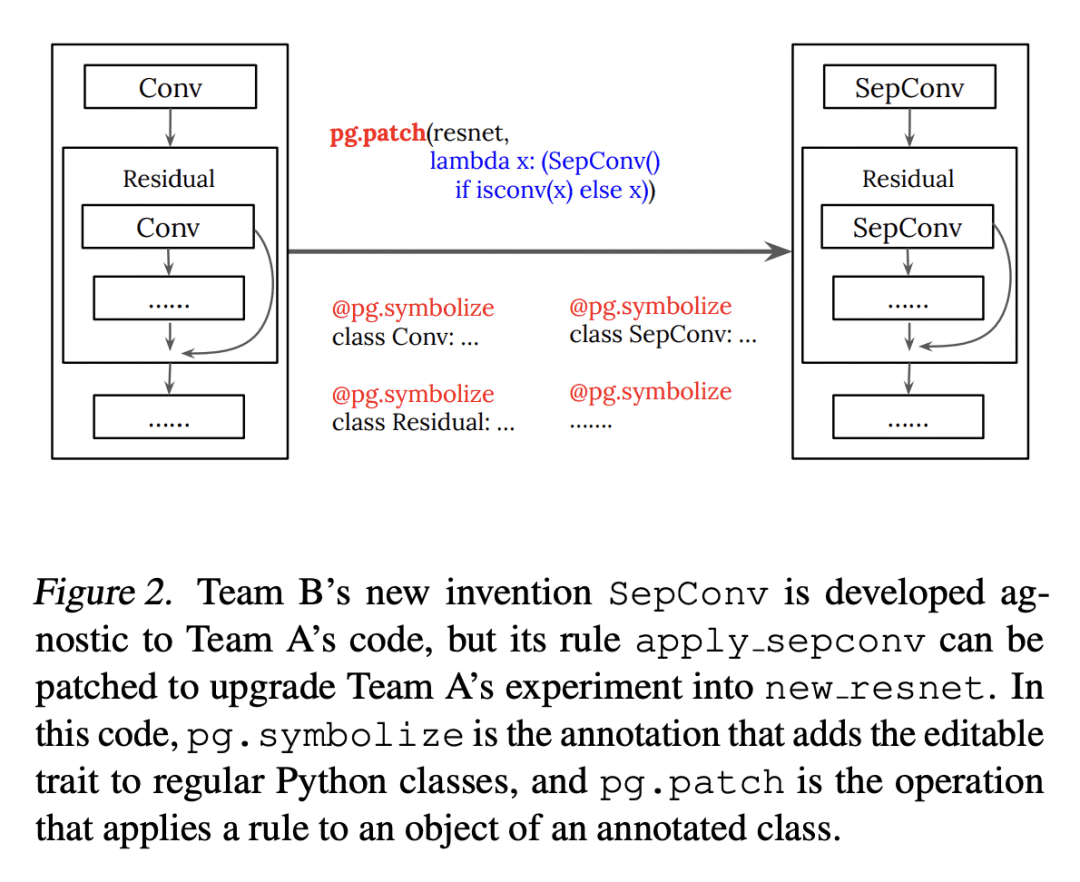

4. [LG] PyGlove: Efficiently Exchanging ML Ideas as Code

D Peng, X Dong, E Real, Y Lu, Q V. Le

[Google Research]

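Key points:

- Introduces the PyGlove library for sharing complex machine learning (ML) ideas as code, efficiently and scalably, through symbolic patches;
- Demonstrates how symbolic programming can be used throughout the ML development process;
- Extends PyGlove's symbolic capabilities beyond AutoML to ML in general, addressing the problem of applying conceptual rules across different ML setups.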

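One-sentence summary:

The PyGlove library lets teams share new ML ideas as code, efficiently and scalably, through symbolic patches, and could change how ML programs are developed and organized.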

Abstract:

The increasing complexity and scale of machine learning (ML) has led to the need for more efficient collaboration among multiple teams. For example, when a research team invents a new architecture like “ResNet,” it is desirable for multiple engineering teams to adopt it. However, the effort required for each team to study and understand the invention does not scale well with the number of teams or inventions. In this paper, we present an extension of our PyGlove library to easily and scalably share ML ideas. PyGlove represents ideas as symbolic rule-based patches, enabling researchers to write down the rules for models they have not seen. For example, an inventor can write rules that will “add skip-connections.” This permits a network effect among teams: at once, any team can issue patches to all other teams. Such a network effect allows users to quickly surmount the cost of adopting PyGlove by writing less code quicker, providing a benefit that scales with time. We describe the new paradigm of organizing ML through symbolic patches and compare it to existing approaches. We also perform a case study of a large codebase where PyGlove led to an 80% reduction in the number of lines of code.
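To illustrate "rules for models they have not seen", here is a plain-Python toy of a rule-based patch over a symbolic model description. It does not use PyGlove's actual API. `Layer`, `Model`, `add_skip_connections` and `apply_patches` are hypothetical names for this sketch. The rule only inspects the symbolic structure, so one team can write it and any other team can apply it to its own models. The patch returns a new model instead of mutating the input, which keeps patches composable.

```python
from dataclasses import dataclass, replace
from typing import Callable, Sequence, Tuple

@dataclass(frozen=True)
class Layer:
    kind: str
    in_dim: int
    out_dim: int
    residual: bool = False

@dataclass(frozen=True)
class Model:
    name: str
    layers: Tuple[Layer, ...]

Patch = Callable[[Model], Model]

def add_skip_connections(model: Model) -> Model:
    """Hypothetical rule: make every shape-preserving dense layer residual."""
    return replace(model, layers=tuple(
        replace(layer, residual=True)
        if layer.kind == "dense" and layer.in_dim == layer.out_dim else layer
        for layer in model.layers))

def apply_patches(model: Model, patches: Sequence[Patch]) -> Model:
    """Apply rule-based patches in order; the input model is never mutated."""
    for patch in patches:
        model = patch(model)
    return model

mlp = Model("team_b_mlp", (Layer("dense", 16, 32), Layer("dense", 32, 32),
                           Layer("dense", 32, 10)))
patched = apply_patches(mlp, [add_skip_connections])
print([layer.residual for layer in patched.layers])  # [False, True, False]
```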

https://arxiv.org/abs/2302.01918

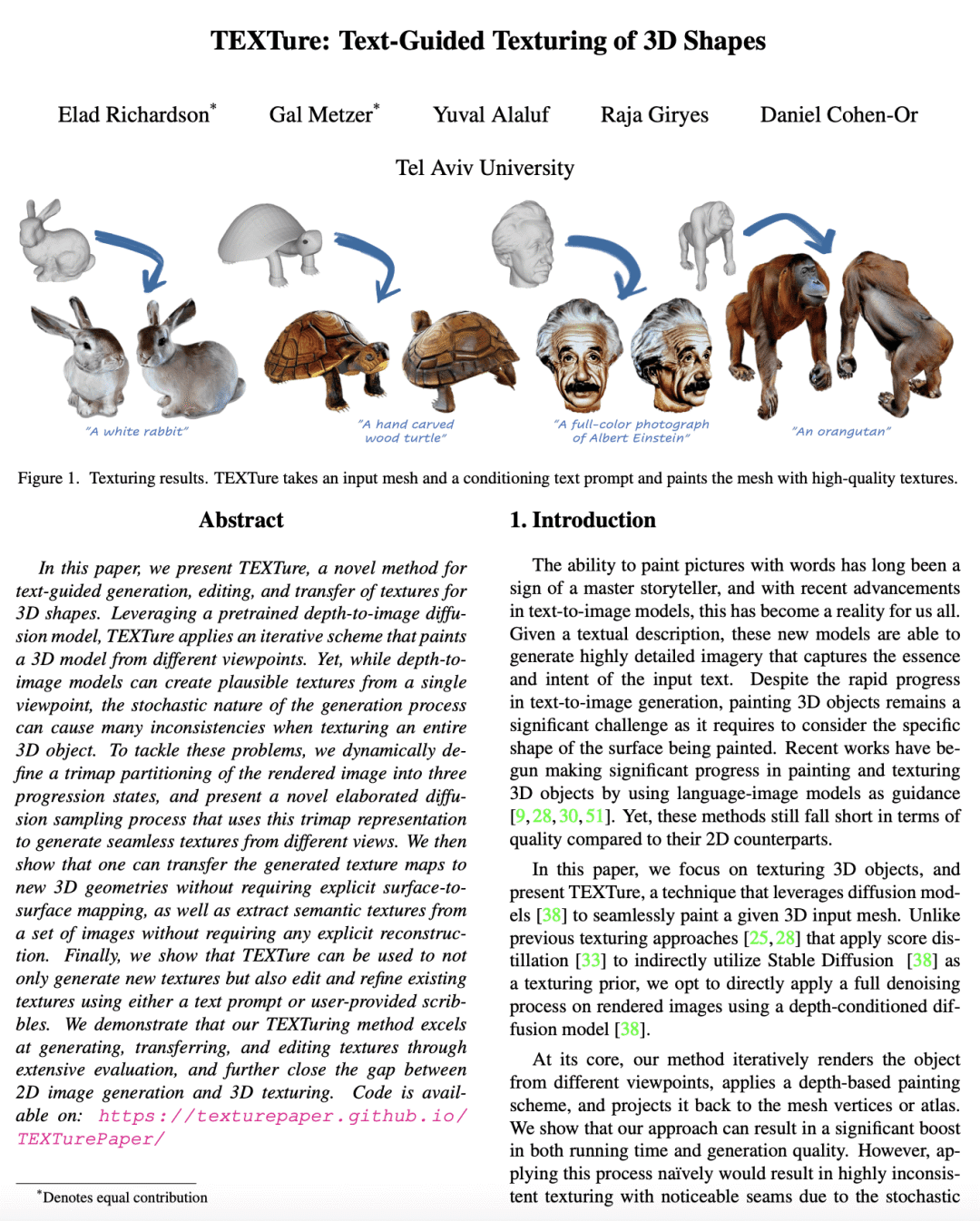

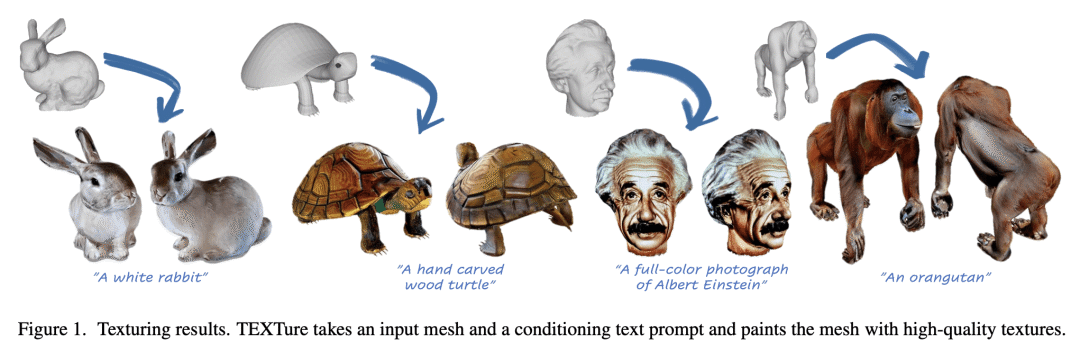

5. [CV] TEXTure: Text-Guided Texturing of 3D Shapes

E Richardson, G Metzer, Y Alaluf, R Giryes, D Cohen-Or

[Tel Aviv University]

要点:

-

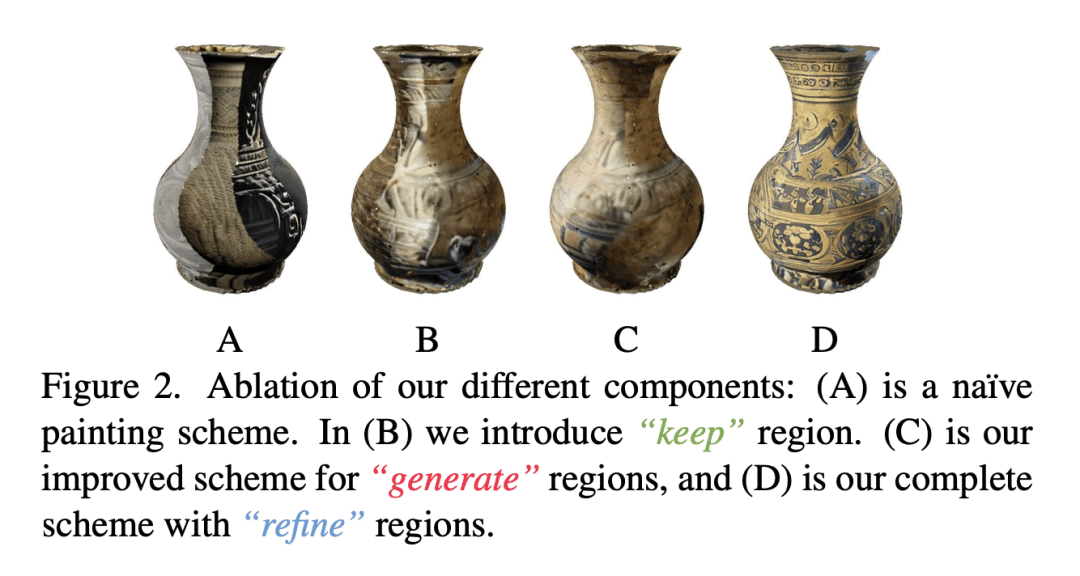

提出TEXTure,一种新方法,用于在文本指导下生成、前沿和编辑 3D 形状纹理; -

用预训练的深度到图像扩散模型,通过迭代绘制方案为3D形状提供纹理; -

提出三分法和扩散采样过程,从不同的视角生成无缝纹理。

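One-sentence summary:

Proposes TEXTure, a new method that uses a depth-to-image diffusion model and a trimap scheme to generate, edit and transfer 3D shape textures under text guidance.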

Abstract:

In this paper, we present TEXTure, a novel method for text-guided generation, editing, and transfer of textures for 3D shapes. Leveraging a pretrained depth-to-image diffusion model, TEXTure applies an iterative scheme that paints a 3D model from different viewpoints. Yet, while depth-to-image models can create plausible textures from a single viewpoint, the stochastic nature of the generation process can cause many inconsistencies when texturing an entire 3D object. To tackle these problems, we dynamically define a trimap partitioning of the rendered image into three progression states, and present a novel elaborated diffusion sampling process that uses this trimap representation to generate seamless textures from different views. We then show that one can transfer the generated texture maps to new 3D geometries without requiring explicit surface-to-surface mapping, as well as extract semantic textures from a set of images without requiring any explicit reconstruction. Finally, we show that TEXTure can be used to not only generate new textures but also edit and refine existing textures using either a text prompt or user-provided scribbles. We demonstrate that our TEXTuring method excels at generating, transferring, and editing textures through extensive evaluation, and further close the gap between 2D image generation and 3D texturing.
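The trimap partitions each rendered view into three progression states. The sketch below assumes the states are "generate" (never painted), "refine" (painted before, but now seen from a clearly better angle) and "keep" (everything else). It also assumes "better angle" is scored by a per-pixel view-facing value such as the cosine between the surface normal and the view direction. The criterion and the `margin` parameter are hypothetical illustrations, not the paper's exact rule.

```python
from enum import Enum
from typing import List, Optional, Sequence

class State(Enum):
    KEEP = "keep"
    REFINE = "refine"
    GENERATE = "generate"

def trimap(prev_cos: Sequence[Optional[float]], cur_cos: Sequence[float],
           margin: float = 0.1) -> List[State]:
    """Classify pixels of the current view into the three progression states.

    prev_cos[i]: best view-facing score at which pixel i's texel was painted,
                 or None if it has never been painted.
    cur_cos[i]:  view-facing score of pixel i in the current view.
    margin:      hypothetical threshold for "clearly better" viewing angle.
    """
    states = []
    for prev, cur in zip(prev_cos, cur_cos):
        if prev is None:
            states.append(State.GENERATE)   # unseen: sample freely
        elif cur > prev + margin:
            states.append(State.REFINE)     # seen, but now viewed more frontally
        else:
            states.append(State.KEEP)       # keep the existing texture
    return states

print([s.value for s in trimap([None, 0.9, 0.3], [0.5, 0.8, 0.9])])
# ['generate', 'keep', 'refine']
```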

https://arxiv.org/abs/2302.01721

A few more papers worth noting:

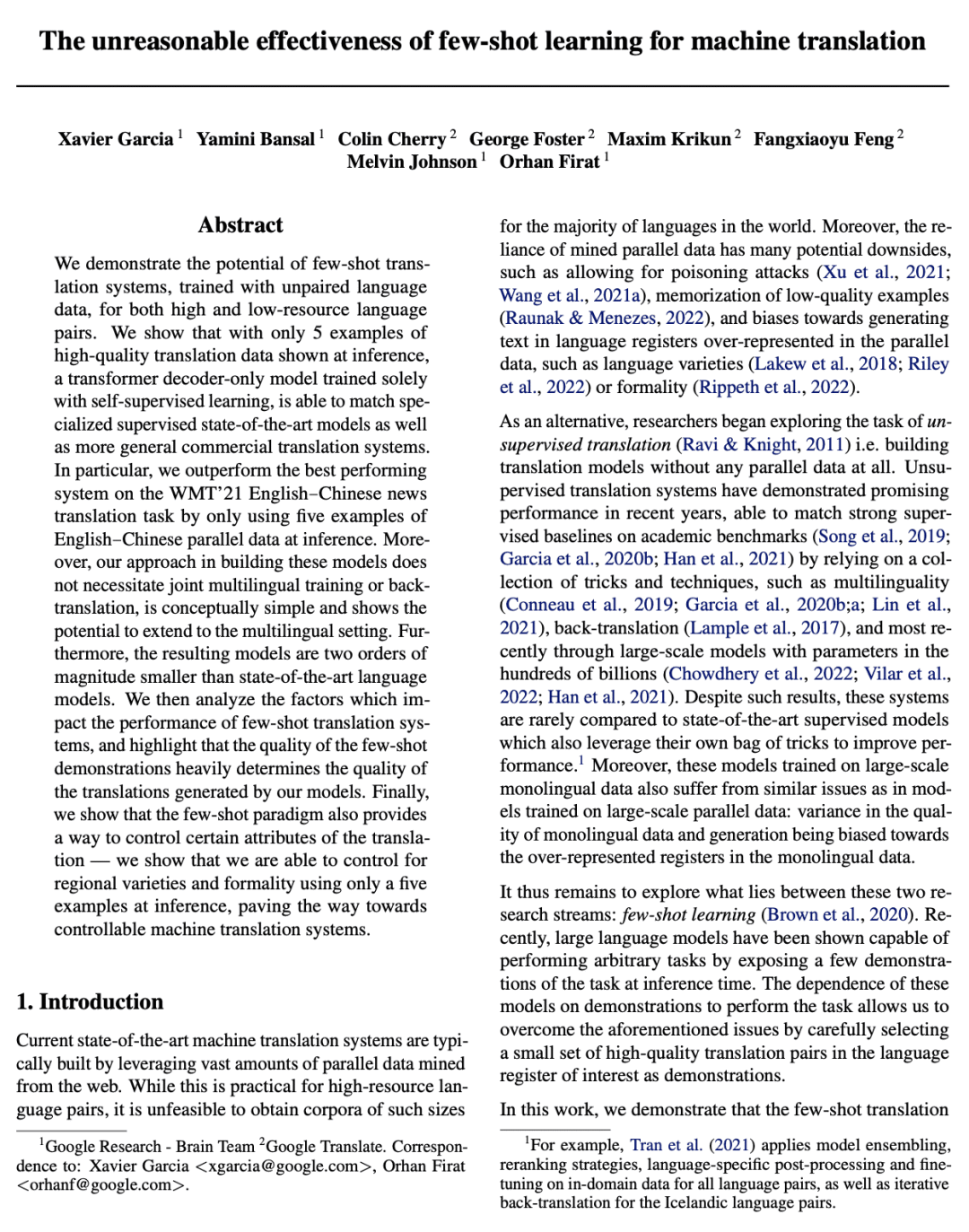

[LG] The unreasonable effectiveness of few-shot learning for machine translation

X Garcia, Y Bansal, C Cherry, G Foster, M Krikun, F Feng, M Johnson, O Firat

[Google Research]

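Key points:

- Few-shot learning can match the performance of specialized supervised models and general commercial translation systems, needing only five high-quality translation examples at inference;
- The approach for building these models is conceptually simple, requires neither joint multilingual training nor back-translation, and could extend to the multilingual setting;
- The resulting models are small (8B parameters) compared with typical large language models (more than 100B parameters).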

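One-sentence summary:

Demonstrates the potential of few-shot machine translation systems trained on unpaired language data. They perform competitively with specialized supervised and commercial systems, are two orders of magnitude smaller, and need neither joint multilingual training nor back-translation.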

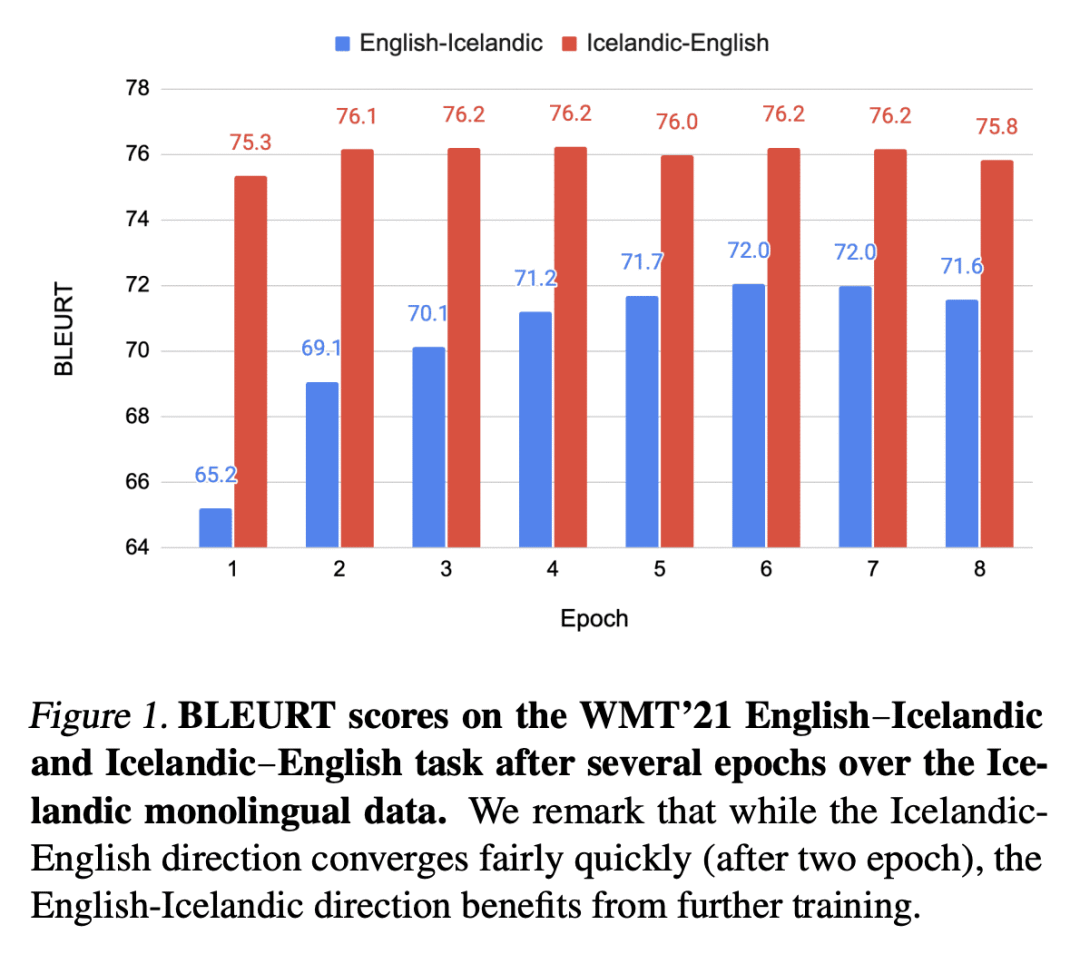

We demonstrate the potential of few-shot translation systems, trained with unpaired language data, for both high and low-resource language pairs. We show that with only 5 examples of high-quality translation data shown at inference, a transformer decoder-only model trained solely with self-supervised learning, is able to match specialized supervised state-of-the-art models as well as more general commercial translation systems. In particular, we outperform the best performing system on the WMT’21 English – Chinese news translation task by only using five examples of English – Chinese parallel data at inference. Moreover, our approach in building these models does not necessitate joint multilingual training or back-translation, is conceptually simple and shows the potential to extend to the multilingual setting. Furthermore, the resulting models are two orders of magnitude smaller than state-of-the-art language models. We then analyze the factors which impact the performance of few-shot translation systems, and highlight that the quality of the few-shot demonstrations heavily determines the quality of the translations generated by our models. Finally, we show that the few-shot paradigm also provides a way to control certain attributes of the translation: we show that we are able to control for regional varieties and formality using only five examples at inference, paving the way towards controllable machine translation systems.
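The mechanism is in-context: a handful of source/target pairs is placed before the sentence to translate, and the decoder-only model continues the text. The sketch below shows one plausible five-shot prompt layout. The "Language: text" template and the function name are assumptions, since the exact prompt format is not given here. Swapping the demonstrations, for example formal versus informal or one regional variety versus another, is how the abstract describes steering attributes of the output.

```python
from typing import Sequence, Tuple

def build_few_shot_prompt(examples: Sequence[Tuple[str, str]], source: str,
                          src_lang: str = "English",
                          tgt_lang: str = "Chinese") -> str:
    """Concatenate demonstrations, then leave the query's target side open.

    The "Language: text" template is a hypothetical format; the model is
    expected to continue the text after the final "<tgt_lang>:".
    """
    parts = [f"{src_lang}: {src}\n{tgt_lang}: {tgt}\n" for src, tgt in examples]
    parts.append(f"{src_lang}: {source}\n{tgt_lang}:")
    return "\n".join(parts)

demos = [("Good morning.", "早上好。")] * 5  # placeholder; real demos should differ
prompt = build_few_shot_prompt(demos, "Thank you very much.")
print(prompt.count("English:"))  # 6
```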

https://arxiv.org/abs/2302.01398

[LG] Describe, Explain, Plan and Select: Interactive Planning with Large Language Models Enables Open-World Multi-Task Agents

Z Wang, S Cai, A Liu, X Ma…

[Peking University & University of California Los Angeles]

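Key points:

- Proposes "Describe, Explain, Plan and Select" (DEPS), an interactive planning approach based on large language models, to address two main challenges in open-world planning: the need for precise multi-step reasoning, and the lack of awareness of how close each sub-goal is to the agent;
- Comprehensive experiments in the challenging Minecraft domain show that DEPS clearly outperforms comparable methods in success rate;
- Reaches the milestone of robustly accomplishing 70+ Minecraft tasks, nearly doubling overall performance;
- Becomes the first planning-based agent to obtain diamonds in Minecraft, achieving a non-zero success rate on the challenging ObtainDiamond task.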

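One-sentence summary:

"Describe, Explain, Plan and Select" (DEPS) is an interactive planning approach based on large language models (LLMs) that addresses two challenges of open-world planning: long-horizon multi-step reasoning, and ordering parallel sub-goals by their proximity to the agent.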

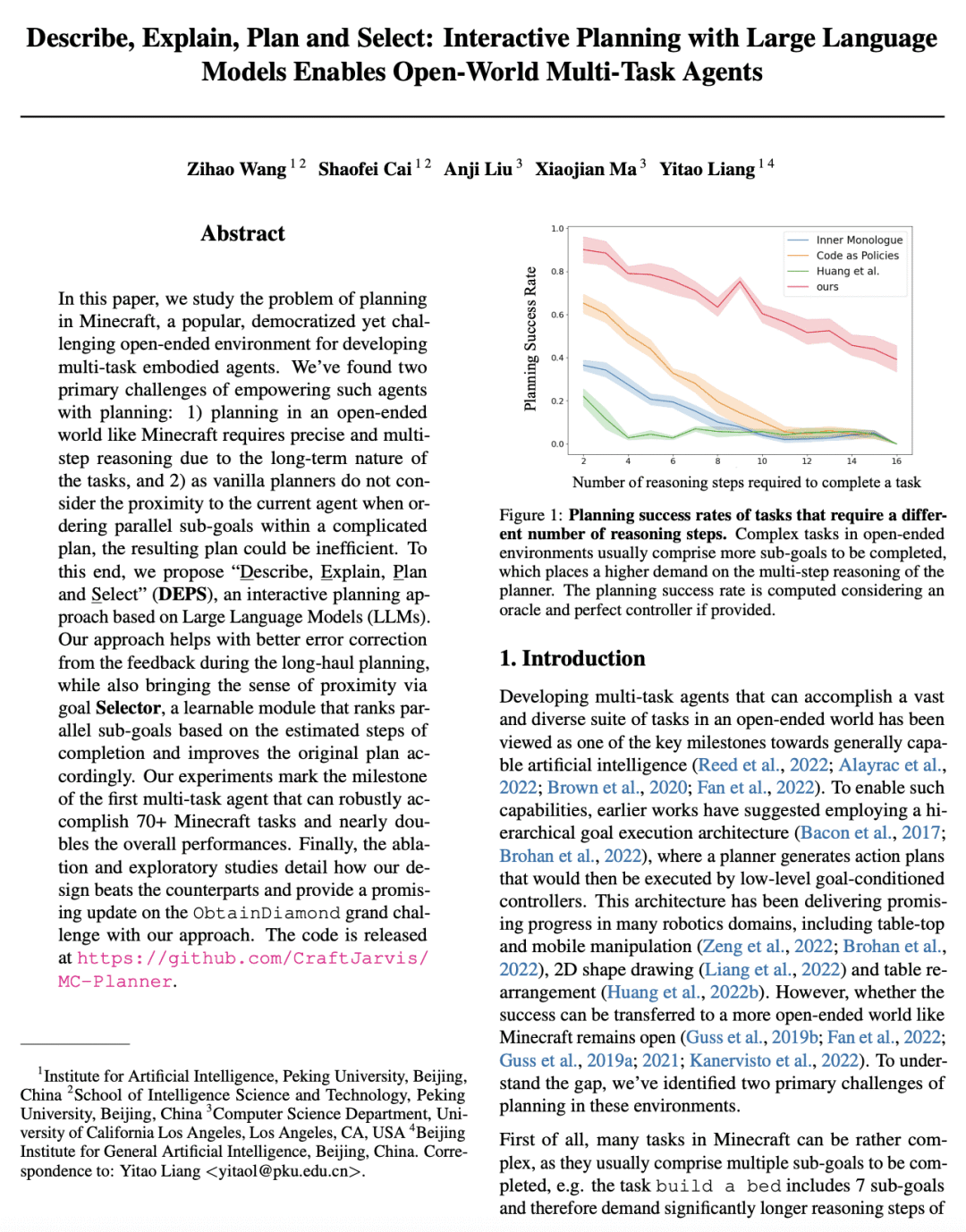

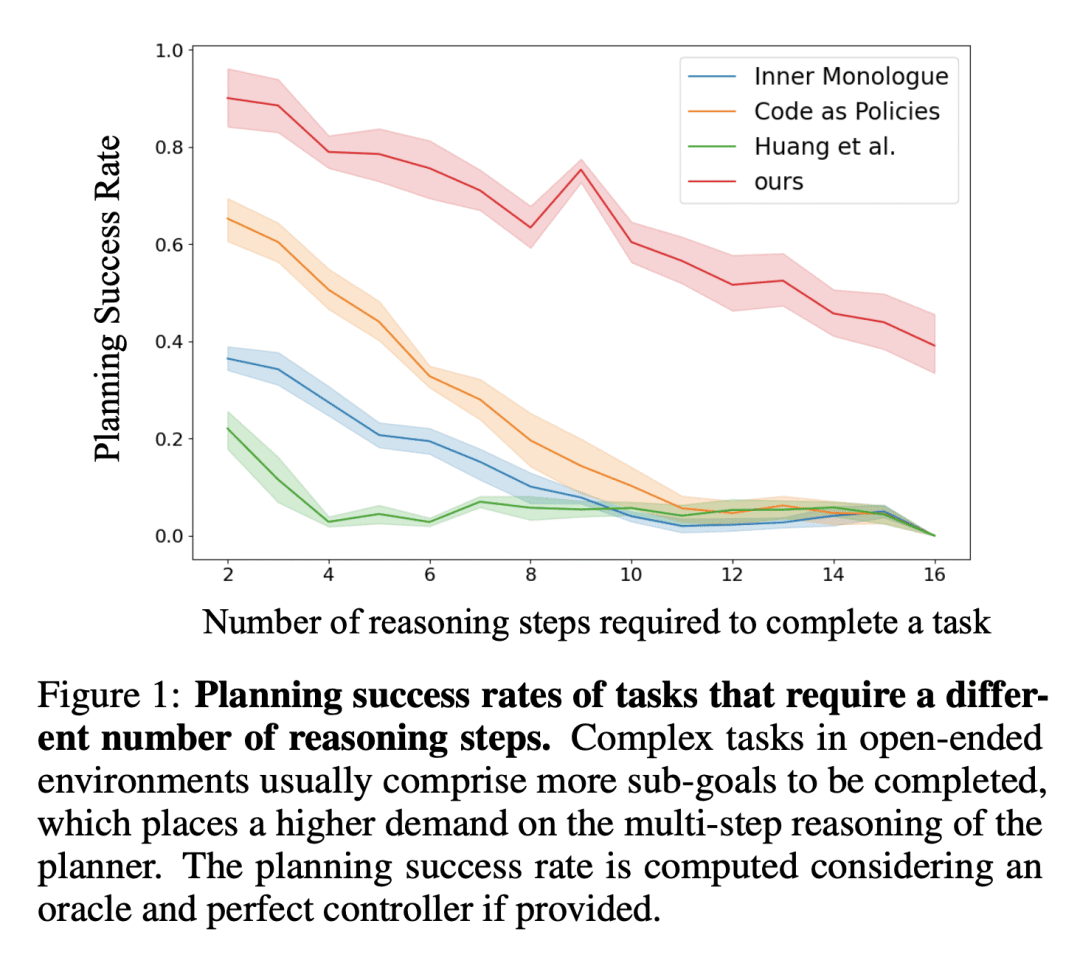

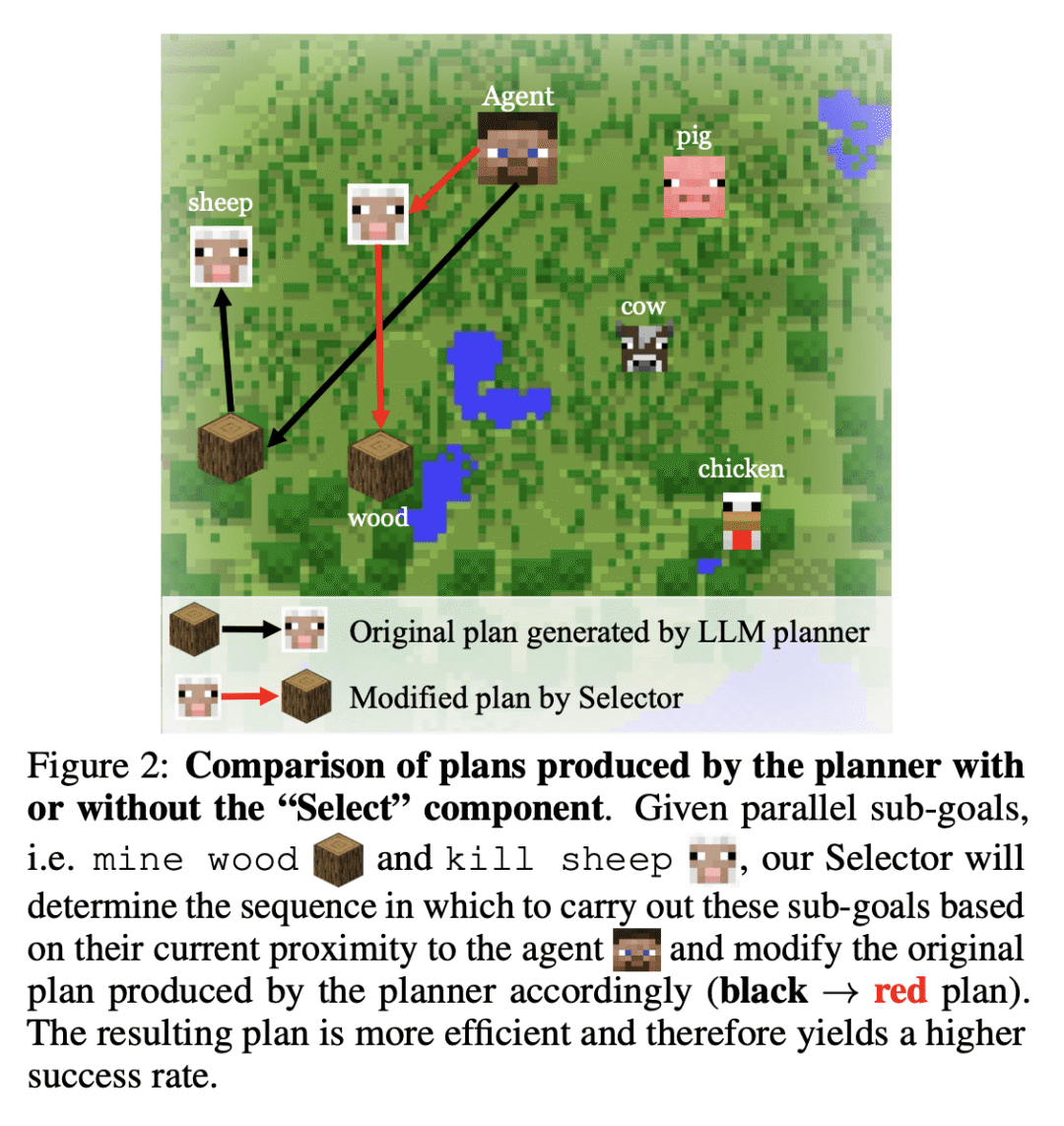

In this paper, we study the problem of planning in Minecraft, a popular, democratized yet challenging open-ended environment for developing multi-task embodied agents. We’ve found two primary challenges of empowering such agents with planning: 1) planning in an open-ended world like Minecraft requires precise and multi-step reasoning due to the long-term nature of the tasks, and 2) as vanilla planners do not consider the proximity to the current agent when ordering parallel sub-goals within a complicated plan, the resulting plan could be inefficient. To this end, we propose “Describe, Explain, Plan and Select” (DEPS), an interactive planning approach based on Large Language Models (LLMs). Our approach helps with better error correction from the feedback during the long-haul planning, while also bringing the sense of proximity via goal Selector, a learnable module that ranks parallel sub-goals based on the estimated steps of completion and improves the original plan accordingly. Our experiments mark the milestone of the first multi-task agent that can robustly accomplish 70+ Minecraft tasks and nearly doubles the overall performances. Finally, the ablation and exploratory studies detail how our design beats the counterparts and provide a promising update on the ObtainDiamond grand challenge with our approach.
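The abstract describes the goal Selector as ranking parallel sub-goals by their estimated steps to completion. The sketch below replaces the learned selector with a hypothetical stand-in: Manhattan distance on a 2D grid. It greedily picks the nearest remaining sub-goal and re-estimates from the agent's new position after each one. Grid positions, goal names and both functions are illustrative assumptions, not DEPS's model.

```python
from typing import Dict, List, Tuple

Pos = Tuple[int, int]

def estimated_steps(agent: Pos, target: Pos) -> int:
    """Stand-in for the learned selector: Manhattan distance as a step estimate."""
    return abs(agent[0] - target[0]) + abs(agent[1] - target[1])

def order_parallel_goals(agent: Pos, goals: Dict[str, Pos]) -> List[str]:
    """Greedily order sub-goals that may be done in any order, nearest first.

    After each sub-goal the agent is assumed to stand at that goal's location,
    so the remaining estimates are recomputed from there. Ties break by name.
    """
    remaining = dict(goals)
    order: List[str] = []
    while remaining:
        nxt = min(remaining, key=lambda g: (estimated_steps(agent, remaining[g]), g))
        order.append(nxt)
        agent = remaining.pop(nxt)
    return order

print(order_parallel_goals((0, 0), {"log": (5, 0), "stone": (1, 1), "sand": (6, 1)}))
# ['stone', 'log', 'sand']
```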

https://arxiv.org/abs/2302.01560

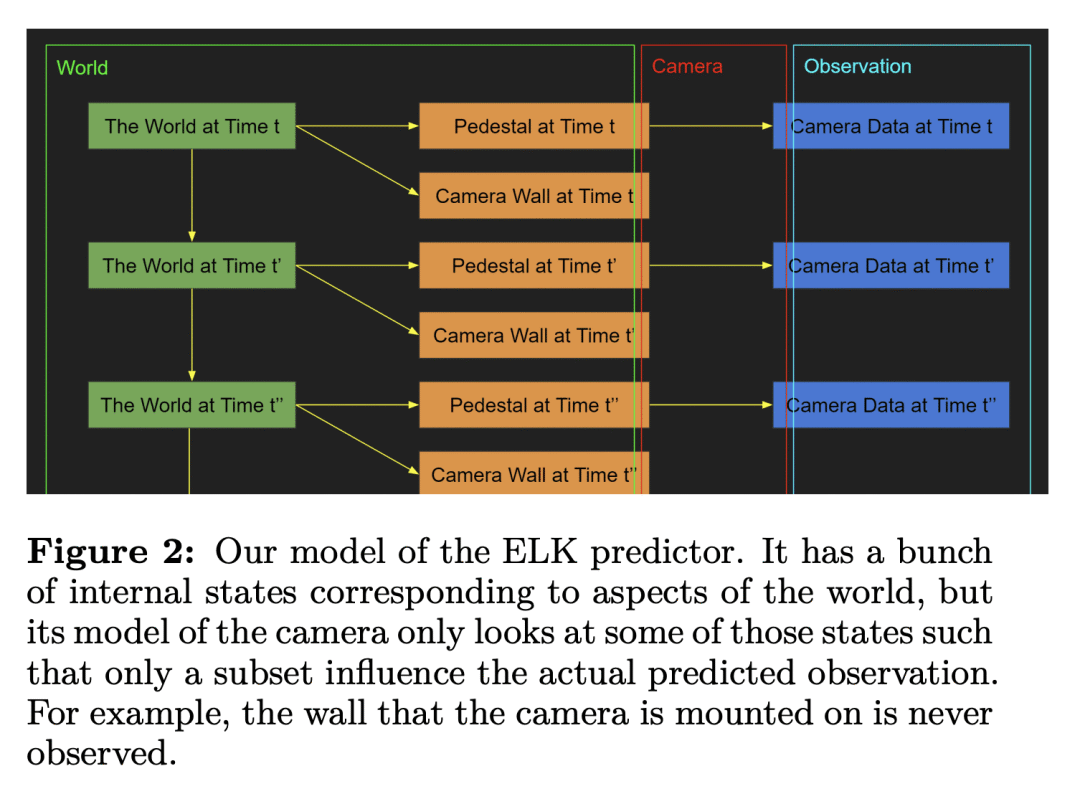

[LG] Conditioning Predictive Models: Risks and Strategies

E Hubinger, A Jermyn, J Treutlein, R Hudson, K Woolverton

[Anthropic & UC Berkeley & University of Toronto]

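Key points:

- Aims to provide a reference for what it would take to safely use predictive models without a solution to the Eliciting Latent Knowledge problem;
- Large language models can be understood as predictive models of the world and can be used safely through careful restriction and conditioning;
- Using predictive models raises safety problems that must be addressed, such as predicting other AI systems, self-fulfilling prophecies and anthropic capture;
- Conditioning predictive models is the safest known way to elicit human-level capabilities from language models, but it must be deployed carefully and quickly to avoid dangerous misuse.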

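One-sentence summary:

Despite potential safety problems and scaling limitations, carefully conditioned predictive models are the safest known way to elicit human-level or slightly superhuman capabilities from large language models.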

Our intention is to provide a definitive reference on what it would take to safely make use of predictive models in the absence of a solution to the Eliciting Latent Knowledge problem. Furthermore, we believe that large language models can be understood as such predictive models of the world, and that such a conceptualization raises significant opportunities for their safe yet powerful use via carefully conditioning them to predict desirable outputs. Unfortunately, such approaches also raise a variety of potentially fatal safety problems, particularly surrounding situations where predictive models predict the output of other AI systems, potentially unbeknownst to us. There are numerous potential solutions to such problems, however, primarily via carefully conditioning models to predict the things we want (e.g. humans) rather than the things we don’t (e.g. malign AIs). Furthermore, due to the simplicity of the prediction objective, we believe that predictive models present the easiest inner alignment problem that we are aware of. As a result, we think that conditioning approaches for predictive models represent the safest known way of eliciting human-level and slightly superhuman capabilities from large language models and other similar future models.

https://arxiv.org/abs/2302.00805

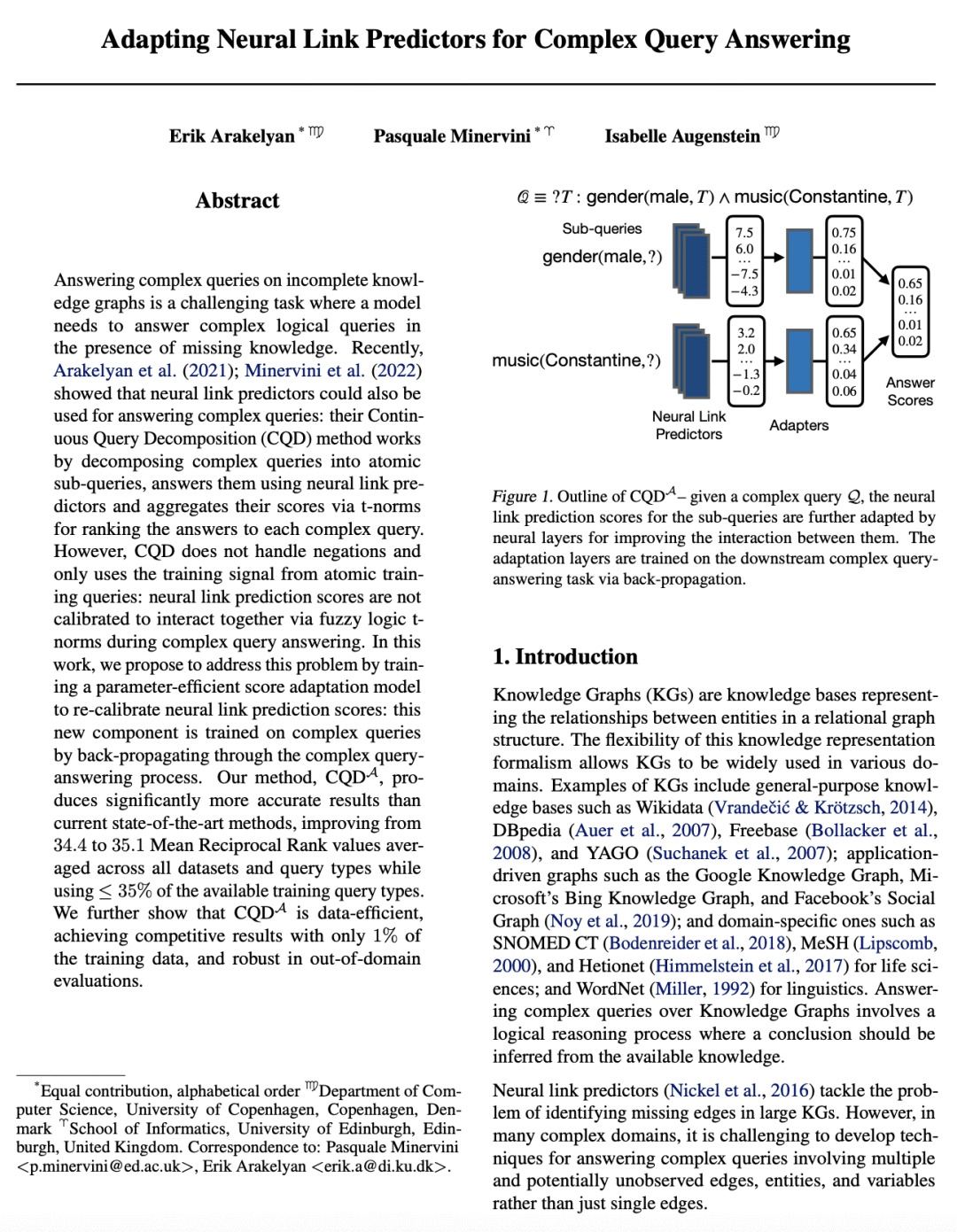

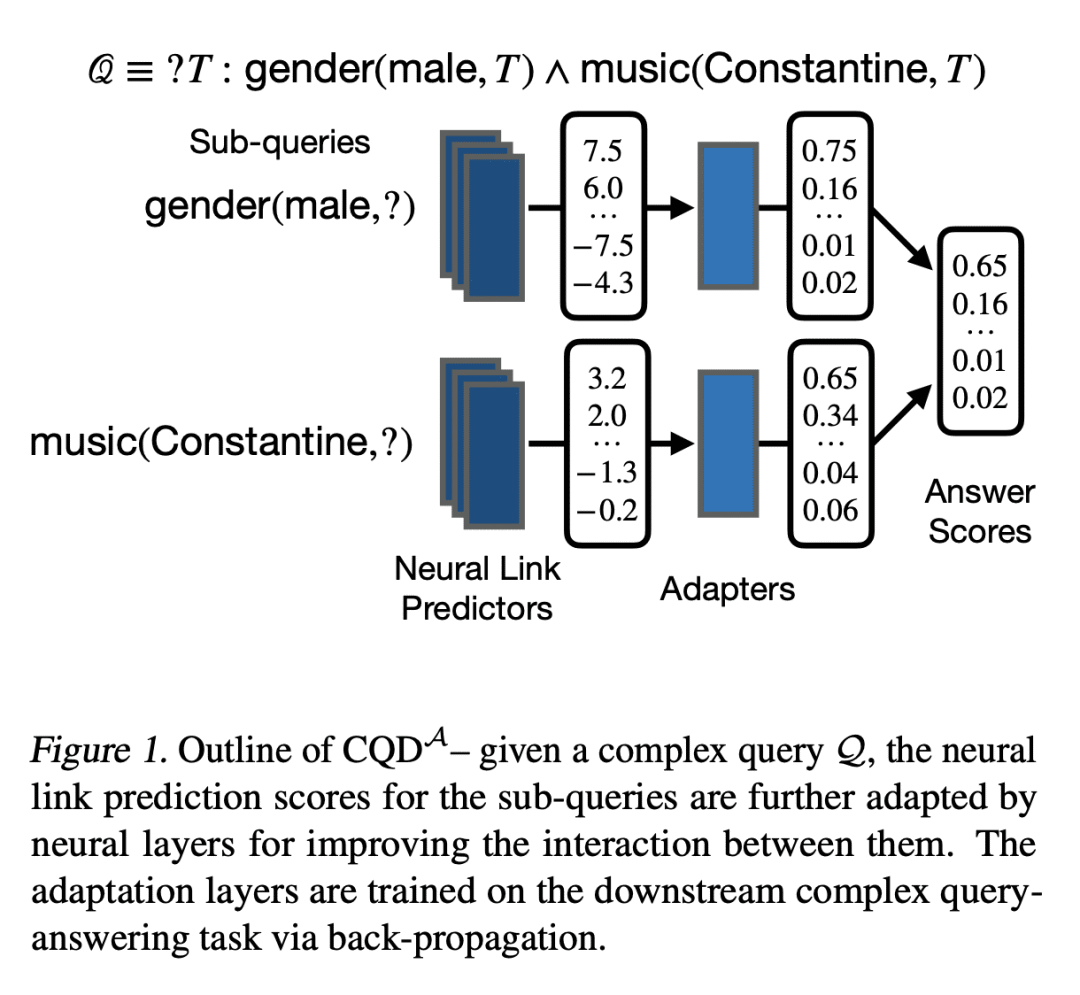

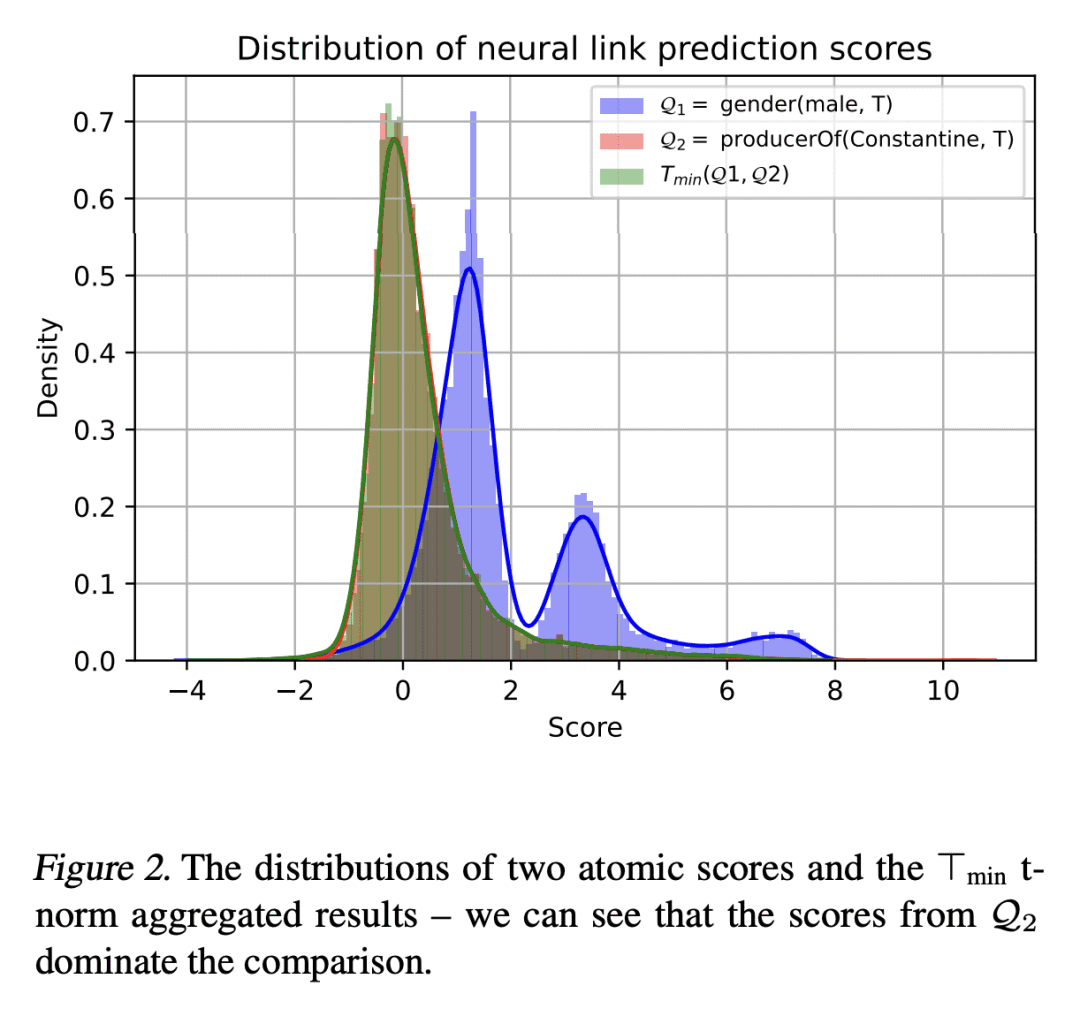

[LG] Adapting Neural Link Predictors for Complex Query Answering

E Arakelyan, P Minervini, I Augenstein

[University of Copenhagen & University of Edinburgh]

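Key points:

- Proposes Continuous Query Decomposition Adaptation (CQDA), a new method for answering complex queries on incomplete knowledge graphs;
- CQDA is a parameter-efficient score adaptation model that re-calibrates neural link prediction scores to make complex query answering more accurate;
- Compared with state-of-the-art methods, CQDA significantly improves mean reciprocal rank, from 34.4 to 35.1.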

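One-sentence summary:

Proposes CQDA for answering complex first-order logic (FOL) queries over knowledge graphs (KGs). Compared with the previous state of the art, it raises mean reciprocal rank (MRR) by 0.7 while using at most 35% of the training query types, and it is data-efficient.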

Answering complex queries on incomplete knowledge graphs is a challenging task where a model needs to answer complex logical queries in the presence of missing knowledge. Recently, Arakelyan et al. (2021); Minervini et al. (2022) showed that neural link predictors could also be used for answering complex queries: their Continuous Query Decomposition (CQD) method works by decomposing complex queries into atomic sub-queries, answers them using neural link predictors and aggregates their scores via t-norms for ranking the answers to each complex query. However, CQD does not handle negations and only uses the training signal from atomic training queries: neural link prediction scores are not calibrated to interact together via fuzzy logic t-norms during complex query answering. In this work, we propose to address this problem by training a parameter-efficient score adaptation model to re-calibrate neural link prediction scores: this new component is trained on complex queries by back-propagating through the complex query-answering process. Our method, CQDA, produces significantly more accurate results than current state-of-the-art methods, improving from 34.4 to 35.1 Mean Reciprocal Rank values averaged across all datasets and query types while using ≤35% of the available training query types. We further show that CQDA is data-efficient, achieving competitive results with only 1% of the training data, and robust in out-of-domain evaluations.
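The abstract describes CQD as decomposing a query into atomic link-prediction sub-queries and combining their scores with t-norms, with CQDA adding a learned re-calibration of those scores. The sketch below answers a two-hop query ?Y . ∃V : r1(a, V) ∧ r2(V, Y). It combines the two hops with a t-norm and takes the maximum over the intermediate variable V. The affine-in-logit `adapt` function is a hypothetical stand-in for CQDA's adaptation model. Its per-relation `params` are hand-set here rather than trained by back-propagating through query answering. The toy link predictor is also illustrative.

```python
import math
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Link predictor: (head, relation, tail) -> plausibility score in (0, 1).
LinkScore = Callable[[str, str, str], float]

def product_tnorm(a: float, b: float) -> float:
    return a * b

def godel_tnorm(a: float, b: float) -> float:
    return min(a, b)

def adapt(score: float, alpha: float, beta: float) -> float:
    """Hypothetical re-calibration: affine map in logit space, then sigmoid.
    With alpha = beta = 0 the score is (numerically) unchanged."""
    s = min(max(score, 1e-6), 1.0 - 1e-6)
    z = math.log(s / (1.0 - s))
    return 1.0 / (1.0 + math.exp(-((1.0 + alpha) * z + beta)))

def answer_two_hop(anchor: str, r1: str, r2: str, entities: Sequence[str],
                   score: LinkScore,
                   tnorm: Callable[[float, float], float] = product_tnorm,
                   params: Optional[Dict[str, Tuple[float, float]]] = None
                   ) -> List[Tuple[float, str]]:
    """Rank answers Y of  ?Y . exists V : r1(anchor, V) and r2(V, Y)."""
    params = params or {}

    def s(h: str, r: str, t: str) -> float:
        alpha, beta = params.get(r, (0.0, 0.0))
        return adapt(score(h, r, t), alpha, beta)

    ranked = [(max(tnorm(s(anchor, r1, v), s(v, r2, y)) for v in entities), y)
              for y in entities]
    return sorted(ranked, key=lambda t: (-t[0], t[1]))

FACTS = {("alice", "born_in", "lyon"), ("lyon", "located_in", "france")}

def toy_score(h: str, r: str, t: str) -> float:
    return 0.9 if (h, r, t) in FACTS else 0.1

ENTS = ["alice", "lyon", "france", "spain"]
print(answer_two_hop("alice", "born_in", "located_in", ENTS, toy_score)[0][1])  # france
```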

https://arxiv.org/abs/2301.12313
