1. [LG] Stable Target Field for Reduced Variance Score Estimation in Diffusion Models

2. [LG] GFlowNets for AI-Driven Scientific Discovery

3. [LG] Deep Power Laws for Hyperparameter Optimization

4. [CL] Large Language Models Can Be Easily Distracted by Irrelevant Context

5. [LG] Debiasing Vision-Language Models via Biased Prompts

[LG] A Bias-Variance-Privacy Trilemma for Statistical Estimation

[AS] Epic-Sounds: A Large-scale Dataset of Actions That Sound

[CL] Synthetic Prompting: Generating Chain-of-Thought Demonstrations for Large Language Models

[LG] A Comprehensive Survey of Continual Learning: Theory, Method and Application

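Summary: stable target fields for reduced-variance score estimation in diffusion models, a GFlowNet framework for AI-driven scientific discovery, hyperparameter optimization with deep power laws, large language models being distracted by irrelevant context, debiasing vision-language models with biased prompts, a bias-variance-privacy trilemma for statistical estimation, a large-scale dataset of actions that sound, generating chain-of-thought demonstrations for large language models, and a survey of continual learning.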

1. [LG] Stable Target Field for Reduced Variance Score Estimation in Diffusion Models

Y Xu, S Tong, T Jaakkola

[MIT]


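Key points:

- Identifies the instability of the training targets of current diffusion models, which is most pronounced in the intermediate regime where the score-learning targets vary the most;
- Proposes Stable Target Field (STF), a general score-matching objective that provides more stable training targets;
- Analyzes STF and proves that it is asymptotically unbiased and reduces the trace of the covariance of the training targets.

One-sentence summary:

By reducing the trace of the covariance of the training targets in the denoising score-matching objective, the STF objective improves the performance, stability, and training speed of score-based diffusion models.

Abstract: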

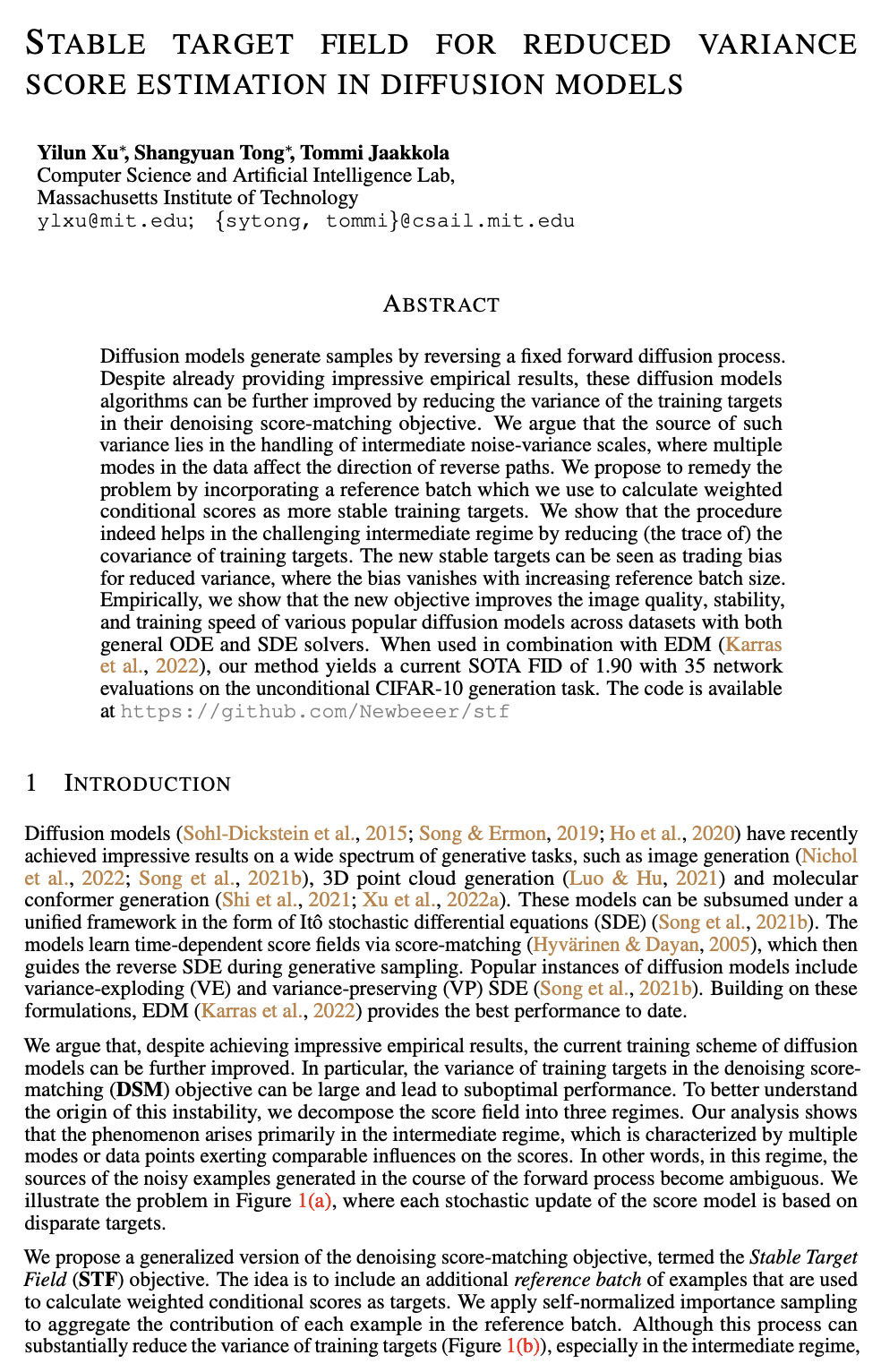

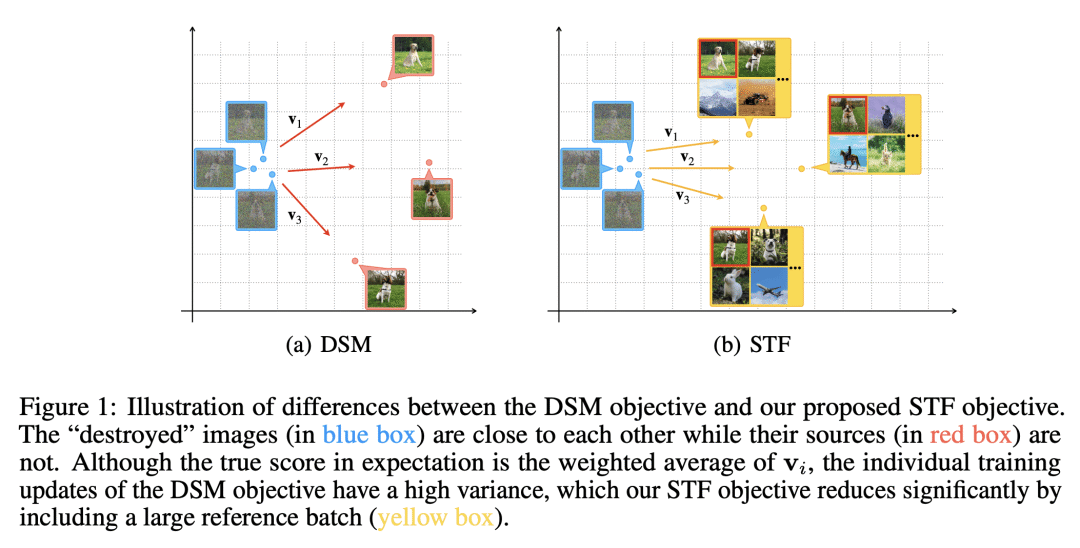

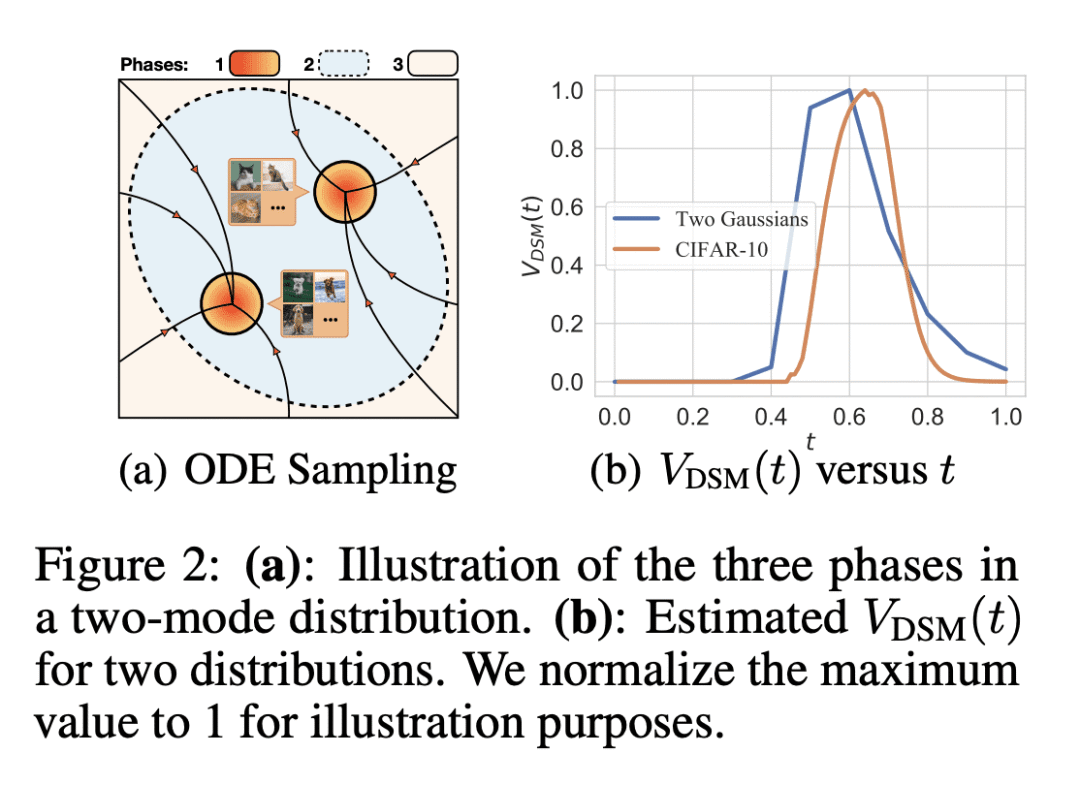


Diffusion models generate samples by reversing a fixed forward diffusion process. Despite already providing impressive empirical results, these diffusion model algorithms can be further improved by reducing the variance of the training targets in their denoising score-matching objective. We argue that the source of such variance lies in the handling of intermediate noise-variance scales, where multiple modes in the data affect the direction of reverse paths. We propose to remedy the problem by incorporating a reference batch which we use to calculate weighted conditional scores as more stable training targets. We show that the procedure indeed helps in the challenging intermediate regime by reducing (the trace of) the covariance of training targets. The new stable targets can be seen as trading bias for reduced variance, where the bias vanishes with increasing reference batch size. Empirically, we show that the new objective improves the image quality, stability, and training speed of various popular diffusion models across datasets with both general ODE and SDE solvers. When used in combination with EDM, our method yields a current SOTA FID of 1.90 with 35 network evaluations on the unconditional CIFAR-10 generation task. The code is available via the link on the arXiv abstract page.

https://arxiv.org/abs/2302.00670
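The weighted conditional score described in the abstract can be sketched in a few lines. This is a minimal NumPy illustration, not the authors' code: it assumes a Gaussian perturbation kernel x = y + σ·z (so p(x | y_i) ∝ exp(−‖x − y_i‖² / 2σ²)) and a reference batch whose first row is the clean sample that produced x; the function name `stf_target` is hypothetical. The target is a softmax-weighted average of the per-sample conditional scores (y_i − x)/σ², so with a reference batch of size 1 it reduces to the ordinary denoising score-matching target.

```python
import numpy as np


def stf_target(x: np.ndarray, ref_batch: np.ndarray, sigma: float) -> np.ndarray:
    """Weighted conditional score used as a training target for one perturbed sample.

    x:         perturbed sample, shape (d,)
    ref_batch: clean reference samples, shape (n, d); row 0 is assumed to be
               the clean sample that x was generated from
    sigma:     noise level of the assumed Gaussian kernel x = y + sigma * z
    """
    diffs = ref_batch - x                                  # y_i - x, shape (n, d)
    # log p(x | y_i) up to a constant shared by all i, for N(y_i, sigma^2 I)
    logits = -np.sum(diffs ** 2, axis=1) / (2.0 * sigma ** 2)
    weights = np.exp(logits - logits.max())                # numerically stable softmax
    weights /= weights.sum()                               # posterior weight of each y_i
    cond_scores = diffs / sigma ** 2                       # grad_x log p(x | y_i)
    return weights @ cond_scores                           # shape (d,)


# Compare the STF target with the single-sample denoising score-matching target.
rng = np.random.default_rng(0)
sigma = 0.5
ref = rng.normal(size=(64, 2))                    # toy "data" reference batch
x = ref[0] + sigma * rng.normal(size=2)           # perturb the first sample
dsm_target = (ref[0] - x) / sigma ** 2            # standard DSM target
stf = stf_target(x, ref, sigma)                   # STF target: trades bias for lower variance
```

Because the weights are a posterior over which reference sample produced x, the bias shrinks as the reference batch grows, which matches the trade-off the abstract describes.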

2. [LG] GFlowNets for AI-Driven Scientific Discovery

M Jain, T Deleu, J Hartford, C Liu, A Hernandez-Garcia, Y Bengio

[Université de Montréal & McGill University]


要点:

-

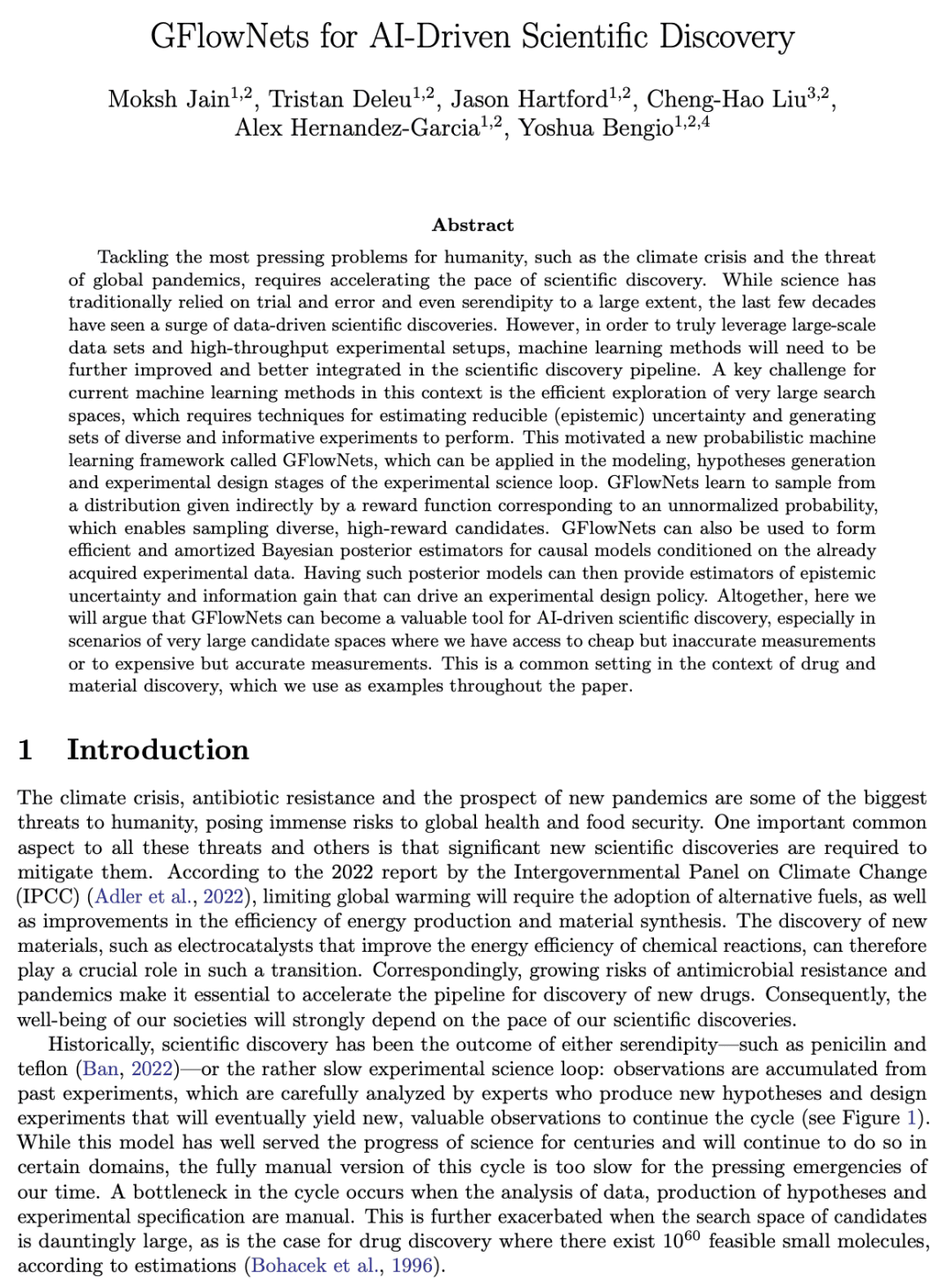

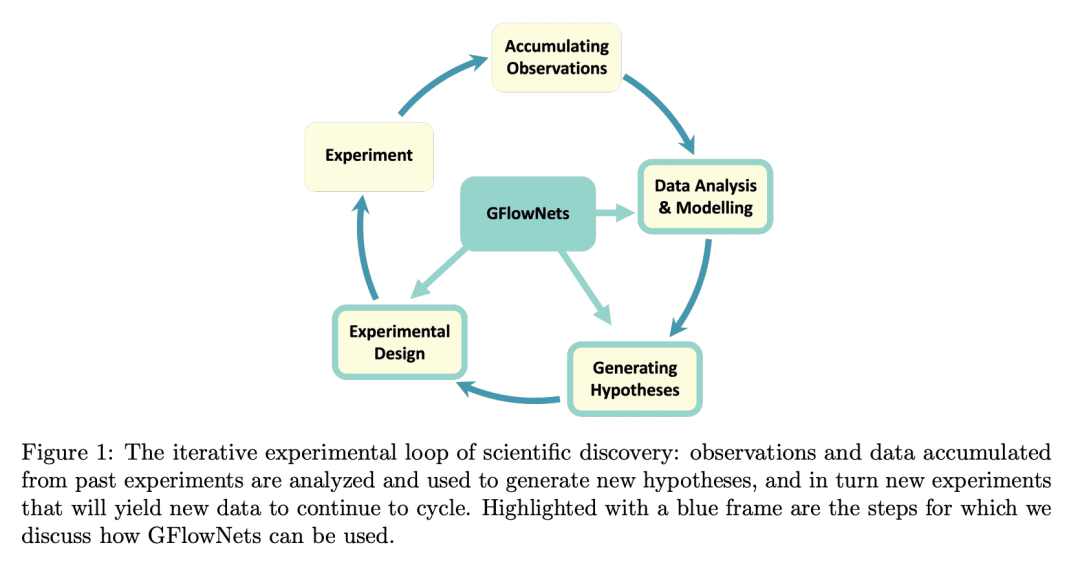

一个新的机器学习框架GFlowNet ,用于科学发现; -

GFlowNet 可用于假设的生成、实验设计和实验观察的建模; -

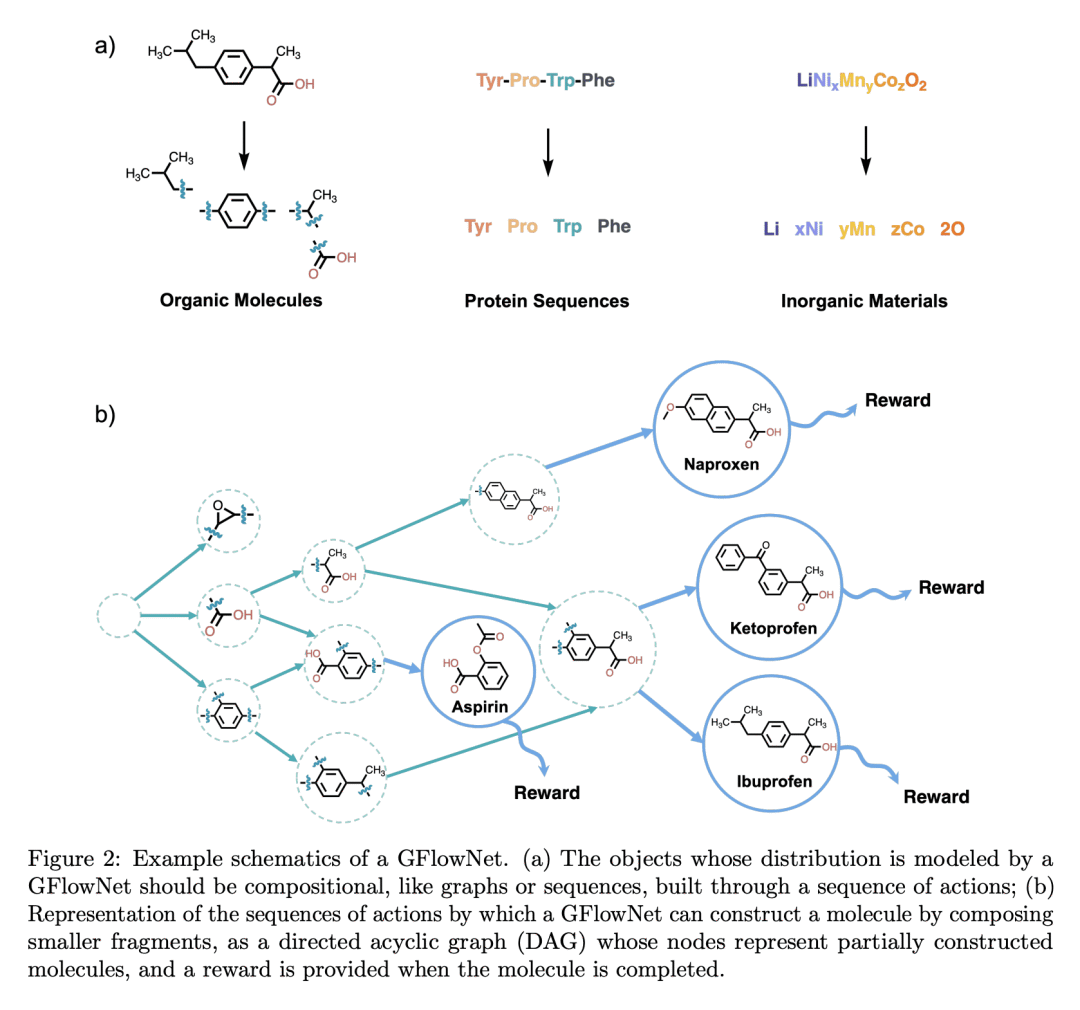

GFlowNet 能生成与奖励函数成正比的样本,可以推动科学发现。

一句话总结:

GFlowNet 是一种新的概率机器学习框架,可以通过有效地探索大的搜索空间和通过贝叶斯后验估计器估计可降低的(认识层面)不确定性来改善科学发现。

摘要:


Tackling the most pressing problems for humanity, such as the climate crisis and the threat of global pandemics, requires accelerating the pace of scientific discovery. While science has traditionally relied on trial and error and even serendipity to a large extent, the last few decades have seen a surge of data-driven scientific discoveries. However, in order to truly leverage large-scale data sets and high-throughput experimental setups, machine learning methods will need to be further improved and better integrated in the scientific discovery pipeline. A key challenge for current machine learning methods in this context is the efficient exploration of very large search spaces, which requires techniques for estimating reducible (epistemic) uncertainty and generating sets of diverse and informative experiments to perform. This motivated a new probabilistic machine learning framework called GFlowNets, which can be applied in the modeling, hypotheses generation and experimental design stages of the experimental science loop. GFlowNets learn to sample from a distribution given indirectly by a reward function corresponding to an unnormalized probability, which enables sampling diverse, high-reward candidates. GFlowNets can also be used to form efficient and amortized Bayesian posterior estimators for causal models conditioned on the already acquired experimental data. Having such posterior models can then provide estimators of epistemic uncertainty and information gain that can drive an experimental design policy. Altogether, here we will argue that GFlowNets can become a valuable tool for AI-driven scientific discovery, especially in scenarios of very large candidate spaces where we have access to cheap but inaccurate measurements or to expensive but accurate measurements. This is a common setting in the context of drug and material discovery, which we use as examples throughout the paper.

https://arxiv.org/abs/2302.00615

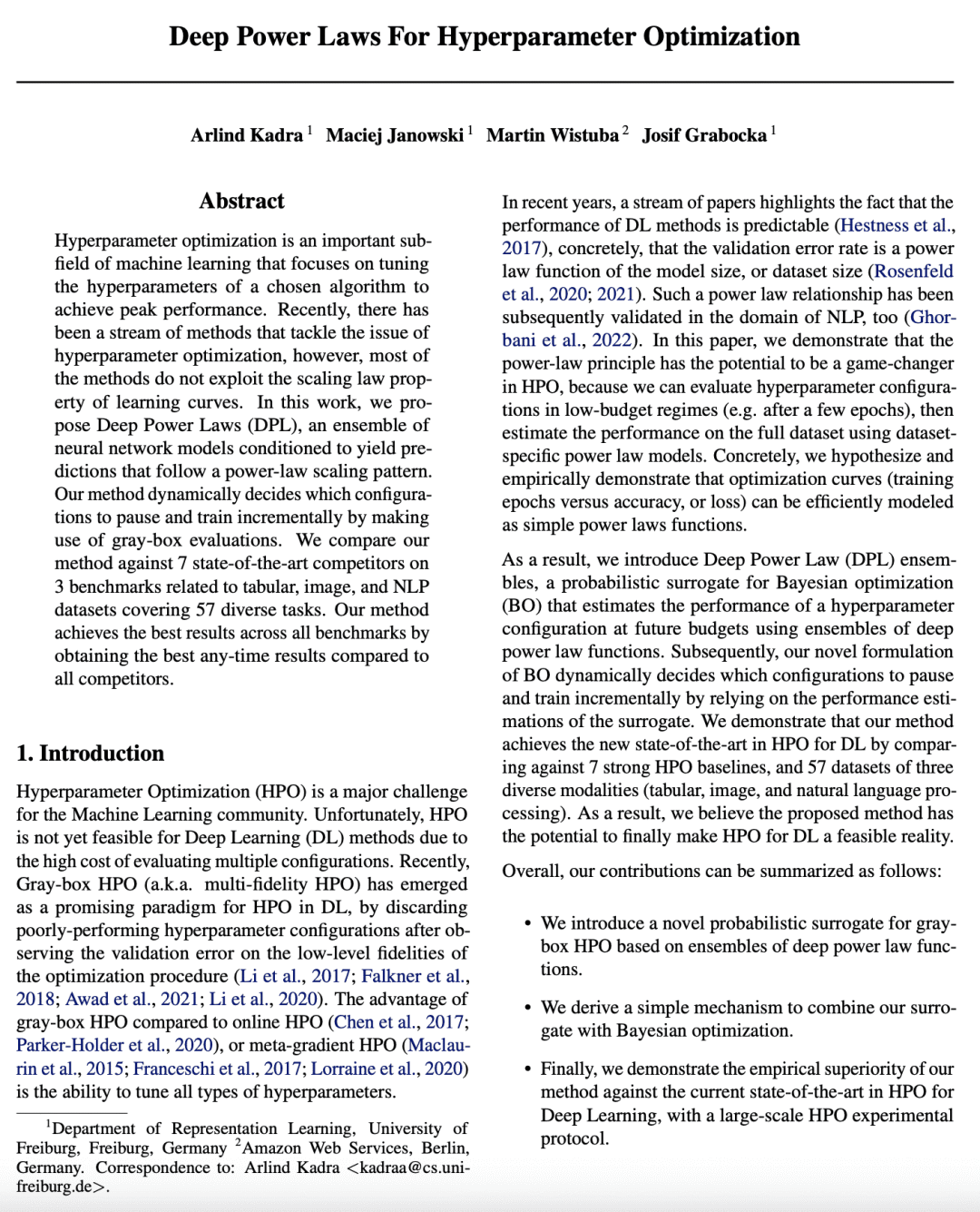

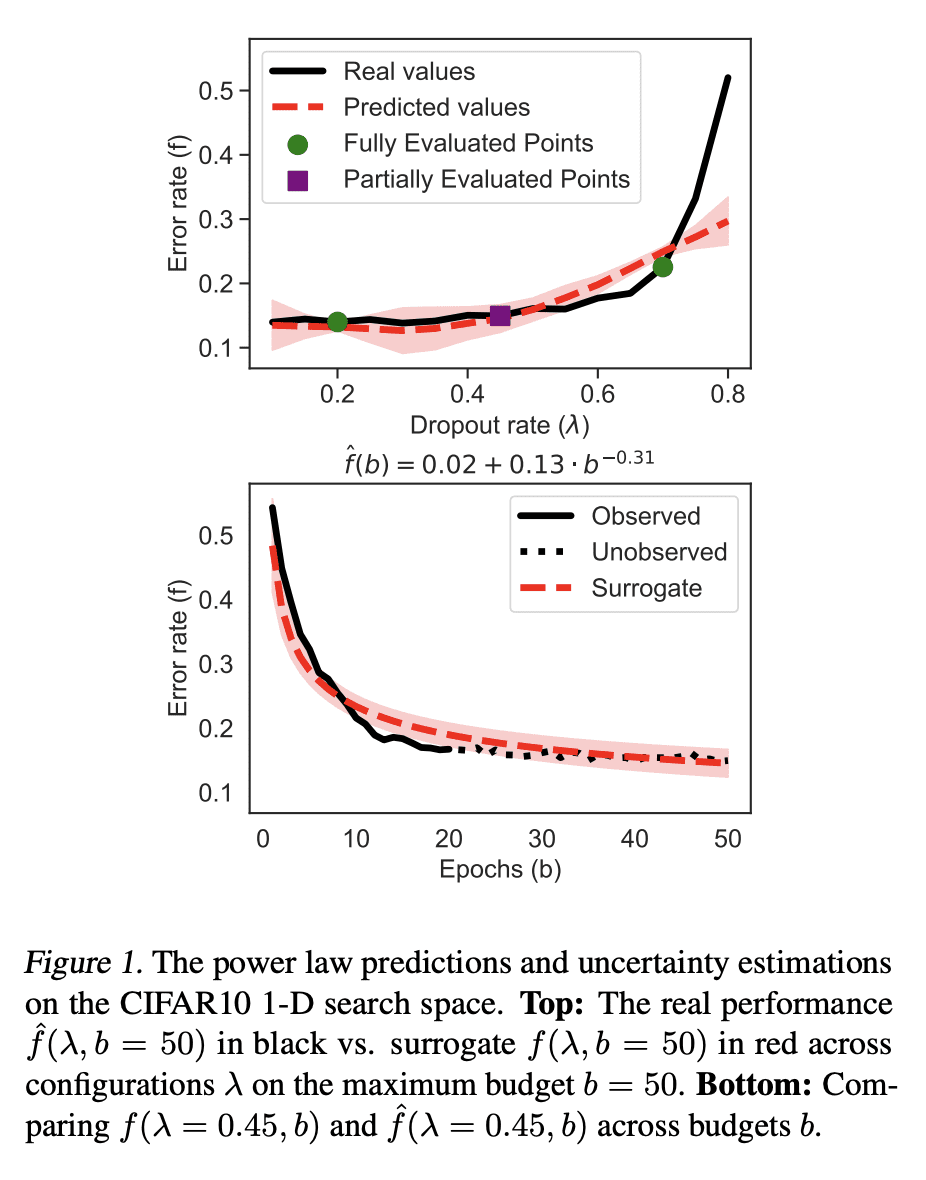

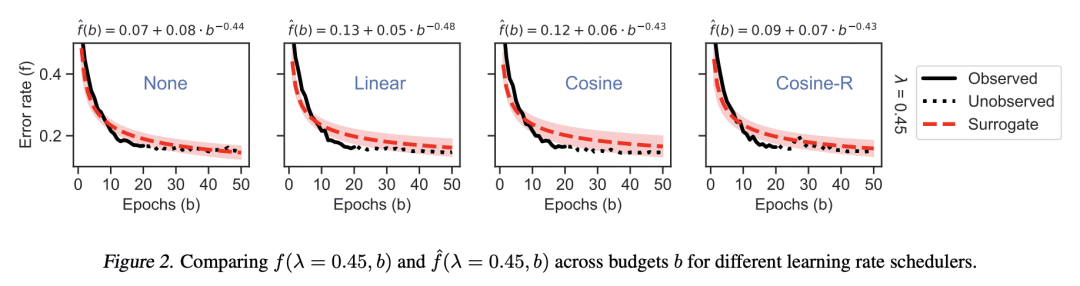
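The phrase "sample from a distribution given indirectly by a reward function" means that a trained GFlowNet generates object x with probability R(x)/Z. The toy sketch below is not from the paper and skips learning entirely: on a tiny tree-structured state space (bit strings built one bit at a time, with a hypothetical reward) it computes the exact flows F(s) by recursion and derives the forward policy P_F(s'|s) = F(s')/F(s) that a GFlowNet would be trained to approximate. Real GFlowNets work on general DAGs, where several construction paths can lead to the same object, and learn these quantities with objectives such as flow matching or trajectory balance.

```python
import itertools
import random
from functools import lru_cache

N_BITS = 3  # toy assumption: terminal objects are bit strings of this length


def reward(x: str) -> float:
    """Hypothetical positive reward that favors strings with more 1s."""
    return 1.0 + x.count("1") ** 2


def all_terminals() -> list:
    return ["".join(bits) for bits in itertools.product("01", repeat=N_BITS)]


@lru_cache(maxsize=None)
def flow(state: str) -> float:
    """Exact state flow F(s): total reward of all terminals reachable from s.

    States are prefixes and each action appends one bit, so the graph is a tree.
    """
    if len(state) == N_BITS:
        return reward(state)
    return flow(state + "0") + flow(state + "1")


def forward_policy(state: str) -> dict:
    """P_F(s' | s) = F(s') / F(s), the policy a GFlowNet learns to approximate."""
    total = flow(state)
    return {state + b: flow(state + b) / total for b in "01"}


def sample(rng: random.Random) -> str:
    """Roll out P_F from the empty prefix until a terminal bit string is reached."""
    state = ""
    while len(state) < N_BITS:
        children = forward_policy(state)
        state = rng.choices(list(children), weights=list(children.values()))[0]
    return state


def terminal_prob(x: str) -> float:
    """Exact probability that a rollout of P_F ends at terminal x."""
    p = 1.0
    for i in range(N_BITS):
        p *= forward_policy(x[:i])[x[: i + 1]]
    return p


Z = flow("")  # partition function: the sum of all terminal rewards
```

The product of policy ratios along a path telescopes to F(x)/F(root) = R(x)/Z, which is exactly the "proportional to reward" property; diverse high-reward candidates are sampled rather than only the single best one.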

3. [LG] Deep Power Laws for Hyperparameter Optimization

A Kadra, M Janowski, M Wistuba, J Grabocka

[University of Freiburg & Amazon Web Services]


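Key points:

- Proposes a new probabilistic surrogate for gray-box hyperparameter optimization, based on an ensemble of deep power-law functions;
- A simple mechanism for combining the surrogate with Bayesian optimization;
- Demonstrates, through a large-scale set of hyperparameter optimization experiments, the method's empirical advantage over current state-of-the-art hyperparameter optimization methods for deep learning.

One-sentence summary:

Deep Power Laws (DPL) is a new probabilistic surrogate for gray-box hyperparameter optimization of deep learning models; it combines an ensemble of power-law functions with Bayesian optimization and outperforms current state-of-the-art methods.

Abstract: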


Hyperparameter optimization is an important subfield of machine learning that focuses on tuning the hyperparameters of a chosen algorithm to achieve peak performance. Recently, there has been a stream of methods that tackle the issue of hyperparameter optimization, however, most of the methods do not exploit the scaling law property of learning curves. In this work, we propose Deep Power Laws (DPL), an ensemble of neural network models conditioned to yield predictions that follow a power-law scaling pattern. Our method dynamically decides which configurations to pause and train incrementally by making use of gray-box evaluations. We compare our method against 7 state-of-the-art competitors on 3 benchmarks related to tabular, image, and NLP datasets covering 57 diverse tasks. Our method achieves the best results across all benchmarks by obtaining the best any-time results compared to all competitors.

https://arxiv.org/abs/2302.00441

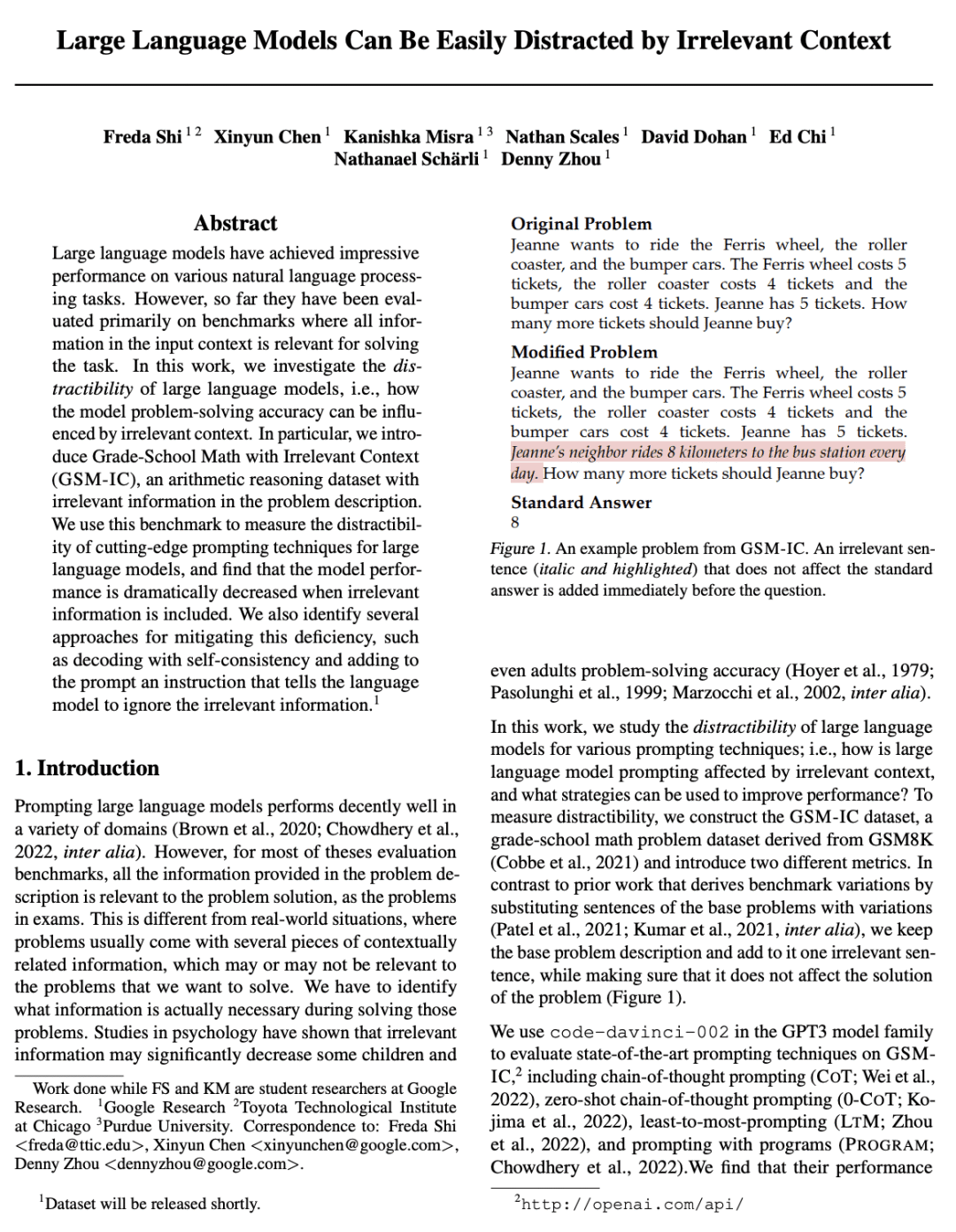

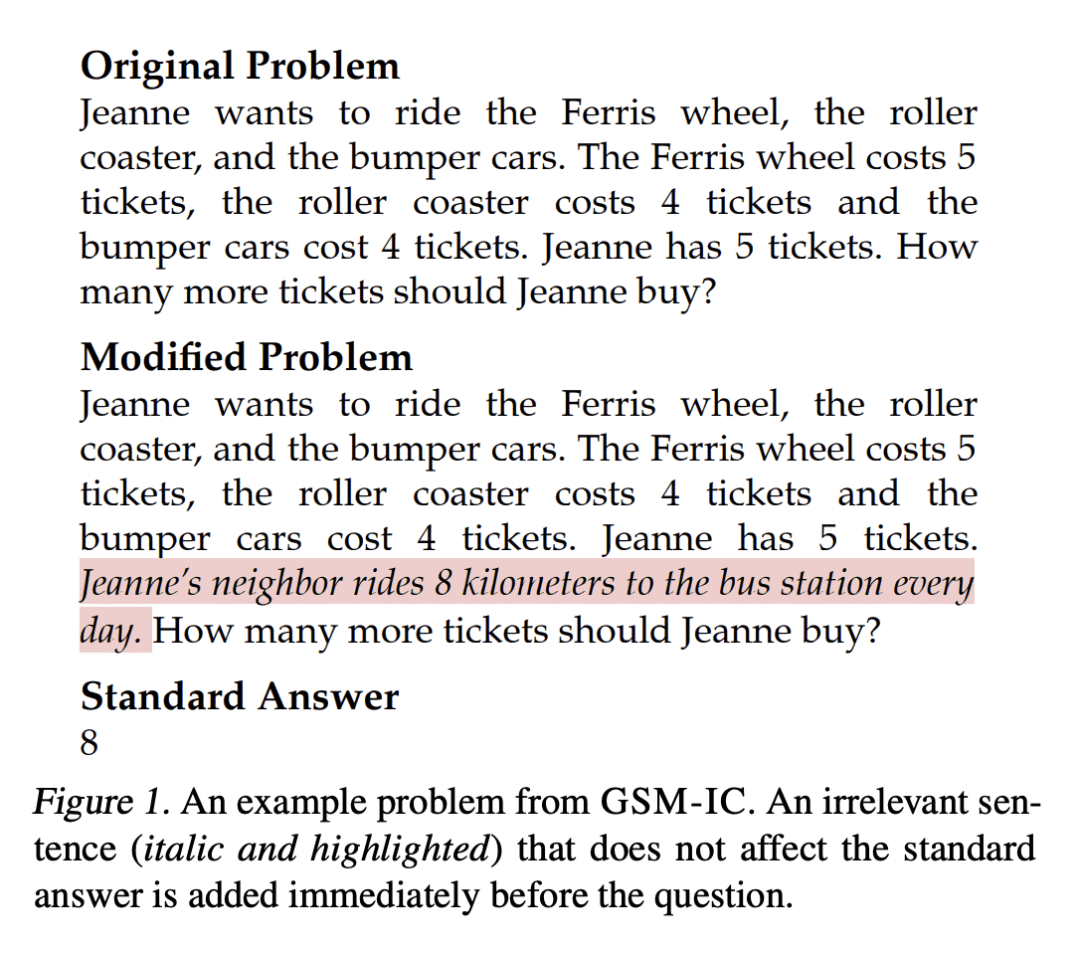

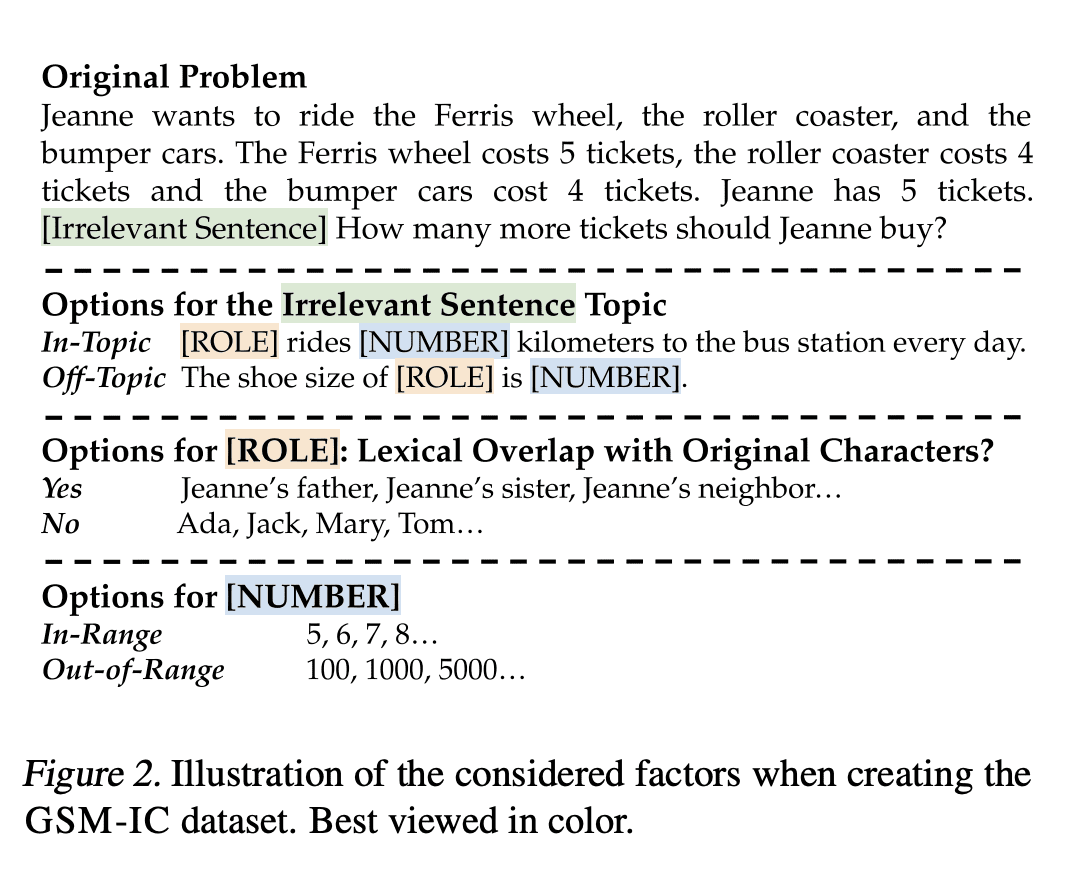

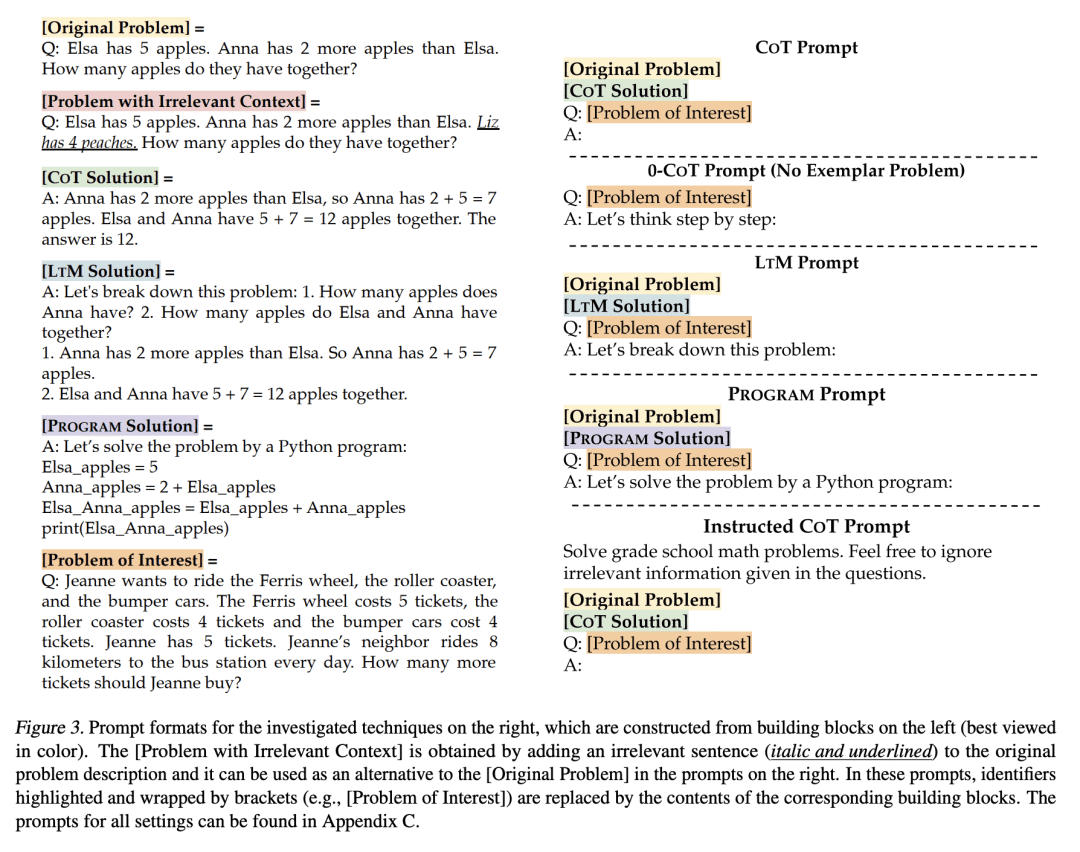
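DPL rests on the observation that learning curves tend to follow a power law in the training budget, so a few early gray-box observations can be extrapolated. The sketch below is a deliberately simplified stand-in, not DPL itself: instead of an ensemble of neural networks it fits a single three-parameter power law loss(b) ≈ α + β·b^(−γ) per configuration, using a grid over γ with closed-form least squares for α and β, and `pick_config_to_continue` is a hypothetical greedy rule in place of the paper's Bayesian-optimization acquisition.

```python
import numpy as np

GAMMA_GRID = np.linspace(0.01, 2.0, 200)  # candidate decay exponents (assumption)


def fit_power_law(budgets, losses):
    """Fit loss(b) ~ alpha + beta * b**(-gamma) by least squares.

    For each gamma on the grid, alpha and beta solve a linear least-squares
    problem; the gamma with the smallest squared error is kept.
    """
    b = np.asarray(budgets, dtype=float)
    y = np.asarray(losses, dtype=float)
    best = None
    for gamma in GAMMA_GRID:
        X = np.column_stack([np.ones_like(b), b ** (-gamma)])
        coef, *_ = np.linalg.lstsq(X, y, rcond=None)
        sse = float(np.sum((y - X @ coef) ** 2))
        if best is None or sse < best[0]:
            best = (sse, coef[0], coef[1], gamma)
    _, alpha, beta, gamma = best
    return alpha, beta, gamma


def predict(params, budget):
    alpha, beta, gamma = params
    return alpha + beta * budget ** (-gamma)


def pick_config_to_continue(curves, max_budget):
    """Index of the configuration with the lowest extrapolated loss at max_budget.

    curves: list of (budgets, losses) pairs observed so far, one per configuration.
    """
    preds = [predict(fit_power_law(b, y), max_budget) for b, y in curves]
    return int(np.argmin(preds))


# Configuration A looks worse after 5 epochs but converges lower than B.
budgets = np.arange(1, 6)
curve_a = 0.1 + 2.0 * budgets ** -0.5
curve_b = 0.3 + 0.5 * budgets ** -0.5
```

Here `pick_config_to_continue([(budgets, curve_a), (budgets, curve_b)], 1000)` selects configuration A (index 0) even though B currently has the lower loss, which is the kind of decision a greedy "best so far" rule would get wrong.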

4. [CL] Large Language Models Can Be Easily Distracted by Irrelevant Context

F Shi, X Chen, K Misra, N Scales, D Dohan, E Chi, N Schärli, D Zhou

[Google Research]


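Key points:

- Large language models are easily distracted by irrelevant context, which lowers their performance on arithmetic reasoning tasks;
- Self-consistency improves model robustness to irrelevant context;
- Including exemplars that contain irrelevant context in the prompt, or adding an instruction to ignore irrelevant context, improves model performance.

One-sentence summary:

Large language models are easily distracted by irrelevant context, but their performance can be improved with self-consistency, exemplar prompts, and instructions to ignore irrelevant information.

Abstract: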


Large language models have achieved impressive performance on various natural language processing tasks. However, so far they have been evaluated primarily on benchmarks where all information in the input context is relevant for solving the task. In this work, we investigate the distractibility of large language models, i.e., how the model problem-solving accuracy can be influenced by irrelevant context. In particular, we introduce Grade-School Math with Irrelevant Context (GSM-IC), an arithmetic reasoning dataset with irrelevant information in the problem description. We use this benchmark to measure the distractibility of cutting-edge prompting techniques for large language models, and find that the model performance is dramatically decreased when irrelevant information is included. We also identify several approaches for mitigating this deficiency, such as decoding with self-consistency and adding to the prompt an instruction that tells the language model to ignore the irrelevant information.

https://arxiv.org/abs/2302.00093

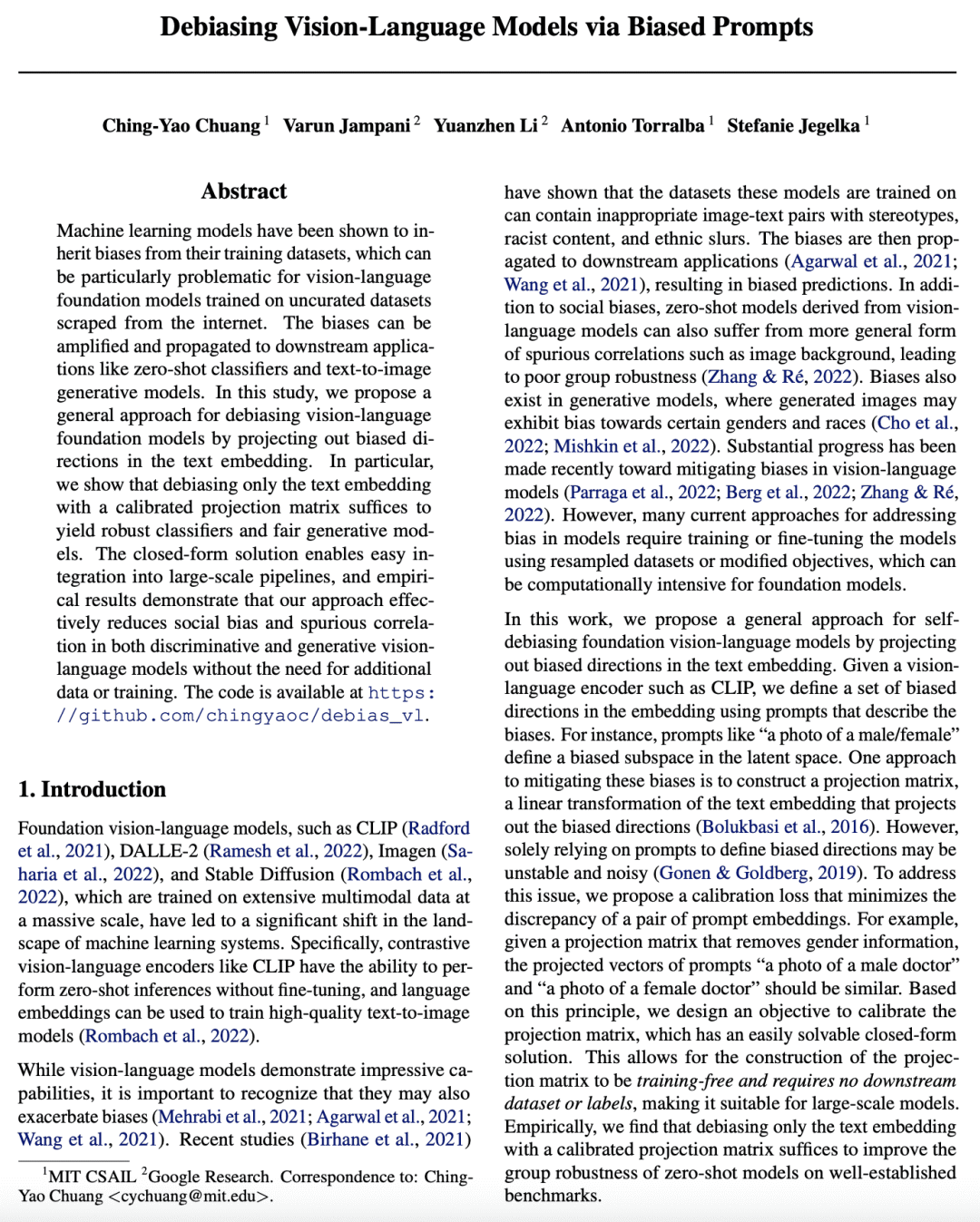

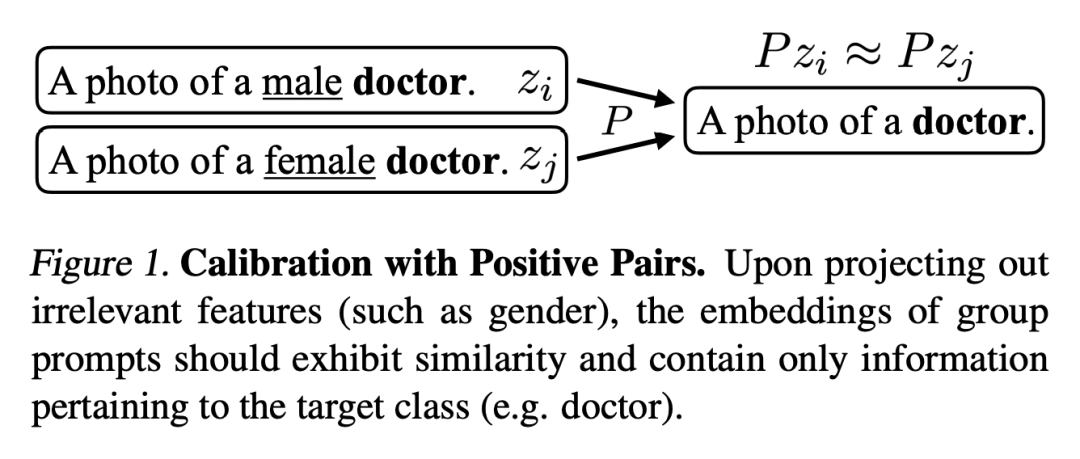
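The two mitigations named in the abstract, an instruction telling the model to ignore irrelevant information and self-consistency decoding, amount to prompt assembly plus a majority vote over sampled answers. The sketch below assumes a simple "Q:/A:" few-shot layout; the instruction wording, the layout, and the helper names are illustrative assumptions, and the model call that would produce the sampled answers is deliberately left out.

```python
from collections import Counter
from typing import List, Sequence, Tuple

# Approximate wording of an "ignore irrelevant information" instruction (assumption).
IGNORE_INSTRUCTION = "Feel free to ignore irrelevant information given in the questions."


def build_prompt(question: str,
                 exemplars: Sequence[Tuple[str, str]],
                 ignore_instruction: bool = True) -> str:
    """Assemble a few-shot chain-of-thought prompt.

    exemplars: (question, worked solution) pairs; per the paper's findings,
    exemplars that themselves contain irrelevant sentences can also help.
    """
    parts = []
    if ignore_instruction:
        parts.append("Solve grade school math problems. " + IGNORE_INSTRUCTION)
    for q, solution in exemplars:
        parts.append(f"Q: {q}\nA: {solution}")
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


def self_consistency_vote(answers: List[str]) -> str:
    """Majority vote over the final answers of several sampled reasoning paths."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    return Counter(answers).most_common(1)[0][0]
```

In use, the same prompt would be sampled several times at a nonzero temperature, the final answer extracted from each completion, and `self_consistency_vote` applied to that list; a single distracted reasoning path is then outvoted by the others.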

5. [LG] Debiasing Vision-Language Models via Biased Prompts

C Chuang, V Jampani, Y Li, A Torralba, S Jegelka

[MIT CSAIL & Google Research]


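Key points:

- Proposes a simple, general method for debiasing vision-language models by projecting out biased directions in the text embedding;
- Requires no additional training, data, or labels, which makes it computationally efficient to apply to foundation models;
- The closed-form solution can be easily integrated into large-scale pipelines.

One-sentence summary:

A simple and general method for debiasing vision-language models that removes bias by projecting out biased directions in the text embedding; it is computationally efficient and needs no additional training, data, or labels.

Abstract: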


Machine learning models have been shown to inherit biases from their training datasets, which can be particularly problematic for vision-language foundation models trained on uncurated datasets scraped from the internet. The biases can be amplified and propagated to downstream applications like zero-shot classifiers and text-to-image generative models. In this study, we propose a general approach for debiasing vision-language foundation models by projecting out biased directions in the text embedding. In particular, we show that debiasing only the text embedding with a calibrated projection matrix suffices to yield robust classifiers and fair generative models. The closed-form solution enables easy integration into large-scale pipelines, and empirical results demonstrate that our approach effectively reduces social bias and spurious correlation in both discriminative and generative vision-language models without the need for additional data or training.

https://arxiv.org/abs/2302.00070
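Projecting out biased directions amounts to multiplying each text embedding by an orthogonal projector. The following is a minimal NumPy sketch, assuming the bias directions are supplied as rows of a matrix (for example, text embeddings of biased prompts such as "a photo of a male" and "a photo of a female", or differences between them). It implements only the plain projection P = I − A(AᵀA)⁺Aᵀ, not the paper's calibrated projection matrix, and the function names are hypothetical.

```python
import numpy as np


def projection_removing(bias_dirs: np.ndarray) -> np.ndarray:
    """Orthogonal projector onto the complement of span(bias_dirs).

    bias_dirs: shape (k, d), one bias direction per row.
    Returns P = I - A (A^T A)^+ A^T with A = bias_dirs.T, shape (d, d).
    """
    A = bias_dirs.T                                        # (d, k)
    return np.eye(A.shape[0]) - A @ np.linalg.pinv(A.T @ A) @ A.T


def debias(text_emb: np.ndarray, P: np.ndarray) -> np.ndarray:
    """Project each row of text_emb with P and re-normalize to unit length,
    as is usual before cosine-similarity scoring in CLIP-style models."""
    z = text_emb @ P.T
    return z / np.linalg.norm(z, axis=-1, keepdims=True)


# Toy data standing in for real text embeddings.
rng = np.random.default_rng(0)
d = 16
bias = rng.normal(size=(2, d))          # two hypothetical bias directions
P = projection_removing(bias)
class_emb = rng.normal(size=(5, d))     # e.g. embeddings of zero-shot class prompts
debiased = debias(class_emb, P)
```

Because only the text side is modified, the same `P` can be applied once to the class-prompt embeddings of a zero-shot classifier or to the prompt embedding that conditions a text-to-image model, with no retraining.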

A few other papers worth noting:

[LG] A Bias-Variance-Privacy Trilemma for Statistical Estimation

G Kamath, A Mouzakis, M Regehr, V Singhal, T Steinke, J Ullman

[University of Waterloo & Google Research & Northeastern University]


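Key points:

- Proves that a bias-variance-privacy trade-off is inherent in differentially private mean estimation;
- Shows that unbiased mean estimation is possible under approximate differential privacy for symmetric distributions;
- Proves that unbiased mean estimation is impossible under pure or concentrated differential privacy, even for Gaussian distributions.

One-sentence summary:

For differentially private mean estimation, the trade-off among low bias, low variance, and low privacy loss is inherent, but unbiased mean estimation is possible under approximate differential privacy if the distribution is symmetric.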

The canonical algorithm for differentially private mean estimation is to first clip the samples to a bounded range and then add noise to their empirical mean. Clipping controls the sensitivity and, hence, the variance of the noise that we add for privacy. But clipping also introduces statistical bias. We prove that this tradeoff is inherent: no algorithm can simultaneously have low bias, low variance, and low privacy loss for arbitrary distributions. On the positive side, we show that unbiased mean estimation is possible under approximate differential privacy if we assume that the distribution is symmetric. Furthermore, we show that, even if we assume that the data is sampled from a Gaussian, unbiased mean estimation is impossible under pure or concentrated differential privacy.

https://arxiv.org/abs/2301.13334

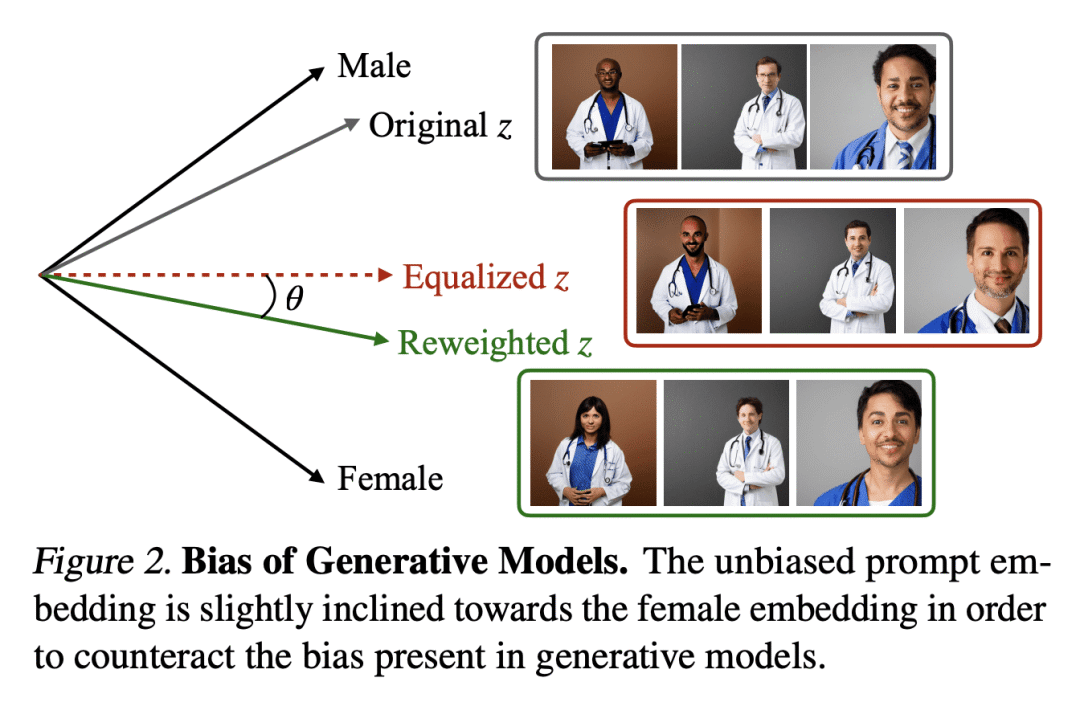

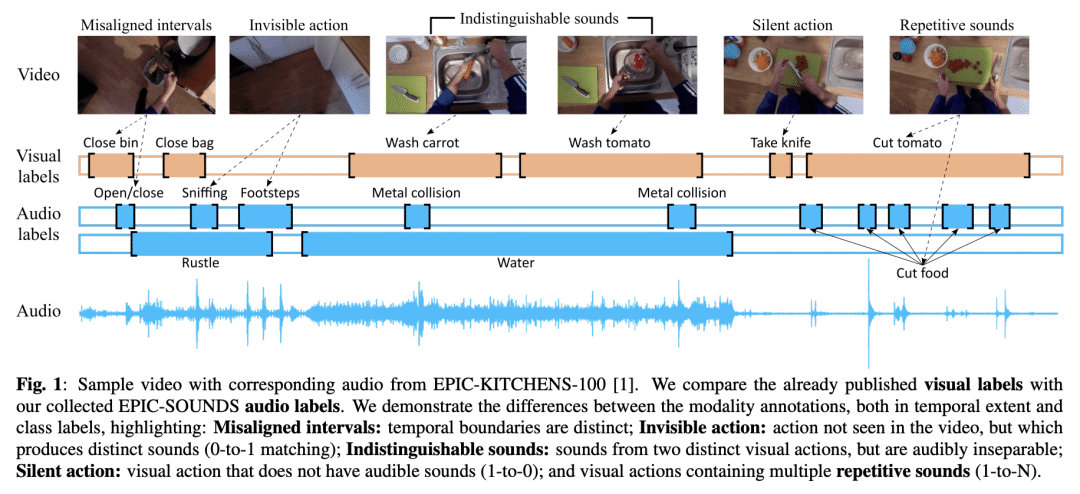

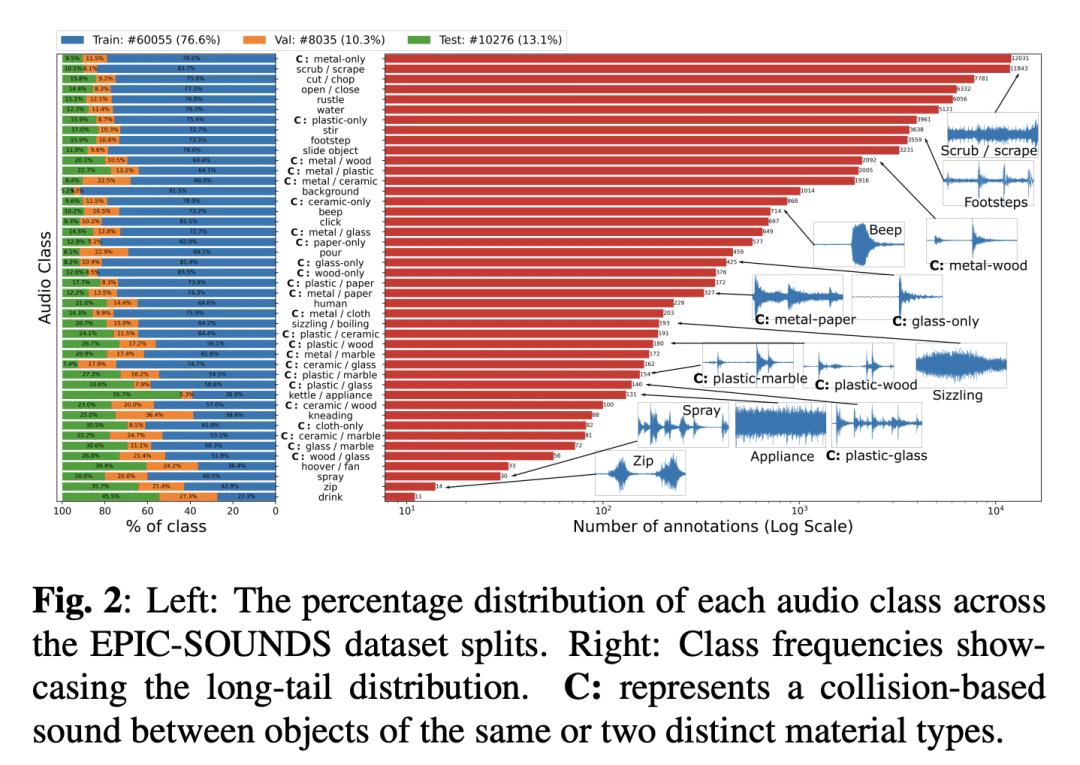
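The canonical estimator described in the abstract (clip the samples to a bounded range, then add noise to the empirical mean) can be sketched with the Laplace mechanism. The choice of Laplace noise for pure ε-DP, the replace-one notion of neighboring datasets, and the helper names are assumptions of this sketch. Replacing one of n clipped samples moves the mean by at most (hi − lo)/n, so the noise scale is (hi − lo)/(nε): a narrower range means less noise but more clipping bias.

```python
import numpy as np


def clipped_mean(samples, lo: float, hi: float) -> float:
    """Mean after clipping each sample to [lo, hi]; clipping is the source of bias."""
    return float(np.mean(np.clip(samples, lo, hi)))


def dp_mean(samples, lo: float, hi: float, epsilon: float,
            rng: np.random.Generator) -> float:
    """Pure epsilon-DP mean estimate: clipped mean plus Laplace noise.

    Sensitivity of the clipped mean under replacing one sample is (hi - lo) / n.
    """
    n = len(samples)
    sensitivity = (hi - lo) / n
    return clipped_mean(samples, lo, hi) + float(rng.laplace(0.0, sensitivity / epsilon))


data = [0.0, 1.0, 100.0]                      # true mean 101/3, about 33.67
biased_center = clipped_mean(data, 0.0, 10.0)  # 11/3, about 3.67: clipping bias
estimate = dp_mean(data, 0.0, 10.0, epsilon=1.0, rng=np.random.default_rng(0))
```

Widening `[lo, hi]` to cover the outlier removes the bias here but multiplies the noise scale by the same factor, which is the tension the paper proves cannot be avoided in general.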

[AS] Epic-Sounds: A Large-scale Dataset of Actions That Sound

J Huh, J Chalk, E Kazakos, D Damen, A Zisserman

[University of Oxford & University of Bristol]


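Key points:

- Introduces EPIC-SOUNDS, a large-scale dataset of audio annotations of actions in home kitchens;
- An annotation pipeline based on temporal labels and human free-form descriptions of audio segments;
- 78.4k categorized sound events across 44 classes plus 39.2k non-categorized segments, 117.6k segments in total, spanning 100 hours of audio.

One-sentence summary:

EPIC-SOUNDS is a large-scale audio annotation dataset capturing the sounds of everyday actions in home kitchens, with 78,366 categorized sound events and 39,187 non-categorized ones, suitable for audio/sound recognition and sound event detection.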

We introduce EPIC-SOUNDS, a large-scale dataset of audio annotations capturing temporal extents and class labels within the audio stream of the egocentric videos. We propose an annotation pipeline where annotators temporally label distinguishable audio segments and describe the action that could have caused this sound. We identify actions that can be discriminated purely from audio, through grouping these free-form descriptions of audio into classes. For actions that involve objects colliding, we collect human annotations of the materials of these objects (e.g. a glass object being placed on a wooden surface), which we verify from visual labels, discarding ambiguities. Overall, EPIC-SOUNDS includes 78.4k categorised segments of audible events and actions, distributed across 44 classes as well as 39.2k non-categorised segments. We train and evaluate two state-of-the-art audio recognition models on our dataset, highlighting the importance of audio-only labels and the limitations of current models to recognise actions that sound.

https://arxiv.org/abs/2302.00646

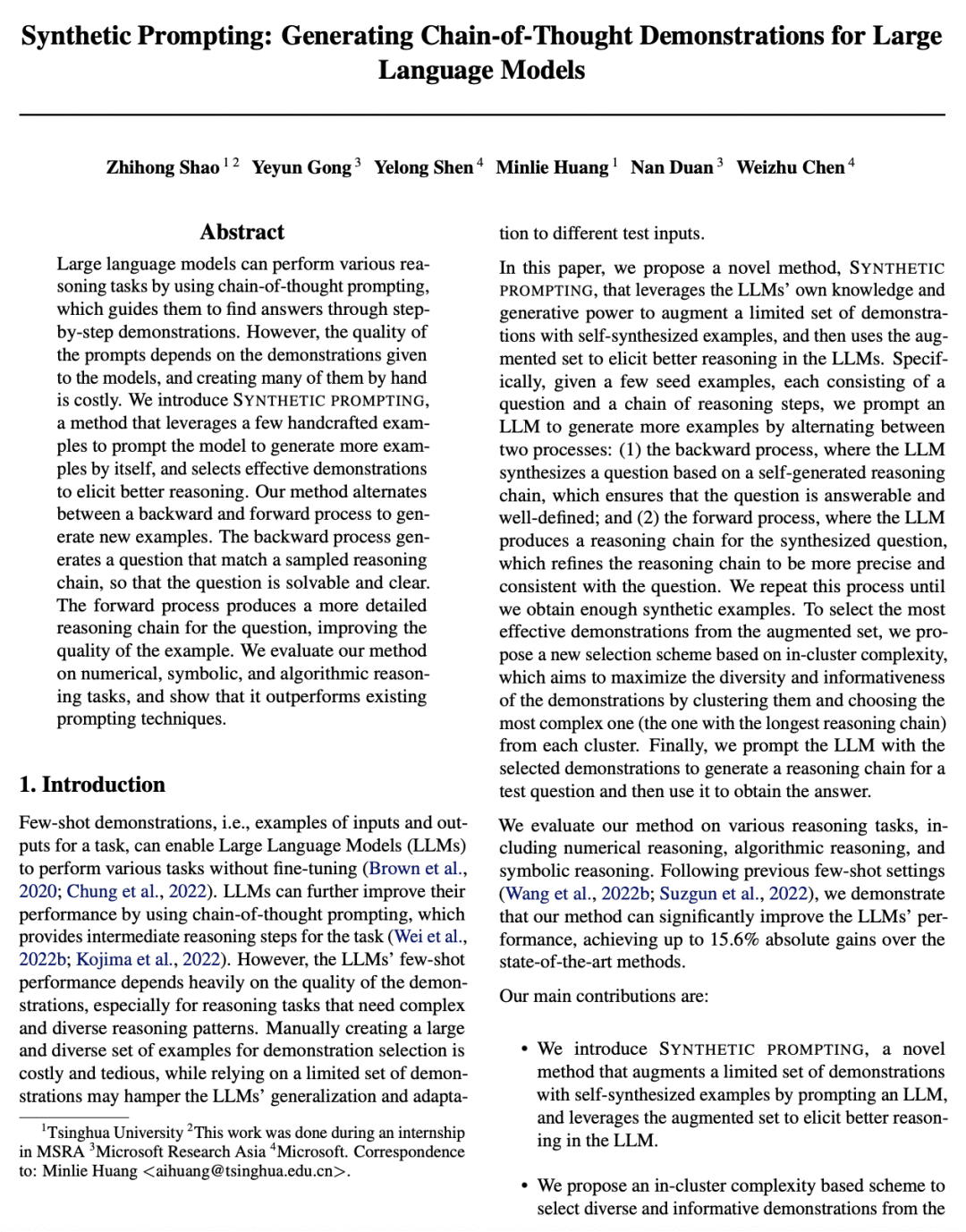

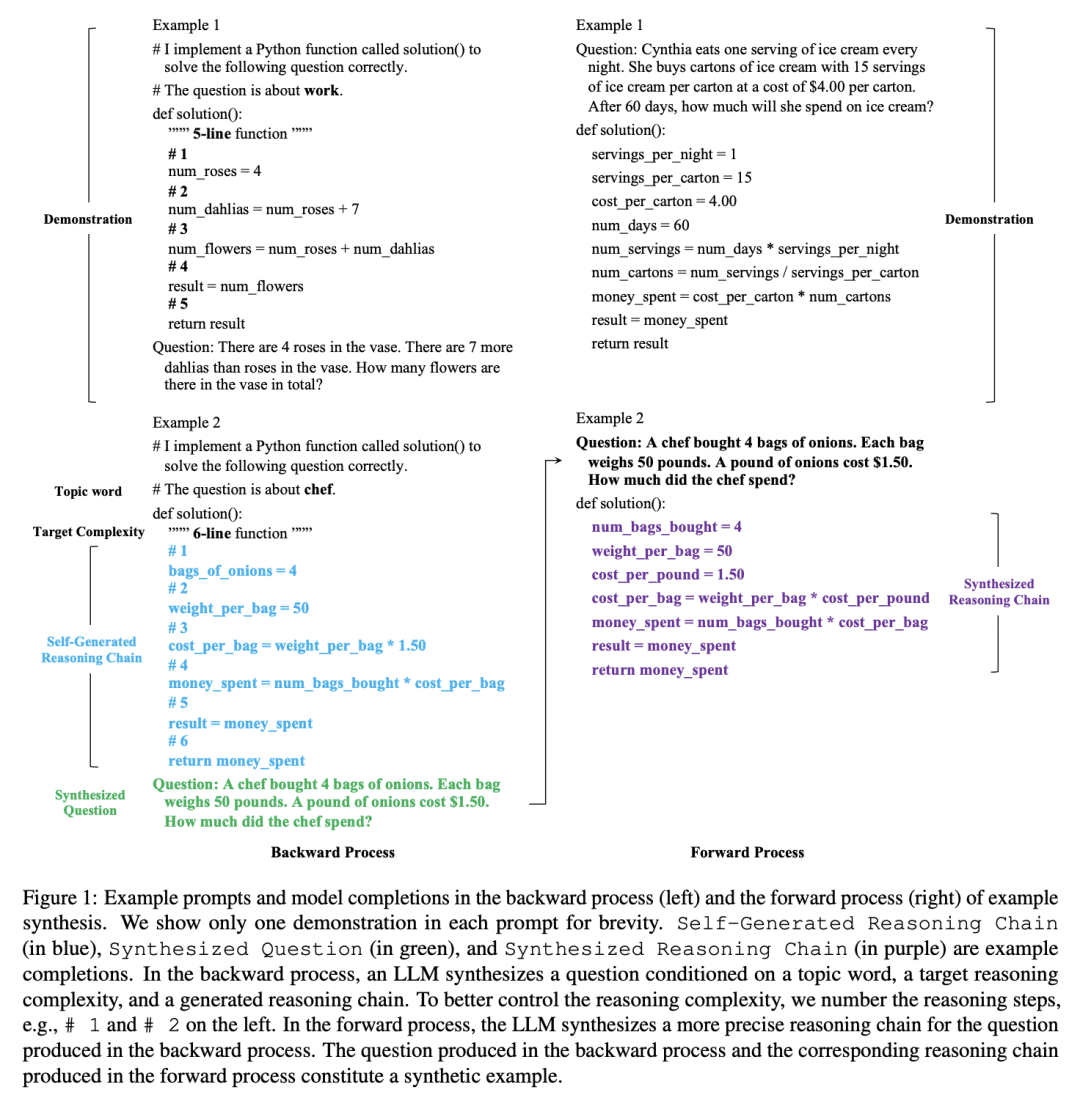

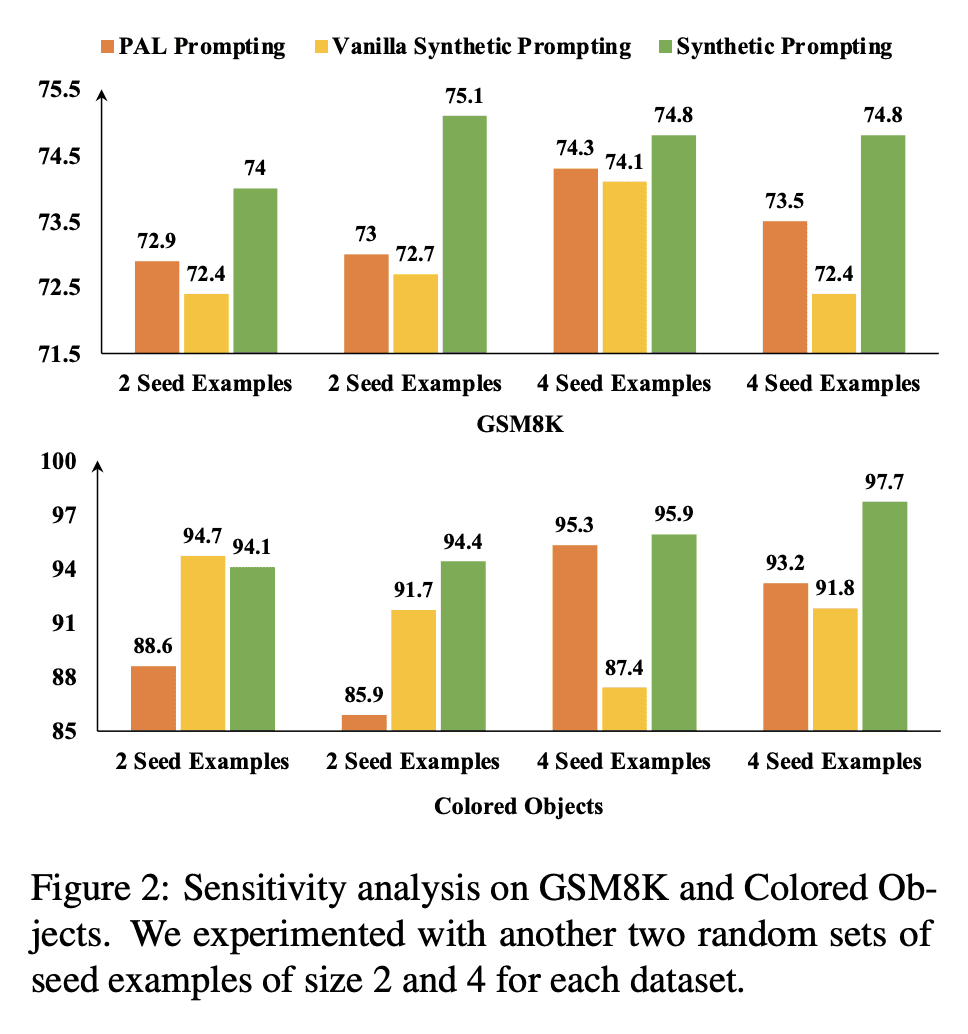

[CL] Synthetic Prompting: Generating Chain-of-Thought Demonstrations for Large Language Models

Z Shao, Y Gong, Y Shen, M Huang, N Duan, W Chen

[Microsoft Research Asia & Tsinghua University & Microsoft]


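Key points:

- Proposes Synthetic prompting, a new method that uses self-synthesized examples to improve the reasoning performance of large language models (LLMs);
- Uses the LLM not only as an in-context learner of demonstrations but also as a generator of additional examples;
- Improves reasoning performance on numerical, symbolic, and algorithmic tasks compared with existing prompting methods such as chain-of-thought prompting and PAL prompting.

One-sentence summary:

Synthetic prompting uses self-synthesized examples to improve the reasoning performance of large language models, outperforming existing prompting techniques on numerical, symbolic, and algorithmic tasks.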

Large language models can perform various reasoning tasks by using chain-of-thought prompting, which guides them to find answers through step-by-step demonstrations. However, the quality of the prompts depends on the demonstrations given to the models, and creating many of them by hand is costly. We introduce Synthetic prompting, a method that leverages a few handcrafted examples to prompt the model to generate more examples by itself, and selects effective demonstrations to elicit better reasoning. Our method alternates between a backward and forward process to generate new examples. The backward process generates a question that matches a sampled reasoning chain, so that the question is solvable and clear. The forward process produces a more detailed reasoning chain for the question, improving the quality of the example. We evaluate our method on numerical, symbolic, and algorithmic reasoning tasks, and show that it outperforms existing prompting techniques.

https://arxiv.org/abs/2302.00618

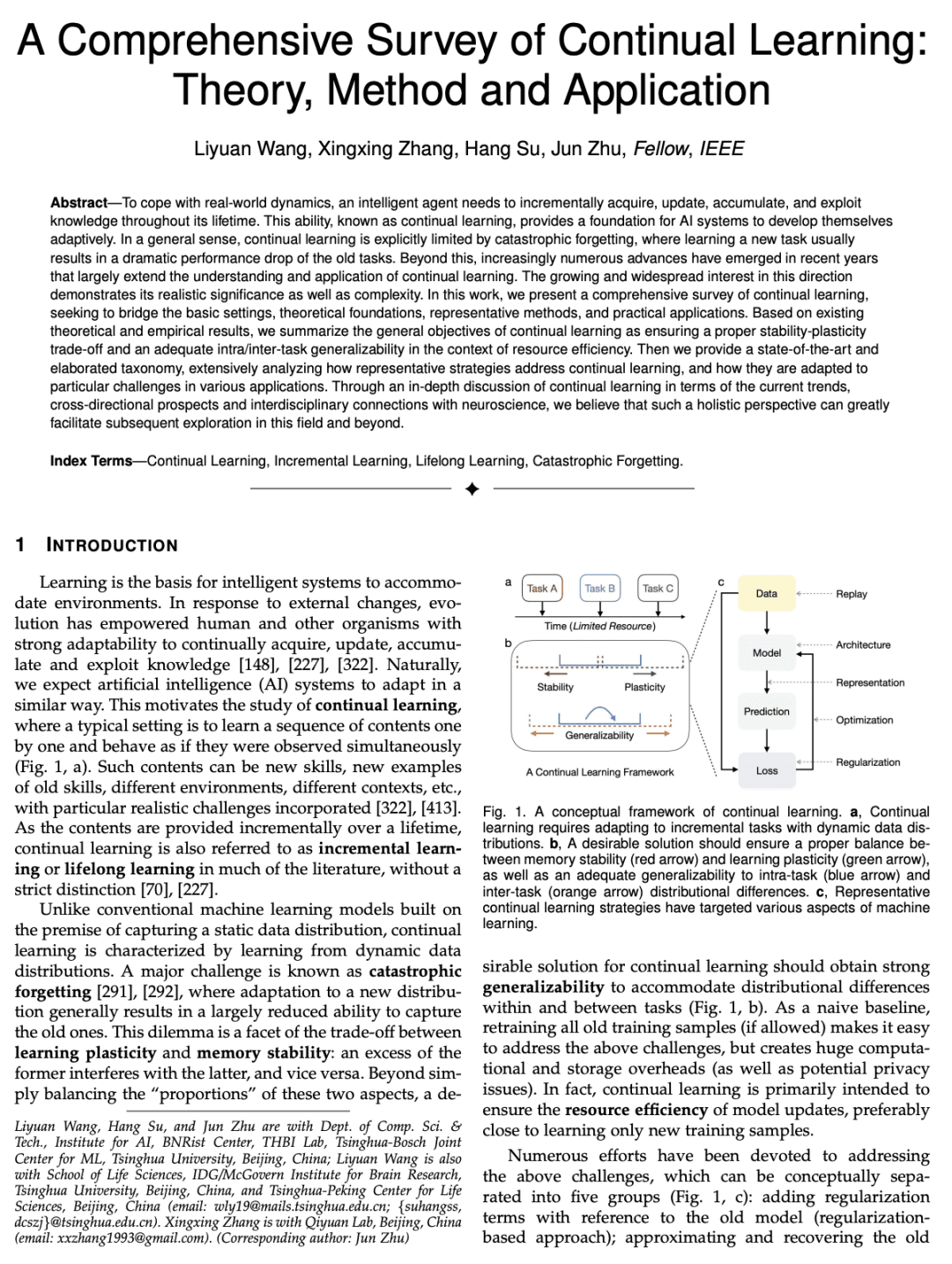

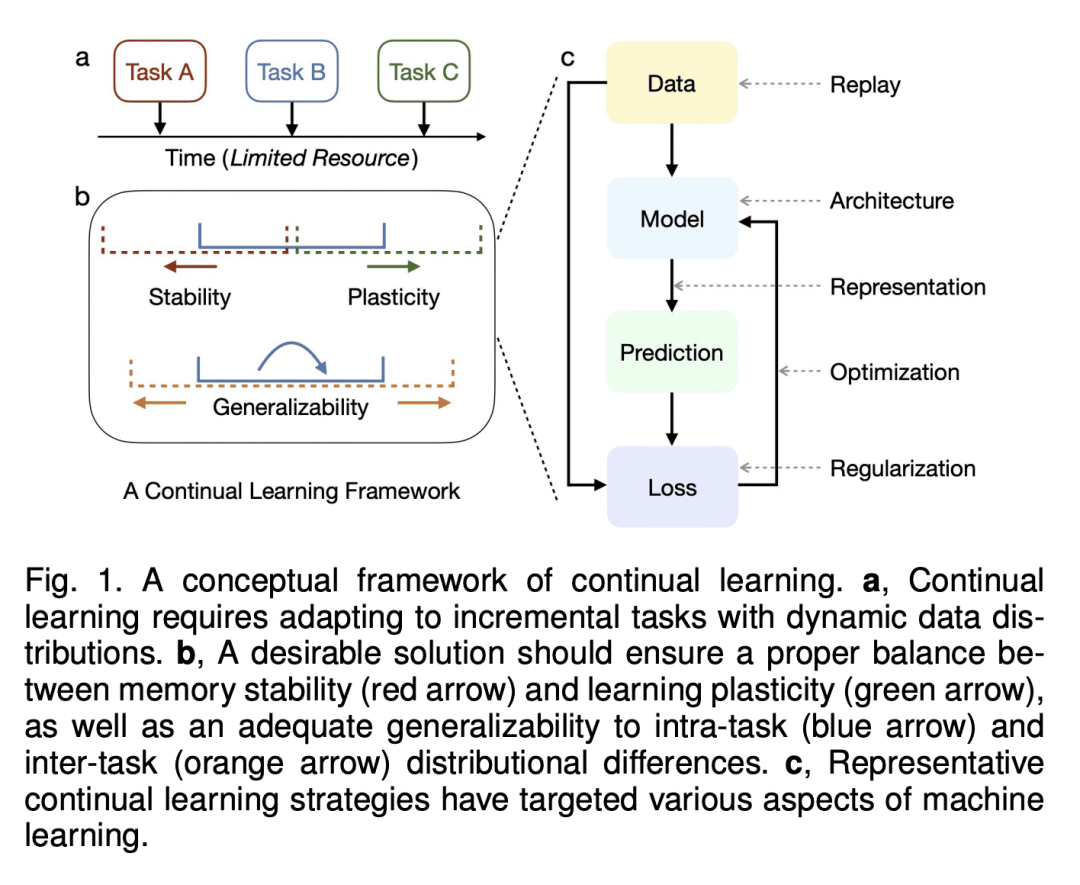
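The alternation between the backward and forward processes can be sketched as a generation loop. In this skeleton the two LLM calls are replaced by hypothetical callables (`backward`, `forward`) with assumed signatures, plus toy stand-ins that only handle addition so the loop runs end to end. Keeping an example only when the forward answer agrees with the backward one is an assumption of this sketch, and the paper's demonstration-selection step is not shown.

```python
import random
import re
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Example:
    question: str
    reasoning: str
    answer: str


# Hypothetical LLM-backed steps (signatures are assumptions):
#   backward(demos, rng) -> (question, answer): write a question matching a sampled reasoning chain
#   forward(demos, question) -> (reasoning, answer): solve the question step by step
BackwardFn = Callable[[List[Example], random.Random], Tuple[str, str]]
ForwardFn = Callable[[List[Example], str], Tuple[str, str]]


def synthesize(seed: List[Example], backward: BackwardFn, forward: ForwardFn,
               n_new: int, max_tries: int, rng: random.Random) -> List[Example]:
    pool = list(seed)
    new: List[Example] = []
    for _ in range(max_tries):
        if len(new) >= n_new:
            break
        demos = rng.sample(pool, k=min(2, len(pool)))  # prompt with a few existing examples
        question, expected = backward(demos, rng)       # backward: reasoning chain -> question
        reasoning, answer = forward(demos, question)     # forward: question -> detailed chain
        if answer == expected:                           # assumption: keep consistent examples only
            example = Example(question, reasoning, answer)
            pool.append(example)                         # new examples can seed later prompts
            new.append(example)
    return new


# Toy stand-ins for the LLM (addition only).
def toy_backward(demos: List[Example], rng: random.Random) -> Tuple[str, str]:
    a, b = rng.randint(1, 9), rng.randint(1, 9)
    return f"What is {a} + {b}?", str(a + b)


def toy_forward(demos: List[Example], question: str) -> Tuple[str, str]:
    a, b = map(int, re.findall(r"\d+", question))
    return f"{a} + {b} = {a + b}.", str(a + b)


seed = [Example("What is 1 + 1?", "1 + 1 = 2.", "2")]
generated = synthesize(seed, toy_backward, toy_forward, n_new=3, max_tries=10, rng=random.Random(0))
```

With real model calls, the backward step is what keeps synthesized questions solvable (they are built from a known reasoning chain), while the forward step supplies the more detailed chain that ends up in the demonstration.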

[LG] A Comprehensive Survey of Continual Learning: Theory, Method and Application

L Wang, X Zhang, H Su, J Zhu

[Tsinghua University]


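Key points:

- Provides a comprehensive survey of continual learning that connects advances in theory, methods, and applications;
- Summarizes the general objectives of continual learning and proposes a state-of-the-art, detailed taxonomy of representative strategies;
- Analyzes how representative strategies are adapted to the specific challenges of various applications, such as scenario complexity and task specificity.

One-sentence summary:

A comprehensive survey of continual learning covering its theory, methods, and applications, which summarizes the field's general objectives and challenges and lays out a taxonomy of strategies.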

To cope with real-world dynamics, an intelligent agent needs to incrementally acquire, update, accumulate, and exploit knowledge throughout its lifetime. This ability, known as continual learning, provides a foundation for AI systems to develop themselves adaptively. In a general sense, continual learning is explicitly limited by catastrophic forgetting, where learning a new task usually results in a dramatic performance drop of the old tasks. Beyond this, increasingly numerous advances have emerged in recent years that largely extend the understanding and application of continual learning. The growing and widespread interest in this direction demonstrates its realistic significance as well as complexity. In this work, we present a comprehensive survey of continual learning, seeking to bridge the basic settings, theoretical foundations, representative methods, and practical applications. Based on existing theoretical and empirical results, we summarize the general objectives of continual learning as ensuring a proper stability-plasticity trade-off and an adequate intra/inter-task generalizability in the context of resource efficiency. Then we provide a state-of-the-art and elaborated taxonomy, extensively analyzing how representative strategies address continual learning, and how they are adapted to particular challenges in various applications. Through an in-depth discussion of continual learning in terms of the current trends, cross-directional prospects and interdisciplinary connections with neuroscience, we believe that such a holistic perspective can greatly facilitate subsequent exploration in this field and beyond.

https://arxiv.org/abs/2302.00487
